Research & Teaching

Initial Development and Validation of the Biology Teaching Assistant Role Identity Questionnaire (BTARIQ)

Journal of College Science Teaching—May/June 2023 (Volume 52, Issue 5)

By Amy E. Kulesza and Dorinda J. Gallant

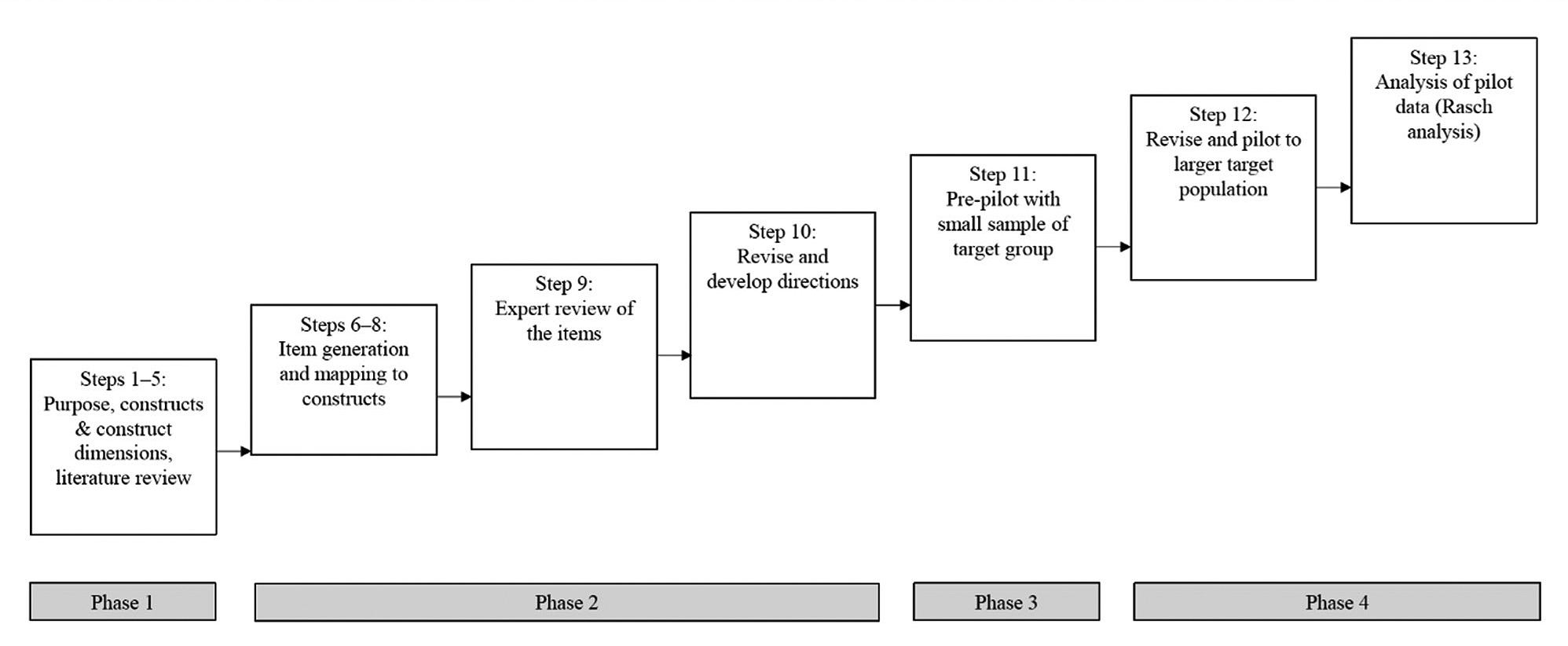

Graduate teaching assistants (TAs) may identify with multiple roles during their introductory biology teaching experiences. This study developed an instrument that measures TA role identity in introductory biology. Initial development and validation of the Biology Teaching Assistant Role Identity Questionnaire (BTARIQ) occurred in four phases. The first phase specified the instrument’s purpose and described the conceptual definitions. The second focused on generating items mapped to constructs and dimensions grounded in the literature; an expert review panel then validated the instrument content for the constructs, and a draft instrument was developed based on this review. The third phase piloted the draft instrument with a small group from the target population, and the instrument was further revised based on the results. In the final phase, an exploratory Rasch analysis of field test data examined dimensionality, model fit, person and item separation and reliability, and rating scale category functioning. The findings provide some evidence to support the interpretation and use of BTARIQ scores.

Graduate teaching assistants (TAs) are responsible for educating large numbers of undergraduate science students. Sundberg et al. (2005) report that TAs lead 91% of science lab sections at research-intensive universities. While instructing undergraduates in the laboratory, biology TAs may experience multiple role identities (e.g., teacher, researcher, mentor). As teachers, TAs help students achieve learning outcomes and practice the skills they need to succeed in introductory biology. As researchers, TAs help students conduct scientific research by troubleshooting experimental issues and providing expertise. As mentors, TAs guide undergraduates to overcome challenges in the laboratory, deal with failure, and think scientifically about problems. TAs take on these professional roles while they are themselves graduate students, but it is unclear whether they identify with these roles in the context of the undergraduate laboratory classroom. We developed the Biology Teaching Assistant Role Identity Questionnaire (BTARIQ) to measure graduate TAs’ role identity.

Building on Kajfez and McNair’s (2014) work, which developed an instrument focusing on the roles of engineering graduate students as teachers, researchers, and lifelong learners, the BTARIQ is intended to help identify the professional roles with which biology TAs associate. Understanding the extent to which biology TAs identify as teachers, researchers, and mentors is important for three groups: faculty who advise, mentor, and supervise graduate students; TAs who aspire to an academic career as faculty members at a college or university; and undergraduate students whose engagement with TAs may affect their overall experience in a laboratory setting. Moreover, understanding how biology TAs see themselves as teachers, mentors, and researchers can shape conversations between graduate TAs and their advisors about career aspirations and TAs’ engagement with undergraduate students. Such conversations can benefit biology TAs by raising self-awareness and signaling professional development opportunities that support productive careers. The BTARIQ provides a way to examine the roles of current biology TAs.

Drawing on both social identity theory and identity theory, Ashforth (2001) defines a role identity as a persona that someone enacts. In the context of graduate school, students may adopt a variety of roles in their social environment. In both theories, the self is considered adaptive and can change as a person takes on various social categories. Identities are formed through this process of self-categorization (Stets & Burke, 2000). In addition to self-categorizing, individuals compare themselves with others, a process called social comparison (Stets & Burke, 2000) that helps define roles in a particular context (Ashforth, 2001). The main tenet of identity theory is that an individual occupies a role, and that role carries expectations and standards that influence behavior (Stets & Burke, 2000). During graduate school, students may adopt various professional roles, such as teacher, researcher, and mentor, all of which may shift across different experiences. For example, Rivera (2018) found that novice TAs often view their teaching role as similar to that of a lecturer but, after an intensive summer training, begin to see themselves more as facilitators in the classroom.

In the context of this study, a professional role is a persona that a graduate student enacts as a TA and that influences the TA’s behavior. We limited role identities to three dimensions that are prominent roles biology graduate TAs may adopt in the teaching laboratory: teacher, researcher, and mentor. Although biology graduate TAs certainly hold other roles, we chose roles that biology faculty members typically adopt in academia and with which graduate students may identify during their training; TAs’ teaching experiences may also foster these roles.

Although there has been extensive work on role identity in higher education, many studies focus on faculty (Komba et al., 2013) or undergraduates (Gilardi & Lozza, 2009; Hunter et al., 2006). Of those centering on graduate students, most focus on teacher identity (Gilmore et al., 2009; Marbach-Ad et al., 2014) or on constructs other than identity, such as self-efficacy (DeChenne et al., 2012). Additionally, previous studies have relied on small case studies or qualitative data (Sweitzer, 2009; Volkmann & Zgagacz, 2004). This study aimed to address this gap in the literature on graduate students’ role identity by developing and validating a self-report measure of graduate students’ identities as teacher, researcher, and mentor.

BTARIQ development

Across four phases (Figure 1), we followed the first 13 (of 16) steps outlined by McCoach et al. (2013) to develop and initially validate the BTARIQ. The four phases were (i) specification of the instrument’s purpose and description of conceptual definitions and construct dimensions (Steps 1–5); (ii) item generation and content validation using experts (Steps 6–10); (iii) a pilot of the instrument with TAs (Step 11); and (iv) a field test and Rasch analysis (Steps 12–13). Each phase is described in detail in the following sections. The study was determined to be exempt from Institutional Review Board review at The Ohio State University.

Figure 1. Process of initial BTARIQ development and validation.

Note. Based on Steps 1–13 in McCoach et al. (2013) for developing an instrument in the affective domain.

Phase 1: Conceptual definitions

Teacher, researcher, and mentor are commonplace words that are often not conceptually defined in the literature. Palmer et al. (2015) attribute this gap, in the case of mentor, to the word’s use in a variety of contexts. The same may be true of researcher and teacher.

Teacher

The literature discusses what successful teachers do; however, it is rare for authors to agree on a definition of a teacher. At its core, teaching is sharing knowledge of the material with students. Parker et al. (2009) define a TA as someone who helps individuals or groups of students with their learning. Nyquist and Wulff (1996, p. 2) define TAs as “graduate students who have instructional responsibilities in which they interact with undergraduates.”

Kalish et al. (2012) developed a set of competencies in three areas (foundational, postsecondary, and pedagogical) to help TAs become successful educators in their fields of study. TAs must bring foundational knowledge of their discipline to the classroom, meaning that they have skills and content knowledge that their students do not. TAs should also understand how their work fits into the context of higher education and know the education standards of their field. Finally, teachers read and discuss current research on how people learn and use evidence-based practices in their teaching (Kalish et al., 2012). Based on these parameters, a teacher in the context of this study is a graduate student who helps undergraduates learn biology with purpose, thought, and reflection.

Researcher

A researcher engages in the scientific process to answer questions about the world. Auchincloss et al. (2014) suggest that science researchers engage in five activities when conducting scientific experiments: use of scientific practices, discovery, relevance, collaboration, and iteration. Based on these components of scientific practice, a researcher in the context of this study is a graduate student who engages in the process of science, including, but not limited to, using scientific practices, participating in discovery, asking relevant questions, collaborating with others, and revising and repeating their work.

Mentor

A mentor provides knowledge, advice, and support. Mentors are often role models from whom students learn through observation, discussion, and demonstration. Schunk (2012, p. 159) defines mentoring as a formal or informal way of “teaching of skills and strategies to students or other professionals within advising and training contexts.” Schunk asserts that an ideal mentorship results in learning for both mentor and mentee and adds that mentoring is “a developmental relationship where more-experienced professors share their expertise with and invest time in less-experienced professors or students to nurture their achievement and self-efficacy” (p. 159). This is similar to Pfund’s (2016) definition of mentoring as a collaborative relationship with the goal of helping mentees achieve career competencies. Adapting these definitions, a mentor in the context of this study is a graduate student who shares their expertise and experiences with their undergraduate students to help develop those students’ skills and self-efficacy.

Phase 2: Item generation and content validation

We generated 46 initial items for the BTARIQ from three primary sources. As previously described, roles are personas that someone enacts (Ashforth, 2001) and that therefore influence their behavior (Stets & Burke, 2000). Consequently, we operationalized our roles as behavioral items. The teacher role was operationalized based on the graduate student competencies presented by Kalish et al. (2012). These competencies were modified into action items to represent potential TA behaviors. The researcher role was operationalized based on the five activities of scientists (Auchincloss et al., 2014). The mentor role was operationalized based on the work of Palmer et al. (2015), who compiled eight common components of mentoring definitions in the literature. Additional items for each role were added based on Kajfez and McNair (2014), resulting in 15 items in the teacher dimension, 18 items in the researcher dimension, and 13 items in the mentor dimension.

To establish content validity, we invited experts to conduct a judgmental review of the items using a worksheet modified from the recommendations and example in McCoach et al. (2013). We invited seven experts in biology education research and TA professional development to serve on this review panel, and five responded. These five experts hold degrees in science and science education and have previously conducted TA professional development in some form.

During this content validation process, we asked the experts to categorize items into one of the three dimensions to provide corroborative evidence for our item placement into the different dimensions. Qualitative and quantitative data were collected simultaneously due to a short timeline for feedback from participants. The experts were first asked to identify which of the three dimensions an item represented, how confident they were in their choice, and how relevant the item was to the construct. Reviewers then responded to a series of open-ended questions to establish that items were clear and understandable to participants and appropriate for TAs.

We analyzed the experts’ feedback based on McCoach et al.’s (2013) recommendations. First, we evaluated how well the experts matched each item to its intended dimension. Match percentages for individual items ranged from 0% (no experts) to 100% (all five experts). Twenty-five items were matched to the intended dimension by all five experts (Table 1). One of these items was eliminated from the instrument because of its specificity. For the remaining 24 items with a 100% expert match, the experts’ average confidence in matching each item to its dimension ranged from 2.6 to 4.0 on a 4-point scale, and the average relevance of each item to the construct ranged from 2.6 to 3.0 on a 3-point scale, which met the criteria suggested by McCoach et al. (2013). Individual experts matched between 60.8% (28 items) and 91.3% (42 items) of the 46 total items to their intended dimensions.
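The match and rating summaries described above are simple arithmetic. The following Python sketch is a hypothetical illustration of how they could be computed; it assumes each expert’s response to an item is stored as a (chosen dimension, confidence on a 1–4 scale, relevance on a 1–3 scale) tuple. The function names and the ratings shown are invented for illustration and are not data or tools from this study.

```python
from statistics import mean

# Hypothetical data layout (an assumption, not the study's worksheet):
# one (chosen_dimension, confidence_1_to_4, relevance_1_to_3) tuple per expert.


def item_summary(intended, ratings):
    """Summarize the expert review of a single item."""
    n_match = sum(1 for dim, _, _ in ratings if dim == intended)
    return {
        # Percentage of experts who placed the item in its intended dimension.
        "match_pct": 100 * n_match / len(ratings),
        # Mean confidence (1-4) and mean relevance (1-3) across experts.
        "mean_confidence": mean(conf for _, conf, _ in ratings),
        "mean_relevance": mean(rel for _, _, rel in ratings),
    }


def expert_match_rate(intended_dims, expert_choices):
    """Percentage of all items that one expert placed in the intended dimension."""
    hits = sum(1 for want, got in zip(intended_dims, expert_choices) if want == got)
    return 100 * hits / len(intended_dims)


# Invented ratings from five experts for one teacher item.
ratings = [("teacher", 4, 3), ("teacher", 3, 3), ("teacher", 4, 3),
           ("teacher", 2, 2), ("teacher", 4, 3)]
summary = item_summary("teacher", ratings)
print(summary)  # match_pct 100.0, mean_confidence 3.4, mean_relevance 2.8
```

An item like this one, matched by all five experts, would then be screened on its mean confidence and relevance; items below a 100% match would go to the qualitative review of open-ended comments.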

We used the experts’ open-ended comments to retain, modify, or eliminate the items that did not reach a 100% match. Two items that no expert matched to the intended dimension were eliminated. Based on the feedback, we removed five additional items and modified 14 items. Expert reviewers identified two items as double-barreled, so each was split into two items, and one new item was added based on reviewer suggestions. At the end of this process, the pilot questionnaire contained 42 items, 14 for each dimension, to ensure adequate content representation for each dimension.

Phase 3: Pilot

To this revised 42-item instrument, we added demographic questions about features such as the type of lab being taught, the degree the TA was pursuing, and the TA’s current year in their program. To reduce the potential for questionnaire fatigue, we grouped the items by dimension (i.e., teacher, researcher, mentor) into three separate sections. We also included instructions for completion and a brief description of the instrument.

We chose a frequency rating scale because we intended to measure an affective construct and to distinguish variation among TA identities. We used five response choices (“always,” “very often,” “sometimes,” “rarely,” and “never”), selected because the items describe behaviors: if a TA identifies with a particular role as defined, the TA should enact the behaviors described in that role’s items. McCoach et al. (2013) note that a 5-point scale has been shown to be reliable and to work well when moderate responses (e.g., “sometimes”) are expected from participants. Given their age and educational background, the TA respondents could most likely have responded reliably with more than five options. However, five options offered a reasonable balance between the number of response options and the number of items, helping to avoid questionnaire fatigue.

We then solicited feedback from a few members of the target population. We asked TAs currently teaching introductory biology courses at a large midwestern university to complete the draft BTARIQ and provide at least three constructive criticisms of the questionnaire. From a pool of approximately 50 TAs, 12 responded. Because the current study focuses on graduate students, only eight of the 12 respondents were retained for analysis (five doctoral and three master of science students). These TAs taught a mixture of biology courses for majors and nonmajors.

Pilot feedback from the eight graduate TAs centered on three main themes: questionnaire instructions, scale, and item wording. Positive feedback indicated that the three sections made it easier to compartmentalize the concepts and answer the questions more accurately. Based on reviewers’ suggestions, we revised the instrument instructions. We changed the demographic questions to include other types of TAs (such as undergraduate and contract TAs), which in turn required modifying the “year in program” question to accommodate these TA types, including adding a “not applicable” option. To further identify types of lab experiences, we added an open-ended question asking TAs to describe the course in which they were a TA.

A consistent request was for the roles, especially the mentor role, to be defined to aid responses to the items. TAs found it difficult to distinguish the teacher and mentor roles and suggested that definitions would help them better understand the items, so we included these definitions on each page of the revised questionnaire. Other TAs had difficulty choosing a response from the scale for some items. Because changing the scale could change the flow of the questionnaire as well as the analysis, we instead modified those items to better fit the response scale. Some TAs were unfamiliar with terminology related to educational standards and pedagogical recommendations, so we revised the instrument to include examples. Finally, because specific phrasing in some items confused TAs, we modified that phrasing to clarify the issues identified.

Phase 4: Field test and Rasch analysis

TAs were recruited for the field test through two email lists of graduate students in the life sciences and through specific colleague contacts nationwide. The instrument (see Online Appendix A) was administered through Qualtrics. We performed an exploratory Rasch analysis of the field test data using the Andrich rating scale model (Andrich, 1978) in WINSTEPS® 4.0.1.
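For readers unfamiliar with the model, Andrich’s rating scale model expresses the log-odds that person n responds in category k rather than category k − 1 on item i as θn − δi − τk, where θn is the person measure, δi is the item difficulty, and τk is the k-th threshold, which is shared by all items on a scale. The Python sketch below only evaluates category probabilities for given parameter values; it does not estimate the parameters, as WINSTEPS does. The threshold values are hypothetical, and scoring the five frequency categories 0 (“never”) through 4 (“always”) is an assumption made for illustration.

```python
import math


def rsm_probabilities(theta, delta, taus):
    """Category probabilities under the Andrich rating scale model.

    theta: person measure (logits); delta: item difficulty (logits);
    taus: thresholds tau_1..tau_M shared by every item on the scale.
    Returns P(X = 0), ..., P(X = M).
    """
    # Cumulative sums of (theta - delta - tau_j); category 0 has an empty sum.
    numerators = [0.0]
    for tau in taus:
        numerators.append(numerators[-1] + (theta - delta - tau))
    # Subtract the largest value before exponentiating to avoid overflow.
    top = max(numerators)
    weights = [math.exp(v - top) for v in numerators]
    total = sum(weights)
    return [w / total for w in weights]


def expected_score(theta, delta, taus):
    """Model-expected rating: sum of k * P(X = k)."""
    probs = rsm_probabilities(theta, delta, taus)
    return sum(k * p for k, p in enumerate(probs))


# Hypothetical thresholds for a 5-category scale (0 = "never" ... 4 = "always").
taus = [-2.0, -0.5, 0.5, 2.0]
probs = rsm_probabilities(theta=0.0, delta=0.0, taus=taus)
print([round(p, 3) for p in probs])
print(round(expected_score(0.0, 0.0, taus), 3))  # 2.0 for these symmetric thresholds
```

Raising the person measure relative to the item difficulty shifts probability toward higher categories, which is why the average measure is expected to rise from one category to the next when a rating scale functions as intended.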

For each of the three scales, we assessed dimensionality, model fit, person and item separation and reliability, and rating scale category functioning. To determine how well the data fit the model, we examined the infit and outfit mean square (MNSQ) statistics for each item on each scale. For rating scales, infit and outfit MNSQ values between 0.6 and 1.4 suggest a reasonable fit of the data to the model (Bond & Fox, 2001; Wright & Linacre, 1994). However, given the exploratory nature of our analysis, we did not remove potentially misfitting items.
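The infit and outfit statistics mentioned above are both averages of squared residuals between observed ratings and the ratings the model expects. The Python sketch below shows the standard computations for one item, assuming the model-expected ratings and their variances have already been obtained from an estimated Rasch model (for example, by software such as WINSTEPS). The observed ratings, expectations, and variances are invented for illustration, and the function names are hypothetical.

```python
def item_fit(observed, expected, variance):
    """Return (infit MNSQ, outfit MNSQ) for one item.

    observed: each person's rating on the item.
    expected, variance: model-expected rating and its model variance for
    each person, assumed to come from an already estimated Rasch model.
    """
    sq_resid = [(x - e) ** 2 for x, e in zip(observed, expected)]
    # Outfit: unweighted mean of squared standardized residuals, so it is
    # sensitive to surprising responses from persons far from the item.
    outfit = sum(r / w for r, w in zip(sq_resid, variance)) / len(observed)
    # Infit: information-weighted; squared residuals summed over summed variances.
    infit = sum(sq_resid) / sum(variance)
    return infit, outfit


def flag_misfit(infit, outfit, low=0.6, high=1.4):
    """Flag an item when either statistic falls outside the 0.6-1.4 range."""
    return not (low <= infit <= high and low <= outfit <= high)


# Invented values for four respondents on one item.
observed = [3, 2, 4, 1]
expected = [2.5, 2.0, 3.0, 2.0]
variance = [1.0, 0.8, 0.9, 1.0]
infit, outfit = item_fit(observed, expected, variance)
print(round(infit, 3), round(outfit, 3), flag_misfit(infit, outfit))  # 0.608 0.59 True
```

In this invented case the infit value falls inside the range while the outfit value falls just below 0.6, so the item would be flagged; consistent with the exploratory approach described above, a flag is a prompt for review rather than an automatic removal.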

We removed incomplete responses and responses from participants not seeking graduate degrees (n = 18) from the field test sample, leaving 31 cases for analysis. Most respondents were female (71%), sought a PhD (61.3%), held a traditional teaching assignment (83.9%), and were based at doctoral research-intensive universities (71%); the largest group (40.7%) was in the second year of their program. Initial principal component analysis (PCA) of the standardized residuals for the 14 items on each role identity scale suggested multidimensionality (i.e., measuring more than one construct), with unexplained variance in the first contrast > 2 eigenvalues (Table 2).

Rasch measures explained between 41% and 55% of the raw variance across the scales. Using the standardized residual plot and the items’ standardized residual loadings, we identified the dimensions that emerged and used those subdimensions in subsequent analyses (Tables 3–5). Subsequent PCA of the standardized residuals for the Teacher, Researcher, and Mentor Role Identity Subscales indicated unidimensionality (i.e., raw unexplained variance in the first contrast < 2 eigenvalues; Table 6).
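The first-contrast criterion used above can be illustrated with a small computation: correlate the items’ standardized residuals and find the largest eigenvalue of that correlation matrix, which is expressed in eigenvalue (item) units. The Python sketch below is a simplified, standard-library-only illustration using power iteration; it assumes the standardized residuals (one non-constant list per item, one value per person) are already available, and it is not the exact procedure WINSTEPS uses. The residual values are invented.

```python
import math


def correlation_matrix(columns):
    """Pearson correlations between items' standardized-residual columns."""
    centered = []
    for col in columns:
        m = sum(col) / len(col)
        centered.append([v - m for v in col])
    norms = [math.sqrt(sum(v * v for v in c)) for c in centered]
    k = len(columns)
    return [[sum(a * b for a, b in zip(centered[i], centered[j])) / (norms[i] * norms[j])
             for j in range(k)] for i in range(k)]


def largest_eigenvalue(matrix, iterations=200):
    """Largest eigenvalue of a symmetric positive semidefinite matrix by power iteration."""
    k = len(matrix)
    # Unequal starting values avoid being orthogonal to contrast-like
    # eigenvectors with mixed positive and negative loadings.
    vec = [1.0 + 0.1 * i for i in range(k)]
    for _ in range(iterations):
        nxt = [sum(matrix[i][j] * vec[j] for j in range(k)) for i in range(k)]
        norm = math.sqrt(sum(v * v for v in nxt))
        vec = [v / norm for v in nxt]
    product = [sum(matrix[i][j] * vec[j] for j in range(k)) for i in range(k)]
    return sum(a * b for a, b in zip(vec, product))  # Rayleigh quotient


# Invented residuals: items 1-3 share one residual pattern, item 4 differs.
a = [1.0, -1.0, 1.0, -1.0]
b = [1.0, 1.0, -1.0, -1.0]
first_contrast = largest_eigenvalue(correlation_matrix([a, a, a, b]))
print(round(first_contrast, 3), first_contrast > 2)  # 3.0 True
```

Here three items share residual variance that the Rasch dimension does not explain, producing a first-contrast eigenvalue of about 3, above the threshold of 2; on the field test scales, results of this kind were what prompted the split into subscales.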

We flagged six items across the instrument as potentially misfitting the model based on their infit and outfit MNSQ statistics (Table 7). (See Online Appendix B for full item-fit statistics for each subscale.) We assessed person and item separation and reliability to examine the extent to which scores were reproducible. Person separation and reliability results suggest that three of the seven subscales (Researcher Role Identity Subscale 2 [RRIS2], Researcher Role Identity Subscale 3 [RRIS3], and Mentor Role Identity Subscale 2 [MRIS2]) may be sensitive enough to distinguish between high and low performers on the constructs (Table 6). Six of the seven subscales had reliability indices > 0.70, and five of those six had reliability coefficients > 0.80. Item separation and reliability results suggest that only for RRIS2 may the sample be large enough to confirm the item difficulty hierarchy (i.e., construct validity; item separation > 3 and item reliability > 0.9).
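Separation and reliability, as used above, compare the spread of the estimated measures with their measurement error. The Python sketch below follows the standard Rasch definitions: error variance is the mean squared standard error, true variance is observed variance minus error variance, separation G is the true standard deviation divided by the root mean square error, and reliability is true variance over observed variance (equivalently G²/(1 + G²)). The measures and standard errors are invented; using the population variance is an assumption, since conventions differ; and the person criteria (separation ≥ 2 and reliability ≥ 0.8) are a commonly cited rule of thumb assumed here, whereas the item criteria are the ones stated in the text.

```python
import math
from statistics import pvariance


def separation_reliability(measures, standard_errors):
    """Rasch separation index and reliability for persons or items.

    measures: estimated measures in logits; standard_errors: their (positive) SEs.
    """
    observed_var = pvariance(measures)  # population variance (an assumption)
    error_var = sum(se ** 2 for se in standard_errors) / len(standard_errors)
    true_var = max(observed_var - error_var, 0.0)
    separation = math.sqrt(true_var) / math.sqrt(error_var)
    reliability = true_var / observed_var if observed_var > 0 else 0.0
    return separation, reliability


def distinguishes_high_low(separation, reliability):
    # Rule of thumb assumed here: person separation >= 2 and reliability >= 0.8.
    return separation >= 2 and reliability >= 0.8


def confirms_item_hierarchy(separation, reliability):
    # Criteria stated in the text: item separation > 3 and item reliability > 0.9.
    return separation > 3 and reliability > 0.9


# Invented person measures and standard errors for five respondents.
g, r = separation_reliability([-2.0, -1.0, 0.0, 1.0, 2.0], [0.5] * 5)
print(round(g, 2), round(r, 3), distinguishes_high_low(g, r))  # 2.65 0.875 True
```

The same function applies to items by passing item difficulties and their standard errors; small samples inflate item standard errors, which lowers item separation and reliability.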

We assessed rating scale category functioning to determine the extent to which the rating scale functioned as intended (Bond & Fox, 2001). We examined category frequencies to determine the distribution of responses across categories, as well as the average measure for each category, to confirm that the average measure increased with each category, indicating higher ability on the construct at higher response categories (Bond & Fox, 2001). Guidelines indicate that thresholds should increase by at least 1.4 logits but no more than 5 logits (Bond & Fox, 2001). The rating scale diagnostics consisted of examining category frequencies, measures, thresholds, and category-fit statistics (Table 8). During the initial analyses of the Teacher Role Identity Scale, we found that the rating category “rarely” was not functioning well. Hence, for the Teacher Role Identity Subscales, we collapsed the rating categories “never” and “rarely.”
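Collapsing categories, as done for the Teacher Role Identity Subscales, amounts to recoding responses before reanalysis. The Python sketch below assumes the five frequency categories are scored 0 (“never”) through 4 (“always”), which the text does not specify; after “never” and “rarely” are collapsed, four categories remain. The responses are invented, and category frequencies are tallied because sparsely used categories are one signal that a category is not functioning well.

```python
from collections import Counter

# Assumed 0-4 scoring of the original five categories.
ORIGINAL = {"never": 0, "rarely": 1, "sometimes": 2, "very often": 3, "always": 4}
# Collapsed scoring: "never" and "rarely" share the lowest score.
COLLAPSED = {"never": 0, "rarely": 0, "sometimes": 1, "very often": 2, "always": 3}


def score(responses, scheme):
    """Convert response labels to integer category scores."""
    return [scheme[r] for r in responses]


def category_frequencies(scores, n_categories):
    """Count how often each category 0..n_categories-1 was used."""
    counts = Counter(scores)
    return [counts[k] for k in range(n_categories)]


# Invented responses to one teacher item.
responses = ["always", "very often", "rarely", "sometimes",
             "always", "very often", "never", "very often"]
print(category_frequencies(score(responses, ORIGINAL), 5))   # [1, 1, 1, 3, 2]
print(category_frequencies(score(responses, COLLAPSED), 4))  # [2, 1, 3, 2]
```

After recoding, the model is re-estimated with one fewer threshold, and the category diagnostics are checked again on the collapsed scale.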

For all role identity subscales, the average measures increased with each category value (e.g., from -0.81 logits to 2.66 logits for Teacher Role Identity Subscale 1 [TRIS1]). This suggests that as the frequency with which TAs viewed their professional role as teacher, researcher, or mentor in the biology course they taught increased, so did the average ability (Bond & Fox, 2001). In addition, category fit statistics were less than 2 on all subscales, which is considered acceptable (Bond & Fox, 2001).

The thresholds, which are the estimated difficulties of observing one category rather than the adjacent category, differed across subscales. For TRIS1 and RRIS2, the thresholds increased by at least 1.4 logits between all rating scale categories, suggesting that the rating scales functioned as intended. Conversely, for Teacher Role Identity Subscale 2 (TRIS2), Researcher Role Identity Subscale 1 (RRIS1), RRIS3, Mentor Role Identity Subscale 1 (MRIS1), and MRIS2, the thresholds did not increase by at least 1.4 logits between all categories. The categories did not function adequately between “very often” and “always” on TRIS2; between “sometimes” and “very often” and between “very often” and “always” on RRIS1; between “rarely” and “sometimes” on RRIS3; between “rarely” and “sometimes” and between “very often” and “always” on MRIS1; and between “sometimes” and “very often” on MRIS2.
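The threshold and average-measure checks described in this section reduce to a few comparisons. The Python sketch below assumes the ordered thresholds (τ1, τ2, and so on, in logits) and the per-category average measures have already been obtained, for example from a WINSTEPS category table. It applies the guidelines from Bond and Fox (2001) cited earlier: thresholds advancing by at least 1.4 and no more than 5 logits, average measures rising with each category, and category fit below 2. All numbers are invented for illustration, and the function names are hypothetical.

```python
def threshold_problems(thresholds, min_step=1.4, max_step=5.0):
    """Return (k, k + 1, advance) for each pair of adjacent thresholds
    tau_k, tau_(k+1) whose advance falls outside [min_step, max_step] logits.
    Thresholds are numbered from 1, as tau_1 ... tau_M.
    """
    problems = []
    for k in range(1, len(thresholds)):
        advance = thresholds[k] - thresholds[k - 1]
        if not (min_step <= advance <= max_step):
            problems.append((k, k + 1, round(advance, 2)))
    return problems


def measures_increase(average_measures):
    """True if the average measure rises with every higher category."""
    return all(hi > lo for lo, hi in zip(average_measures, average_measures[1:]))


def category_fit_ok(category_mnsq, limit=2.0):
    """True if every category fit statistic is below the limit."""
    return all(m < limit for m in category_mnsq)


# Invented estimates for a four-category (collapsed) scale.
thresholds = [-3.0, -0.9, 0.2]
average_measures = [-1.0, 0.2, 1.5, 2.8]
category_mnsq = [1.1, 0.9, 1.3, 0.8]
print(threshold_problems(thresholds))  # [(2, 3, 1.1)]
print(measures_increase(average_measures), category_fit_ok(category_mnsq))  # True True
```

In this invented example the average measures and category fit are acceptable, but the advance from τ2 to τ3 is only 1.1 logits, the same kind of pattern reported above for several subscales. The sketch reports threshold numbers rather than category labels, leaving the mapping to labels to the analyst.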

Discussion

The four-phase process yielded some validity evidence to support the interpretation and use of BTARIQ scores. In the first three phases, we sought validity evidence based on instrument content by analyzing the association between the content of the instrument (e.g., items, wording, and format) and the constructs of teacher, researcher, and mentor role identity. The expert review allowed us to ascertain the extent to which items aligned with the constructs of interest. With the items grounded in the literature, all five experts matched more than half of the initial items (25 of 46) to the intended dimensions, supporting content validity, representativeness, and alignment of items to constructs. The pilot provided additional information regarding questionnaire instructions, scale, and item wording. Overall feedback was positive, and the TAs’ suggestions led to a clearer and more useful instrument. Collectively, the content experts and TAs gave us insight into the association between the instrument’s content and the constructs of interest.

The fourth phase provided a more in-depth investigation of the scales’ dimensionality, item fit, score reproducibility, and rating scale functioning. We found each scale to be multidimensional, which violates the Rasch model’s assumption of unidimensionality. Hence, in subsequent analyses, we used only the unidimensional subscales of each scale. The Teacher Role Identity Scale analysis suggests two subscales: aspects of reflective teachers (TRIS1) and outside perceptions and teaching practices (TRIS2). For the Researcher Role Identity Scale, three themes emerged: perceptions of TAs as researchers (RRIS1), using the process of science (RRIS2), and TA-student relationships (RRIS3). For the Mentor Role Identity Scale, the two subscales captured activities TAs may more readily see themselves doing (MRIS1) and aspects of higher-level mentoring (MRIS2).

In examining the psychometric properties of the BTARIQ (fit of the data to the Rasch model, ability to distinguish between high and low performers, reliability, construct validity, and rating scale functioning), we found some evidence to support the interpretation and use of BTARIQ scores. However, it is worth noting that three of the subscales (TRIS2, RRIS1, and MRIS1) had less than 50% of raw variance explained by measures. We found reasonable fit of the data to the Rasch model across the subscales for teacher, researcher, and mentor role identity. However, many of the subscales had rating scale issues, highlighting the need for further investigation. Although the sample size for the Rasch analysis was small, it was sufficient for an exploratory analysis. Future studies will seek larger samples to provide additional information about the psychometric properties of the BTARIQ from both classical test theory (e.g., factor analysis) and modern test theory (e.g., Rasch) perspectives. Future studies will also examine additional variables such as ethnicity, gender, and year in program.

We anticipate that the BTARIQ may be used to understand TA role identities in different lab contexts. Importantly, the results of the BTARIQ can help shape conversations between TAs and supervisors regarding TA professional development. Understanding the roles with which TAs identify less frequently can prompt supervisors or mentors to recommend or encourage TAs to seek workshops and other professional development opportunities that will help them grow as teachers, researchers, and mentors. In addition, departments may use data from the BTARIQ to develop appropriate professional development activities for TAs.

Beyond recognizing their own identities, TAs can contribute positively to undergraduate learning as laboratory instructors and may influence student retention in science through the classroom climate they develop (O’Neal et al., 2007). TAs’ mentoring may therefore be important for student retention. Furthermore, Huffmyer and Lemus (2019) found that TA behaviors such as pace of instruction and checking for student understanding were positively related to student homework grades. Although this was a preliminary study, the initial instrument provides a mechanism for understanding TAs’ role identity in the context of different teaching experiences.

Acknowledgments

We would like to thank the teaching assistants who participated in this research and our panel of expert reviewers for the valuable feedback on the initial instrument. We also thank the staff of The Ohio State University’s Research Methodology Center for providing constructive feedback on an earlier version of this manuscript. Finally, we thank the Department of Educational Studies at The Ohio State University for their support of this work.

Amy E. Kulesza (kulesza.5@osu.edu) is the assistant director of education research and development in the Center for Life Sciences Education, and Dorinda J. Gallant (gallant.32@osu.edu) is an associate professor in the Department of Educational Studies, both at The Ohio State University in Columbus, Ohio.
