Is That Plausible?

How to Evaluate Scientific Evidence and Claims in a Post-Truth World

The Science Teacher—January/February 2023 (Volume 90, Issue 3)

By Imogen R. Herrick, Gale M. Sinatra, and Doug Lombardi

There has never been a more pressing need for students to learn how to evaluate scientific information online than during the COVID-19 outbreak. Information, misinformation, and disinformation spread quickly across online news and social media platforms. Misleading or incorrect scientific information about infectious diseases can lead to negative outcomes for those who believe it or act on it.

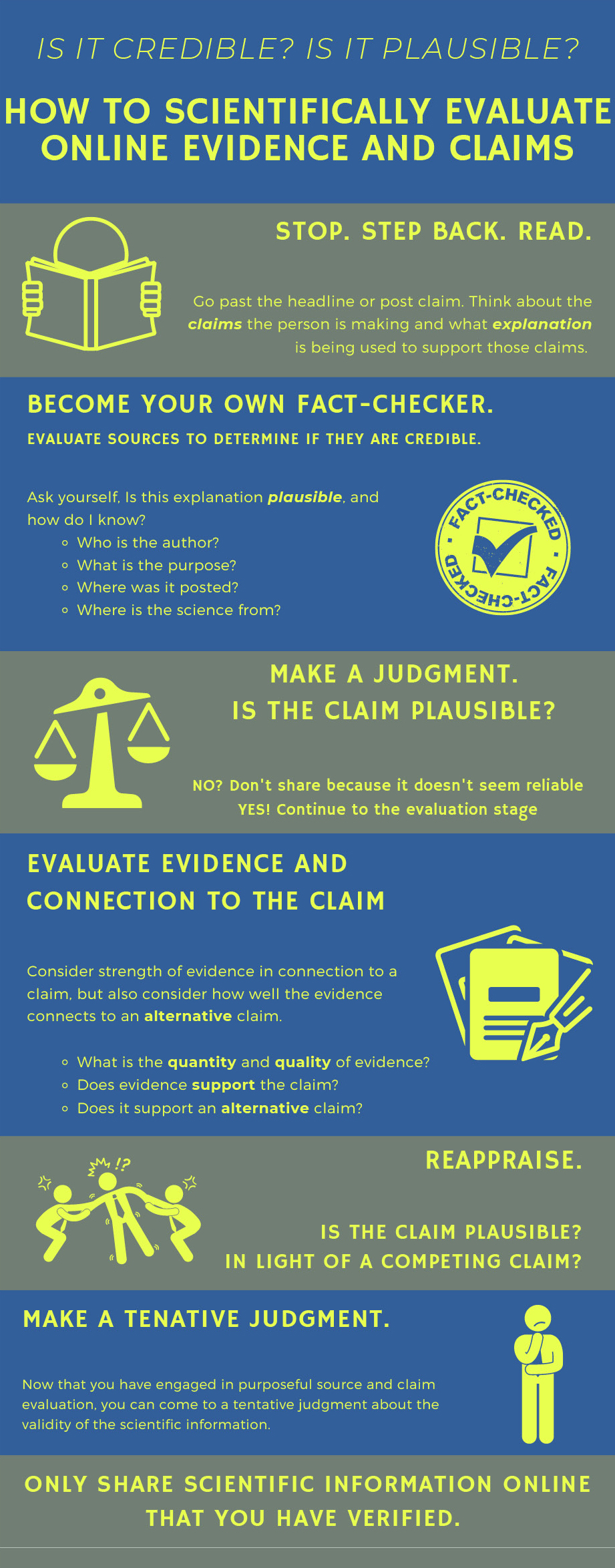

The set of six steps described in this article will support students in evaluating scientific claims online, particularly claims that might influence their understanding or future behaviors concerning disease prevention and transmission. These steps walk students through how to critically evaluate claims, consider alternative claims, and reason scientifically through the evidence presented. Specifically, when evaluating the connections between sources of information and knowledge claims, it is important for students to reconsider the plausibility of competing claims. Thus, as students work through the six steps, they are prompted to ask “Is it plausible?” when evaluating competing claims. This process requires students to engage in many of the science and engineering practices outlined in the Next Generation Science Standards (NGSS) and supports their scientific sensemaking around the information they encounter in news sources or social media posts.

Given the sheer volume of misleading information online, students must learn how to evaluate scientific information they encounter in news sources or social media posts. Our research shows that evaluating the connections between sources of information and knowledge claims is imperative. More specifically, this process is supported by reconsidering the plausibility of various competing claims. Thinking about plausibility requires a student to make a tentative judgment about a claim’s truthfulness. Unlike personal beliefs, plausibility judgments are not something students are committed to, which leaves the judgments open to reconsideration that can lead to a more scientific stance and deeper understanding (Lombardi et al. 2016). For example, when evaluating the safety of the MMR (measles, mumps, rubella) vaccine, a student will encounter many competing claims online. The student needs to come to a reasoned and scientific conclusion about the sources and truthfulness of each claim about the MMR vaccine they encounter (Lombardi et al. 2018; Lombardi et al. 2014). After doing so, the student can assess the plausibility of each claim in relation to a competing claim and come to a tentative judgment about the safety of the MMR vaccine.

Steps in evaluating online scientific claims

We drew on the literature examining sources of scientific information and claims, and developed six steps that students can take to evaluate scientific claims. These six steps align with many of the NGSS science and engineering practices (NRC 2012); you can support students in learning about and employing these steps when they encounter scientific information online (Sinatra and Lombardi 2020).

Walking students through these six steps can be done in different ways; they can be used as a guidepost for an entire unit or as a one-day classroom activity. We recommend using the steps and the associated infographic (Figure 1) with students in 5th through 12th grade. In this article, we describe how to use the six steps during a 60-minute class period using the 5E framework as a planning tool (Bybee 2015). Table 1 displays the lesson plan and how it aligns with the NGSS science and engineering practices; the sections that follow provide information to help you plan for using the six steps with your students.

Setting the stage: What is misinformation?

To begin, we discuss the reasoning behind each step and how to tackle it as a whole group. Then students work in small groups of two to four and practice the skills in each step using online content. We start by helping students understand why they need to be critical when reading information online. We explain to students how important it is to evaluate online information about science and health issues.

We provide students with the following example of misinformation that could be harmful: A headline from the Gateway Pundit (an outlet known for spreading misinformation) claimed a “Stanford study” demonstrated that face masks do not protect against COVID-19 and are harmful to students’ health. We then help students identify why this article is misleading by pointing out that the “study” was just a hypothesis, with no scientific evidence to back it up. Also, this hypothesis came from an author who had no connection to Stanford University. Then we discuss how dangerous misinformation like this could be for public health because this post was shared thousands of times on social media, leading to some people believing that masks were harmful. Using this article, or others like it, can help set the stage for students to understand that in this age of misinformation, they need strategies to help them critically evaluate scientific claims, particularly claims that might affect their understanding or future behaviors concerning disease prevention and transmission.

After students have an idea about how easy it is to encounter misinformation online, we discuss how students typically use the internet. We pose a simple question: “How do you typically search for information online?” We find the most common strategy students report for online searches is to “just Google it.” However, given the proliferation of misinformation online, this strategy is not only insufficient but also dangerous to students’ health and well-being. When students “Google it,” they read the first couple of articles found by the search engine’s algorithm. Sources at the top of the list have the most “hits” but are often inaccurate and misleading. Using this strategy, students are likely to come across information that fits into one of the following categories: misinformation, disinformation, or malinformation (see Table 2). Share these categories and definitions with students so they can begin to understand the complex world of online information searches.

Step 1: Stop. Step back. Read.

The first thing students should do when they read a piece of scientific information online is to stop and think. To support students in slowing down their online habits, or what the experts call exercising “click restraint,” ask students to share their previous information-sharing behaviors. For example, you could ask students if they have ever engaged in the practice of clicking, reading a headline, agreeing with the headline’s information, and then sharing the article without ever reading it. You could also share a time you engaged in this same behavior to demonstrate that everyone is guilty of this occasionally. This behavior is so typical that Twitter attempts to slow down users by asking if they want to open and read an article before sharing, if they have not already. Engaging students in a conversation about click restraint will allow them to reflect on their online sharing habits and might encourage them to stop, step back, and read before sharing in the future.

Reading past the headline is essential, but it is not enough. Students must also resist the urge to quickly accept the claims made in the information presented (Sinatra and Lombardi 2020). Instead, students should engage in the science and engineering practice of asking questions and defining problems by stopping to think about the claims presented and what explanations are offered in support of these claims (NRC 2012). For example, perhaps a student reads an article that falsely claims only women can contract the human papillomavirus (HPV) and states that the virus causes cervical cancer as evidence for this claim. This student should stop and ask, Is this explanation plausible, and how do I know?

Small group practice

Are they trying to sell you something? Have students work with a neighbor or place them into groups of two to four students. Ask student groups to pull up the main page of a popular news outlet such as Yahoo News, Fox News, MSNBC, or CNN. Using the content on the front page, ask small groups to select four to five pieces of content displayed in different locations on the page (top-middle-bottom, left-center-right). Using these selections, provide students 10 minutes to determine if the content they selected is trying to sell something. Groups should document the evidence they gather and use it to make a decision for each piece of content. Bring the whole group back together and ask each small group to share one type of evidence they employed to make a decision.

Step 2: Become your own fact-checker

Once students have stopped themselves from making an automatic, non-evaluative judgment, they can engage in the science and engineering practice of obtaining, evaluating, and communicating information by doing some fact-checking. To do this, students should check the source by asking the following questions:

- Who wrote the article and where did the information first appear online?

- Is the person who wrote it an expert, or did the author draw the information from expert sources?

- Did the information appear on a reputable outlet?

- Does the author have a financial or political motivation for making the claim that could compromise their objectivity?

Experts on source evaluation recommend that students act like a fact-checker by immediately opening up other web pages of known credible sites to cross-check and compare (Bråten et al. 2019; McGrew et al. 2019). This strategy is called lateral reading (as opposed to vertical) because students are reading across credible websites within their browser to fact-check information in an article or web page (Wineburg et al. 2022; Wineburg and McGrew 2017). A few websites students can use to cross-check scientific information on infectious diseases are the Centers for Disease Control and Prevention (www.cdc.gov), the World Health Organization (www.who.int), the National Institute of Allergy and Infectious Diseases (www.niaid.nih.gov), and the Infectious Diseases Society of America (www.idsociety.org).

After students have engaged in their own fact-checking, they should make a judgment by asking, Is the claim plausible? If the answer is no, they should not share the information. If the answer is yes, they should move to the next step and evaluate.

Small group practice

What’s their motivation? Provide small groups with a piece of misinformation that is not obviously fake at face value. We have used posts from Twitter that make over-the-top claims about current infection rates of diseases like monkeypox, COVID-19, or the flu. Encourage groups to dig deeper into the claim made in the misinformation by answering the questions listed above. You can also provide groups with a list of credible websites such as the examples provided. Groups should use the lateral reading approach to determine if the source of the content is credible. Once groups have determined if the source of the information is credible, they should make a plausibility judgment about the claim by asking and answering, Is the claim plausible?

Step 3: Evaluate evidence and connection to the claim

Once students identify a plausible claim, they need to critically evaluate how well different pieces of evidence support that claim. To do this effectively, students must consider the strength of evidence in connection to a claim and how well the evidence connects to an alternative claim. For example, in the initial days of the COVID-19 pandemic in the United States, some claimed that protective masks did nothing to stop the spread of the virus, whereas others claimed that wearing protective masks was beneficial and could help “flatten the curve.” Further refinements to these claims included the notion of the availability of protective masks for medical personnel. As the crisis has progressed, various lines of evidence have emerged that, when considered together, support the claim that protective masks are indeed beneficial, with priority of use going to frontline medical and emergency responders.

Having students think about how well particular evidence supports a claim or an alternative claim engages students in the science and engineering practice of engaging in argument from evidence (Lombardi 2019). It is critical at this step to consider alternative knowledge claims that also relate to the evidence students encounter. You can support students in their critical evaluations by asking or having them ask the following questions:

- What is the quantity of evidence?

- What is the quality of evidence? How do you know?

- Does the evidence support the claim?

- Does it support an alternative claim?

Small group practice

What’s the alternative? Provide students with a photo or video that makes a claim (e.g., information claiming that vaccines cause autism). We find apps such as Instagram or TikTok to be good sources for these types of examples. Provide small groups with 10–15 minutes to evaluate the claim using the questions above and employing the lateral reading technique. End the activity with a whole-group discussion in which small groups share how they determined the quality of evidence.

Step 4: Reappraise

Students are likely to make plausibility judgments about claims without purposeful thought and commitment. However, our classroom research demonstrates that when students examine their plausibility judgments explicitly and scientifically, these judgments shift toward more scientific stances (Lombardi 2019; Lombardi et al. 2013). After students have considered how well multiple lines of evidence support alternative and competing claims, they should return to their plausibility judgments about the claim presented and reappraise. They can ask themselves, Is the claim plausible in light of a competing claim?

Step 5: Make a tentative judgment

At this point, students have engaged in multiple NGSS science and engineering practices while evaluating the sources of scientific information and claims related to infectious diseases.

Supporting students in the purposeful evaluation of scientific claims they encounter online is critical because scientific evidence can change rapidly, as the COVID-19 pandemic clearly showed. This flexible nature of science might prompt students to view such moments of uncertainty and change as lowering the validity of the scientific process. However, when they engage in more purposeful source and claim evaluation, they come to understand that the scientific process is highly reasoned and more clearly see how reappraising plausibility judgments increases confidence in, and understanding of, science.

In fact, when students reappraise their plausibility judgments through the evaluation of evidence sources and alternative claims, they engage in scientific thinking (Lombardi et al. 2022). Through this process, students can make a tentative judgment about the validity of the scientific information they encounter. They may also gain a greater understanding of the scientific processes, which could support them in placing more value on explanations and solutions proposed by the scientific community.

Step 6: Only share information you have verified

Once students have made a tentative judgment that a scientific claim is plausible and supported with credible evidence, only then should they share the information. However, students need to share this information thoughtfully by naming the source and qualifying the information. For example, if a student engaged in the purposeful evaluation of the claim “Individuals could become infected with the chicken pox virus more than once” and came to the tentative judgment that this claim is credible, then the student should share that information by saying, “I found this information about the chicken pox virus on TikTok and checked it out on the CDC website. CDC confirms that you can catch the chicken pox virus twice because not everyone will produce enough antibodies to protect them from contracting it again.”

Small group practice

To share or not to share? Prompt groups to discuss how they determined the quality of evidence and any differences that came up between groups in Step 3. Have them reappraise their plausibility judgment about the claim in the content from Step 3 by asking, Is the claim plausible in light of a competing claim? At this point, groups must decide if they will or will not share this information. Each group must defend its decision to share or not share the information. If students decide to share, they should construct an appropriate post that shares the information and explains how they verified it (see example in Step 6).

Conclusion

These steps may seem complicated and time-consuming, but with practice, students can take these six steps almost as quickly as they can text six friends. You can support students in developing these habits by hanging the How to Scientifically Evaluate Online Evidence and Claims infographic (Figure 1) in your classroom and reminding students to use these six steps. Once students become proficient, they will automatically find themselves asking, Is this plausible, and how do I know?

Imogen R. Herrick (iherrick@usc.edu) is a PhD candidate and Gale M. Sinatra (gsinatra@usc.edu) is a professor, both at the Rossier School of Education, University of Southern California, Los Angeles, CA. Doug Lombardi (lombard1@umd.edu) is an associate professor at the University of Maryland, College Park, MD.