Research and Teaching

Building First-Year Science Writing Skills With an Embedded Writing Instruction Program

Journal of College Science Teaching—January/February 2020 (Volume 49, Issue 3)

By David Dansereau, L. E. Carmichael, and Brian Hotson

An important foundational skill developed in an undergraduate science program is the ability to find, critically evaluate, and communicate scientific information. Effective science communication depends on good writing; therefore, we leveraged student support services offered by the Writing Centre and Academic Communications, in conjunction with the Office of the Dean of Science and the departmental chair of Biology at Saint Mary’s University (Halifax) to help meet science-writing outcomes in the Biology program. Our initiative began with writing-instruction workshops, embedded into first-year labs, which supported student writing of formal lab reports. The program also featured instructor feedback on drafts and final resubmissions, and mandatory consultations with discipline-specific writing tutors during the revision phase. We used surveys, attendance records, and grades to evaluate the program’s success. Writing tutoring was incentivized and well attended, and we measured a significant improvement in final lab report grades for students who made use of the program. Over 80% of participants, both science majors and nonmajors, reported that the program had prepared them for future courses.

In biology laboratories, we offer students opportunities to perform simple experiments to develop practical skills, gain experience in data collection and analysis, and better understand both the scientific method and course subject matter. At Saint Mary’s University (SMU) in Halifax, Nova Scotia, Canada, we recognize that students are from diverse educational backgrounds and cultures, with plurilingual aspects to their knowledge production and acquisition (Common European Framework of Reference for Languages, 2017). The knowledge-building opportunities provided in biology guide students toward an understanding of science and science writing, as an approach that provides evidence, rather than a collection of facts to memorize (Druschke et al., 2018). We evaluate students using the science writing genre of lab reports, where students interpret data from graphs, construct explanations, and practice linking empirical evidence to analytical claims. The main goal of these reports within a course is to develop content knowledge and conceptual understanding (Armstrong, Wallace, & Chang, 2008; Gerdeman, Russell, & Worden, 2007). More broadly, we assign lab reports to teach the scientific method, to help develop the clear and concise writing skills that are essential to success in the sciences, and to build students’ understanding of a main genre of science writing typical to biology (Franklin, DeGrave, Crawford, & Zegar, 2002).

Many first-year students are surprised to discover that writing is required in science programs and are consequently anxious about their ability to meet expectations (Carlson, 2007). Others are averse to what they perceive as the subjectivity of writing (Franklin et al., 2002) or view required writing assignments, such as lab reports, as pointless busy-work (Carlson, 2007).

In contrast, faculty recognize the importance of written communication in science courses (e.g., Coil, Wenderoth, Cunningham, & Dirks, 2010; Druschke et al., 2018; Firooznia & Andreadis, 2006) but also report reluctance to teach or assign such writing, citing lack of writing instructional time, overwhelming grading load, and the generally poor quality of first-year work (Franklin et al., 2002; Lankford & vom Saal, 2012; Train & Miyamoto, 2017).

Science majors at SMU are expected to master discipline-specific genre writing skills, beginning in introductory courses such as SMU’s Molecular and Cellular Biology (BIOL 1201). Involving both lecture and lab components, BIOL 1201 is a required course with a registration of up to 250 students. To meet our first-year writing objectives, the Faculty of Science and the Writing Centre and Academic Communications (WCAC) at SMU partnered in the development and implementation of a novel, embedded writing-instruction program for BIOL 1201. Launched in 2013, the Report Support Program (RSP) consisted of mandatory, in-class writing workshops and optional one-on-one tutoring sessions. Both components of the program were facilitated by WCAC staff purposefully hired with background and/or firsthand experience in science writing, many of whom had advanced degrees in science.

The program’s goals were: (a) to introduce the nature and importance of science writing; (b) to give students an opportunity to integrate feedback via a revision-and-resubmission progression; (c) to provide opportunities for students to synthesize and better understand lab content (Armstrong et al., 2008; Gerdeman et al., 2007); and (d) to improve the overall quality of student assignments, thus reducing marking time (Haviland, 2003). We tracked participation in the program and evaluated whether it improved grades and increased students’ confidence in their writing abilities.

Methods

Program description

Statistical analysis

The correlation between reported confidence in writing and survey responses on prior writing experience was evaluated by linear regression analysis using the ordinary least squares LINEST and FDIST functions of Microsoft Excel. Survey responses of “no” and “yes” were assigned values of 0 and 1. Survey responses on a Likert scale were assigned values from 1 (strongly disagree) to 5 (strongly agree) and comparisons were made with 2-tailed Student’s t-tests. Differences between the grades of tutored and untutored students were tested with 2-tailed Student’s t-tests and a Bonferroni multiple-test correction. A single-factor analysis of variance (ANOVA) with Tukey’s post hoc test for multicomparison of means was used to test whether repeated tutoring sessions affected grades on the final lab report. Graphs were made with Plot2 (plotdoc.micw.org) or Seaborn 0.9.0 (seaborn.pydata.org).
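The regression step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the authors' workflow: the original analysis was run in Microsoft Excel, the function name `ols_fit`, the mapping names, and the intermediate Likert labels ("disagree", "neutral", "agree") are hypothetical, and the six survey responses are invented for demonstration. The sketch codes responses as described (no/yes as 0/1, Likert as 1 to 5) and returns the slope, intercept, R², F statistic, and residual degrees of freedom, which are the quantities LINEST reports for a single predictor. Converting F to a p value (the FDIST step) is left out because it requires an F-distribution function that the Python standard library does not provide.

```python
from statistics import mean

# Coding scheme from the text: no/yes -> 0/1, Likert -> 1 to 5.
# The three middle Likert labels are assumed for illustration.
YES_NO = {"no": 0, "yes": 1}
LIKERT = {
    "strongly disagree": 1,
    "disagree": 2,
    "neutral": 3,
    "agree": 4,
    "strongly agree": 5,
}


def ols_fit(x, y):
    """One-predictor ordinary least squares.

    Returns (slope, intercept, r_squared, f_stat, df_resid).
    """
    n = len(x)
    x_bar, y_bar = mean(x), mean(y)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar

    # Partition the total sum of squares into regression and residual parts.
    ss_total = sum((yi - y_bar) ** 2 for yi in y)
    ss_resid = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    ss_reg = ss_total - ss_resid

    df_resid = n - 2  # two estimated parameters: slope and intercept
    r_squared = ss_reg / ss_total
    f_stat = ss_reg / (ss_resid / df_resid)  # regression df is 1
    return slope, intercept, r_squared, f_stat, df_resid


# Invented responses: (prior university lab report experience, writing confidence).
responses = [
    ("no", "disagree"),
    ("no", "neutral"),
    ("no", "disagree"),
    ("yes", "agree"),
    ("yes", "strongly agree"),
    ("yes", "agree"),
]
x = [YES_NO[experience] for experience, _ in responses]
y = [LIKERT[confidence] for _, confidence in responses]

slope, intercept, r_squared, f_stat, df_resid = ols_fit(x, y)
print(f"slope={slope:.3f} intercept={intercept:.3f} "
      f"R^2={r_squared:.3f} F(1, {df_resid})={f_stat:.3f}")
```

With a 0/1 predictor, the fitted slope equals the difference in mean confidence between the "yes" and "no" groups, which makes the coefficient straightforward to interpret.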

Results and discussion

Student demographics and writing confidence

In 2014, 21% of BIOL 1201 students self-identified as international, 53% of whom were second-language learners (L2 learners). Seven percent of domestic students were also L2 learners. International students were significantly less confident than domestic students in their English-language writing skills (Figure 1, 2-sample t(178) = 3.0, p = .003), regardless of first language (data not shown). However, we found no difference between domestic and international students in confidence in writing lab reports (Figure 1, 2-sample t(173) = −0.7, p = .5). Only 24% of domestic students, and 22% of international students, responded agree or strongly agree to the statement, “I am already well-prepared to write lab reports,” suggesting widespread anxiety about discipline-specific expectations. When asked to identify their concerns, students most often mentioned lab report format (31%), followed by writing a Discussion section (19%), and then by sentence-level concerns such as passive voice, formal style, or appropriate use of scientific vocabulary (10%).

More than 90% of students had written lab reports in high school, but because BIOL 1201 is a first-year course, only about 25% had previous experience writing university-level lab reports. This experience was the strongest predictor of writing confidence at the outset of the program (Table 1). Factors such as high school writing experience, previous writing instruction, or reading peer-reviewed articles had little bearing on writing confidence.

Table 1

In-class workshops

Despite instruction that was provided during 2013 in-class workshops, certain genre errors—such as writing Materials and Methods as imperative voice “recipes” rather than describing experiments in past tense paragraphs—were widespread in student reports. In 2014, we modified workshops to target these common misunderstandings (Pagnac et al., 2014). Addressing basic structure and formatting issues in workshops allowed tutors to focus on finer points of structure and style during WCAC writing tutoring consultations (Franklin et al., 2002).

Use of tutoring support

We logged 392 visits to drop-in tutoring in 2013; individual students used the resource between one and nine times. Demand for tutoring was highest immediately before deadlines: In one memorable 3-hour block, 51 students signed in, many of whom walked out before receiving help. In this pilot year, the WCAC had only two science writing tutors on staff, so generalist tutors assisted when available. Science writing tutors also used a modified group-tutoring model on heavy-traffic days. Group writing tutoring was an efficient strategy for addressing common errors, such as including interpretation in Results sections, but many students were frustrated by the lack of personalized feedback. In 2014, the Centre hired a third tutor, and the WCAC Director (BH) assisted during deadline weeks. In 2015, we switched from a drop-in tutoring model to scheduled appointments. This further reduced the strain on tutors and ensured that every student received the same focused attention.

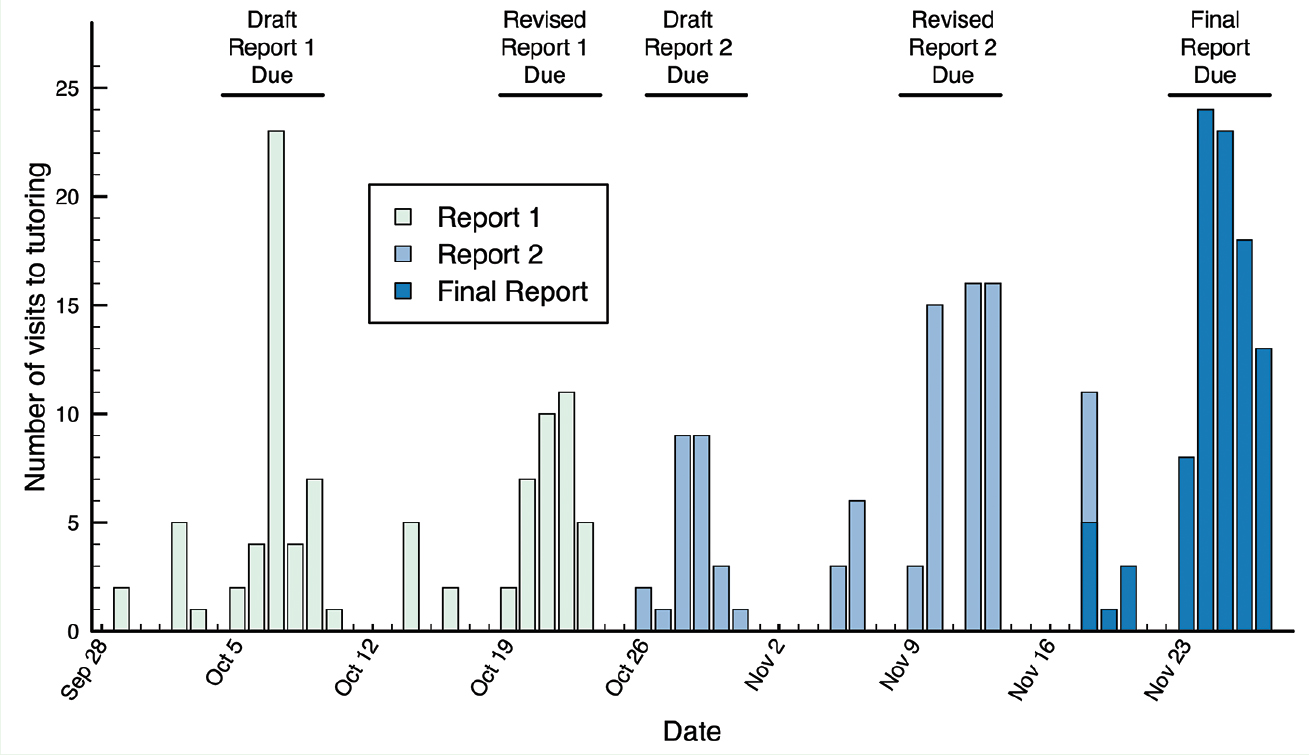

In both 2013 and 2014, students working on Report 1 were more likely to seek tutoring before draft submission than students working on Report 2 (Figure 2). On surveys, students cited increasing busyness as the semester progressed, but also an increased awareness of the safety net supplied by the option to revise their reports if needed. Attendance prior to submission of the final report was highest of all, consistent with a higher assignment weight and lack of a revision cycle on this report (49 visits, Report 1; 25 visits, Report 2; 95 visits, final report).

Figure 2. Visits to tutoring during 2014. Students in each lab section had one week to complete each draft or revision following grading. Demand for tutoring was highest during deadline weeks. Demand for tutoring also varied from assignment to assignment, as students determined how to make best use of the program.

Although traffic patterns were consistent in 2013 and 2014, attendance in 2014 was lower across the board (279 total visits by 101 students). Improved in-class writing workshops may be one reason, but a shift in grading policies seems more likely. In 2014, one lab instructor marked so generously that many students received very high marks on their first draft; another took the opposite approach, marking many reports with minor omissions as “incomplete” and forbidding those students to resubmit. However, 47% of students who used the RSP in 2014 made between 3 and 10 visits, suggesting that many who attended considered the time well spent.

Tutoring improves both grades and confidence

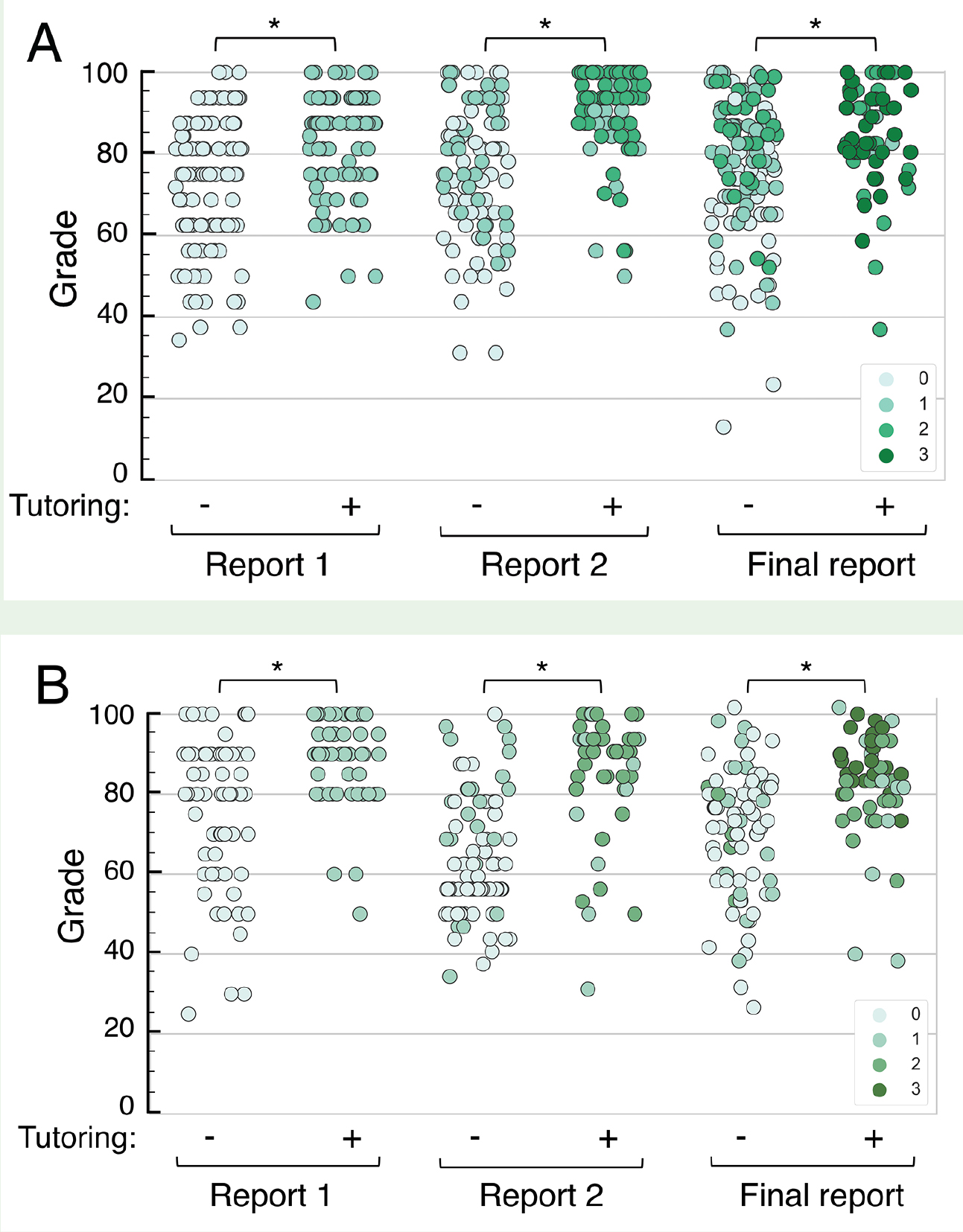

Students who attended one or more tutoring sessions attained higher grades on average than those who did not use the resource. This was true on both Reports 1 and 2, where students were permitted to resubmit their work, and on the final report, when they were not. Students who were tutored and revised their lab reports scored significantly higher on Reports 1 and 2 in both years (Table 2). In both years, students who were tutored while preparing their final lab report also scored significantly higher than students who were not (Table 2). Because students did not resubmit their final lab report, this suggests that tutoring attendance has a positive effect on grades above and beyond that of simply following a grader’s written feedback during revision. Survey data supports this conclusion: as one student said, “I got almost 100% on my lab. I had so many mistakes within [sic] originally, I probably would’ve received a 50%.”

Table 2

We also observed a positive relationship between the total number of tutoring sessions attended throughout the semester and students’ marks on their final lab reports. An analysis of variance on final report scores showed that the effect of tutoring was significant (Table 3). Post hoc analyses using the Tukey-Kramer test indicated that students tutored on all three lab reports, in both 2013 and 2014, performed significantly better on the final lab report than students with no tutoring (Table 3). The effects of fewer writing tutoring events varied slightly from year to year, but a general, positive trend in grades with increased tutoring is evident (Figure 4). In 2013, we found that students tutored on any two lab reports performed significantly better than students with no writing tutoring, and in 2014, students tutored on three lab reports had significantly higher grades compared with students tutored only once (Figure 4, Table 3). Our data show that increased frequency of writing tutoring had a positive effect on final lab report grades, and that multiple writing tutoring consultations had additive impacts on performance.

Figure 3. The distribution of grades for 2013 (A) and 2014 (B) on three lab reports: a Materials and Methods section (Report 1), a Results section with figure and caption (Report 2), and a combined final report. Grades for each report were clustered into strips by tutoring (+) or no tutoring (–) on that lab report. A green hue value of between 0 and 3 illustrates the cumulative number of lab reports tutored. Asterisk denotes a significant difference between the tutored and nontutored groups, p < .01, t-test with Bonferroni correction.

Figure 4. Writing tutoring increased grades on the final lab report in 2013 (A) and 2014 (B). Each dot represents one student’s grade on the final lab report. Grades are clustered into strips according to the total number of lab reports tutored, which increased by one for each of the three lab reports if a student attended at least one tutoring session for that report. The horizontal line is the mean. Asterisk denotes a significant difference between two groups, p < .01, analysis of variance, Tukey post hoc test.

Table 3

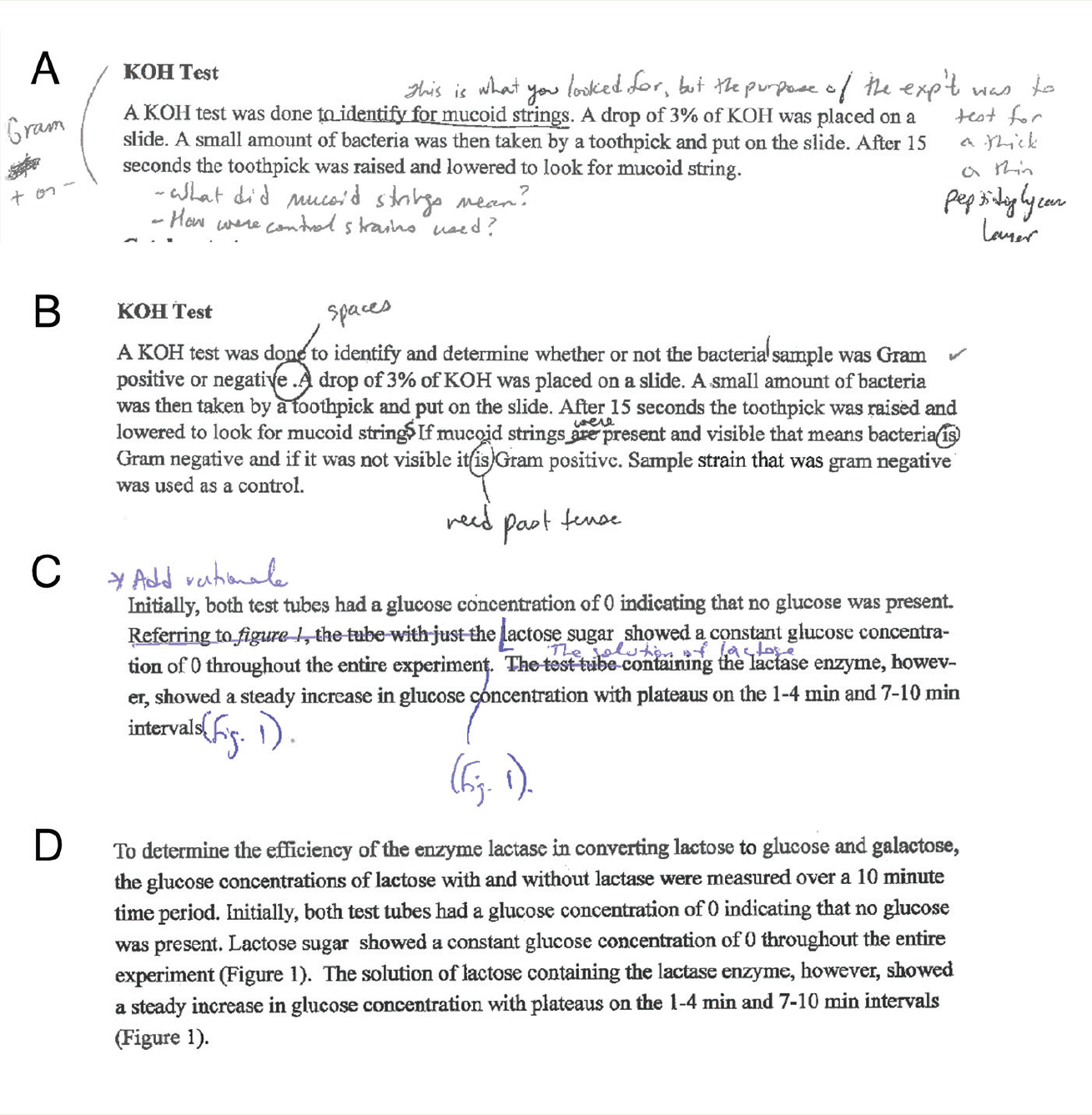

Although many students viewed grade improvements as the most important reward for participating in Report Support, others acknowledged the development of their own skills as writers. Areas of improvement noted on surveys include: format and structure, “being organized,” clarity and concision of sentences, and scientific style. One student said, “I believe that the Report Support system helped me to improve my own writing, as it showed me a better way to organize and refine my writing process.” Further to this point, writing tutors reported that many students applied feedback from consultations broadly, noting systemic improvements in subsequent drafts (Figure 5).

Figure 5. Excerpts of student reports before and after tutoring. Handwritten annotations are original notes made by graders and/or writing tutors. (A) Report 1, initial submission, (B) same paragraph, following tutoring and revision. (C) Report 2, initial submission, (D) same paragraph, following tutoring and revision.

In 2013, 87% of students reported increased confidence when writing lab reports at the end of the semester; in 2014, that number had jumped to 97%. We attribute this increase to improvements in the content of the workshops, staffing changes in 2014, and the development of handouts that provided sample paragraphs and answered common questions (Titus, Boyle, Scudder, & Sudol, 2014). Perhaps most encouraging, 86% (2014) to 88% (2013) of BIOL 1201 students said that the RSP had prepared them for future courses, including those who were not majoring in science.

Lessons from the RSP

In exit surveys, we asked students to identify their favorite component of the RSP. Responses were evenly split between “workshops” and “tutoring.” Those who chose workshops appreciated foreknowledge of expectations, or cited scheduling conflicts that prevented them from attending writing tutoring sessions; those who chose writing tutoring appreciated the chance to receive personalized feedback. The most popular response, however, was “revision,” indicating the value in creating low-stakes opportunities for students to practice and learn from their mistakes. Although some students felt that writing tutoring consultations should not be a requirement for resubmission, we consider these interactions critical, as they forced students to think critically about their work within the science writing genre and to view revision as part of their holistic writing process. Writing tutors also acted as “translators” for graders’ feedback and comments (DeLoach, Angel, Breaux, Keebler, & Klompien, 2014), showing students which aspects of their work had prompted particular pieces of feedback: “I learned more about writing than I did in the classroom and sometimes when I didn’t understand what the TA’s comments meant the Writing Centre could help me out.…Every rewrite I did, with help from the tutoring sessions I got a better grade on.”

Given the number of staff involved, both within the Faculty of Science and the WCAC, consistency of messaging was the single biggest challenge in administering this program (Franklin et al., 2002; Webster & Hansen, 2014). Students reported receiving conflicting advice when working with more than one writing tutor; others said they made changes based on a tutor’s advice, only to lose points during marking (Webster & Hansen, 2014). Variations in expectations were also observed between lab sections, despite the fact that all graders were working from the same instructions and rubric, written by the course lecturer (DD). It is not surprising that both students and writing tutors found these conflicts frustrating, and we took several steps to mitigate them. DD hosted weekly meetings for lab instructors, teaching assistants, and WCAC staff in which assignment expectations were discussed. The program coordinator (LEC) stayed in close communication with DD throughout the semester, reporting his expectations to the writing tutoring staff and reporting issues arising during writing tutoring back to DD (DeLoach et al., 2014; Titus et al., 2014). In 2015, we improved grader training with the addition of two program coordinator–led grading workshops for the lab instructors, teaching them to identify writing-quality issues in student reports, in addition to factual errors (Pagnac et al., 2014). Despite these efforts, conflicts were inevitable. In all cases of uncertainty, tutoring staff referred students to their graders for further advice.

Lessons learned from other courses

Following the success of the RSP, WCAC launched embedded writing tutoring programs for a number of other science and engineering courses at SMU, some at upper year levels. Success of these programs, defined as use of the resource, has been mixed. Two factors seem to have moderate impact on student attendance at tutoring: in-class workshops and writing tutors with experience in the relevant discipline. In-class workshops show students that professors consider writing important (Webster & Hansen, 2014), but also introduce tutors to students, helping them become less intimidated about asking for help (Pagnac et al., 2014; Titus et al., 2014). One BIOL 1201 student said, “It helps having someone experienced who isn’t a teacher. Not because [teachers] don’t help, but because it is easier to talk, explain, and understand someone around the same age (references, examples, etc.).” This comfort level may be especially important for those international students whose academic cultures emphasize a hierarchical authority structure in which questions, especially of assignment marks, are not permitted; indeed, one such student felt intimidated by certain writing tutors, as well as by professors, saying, “I’m shy to ask some question if the tutor not really kind or lovely!”

Similarly, our experience suggests that science and engineering students are far more likely to seek help from tutors they perceive as peers—in other words, those with science and engineering backgrounds who are more likely to understand their particular concerns (Gentile, 2014; Jerde & Taper, 2004). Although writing center scholarship has long debated the value of specialist versus generalist tutors (e.g., Dinitz & Harrington, 2014; Gentile, 2014; Hallman, 2014; Walker, 1998), we find that disciplinary experience lends writing tutors authority, making it more likely that students will implement their advice.

In our experience, the single largest driver of attendance for tutoring is an assigned requirement or incentive: mandatory consultations (Franklin et al., 2002), revision cycles, or bonus points (Titus et al., 2014). In addition to the heavy course loads common in science and engineering programs, a high percentage of students at SMU attend class while holding down part-time or even full-time jobs. Relative to students in humanities or business programs, these students must make highly strategic decisions about where to invest their time and will not do so unless a payoff of some kind for that investment is obvious. Other than the revision cycle used in BIOL 1201, we had best results with a “loyalty-card” model developed for a first-year engineering communications course (EGNE 1206). Students received a stamp for each completed consultation with a writing tutor; after three stamps, the student earned an additional 10% toward their final grade in the course. Data collection for this course was much more limited than that for BIOL 1201. However, writing tutors reported that many students completed their mandatory visits in early October but continued to make use of writing tutoring throughout the semester. We interpret this as an indication that students saw improvement in their work and their grades that justified further investment in writing tutoring support (Franklin et al., 2002; Titus et al., 2014). Indeed, several students in both BIOL 1201 and EGNE 1206 indicated their intention to continue using the Writing Centre for assignments from other classes (DeLoach et al., 2014).

Conclusions

The RSP helped students improve their grades and increased their confidence in their own writing skills. Perhaps more significantly, the program eased first-year students’ transition from high school and equipped them to handle university-level expectations, contributing to their future success as science majors. Participation in the program also increased awareness of the WCAC as an academic support service, and the probability that science students would make use of it throughout their academic careers (Pagnac et al., 2014). This result is critical because although a few introductory assignments can improve writing, they are insufficient to develop mastery (Libarkin & Ording, 2012). As a writing-in-the-disciplines initiative (Hall & Birch, 2018), our program was designed to expose students, via lab reports, to the demands of the biology science-writing genre of scholarly research articles. Students completing our program would benefit from continued instructional scaffolding and writing support in upper year courses.

We found three similar programs in the literature that also demonstrate correlations between embedded writing support and improvement in students’ grades (Franklin et al., 2002; Pagnac et al., 2014; Titus et al., 2014). However, we also found two programs that showed no such correlation (Guenzel et al., 2014; Jerde & Taper, 2004). Both of the latter programs used generalist writing tutors, whereas the programs reporting grade improvements used writing tutors with experience in the course discipline. We believe a major factor in the success of our program was the use of specialist writing tutors who were well-equipped to guide students through writing strategies, as well as to help them achieve command of the biology science-writing genre required by their assignments. Incentivizing the program by tethering revision privileges to attendance at writing tutoring helped overcome any resistance students might have felt toward using the service. These factors, combined with scaffolded assignments and frequent, open communication among program staff, clearly and significantly benefited BIOL 1201 students—both during the course and after it ended.

David Dansereau (david.dansereau@smu.ca) is a senior lecturer in the Department of Biology, L. E. Carmichael is a science writing specialist in the Writing Centre & Academic Communications, and Brian Hotson is the director of Academic Learning Services, Writing Centre & Academic Communications, all at Saint Mary’s University in Halifax, Nova Scotia, Canada.