Arriving at a Better Answer: A Decision Matrix for Science Lab Course Format

By Emily K. Faulconer, Laura S. Faulconer, and James R. Hanamean

This article presents a single case study and a decision matrix describing how one institution informed its choice of modality for a chemistry lab course.

When nontraditional (online) and traditional (face-to-face) courses are pitted against each other, the general consensus is that nontraditional modalities produce equal or better student outcomes (Allen & Seaman, 2013; Bernard et al., 2004; Cavanaugh, Gillan, Kromrey, Hess, & Blomeyer, 2004; Nguyen, 2015; Xu & Jaggars, 2013). Some learning settings, however, tend to be viewed as impossible or very difficult to move online, and laboratory courses are among them. In addition to the traditional hands-on laboratory experience, lab courses are now offered online (simulation based), in virtual reality, and through mail-order lab kits (Faulconer, Griffith, Wood, Acharyya, & Roberts, 2018; Faulconer & Gruss, 2018; Gould, 2014; Pienta, 2013).

Most comparisons of science lab modality in the literature focus on a single experiment or unit. However, several recent whole-course studies have shown that many student outcomes are achieved at equal or greater frequency in nontraditional labs than in traditional labs (Biel & Brame, 2016; Brinson, 2015; Faulconer et al., 2018). Monitoring student learning outcomes during the rollout of a major course change is understandably a best practice. That said, the course format decision need not center on comparable student outcomes (acknowledging that the data on these outcomes are influenced by variables such as pedagogical methods). Instead, when considering the ideal laboratory experience for students, an institution might seek answers to several important questions: What is the primary purpose of the laboratory experience (teaching laboratory skills or reinforcing lecture content)? What are the budget constraints? What is the targeted rate of program growth, and what is the infrastructure capacity? What are acceptable safety and access parameters?

Decision matrix for selecting lab format at ERAU

At Embry-Riddle Aeronautical University (ERAU), a best-fit decision was reached by weighing these very questions. The decision was based on institution-level criteria rather than classroom-level ones such as pedagogical approach. The introductory general chemistry laboratory courses had been taught at two traditional campuses (using traditional face-to-face labs), whereas the online campus had used laboratory simulations. A decision matrix was used to arrive at the ideal lab format for ERAU students taking the lab course through distance education (Table 1). The first step was to rank the importance of the five key criteria: skills teaching, budget, infrastructure capacity, accessibility, and safety. A criterion ranking of 1 indicated a less important criterion, and 5 indicated a very important criterion.

Table 1. Decision matrix for lab format at Embry-Riddle Aeronautical University.

As with the ranking of each criterion's importance, the rating of each lab format on each criterion is subjective and will vary from institution to institution and possibly from one lab course to another. Each modality was rated on a 1-to-5 scale for each criterion, where 5 represented a strong fit of the modality with the criterion and 1 indicated weakness on that criterion. The following case study presents some considerations that informed these ratings for the five key criteria.
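A weighted decision matrix of this kind multiplies each criterion's importance rank by a format's rating on that criterion and sums the products for each format. The Python sketch below illustrates that arithmetic under this reading; the function name `weighted_scores`, the criterion keys, and every number in the example data are hypothetical and are not the ERAU values.

```python
from typing import Dict

# Hypothetical example data: criterion importance ranks (1 = less important,
# 5 = very important) and per-format ratings (1 = weak fit, 5 = strong fit).
# These numbers are illustrative only; they are NOT the ERAU values.
RANKS: Dict[str, int] = {
    "skills": 4,
    "budget": 3,
    "infrastructure": 3,
    "accessibility": 5,
    "safety": 4,
}
RATINGS: Dict[str, Dict[str, int]] = {
    "format_A": {"skills": 2, "budget": 4, "infrastructure": 5, "accessibility": 5, "safety": 5},
    "format_B": {"skills": 5, "budget": 2, "infrastructure": 2, "accessibility": 2, "safety": 3},
}


def weighted_scores(ranks: Dict[str, int], ratings: Dict[str, Dict[str, int]]) -> Dict[str, int]:
    """Sum rank x rating over all criteria for each lab format (hypothetical helper)."""
    totals: Dict[str, int] = {}
    for fmt, fmt_ratings in ratings.items():
        # Refuse to score a format that has not been rated on every criterion.
        missing = set(ranks) - set(fmt_ratings)
        if missing:
            raise ValueError(f"{fmt} has no rating for: {sorted(missing)}")
        totals[fmt] = sum(ranks[c] * fmt_ratings[c] for c in ranks)
    return totals


if __name__ == "__main__":
    scores = weighted_scores(RANKS, RATINGS)
    # Print formats from highest to lowest weighted score.
    for fmt, score in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
        print(fmt, score)
```

With these made-up inputs, the script prints `format_A 80` followed by `format_B 54`. Raising an error on a missing rating, rather than silently treating it as zero, keeps an incomplete column from quietly dragging a format's total down.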

Laboratory purpose: What’s the take-away?

Lab courses inherently teach scientific concepts, and they can also teach skills. Lab course design typically follows one of two approaches, depending on whether the course is for nonmajors or is a majors/upper level course (Adlong et al., 2003; Boyer, 2003; Feig, 2010; Woods, Felder, Rugarcia, & Stice, 2000): The purpose of nonmajors courses is typically to support lecture content, whereas the purpose of majors/upper level courses is typically to develop practical instrumentation and process skills.

In the redesign of the chemistry lab course at ERAU, the course goals were clearly defined to include instrumentation, process, and safety skills. A common argument in support of traditional labs is their hands-on nature: tangible results with sensory feedback. However, this can be achieved through lab kits and, to a certain degree, through virtual reality. Simulation platforms often simplify techniques and processes and may not be an acceptable substitute for hands-on lab experiences. It is important to note that touch sensory feedback is not always a critical component of learning through science experimentation, although the research is not yet clear on the frameworks in which it is a critical component (Zacharia, 2015).

Budget: More bang for buck (but not literal explosions)

Traditional lab courses have high operating and maintenance (O&M) costs, exceeding $20,000 annually. Although O&M costs vary significantly by institution, as does the use of lab fees, many of these costs are not passed on to students. Labs must pay for consumables such as chemicals and materials (e.g., pipette tips, disposable gloves, weighing dishes). Overhead costs also include salaries for faculty and staff, the cost of running energy-intensive equipment (e.g., a furnace or fume hood) or water-intensive equipment (e.g., a distillation unit), and the recurring cost of hazardous waste disposal. In some cases, a portion of these costs can be recouped through indirect costs covered by research grants.

Simulations, lab kits, and virtual reality are affordable options for nontraditional lab experiences: some simulation platforms, such as PhET, host free experiences, and virtual reality or lab kits range from $75 to $200 per student. Administrators should consider the institution's cost-sharing structure to evaluate what impact a specific option will have on the direct cost to students. At ERAU, the administration deemed the cost of all three nontraditional formats acceptable.
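To set the quoted figures side by side, the sketch below computes a rough break-even enrollment: the number of students per year at which total spending on kits or virtual reality, at a flat per-student price, would reach the traditional lab's annual O&M cost. This is a deliberate simplification assumed for illustration. It ignores who actually pays (the cost-sharing structure mentioned above), staffing, facility construction, and lab fees. Because $20,000 is quoted as a floor, the results are lower bounds on the break-even enrollment. The function name is hypothetical.

```python
import math


def break_even_enrollment(annual_om_cost: float, cost_per_student: float) -> int:
    """Smallest annual enrollment at which per-student spending reaches annual_om_cost.

    Hypothetical helper: assumes a flat per-student price and no other costs.
    """
    if cost_per_student <= 0:
        raise ValueError("cost_per_student must be positive")
    return math.ceil(annual_om_cost / cost_per_student)


if __name__ == "__main__":
    TRADITIONAL_OM_FLOOR = 20_000  # "exceeding $20,000 annually" (a lower bound)
    for price in (75, 200):  # quoted per-student range for VR or lab kits
        n = break_even_enrollment(TRADITIONAL_OM_FLOOR, price)
        print(f"${price}/student: break-even at {n} students per year")
```

At the quoted prices this gives 267 students per year at $75 and 100 students per year at $200. Below those enrollments, total kit or virtual reality spending would stay under the $20,000 O&M floor.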

Infrastructure capacity: Fit for growth

Physical laboratory spaces have practical limitations: fire codes and the associated room occupancy limits, the construction or renovation of laboratory space, and upfitting or refitting existing space. Reflecting on the laboratory purpose (teaching skills vs. reinforcing content), a new laboratory facility built to accommodate program growth may not pass an administrative cost–benefit analysis if the lab course does not support a closely related major.

This consideration was rather straightforward at ERAU. Traditional laboratory space is not practical given the access requirements of distance education students, and nontraditional lab formats scale easily with program growth as more STEM (science, technology, engineering, and mathematics) undergraduate and graduate degrees are launched in support of the university's strategic growth plan.

Accessibility: A strong student value proposition

Because students enrolling in the distance education lab course are distributed around the globe, accessibility was ranked as the most important criterion at ERAU, which serves a high proportion of nontraditional students. Particularly for students with career and family responsibilities or military deployments, having multiple access opportunities can be a significant factor in choosing the modality and platform for a laboratory experience. Students identify access as a significant benefit (Turner & Parisi, 2008).

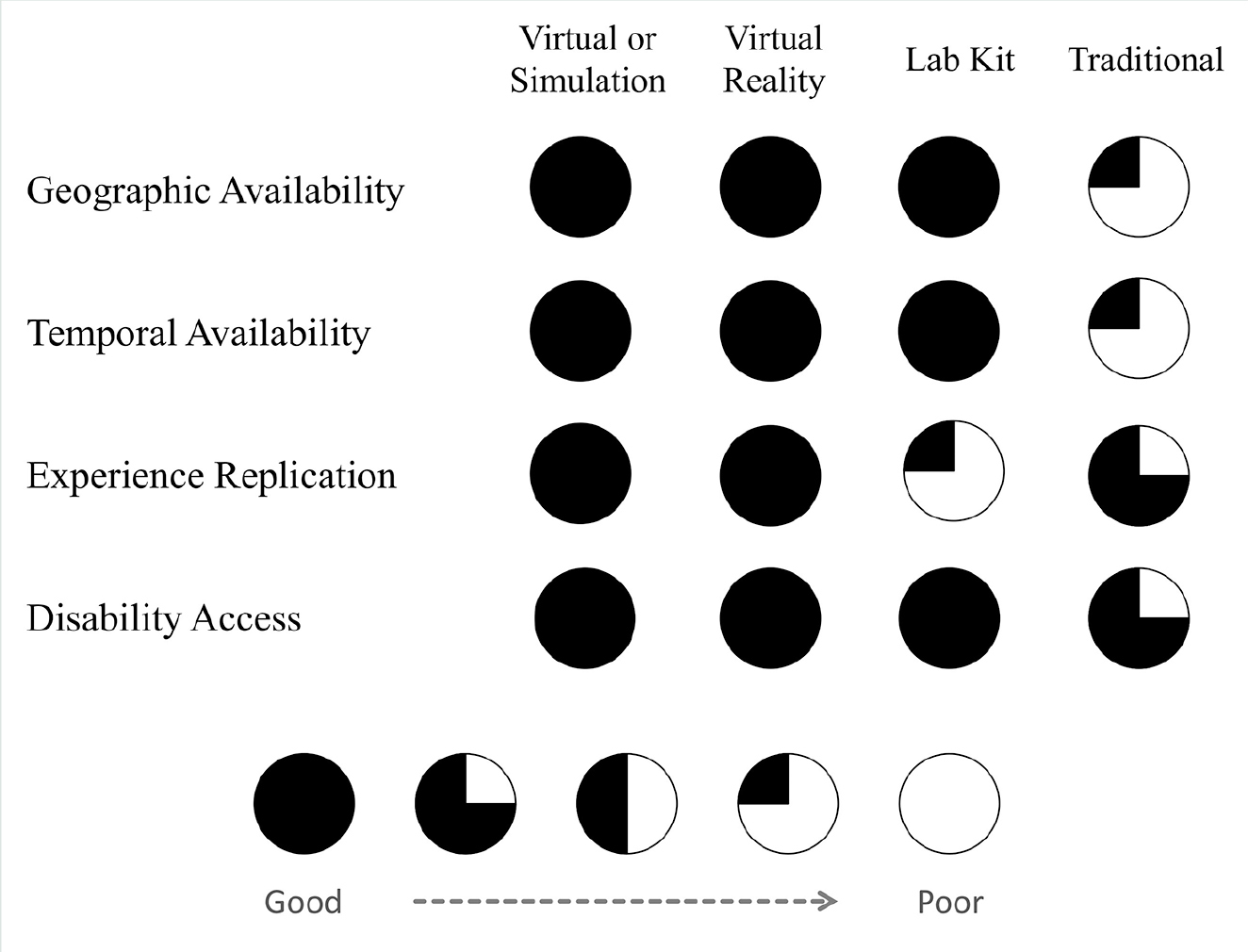

Access considerations go beyond simple geographic availability to include temporal availability, opportunities to replicate experiments, and access for those with disabilities. The subjective ranking of accessibility for each lab format is visually represented in Figure 1. Traditional laboratory spaces are available only during the operating hours of the lab and building, whereas access to laboratory simulations, virtual reality labs, and laboratory kits is not restricted by time. Simulation and virtual reality platforms often allow students multiple opportunities to explore and replicate their work, and traditional laboratory spaces can offer this as well. The opportunity for replication is often limited with laboratory kits because of their microscale nature. For students with disabilities, all traditional and nontraditional platforms have methods to improve accessibility. Nontraditional formats sometimes offer increased access to those with physical or psychological disabilities that prevent them from attending traditional laboratories.

Safety: Preventing preventable accidents

Students and instructors working with real chemicals and equipment face inherent chemical and physical risks. Accidents in educational laboratories occur periodically, even during demonstrations or simple teaching exercises (Balingit, 2015; Hollander, 2011). Because of shipping and waste disposal concerns, laboratory kits use lower-hazard chemicals and procedures than traditional laboratory activities. However, kits still pose inherent safety risks, which increase further when experiments are performed outside a controlled laboratory space and without supervision by trained personnel.

The cost of an accident can go beyond physical damage to the facility or medical bills. Accidents can result in lawsuits from injured parties as well as fines from regulatory agencies like OSHA or the EPA (Ryan, 2001). Online simulations and virtual reality, where there are no physical repercussions for experiment failure or human error, are the safest options.

At ERAU, a comprehensive risk assessment was performed and presented to the internal safety review board. The nature of the chemicals in the kit posed minimal risks that were further reduced through comprehensive safety training built into the course design. This training not only minimized risks due to the unsupervised nature of the kit-based course, but also provided key training for students to apply in future laboratory experiences.

Online simulations may cover safety superficially or contain inaccuracies that leave students unprepared for later laboratory coursework. For example, LateNiteLabs does not address chemical spills, and wastes are co-mingled, labware and all, in a trash bin labeled with a biohazard marker. For obvious reasons, this does not adequately prepare students for future hands-on laboratory work. One interesting benefit of online simulations, though, is that they are ideal for laboratory activities that are simply too dangerous or expensive to carry out in a hands-on setting, such as thermite reactions and the rainbow flame demonstration.

No right answer, but perhaps a better answer

The ERAU case study ranked the lab-format criteria on the basis of university priorities and then rated each lab format for alignment with each criterion to calculate a weighted score for each format. For example, accessibility was ranked as a 5. With a rating of 5 for the online modality on this criterion, the weighted accessibility score for the online lab was 25 points (5 × 5). Using these subjective criterion rankings and modality ratings, the sum of the weighted scores across all criteria for the online modality was 59. Both virtual reality and hands-on lab kits marginally exceeded this score, whereas the traditional modality trailed significantly.
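The arithmetic in this example can be written as a weighted sum. The notation is introduced here for illustration and is not the authors': $w_c$ is the importance rank of criterion $c$, $r_{c,m}$ is the rating of modality $m$ on that criterion, and $S_m$ is the modality's total. Only the accessibility term and the online total are stated in the text, so the last line simply records what the other four criteria must contribute together.

```latex
\begin{aligned}
S_m &= \sum_{c=1}^{5} w_c \, r_{c,m} \\
w_{\text{access}} \, r_{\text{access},\,\text{online}} &= 5 \times 5 = 25 \\
\sum_{c \neq \text{access}} w_c \, r_{c,\,\text{online}} &= S_{\text{online}} - 25 = 59 - 25 = 34
\end{aligned}
```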

According to the decision matrix, virtual reality and lab kits were the ideal choices for ERAU. Ultimately, the decision was made to use lab kits provided by eScience Labs. Because this company is expanding into virtual reality, and virtual reality fits our priorities well, it may be a viable option for ERAU to explore in the future.

In this decision matrix, the criterion rankings were applied as linear weights. However, we recognize that institutions may prefer nonlinear weighting, and the matrix could be adjusted to accommodate such a relationship. The purpose of the decision matrix presented here is to concisely present critical criteria to consider and to share a single case study of the decision-making process at one institution. Applying best practices in a sandbox environment will allow institutions to evaluate new approaches and optimize their modality and platform decision process in delivering a high-quality, engaging, and safe science laboratory course.
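One way to make the weighting nonlinear is to pass each criterion's rank through a transform before multiplying it by the rating. The Python sketch below assumes a doubling transform, weight = 2^(rank - 1), so a rank-5 criterion counts 16 times as much as a rank-1 criterion instead of 5 times. The transform, the function name, and the example numbers are all hypothetical. The example is chosen to show that such a change can reverse which format comes out ahead.

```python
from typing import Callable, Dict


def weighted_scores_nonlinear(
    ranks: Dict[str, int],
    ratings: Dict[str, Dict[str, int]],
    transform: Callable[[int], float] = lambda rank: 2 ** (rank - 1),
) -> Dict[str, float]:
    """Sum transform(rank) x rating over criteria for each format (hypothetical helper).

    The default transform doubles the weight with each rank step; passing
    transform=lambda rank: rank recovers the linear matrix.
    """
    weights = {c: transform(r) for c, r in ranks.items()}
    return {
        fmt: sum(weights[c] * fmt_ratings[c] for c in weights)
        for fmt, fmt_ratings in ratings.items()
    }


if __name__ == "__main__":
    # Illustrative numbers only, not data from any institution.
    ranks = {"accessibility": 5, "skills": 3, "budget": 2}
    ratings = {
        "format_A": {"accessibility": 5, "skills": 1, "budget": 1},
        "format_B": {"accessibility": 3, "skills": 5, "budget": 5},
    }
    print("linear:   ", weighted_scores_nonlinear(ranks, ratings, lambda rank: rank))
    print("nonlinear:", weighted_scores_nonlinear(ranks, ratings))
```

With linear weights, format_B leads 40 to 30. Under the doubling transform, the heavily weighted accessibility criterion dominates and format_A leads 86 to 78. Any monotonic transform could be substituted; the doubling rule is just one easy-to-read choice.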