Research and Teaching

Construction Ahead: Evaluating Deployment Methods for Categorization Tasks as Precursors to Lecture

By Anne Marie A. Casper, Jacob M. Woodbury, William B. Davis, and Erika G. Offerdahl

The authors of this article briefly review the literature rationalizing sorting tasks as a preclass activity for fostering deep initial learning and transfer.

There is mounting evidence that active learning is more effective than lecture-only instruction both in terms of decreasing course failure rates and increasing student performance in undergraduate STEM (science, technology, engineering, and mathematics; e.g., Freeman et al., 2014; Prince, 2004). Although there is no single definition of what constitutes active learning, there is broad agreement that it includes student-centered instructional strategies that engage students in higher order, constructive tasks during class time (Bonwell & Eison, 1991; Freeman et al., 2014). Higher order tasks require students to leverage their content knowledge about the topic of interest to tackle analysis, synthesis, and/or evaluation activities (Anderson et al., 2001). In practice, this means students should be afforded opportunities to acquire content knowledge before class in preparation for higher order, constructive tasks in class where they will gain further fluency with the content (e.g., DeRuisseau, 2016; Gross, Pietri, Anderson, Moyano-Camihort, & Graham, 2015; Lieu, Wong, Asefirad, & Shaffer, 2017).

Not surprisingly, recent research has turned toward understanding the nature of various preclass activities in preparing students for active learning environments (e.g., DeRuisseau, 2016; Gross et al., 2015; Lieu et al., 2017). In this article, we explore a single type of preclass activity, sorting tasks, because they have been shown to promote both initial learning and subsequent transfer (Kurtz et al., 2014). In a sorting task, students categorize problems or scenarios into two or more groups with shared characteristics. A priori groups can be defined for the student (i.e., framed sorting task) or the student can independently define the groups (i.e., unframed sorting task). Sorting tasks are a type of constructive activity because the learner generates additional outputs (i.e., categorical groups) that extend beyond the information provided on the cards (Chi & Wylie, 2014). Constructive activities have been shown to be more effective at supporting deep initial content learning than active retrieval (e.g., reading quizzes) or passive (e.g., watching recorded lecture) activities (Chang, Sung, & Chen, 2002; Menekse, Stump, Krause, & Chi, 2013). Although there is strong theoretical and empirical support for sorting tasks as an effective activity to prepare students for future (i.e., in-class, active) learning, there is little practical guidance on how to implement them, particularly in support of student learning in large, introductory STEM courses. The overarching goal of this article is to provide insight into the practical implementation of sort tasks in large-lecture STEM courses by exploring the affordances and limitations of two sort task deployment options used in large-lecture introductory biology.

In the following narrative, we begin by leveraging existing literature to rationalize sorting tasks as a preclass activity for fostering deep initial learning and transfer. We then describe two potential sort task deployment options for large-lecture courses (course management system and online sorting task software) and share classroom data related to their implementation. Next, we summarize practical considerations for instructors interested in using sort tasks as preclass activities in large-lecture courses. We conclude with a brief discussion of the implications for instructors of large-lecture undergraduate STEM courses.

Constructive tasks support deep learning

Students often find it challenging to transfer material from familiar contexts to new situations (Chi & VanLehn, 2012; Kurtz, Boukrina, & Gentner, 2013). This is potentially problematic for active learning courses where students may be expected to acquire basic knowledge outside of class and then apply (transfer) it to a “new” context in class during higher order activities (Gross et al., 2015; Lieu et al., 2017). Chi and VanLehn (2012) argued that failure to transfer knowledge acquired in one context to a new one is likely due to a lack of deep initial learning; that is, students do not gain sufficient understanding in an initial context to apply the material to a new context. They further hypothesize that passive activities (e.g., reading textbooks, viewing a recorded lecture) are unlikely to result in deep initial learning because passive activities do not explicitly challenge students to attend to relationships between ideas or notice interactions between these relationships (Anderson et al., 2001; Chi & VanLehn, 2012; Chi & Wylie, 2014). In contrast, activities like sorting tasks, which require students to (a) recognize or infer meaningful relationships between relevant surface features of different scenarios, problems, or cases and then (b) notice interactions between these relationships by looking across the scenarios, are more likely to promote deep initial learning (Chi & VanLehn, 2012; Kurtz et al., 2013). This is because they require students to go beyond passive review of material and instead perceive the deep structure underlying the content (e.g., Bissonnette et al., 2017; Chi & VanLehn, 2012; Hoskinson, Maher, Bekkering, & Ebert-May, 2017; Kurtz et al., 2013; Kurtz & Honke, 2017). 
For example, consider a sorting task designed to elucidate the concept of structure and function (a structure’s physical and chemical characteristics influence its interactions with other structures and therefore its function), articulated as one of five overarching biological concepts for undergraduate biology (Brownell, Freeman, Wenderoth, & Crowe, 2014). Each card would exemplify the deep feature (structure and function) with a different scenario. In this example, the scenarios would contain relevant surface features such as specific cellular structures (e.g., protein, DNA, membrane) and chemical features of those structures (e.g., charge, hydrophobicity). Relationships between relevant surface features would be inferred or retrieved (e.g., oppositely charged molecules attract, DNA is found in the nucleus). Sorting helps students see the deep feature across multiple contexts, thereby increasing the likelihood of spontaneous retrieval of the deep feature or underlying principle (Gick & Holyoak, 1983).

There is some literature to suggest that physically maneuvering cards during the sort task might facilitate learning by supporting the development of mental schema and reducing cognitive load. Theories of embodied cognition propose that our sensorimotor experiences influence our cognition and support construction of more stable mental models (Pouw, van Gog, & Paas, 2014). Further, the physical maneuvering of objects to represent ideas is hypothesized to decrease cognitive load by providing external representations of the material, which reduces the number of things a learner must keep track of and provides external supports that increase the stability of mental models (Pouw et al., 2014).

Sorting tasks in a large-lecture biology course

The classroom context influences instructors’ decisions about teaching (Gess-Newsome, Southerland, Johnston, & Woodbury, 2003). Factors such as the physical organization of the instructional environment, class size, and access to technology come into play, particularly in terms of how they might facilitate or inhibit the achievement of desired learning objectives. The high enrollments of introductory undergraduate biology courses at our institution present logistical challenges in terms of administering and managing student responses to preclass activities. Our learning management system (LMS) seemed an obvious solution for implementing a preclass sorting task, particularly because it is routinely used to administer a preclass reading quiz in introductory biology. But given the potential influence of maneuvering cards for reducing cognitive load and developing mental schema, we looked for other platforms that would allow students to physically move cards while sorting. Online platforms (e.g., Proven By Users [PBU], OptimalSort, usabilitiTEST) have been developed within the field of information science as tools to determine the most efficient information architecture structures (Jacob & Loerhlein, 2009). These platforms allow users to sort cards by “dragging and dropping” them into groups, making them an attractive option for administering a preclass sorting activity. In the remainder of this section, we describe how we utilized an LMS (Blackboard) and an online sorting task platform (PBU, 2017) to deploy a preclass sorting activity. In the subsequent section, we discuss our experiences with the two deployment options as well as the affordances and limitations of each from the perspective of an instructor.

Course context

The course in which sorting tasks were implemented was a large (N = 439) undergraduate introductory biology course at Washington State University, a public land-grant university in the western United States. It is a conjoined lecture/laboratory course that convenes three times weekly for 50-minute lectures and once weekly for a 170-minute lab. Enrolled students represent diverse majors (>55 on average) spanning the life and physical sciences, political science, natural resources, humanities, and engineering. In the semester where sort tasks were implemented, 25% of the students were freshmen, 44% were sophomores, 22% were juniors, 8% were seniors, and 0.4% were postbachelors or in a nondegree granting program.

The course is generally taught with student-centered, interactive approaches. In a typical class session, students are engaged with clicker questions that require (a) application of material from previous class sessions and/or prelecture readings or (b) analysis of biological data and/or models. Daily preclass reading quizzes are assigned to reinforce active retrieval of key points from the prelecture reading. We designed a sorting task activity to replace a prelecture reading quiz and administered it during a unit on protein trafficking.

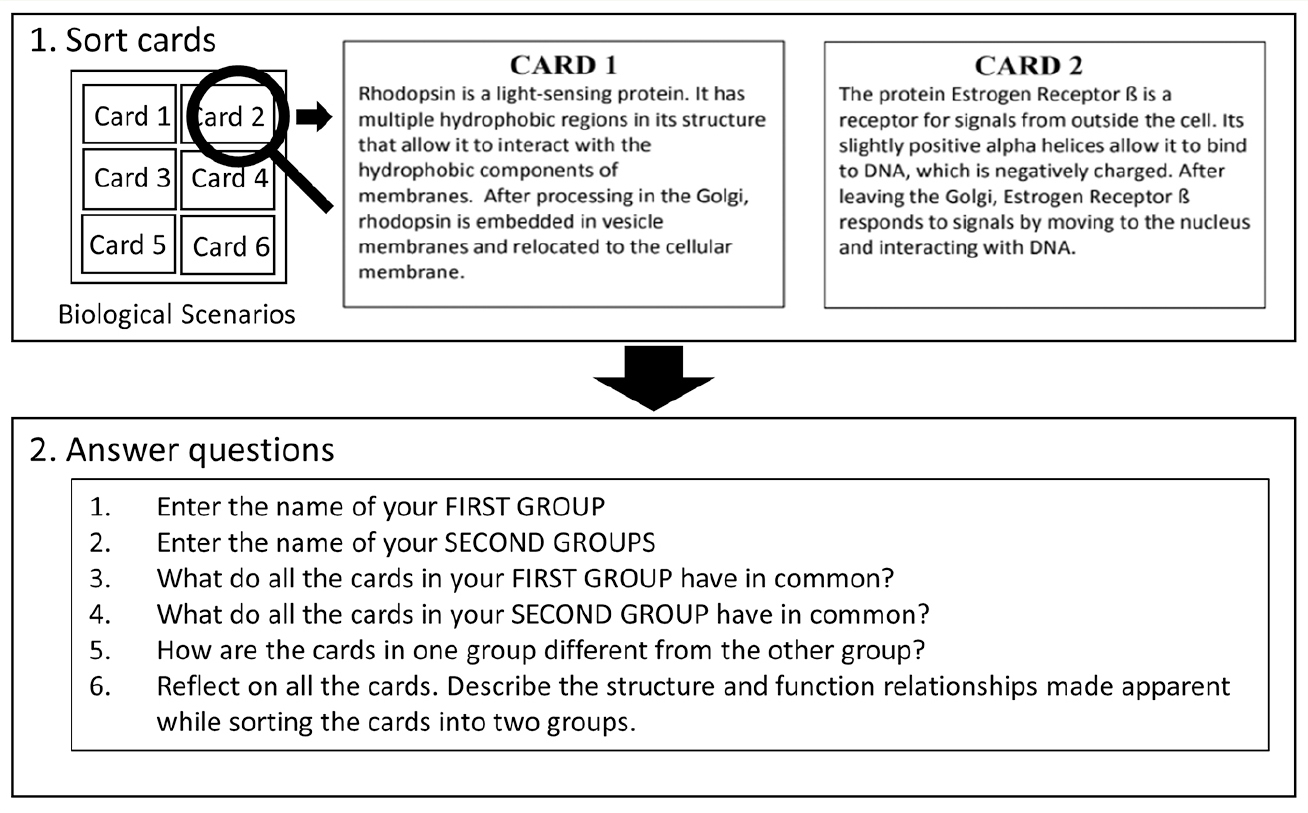

We randomly assigned students to complete the sorting task via one of two platforms: Blackboard (n = 221) or PBU (n = 218). Of these students, 71% (n = 310) completed the assignment, which is comparable to the usual completion rates for the daily preclass reading quizzes (70%–86%). As usual, students were given a reading assignment as well as annotated lecture notes before class. Though the sorting task was assigned individually in the same way as a prelecture reading quiz, there was no way to prevent students from meeting and completing the task together. In both platforms, the sort tasks and instructions were the same: Students completed identical sorting tasks and then explained their rationale (Figure 1; see also Appendix, available at ). We included questions to encourage reflection on the sorting process and reduce the number of students who “click through” the task with minimal cognitive engagement. As described next, the only differences between the two platforms were the way students received and manipulated the cards, how they submitted information about the sorts, and the requirement that students on PBU had to manually enter their name and student ID number.

Because students completed the task outside of class, we do not know exactly how much time students spent on task. The PBU platform automatically tracked the time elapsed while the task was open, but Blackboard did not. Therefore, we know only that, of the students who used PBU, 36% completed the task in less than 5 minutes, 68% in less than 10 minutes, and 86% in less than 20 minutes. The remaining students’ times ranged up to 15 hours. We cannot know how much of the elapsed time was actually spent on task, but most students completed the task in under 20 minutes.

Implementation via LMS

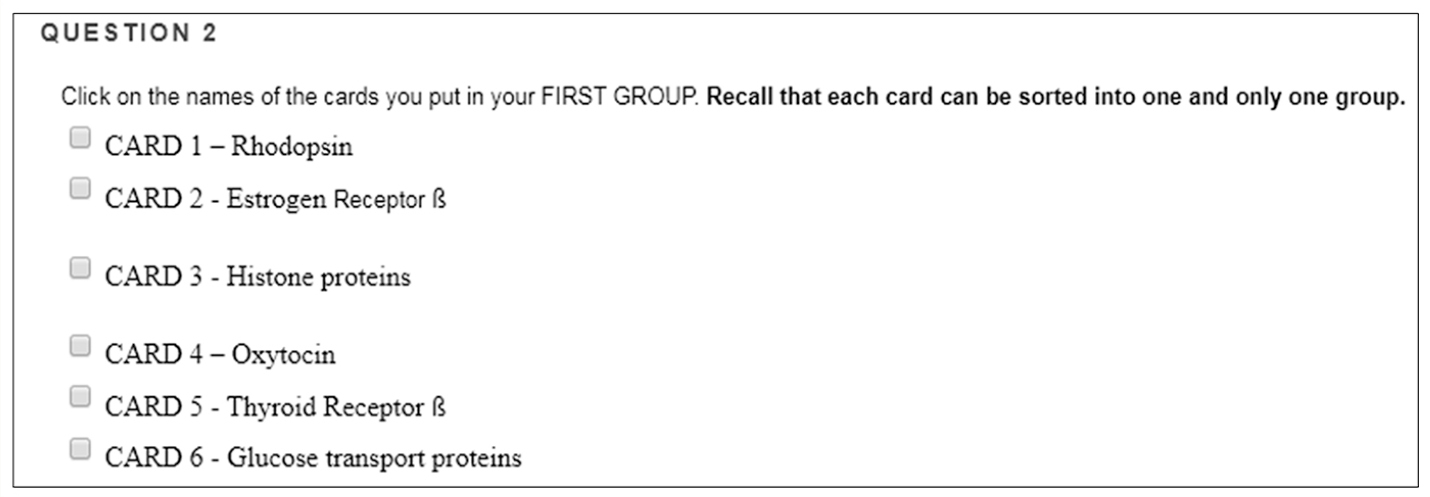

The LMS at our institution (Blackboard, Bb) does not have a tool for sorting items into categories. As a result, an electronic document (PDF) of the cards was posted on Bb with instructions to print the cards out, sort them into two groups, and then report the names of their groups and the cards that were assigned to each group. Students reported this information by completing short-answer (to report each card group name) and multiple-choice questions (Figure 2). Following the sort, students also answered the reflection questions to explain their rationale.

Implementation via online sorting task platform

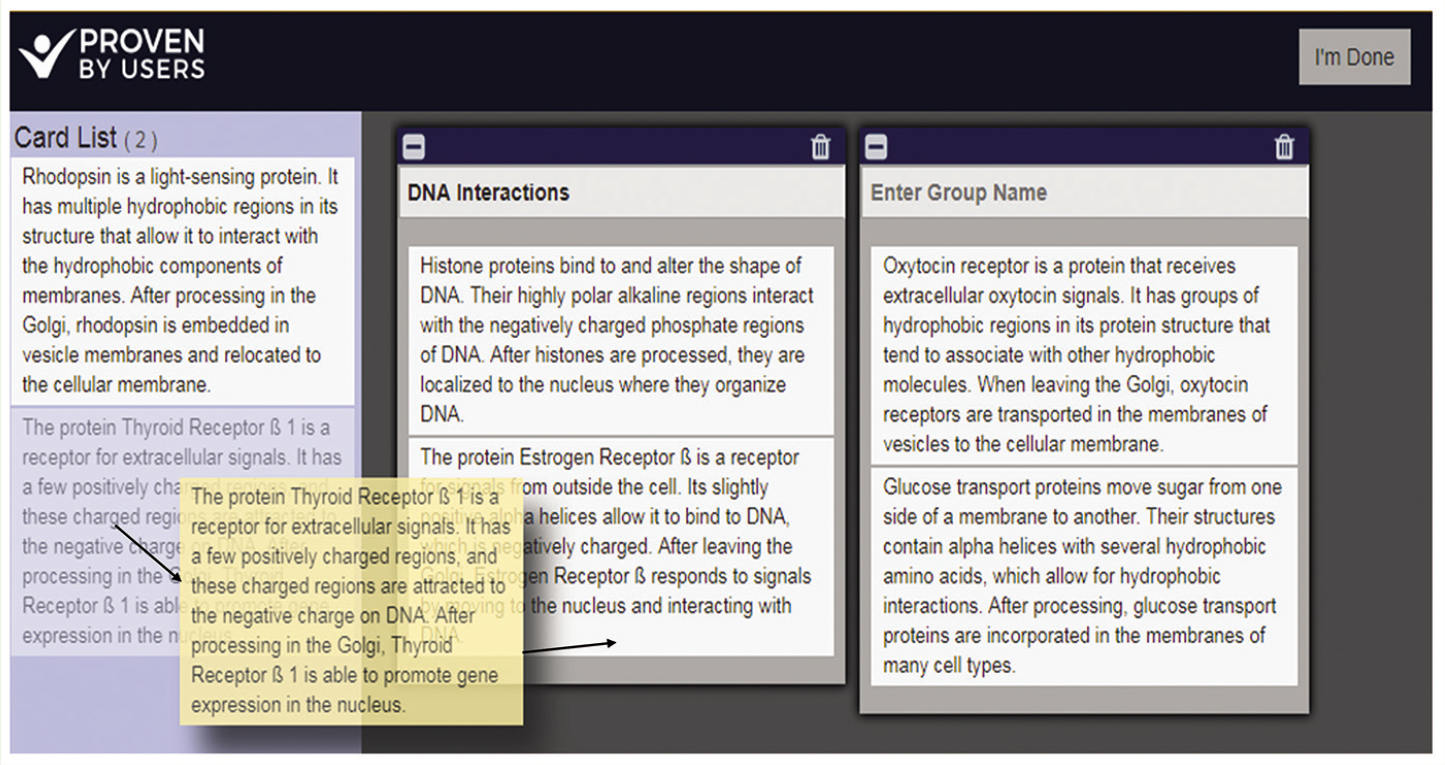

PBU is specialized web-based card sorting software that allows users to sort virtual images of cards by “dragging and dropping” them into groups. We used PBU to administer the same sorting task as on Bb. On the PBU platform, all of the cards were displayed simultaneously on the left side of the screen (Figure 3). Students then dragged and dropped each card to the center of the workspace to create groups and typed text in the top of the group box to name the group. Students could rearrange the cards and/or rename their groups repeatedly. Once satisfied with the task, students clicked a button to submit. After completing the sort, students answered the same reflection questions as students on Bb.

Practical considerations for sort task implementation

We implemented two deployment options for administering sorting tasks as preclass activities in large-lecture introductory biology. In this section we discuss our experiences including the affordances and limitations of each option (LMS and specialized sorting task software). In our discussion we attend to a number of factors that would likely be of concern to other instructors, including student learning, faculty time, convenience, and money.

When developing the task, we were concerned about the fidelity with which the sort task was to be completed. In Bb, there is no way to ensure that students actually maneuver cards to complete the task. In contrast, PBU requires moving cards within the user interface. Embodied cognition theory suggests that maneuvering the cards may be an important part of the learning process in sorting tasks. We worried that the students assigned to the Bb group, instead of printing the cards and sorting them, would just read the card information and as a result perform worse on the task.

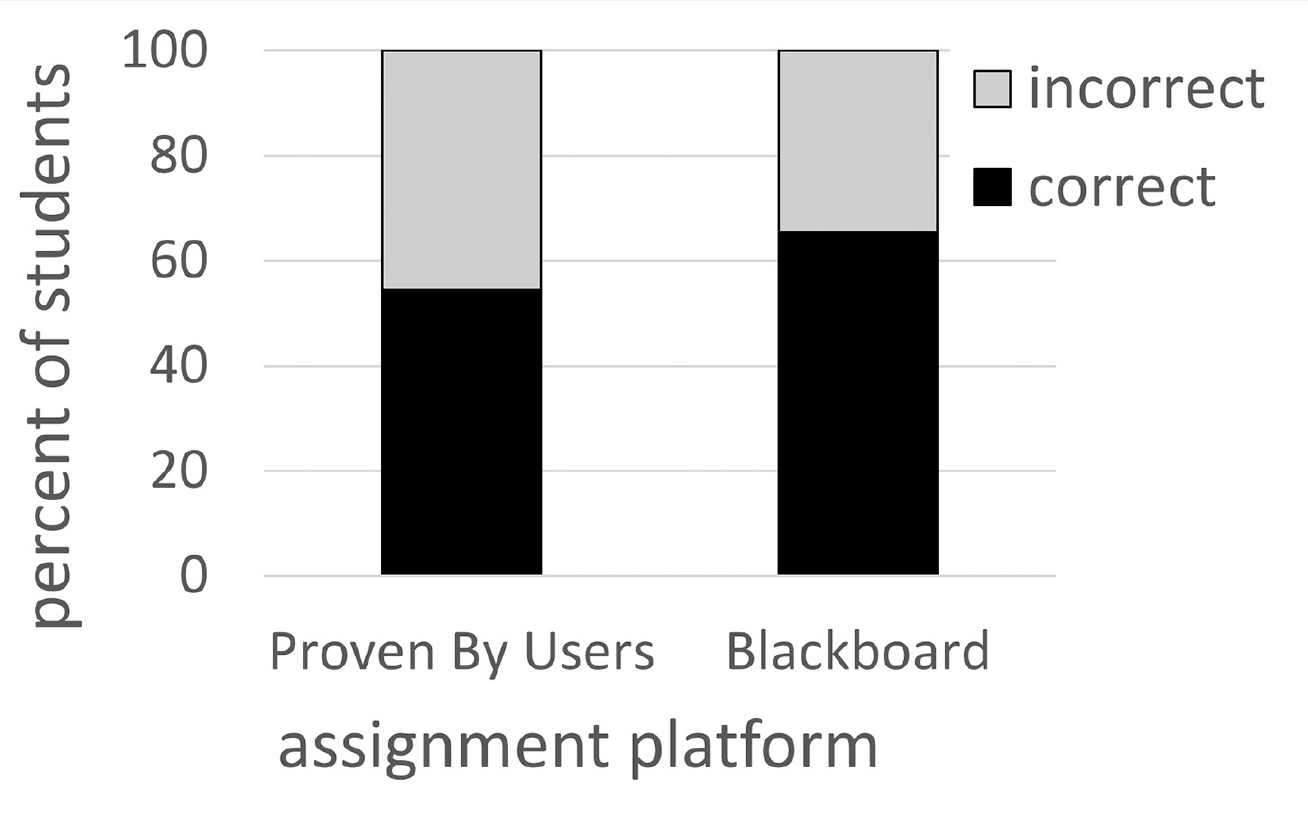

To address this concern, we examined the percentage of students who arrived at a correct card sort. Sixty percent of all students (174 of the 290 who both followed directions and completed the task) sorted the cards correctly. Twenty of the students who submitted a response for the assignment failed to follow directions (i.e., sorted all cards into a single group) or did not fully complete the assignment and were therefore omitted from our analysis. There was no significant difference between sorting platforms in the percentage of students arriving at a correct sort (Figure 4; Pearson’s chi-squared with Yates’ continuity correction: χ2 = 3.2328, df = 1, p = .0722; RStudio Team, 2005), nor in the percentage of students who completed the assignment between the two platforms (Pearson’s chi-squared with Yates’ continuity correction: χ2 = 0.00005, df = 1, p = .9942). Furthermore, there did not appear to be a qualitative difference in students’ responses to the reflection questions between platforms. From a practitioner perspective, this was sufficient evidence for us that students are engaging in the sorting task similarly enough across platforms to allow for considerations of practicality, convenience, and cost to drive final decisions about which platform to adopt.
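The reported test can be sketched in a few lines. The following is a minimal illustration, not the authors' analysis script (the article reports using R): it implements the textbook Yates-corrected chi-squared statistic for a 2 × 2 table and recovers p-values from the statistics reported above. The function names are our own, and the counts passed to `yates_chi2` are hypothetical placeholders because the per-platform counts are not given in the text.

```python
import math

def yates_chi2(a, b, c, d):
    """Yates-corrected chi-squared statistic for the 2x2 table [[a, b], [c, d]]."""
    n = a + b + c + d
    # Continuity correction: shrink |ad - bc| by n/2, but never below zero.
    diff = max(0.0, abs(a * d - b * c) - n / 2)
    return n * diff ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))

def p_value_df1(chi2):
    """Upper-tail p-value of a chi-squared statistic with 1 degree of freedom."""
    return math.erfc(math.sqrt(chi2 / 2))

# Recompute p from the statistics reported in the text.
p_correct_sort = p_value_df1(3.2328)   # correct sort by platform
p_completion = p_value_df1(0.00005)    # completion by platform
print(round(p_correct_sort, 4), round(p_completion, 4))

# HYPOTHETICAL counts (not the study's data), only to show the call:
# rows = platform, columns = correct / incorrect sort.
example_chi2 = yates_chi2(10, 20, 30, 40)
print(round(example_chi2, 4), round(p_value_df1(example_chi2), 4))
```

The recomputed p-values agree with the reported values (.0722 and .9942) to within the rounding of the reported statistics.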

Affordances and limitations of a common LMS

Course LMSs are an attractive option for instructors, particularly if they already use an LMS to support their teaching efforts. But in instances when grading the sorting task for correctness (instead of completeness) is desired, the potentially unpredictable sorting patterns (i.e., a sort task with six cards could result in two or three groups with two to four cards each) of a completely unframed task make it difficult to use the available tools to collect student responses in a way that would be easily gradable. We overcame this limitation by providing some minimal framing in the task instructions; we prescribed the number of categories students should sort the cards into but did not otherwise indicate how the cards should be sorted. In this manner, the constructive nature of the task was preserved; students created categories that were not provided in the assignment. Another advantage of using a course LMS is the gradebook integration; students’ scores are updated in the course gradebook, decreasing the time and logistics of providing information about individual student performance.
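To make the grading difficulty concrete, it can help to count how many distinct responses an unframed task admits. The sketch below is our own illustration, with hypothetical card labels; it enumerates every way to split six cards into unlabeled groups and then keeps only the sorts mentioned above (two or three groups with two to four cards each).

```python
def set_partitions(items):
    """Yield every way to split `items` into non-empty, unlabeled groups."""
    if not items:
        yield []
        return
    first, rest = items[0], items[1:]
    for partition in set_partitions(rest):
        # Add `first` to each existing group in turn...
        for i, group in enumerate(partition):
            yield partition[:i] + [[first] + group] + partition[i + 1:]
        # ...or start a new group containing only `first`.
        yield [[first]] + partition

cards = ["A", "B", "C", "D", "E", "F"]  # six hypothetical card labels

all_sorts = list(set_partitions(cards))
plausible_sorts = [
    p for p in all_sorts
    if len(p) in (2, 3) and all(2 <= len(g) <= 4 for g in p)
]
print(len(all_sorts), len(plausible_sorts))
```

Under these assumptions there are 203 possible sorts in total, 40 of which fit the two-or-three-group pattern, and this count ignores the free-text group names students supply. Fixing the number of groups narrows the response space enough to collect answers with standard question types.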

There are a few drawbacks to using a course LMS such as Bb. First, there is still no way to ensure that the students actually go through a sorting process. In the case of a framed sorting task, if students have not done the sort and only answer multiple-choice questions, then the nature of the task has changed from a truly constructive task, where students compare across cards, to an active task, where students evaluate each option in isolation and determine its appropriateness. Second, in an unframed task the number of groups into which the cards should be sorted is not specified. It is not possible to create an open-ended question that the LMS can autograde for correctness; therefore, completely unframed sorting tasks that do not specify a set number of groups may be best suited for instructors who want to use the sorting task solely to elicit student understanding and/or misconceptions, or for situations where grading for correctness is not a concern.

Affordances and limitations of specialized software

Although specialized sorting task platforms vary in much the same ways as LMSs (i.e., interface, specialized tools), they are similar in that they require “dragging and dropping” cards into groups, thereby ensuring that the card manipulation component of the task is maintained; students cannot simply write down or type in an answer. Many also have a backend database that collects information about the diversity of ways that students sorted, which helps instructors identify patterns in student reasoning. This may be particularly useful, because the way students sort cards in a sort task reveals information about the way they structure knowledge (Chi & VanLehn, 2012; Smith et al., 2013). Easily generated outputs usually include the most common card sort and a matrix showing how frequently each card is grouped with each other card. This type of information is potentially useful when an instructor uses a sorting task to diagnose student understanding in a “just-in-time-teaching” approach.
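For instructors whose platform does not produce these summaries, both can be computed from raw responses. The following is a minimal sketch under stated assumptions: the response format, card labels, and function names are hypothetical and do not reflect the export format or API of PBU or any other platform.

```python
from collections import Counter
from itertools import combinations

# HYPOTHETICAL responses: each student's sort is a list of groups of card labels.
student_sorts = [
    [["A", "B", "C"], ["D", "E", "F"]],
    [["C", "B", "A"], ["F", "D", "E"]],  # same sort as above, different order
    [["A", "B"], ["C", "D", "E", "F"]],
    [["A", "B", "C"], ["D", "E", "F"]],
]

def canonical(sort):
    """Order-independent form of a sort, so identical sorts compare equal."""
    return frozenset(frozenset(group) for group in sort)

def most_common_sort(sorts):
    """Return the most frequent sort and the number of students who produced it."""
    counts = Counter(canonical(s) for s in sorts)
    return counts.most_common(1)[0]

def cooccurrence(sorts):
    """Count, for each pair of cards, how many students grouped them together."""
    pair_counts = Counter()
    for sort in sorts:
        for group in sort:
            for pair in combinations(sorted(group), 2):
                pair_counts[pair] += 1
    return pair_counts

top_sort, top_count = most_common_sort(student_sorts)
pairs = cooccurrence(student_sorts)
print(top_count, pairs[("A", "B")], pairs[("C", "D")])
```

Pairs that are grouped together by most students but do not belong together in the intended sort are natural candidates for discussion in the next class session.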

Although these sorting task platforms solve the challenge of engaging the students in sorting, there are a number of potential limitations. First, there is a learning curve associated with the online platform for both instructors and students. Second, grading is usually not integrated with the course gradebook or management system. Also, not all platforms support embedded images within the virtual cards. Finally, the specialized software programs are generally proprietary in nature and potentially expensive (common platforms range from $25 to $200 per month, although some offer free trials), thereby limiting accessibility to the instructor and/or students.

Summary

We considered a number of factors when determining how to implement sorting tasks in large-lecture biology. There are certainly also procedural or logistical factors to consider, such as how time-consuming developing and implementing an activity will be and the resources available for activity implementation. Instructors might also be concerned with the relationship between student affect and performance—to what degree does student “buy-in” to an activity impact student performance? Are there particular aspects of the activity (i.e., method of deployment) that affect student buy-in, motivation, or performance? The work described here provides insights for instructors as they balance these trade-offs in ways that are best for their context.

The work described here was conducted within the context of a single undergraduate biology class. Factors such as type of course (e.g., chemistry vs. biology, majors vs. nonmajors), differences in instructors’ and students’ prior experience with active learning activities, and level of computer access and literacy may influence the enactment, and therefore effectiveness, of different sort task deployment options. Future research should further investigate how context influences student performance on different platforms to produce more generalizable recommendations about what works, in what contexts, and for whom (Brewer & Smith, 2011; Brownell et al., 2014; Dolan, 2015; Freeman et al., 2014).

Conclusion

Sort tasks have the potential to facilitate deep initial learning (Kurtz et al., 2013; Kurtz & Honke, 2017) and are therefore an attractive option for preparing students for engaging in higher order, active learning instruction. But the large enrollments of many introductory STEM courses pose a practical constraint for implementation of sorting tasks. Our work demonstrates the feasibility of implementing sorting tasks in large-lecture STEM courses. In doing so, we provide insight into the affordances and limitations of two deployment options, which may be useful to instructors interested in exploring the use of sorting tasks in their own classrooms. Although our goal was to rationalize the potential utility of sorting tasks for undergraduate STEM and to share practical options for their implementation, our work also lays the foundation for more systematic research comparing the effects of variations in sort task activities (framed vs. unframed) on students’ initial learning.

Research on preclass activities for active learning classrooms has often focused on passive or active retrieval activities; however, there is clear evidence that constructive activities are better at facilitating deep learning (DeRuisseau, 2016; Gross et al., 2015; Kurtz et al., 2013; Lieu et al., 2017). The work described here demonstrates the feasibility of sorting tasks, thereby laying the groundwork for future research comparing passive (e.g., viewing a lecture), active (e.g., reading quizzes), and constructive (e.g., sorting tasks) preclass activities in terms of students’ deep initial learning and preparation for in-class active learning activities.