Case Study

Creating a Video Case Study

By Annie Prud’homme-Généreux, J. Phil Gibson, and Melissa Csikari

This column provides original articles on innovations in case study teaching, assessment of the method, as well as case studies with teaching notes. This issue describes how to create a video case study.

“Cases are stories with a message. They are not simply narratives for entertainment. They are stories to educate” (Herreid, 1997, p. 92). By this definition, a case study is two things: a story and a strategy to drive learning. Although the story typically comes from a narrative purposefully written for the classroom, such as the case studies in the National Center for Case Study Teaching in Science collection, other types of stories could be used, including newspaper articles, news broadcasts, oral stories, songs and poems, and online videos.

Current students are often digital natives who find videos engaging and intuitive to use (Eick & King, 2012; Moghavvemi, Sulaiman, Ismawati Jaafar, & Kasem, 2018; Prensky, 2001). A recent survey (n = 2,587) conducted by the global market research firm Harris Poll found that 59% of Generation Z (ages 14–23 in 2018) and 55% of Millennials (ages 24–40) said that they prefer YouTube over books or printed materials to learn (Pearson, 2018).

Given that a large proportion of current students report enjoying learning via digital media, educators must embrace the many sources of science stories available online (Table 1). A recent paper in the journal Nature encouraged scientists to create videos to accompany the release of their papers to make their science accessible to the public (Smith, 2018). Videos that tell authentic stories of scientists and show them working in their research environment expose students to the diversity of researchers and environments involved in the science enterprise. That’s something that students can rarely glean from a textbook.

Table 1. Sources of narrative science videos.

The weakness of video as a pedagogical tool is that it usually presents a complete story. It may pique students’ interest in a topic, but it doesn’t give them the opportunity to pause and consider how they might have investigated a problem if they were in the shoes of the scientist. In other words, it doesn’t encourage them to practice the skills of thinking like a scientist.

However, this can be remedied by identifying strategic points along the narrative at which to pause and ask students carefully crafted questions that invite them to think scientifically. In this way, instructors can transform a video into a case study. Several studies have confirmed that asking students to answer questions while watching an educational video leads to higher cognitive gains than watching the video without questions or simply taking notes (Lawson, Bodle, Houlette, & Haubner, 2006; Lawson, Bodle, & McDonough, 2007). Indeed, a recent review of the literature found that strategies that reduce cognitive load when watching a video, such as using short clips and chunking information, and those that facilitate active student engagement with the content were more effective for learning (Brame, 2016). Thus, a video case study that segments a video using questions to cue student attention and encourage critical thinking would be an effective learning strategy.

Creating a case study in this manner is quicker and easier than writing a case study from scratch because it capitalizes on an instructor’s existing skills of fostering inquiry in students rather than asking them to engage in the novel skill of storytelling. Once an instructor becomes familiar with the format, he or she will be able to transform a short online video (less than 10 minutes) into an approximately 60-minute, student-centered activity.

In an article published in 2014, Pai advocated for the inclusion of videos in the classroom when doing case-based instruction. The article provided strategies for doing so, along with online sources and suggestions for how to select the best ones for the classroom. The present article picks up where Pai’s article left off, focusing on the creation of effective questions that supplement a video and, in so doing, describing how to create a video case study.

A video case example

An example of a video case study, the “bee video,” was developed using HHMI BioInteractive’s video Scientist at Work: Effect of Fungicides on Bumble Bee Colonies. The video case study is presented in Table 2. The objectives of this video case study are to develop students’ abilities to think like a scientist and practice experimental design while learning about course-related concepts (the effect of fungicides on some bee species).

Table 2. Example of a video case study that accompanies the video Scientist at Work: Effect of Fungicides on Bumble Bee Colonies, which is available on the HHMI BioInteractive website.

Articulating learning objective(s)

When developing questions to accompany a video, it is essential to keep the learning objective(s) at the forefront of the activity design. For example, the bee video (Table 2) could be used to teach about bee biology, ecology, microbes, experimental design and methodology, and/or how research can impact policy. Starting with the learning objective(s) in mind, the instructor can design a series of questions that push students to investigate that topic. This backward design approach ensures that students going through the video case study are led to the desired outcome (Wiggins & McTighe, 2005).

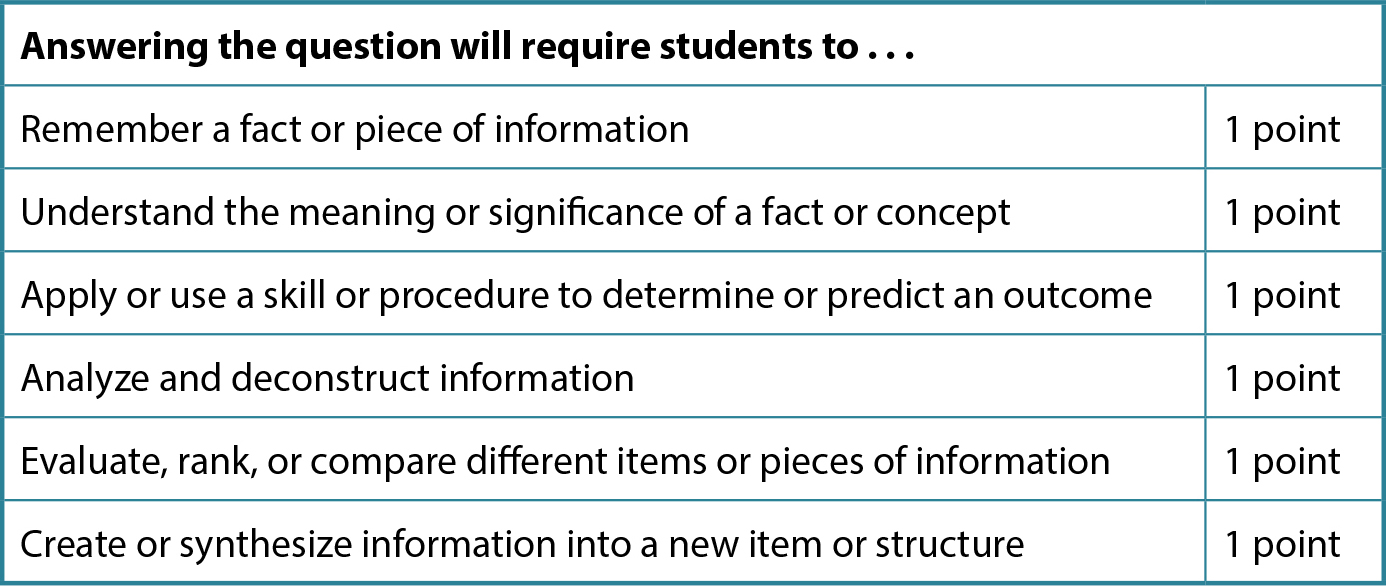

Bloom’s taxonomy should be considered in identifying and articulating the learning objective(s) of the case study (Krathwohl, 2002). As an initial step, the case developer must decide which one of Bloom’s four knowledge dimensions (Factual—knowing terms and facts; Procedural—knowing procedures, methods, and skills; Conceptual—knowing principles, theories, and models; or Metacognitive—knowing about one’s thinking) will be the goal of the case.

Next, one should determine which of Bloom’s cognitive process dimensions will be the focus of students engaged in the case study. As a reminder, Bloom’s cognitive process dimensions are: Remember—recognize and recall; Understand—interpret and explain; Apply—use and implement; Analyze—differentiate and dissect; Evaluate—judge and rank; and Create—produce and form. These cognitive dimensions build on one another so that to analyze a concept, a student would need to be able to remember some ideas, understand them, and be able to apply them as prerequisite steps.

Once these two intentions are articulated, the learning objective(s) can be written down in the form of “At the end of this video case study, students will be able to …” Formulating learning objectives in this way ensures that they are focused on observable student behaviors. All the questions of the video case should be designed to help students achieve the learning objective(s).

For example, if the learning objective of the bee video case study were “At the end of this video case study, students will be able to read a bar graph,” that learning objective would fall at the intersection of the procedural knowledge dimension (how to do something) and the apply cognitive process dimension (how and when to use a particular skill). To achieve this objective, the case study should include questions that engage the cognitive process levels below the one being developed, in addition to the level developed within the lesson. This may include questions to remember (retrieve from memory the parts of a bar graph), understand (articulate the utility of the different parts of the graph), and apply (practice reading bar graphs).

Identifying pause points

After identifying the learning objective(s) for the video case, the second task is to identify when to pause and integrate questions into the video. In the bee video case example shared in Table 2, students are asked the first two questions of the case before they view the video. At this point, students may have preexisting knowledge that they can bring to bear on the question, but they are not expected to know the answer. It has been documented that predicting answers is a powerful tool for learning. In fact, students who engage in pretesting typically perform 10% better at the end of the lesson than students who have not (Lang, 2016). What is surprising is that the questions can be different in the pretest and posttest. Students are not simply memorizing the answer to a previously studied question, but performing better with novel questions on the concept. Pretesting makes students learn more robustly.

Carpenter (2009) proposed that pretesting activates existing schemas in students’ brains associated with the topic and therefore makes them more accessible for later retrieval. But it is also possible that the act of predicting an outcome on the pretest increases motivation (students have a stake in the answer because they want to know whether they were correct), which is linked to better learning. Weinstein, Gilmore, Szpunar, and McDermott (2014) suggested that the questions serve as cues that focus students’ minds on what will be important. It’s also possible that pretesting serves as a “fluency vaccine” that makes students realize that they may not already know the material.

It’s unclear what mechanism causes this pretesting effect, but it is clear that asking students a few questions about key aspects of a topic ahead of teaching improves learning. It therefore seems worthwhile to include a couple of engagement questions before beginning the video.

Once students have interacted with the precase questions, begin the video. “When” to include a question in the video requires a bit more thought. The purpose of pausing the video and asking questions is to give students the time to think of what they would do in the shoes of the scientist. It’s an opportunity to think like a scientist. Therefore, whenever the video is about to reveal information about what the scientist did or thought, a learning opportunity is presented, and the video should be paused to ask students to predict the next step.

In the bee video case example, the video is paused before it reveals the experimental design that the scientist used, and students are given the opportunity to design the experiment. Novice students are encouraged to think conceptually and to focus on what they want to accomplish with their experiment rather than the specific methods used (e.g., in a biochemistry experiment they might say they want to separate the cell’s proteins rather than using the tool fast protein liquid chromatography [FPLC], which they may not know). Once students have the time to plan the experiment for themselves, the different proposals should be discussed among peers. The video should then be resumed to reveal what the scientist did. Often, novice students come up with the same experiment as the researcher, boosting confidence in their abilities to think scientifically. Differences in what students propose and what the researcher did can be used to discuss why the scientists may have chosen one strategy over another (e.g., a student’s proposal may be a better approach but would depend on access to an expensive tool that the researcher could not access). This is a rich opportunity to explore science as it is done in the real world and in a way that textbooks rarely allow.

In the bee video example, by selecting pause points that give students the ability to predict the next step, students practice the skills of hypothesizing, designing an experiment, predicting results, and analyzing those results—that is, thinking like a scientist, which is the learning objective of the case study.

We recommend a pause point for questions approximately every minute of video. In our experience, a 5- to 7-minute video containing six questions gives students the opportunity to explore the content in depth while maintaining focus on the task at hand. When students work collaboratively on each prompt, such a video case takes about 60 minutes of class time to implement.
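The timing guidance above can be checked with quick arithmetic. The following is a minimal sketch, not part of the authors' planning tool: the function name is hypothetical, and it assumes that all class time not spent watching the video is divided evenly among the questions.

```python
def minutes_per_question(class_minutes: float, video_minutes: float, n_questions: int) -> float:
    """Average time available per question for thinking and discussion.

    Assumption (for illustration only): class time not spent watching
    the video is split evenly across the questions.
    """
    if n_questions <= 0:
        raise ValueError("n_questions must be positive")
    return (class_minutes - video_minutes) / n_questions


# A 6-minute video with six questions in a 60-minute class session
print(minutes_per_question(60, 6, 6))  # 9.0
```

Under these assumptions, each prompt leaves roughly 9 minutes for individual thinking, peer discussion, and debriefing, which is consistent with questions that are worked on collaboratively rather than answered quickly.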

A video case study planning tool is provided in Table 3. Case designers can use this tool to indicate the learning objective(s) of the activity, the timing of each pause point, and the question to ask students at that pause point. The Bloom and risk score of each question will be discussed next.

Blooming the questions

As with identifying learning outcomes, Bloom’s taxonomy can be used to evaluate the suite of questions used in the case study. Crowe, Dirks, and Wenderoth (2008) developed a method of assessing the level of Bloom’s cognitive process dimension accessed by a question. The method evaluates which levels are needed to answer a question. For example, a question asking students to recall the definition of a pesticide would be rated “1” because it only requires students to remember, which is the first level of Bloom’s taxonomy. A question asking students to design the experiment in the video would be rated “5” because it requires students to engage in activities that touch on five of Bloom’s taxonomy levels: remember the steps of experimental design, understand how each one contributes to an experiment, apply the appropriate skills of experimental design, analyze how effectively the results will address the question and hypothesis, and evaluate how the results from the proposed experiment will build on the results of the preceding experiment. In other words, students must engage in five of the six levels of Bloom’s taxonomy to design an experiment. A combination of several low-rated questions and one or two higher rated questions can help students learn the basic content within the case study and provide the challenge to think deeply (Figure 1).
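The rating logic described above can be sketched in a few lines. This is an illustration only, not the procedure of Crowe, Dirks, and Wenderoth (2008): the names `Bloom` and `bloom_rating` are hypothetical, and the sketch assumes that a question's rating is simply the highest cognitive process level it requires, since the levels build on one another.

```python
from enum import IntEnum
from typing import Iterable


class Bloom(IntEnum):
    """Bloom's cognitive process dimensions, ordered from lowest to highest."""
    REMEMBER = 1
    UNDERSTAND = 2
    APPLY = 3
    ANALYZE = 4
    EVALUATE = 5
    CREATE = 6


def bloom_rating(levels_needed: Iterable[Bloom]) -> int:
    """Rate a question by the highest level needed to answer it (assumed rule)."""
    levels = list(levels_needed)
    if not levels:
        raise ValueError("a question must require at least one level")
    return int(max(levels))


# The two examples from the text
recall_definition = [Bloom.REMEMBER]
design_experiment = [Bloom.REMEMBER, Bloom.UNDERSTAND, Bloom.APPLY,
                     Bloom.ANALYZE, Bloom.EVALUATE]

print(bloom_rating(recall_definition))  # 1
print(bloom_rating(design_experiment))  # 5
```

Listing the ratings of all questions side by side makes it easy to see whether a case combines several low-rated questions with one or two higher rated ones.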

Scaffolding the risk

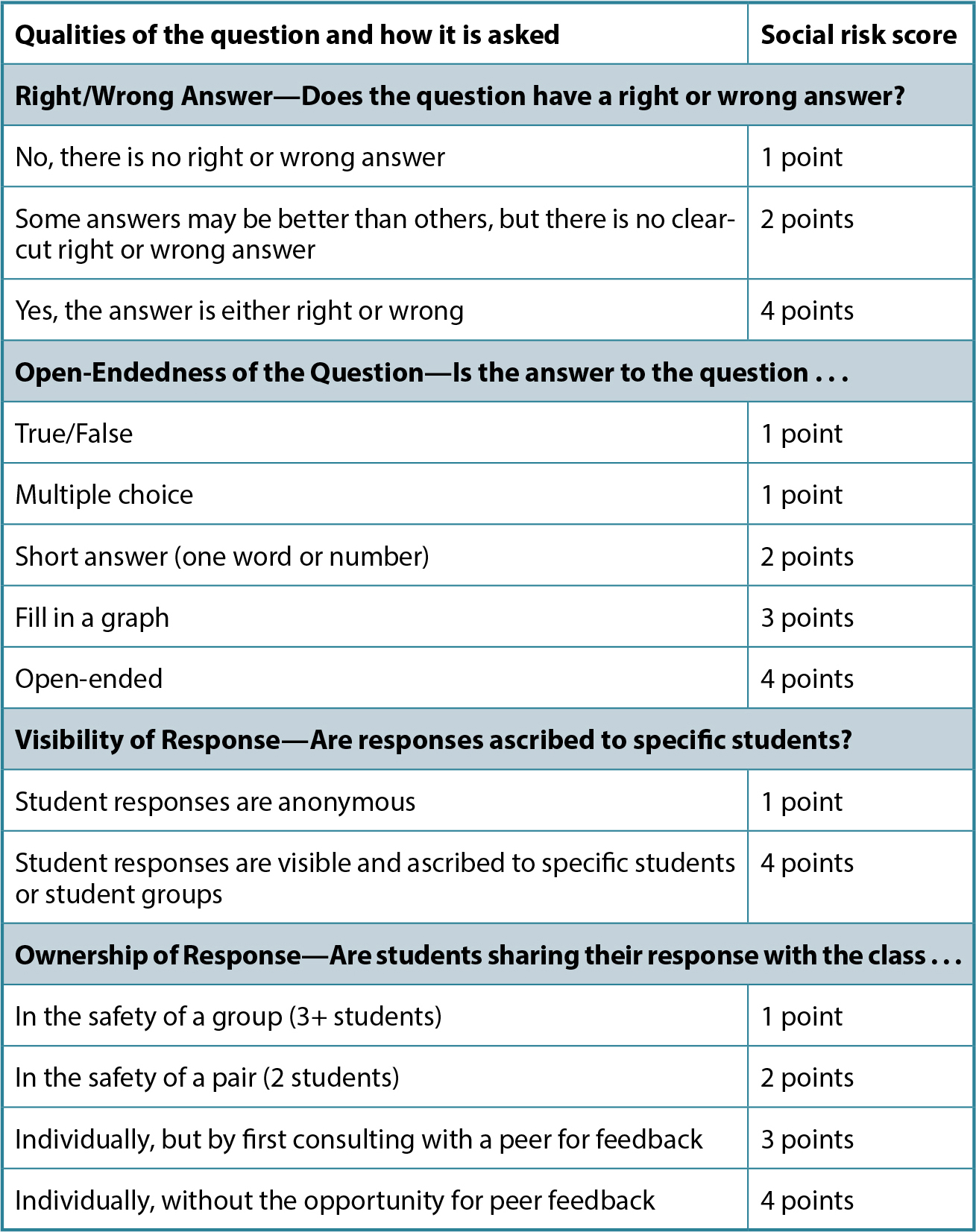

Classrooms that are supportive and respectful elicit greater student participation (Fassinger, 2000; Frisby & Martin, 2010; Hirschy & Wilson, 2002), leading to improved learning outcomes (Rocca, 2010). Creating these cooperative environments must be intentional. For this reason, in developing questions for a video case study, instructors should carefully consider the format of the questions and how students are expected to provide their response. Tanner (2013) encouraged instructors to consider not just what is being asked, but also how it is being asked and who is invited to the discussion. There are a variety of methods for collecting student responses, and each one will expose students to different amounts of social risk (e.g., risk of being wrong in front of peers). The recommendation is to start the video case with low-risk questions and to end the case with higher risk questions. The riskier questions tend to be ones that push students to higher levels of the cognitive processes (higher level of Bloom’s taxonomy), but to get there, students must warm up to the group and be comfortable enough to take the risks. This progression gives students the opportunity to develop confidence in their ability to tackle the case and to build trust and rapport with their peers and the instructor.

We propose that the risk to the student in answering a case question can be analyzed along four axes. The first axis is the possibility of being wrong. Some questions, particularly factual questions, have a right or wrong answer. Providing an answer to these questions places the student at high risk because they may be embarrassed in front of their peers. These should be avoided at the beginning of a video case. Rather, the first few questions of a case should serve to explore the background that students bring to the topic. Pooling past experiences or knowledge in this way can create a whole-class word cloud that makes every learner feel valued and that can be used to structure new information as the case proceeds.

The second axis along which the risk to the student can be analyzed is the openness of the question. Some questions are lower stakes, such as true/false and multiple-choice questions that provide a restricted choice of solutions (students select an answer among the given options). This is our preference for the first few questions of the video case. Once students have warmed up, the questions can become less guided. The goal is to build toward questions where students are provided little structure and must come up with their own response. This includes questions such as “Design an experiment” or “What are the ethical implications of this work?” Typically, the answers to these questions are more nuanced and the instructor can use different student answers to explore the reasoning and arguments that led each student to their conclusion.

The third axis of social risk stems from how visible a student’s answer is to peers. At the beginning of a case, it may be helpful to seek ways of collecting answers anonymously, for example, through the use of a clicker system. As students develop trust in their peers and instructors, their answers may become more visible to peers, for example, by sharing their predictions on a graph on a whiteboard in front of the class.

Finally, the fourth type of risk comes from the level of ownership that a student is asked to demonstrate in answering a question. Initially, students should be able to make their pronouncements within the safety of a small group or team. The group might discuss a multiple-choice question and hold up a colored index card to indicate the team’s unified answer, giving the group ownership over the answer rather than an individual student. If the answer is wrong, no individual student is singled out in front of the class. As the video case progresses, instructors may wish to make individual students accountable for their response, forcing each student to engage and take ownership of their thinking. This is a higher level of social risk and should only come after trust has been developed in the class. To scaffold it, students should first be given the opportunity to test their ideas on a peer and receive feedback (i.e., engage in think-pair-share) before sharing their answer with the class.

The questions in a video case study should be scaffolded such that the social risk to the student starts low and builds during the activity, ensuring maximal participation as it gives students the opportunity to warm up and build trust and rapport. Figure 2 proposes a scoring system that analyzes a question along the four axes and provides a risk score. Using this tool, instructors can rapidly assess the risk level of each question in their video and modify the type of question and how the responses are collected to scaffold the progression of risk during the video case study. The risk score of the questions should start low and increase during the activity. This tool should not be thought of as rigidly prescriptive, but rather as a quick way to assess risk progression. Instructors should gauge the level of trust in their class and determine how best to use this tool. It is far more important to scaffold the risk at the beginning of a course while the students don’t know one another than it is at the end of the semester when the class rapport is well developed.
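A risk progression check of this kind can be sketched as follows. The scoring values of Figure 2 are not reproduced here, so this sketch makes a loud assumption: each of the four axes contributes 1 point when the question sits on its riskier side, giving a score from 0 to 4. The class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class QuestionRisk:
    """Social risk of one question along the four axes described above.

    Hypothetical scoring: each axis adds 1 point when it is on its riskier side.
    """
    can_be_wrong: bool          # the question has a right or wrong answer
    open_ended: bool            # students must generate their own response
    visible_to_peers: bool      # the answer is not collected anonymously
    individual_ownership: bool  # an individual, not a team, owns the answer

    def score(self) -> int:
        return sum((self.can_be_wrong, self.open_ended,
                    self.visible_to_peers, self.individual_ownership))


def risk_drops(questions: Sequence[QuestionRisk]) -> List[int]:
    """Return 1-based positions of questions that are less risky than the one before."""
    scores = [q.score() for q in questions]
    return [i + 1 for i in range(1, len(scores)) if scores[i] < scores[i - 1]]


plan = [
    QuestionRisk(False, False, False, False),  # anonymous team poll on prior experience
    QuestionRisk(True, False, False, False),   # anonymous multiple-choice prediction
    QuestionRisk(True, True, True, True),      # individual shares an experimental design
]
print([q.score() for q in plan])  # [0, 1, 4]
print(risk_drops(plan))           # []
```

An empty list from `risk_drops` means the risk never decreases from one question to the next. As with the scoring tool itself, such a check is a quick aid rather than a rigid prescription.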

Video case studies as homework

Video cases have the flexibility to be assigned as homework for flipped learning or within a distance learning environment. Video case studies can be crafted using tools that pause the video and question the students at locations determined by the instructor. These tools solicit an answer to a question from the viewer and then record each student’s response for the instructor to access. EdPuzzle and PlayPosit are two examples of such tools.

Instructors can also encourage discussion of the video case study outside of class. Discussion boards embedded in a course management system, or tools such as FlipGrid, which allows students to post a video response, may facilitate the exchange of ideas among students.

Limitations of a video case study

Because the video used in a video case study is short (under 10 minutes), it is less detailed than a scientific article in presenting the methods, data, and nuances of their interpretation. Furthermore, by watching a video, students are not becoming accustomed to reading the scientific literature and are not familiarizing themselves with the way in which scientists write and present their work. However, a video case study can introduce students, particularly novices, to the concepts of an experiment and guide them in understanding those concepts, which prepares them to tackle a related scientific article. Thus, as a follow-up activity, instructors should consider assigning the original paper to provide fuller knowledge of the scientific method, other results of the study, and broader conclusions reached by the researcher.