feature

Show Your Students How to Be More Persuasive When They Write

Journal of College Science Teaching—July/August 2020 (Volume 49, Issue 6)

By David J. Slade and Susan K. Hess

After a grueling grading campaign, a chemist asked a writing and rhetoric specialist for help improving formal reports in the introductory organic chemistry lab. Together, we realized that the very best student reports employ many persuasive moves in the combined results and discussion section, whereas weaker papers omit such persuasive language. To make students aware of the need for persuasive language throughout their reports, we reworked the assignment prompt and developed a workshop lasting one lab period, in which we highlight persuasive moves by walking students through three key steps: oral argument; analysis of key rhetorical patterns (color-coded) that should be present in both a combined results/discussion section and an introduction section; and peer review for those key rhetorical patterns. Set in the context of discussions of the argumentative and rhetorical functions of each subsection of a lab report, this workshop helps illustrate the purpose behind many chemistry writing conventions: to convince a skeptical audience of the most plausible interpretation of a collection of data. After the workshop, students' work shows modest but visible improvement in their use of evidence in science writing, and students' understanding of their task shows appreciable (and appreciated) improvement.

How do good formal reports differ from weaker examples?

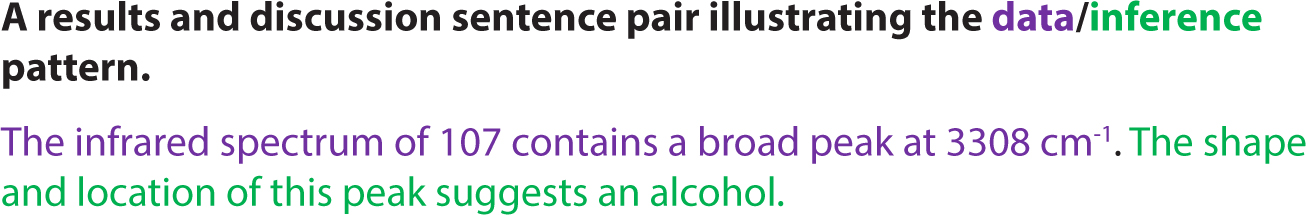

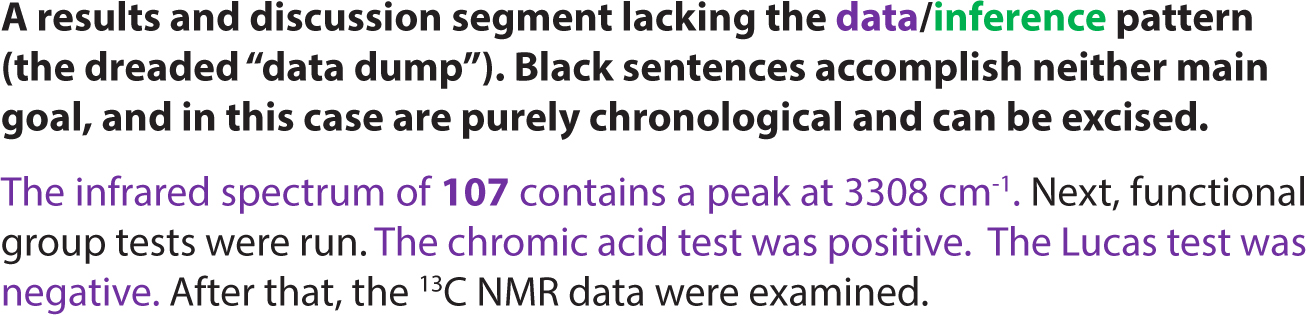

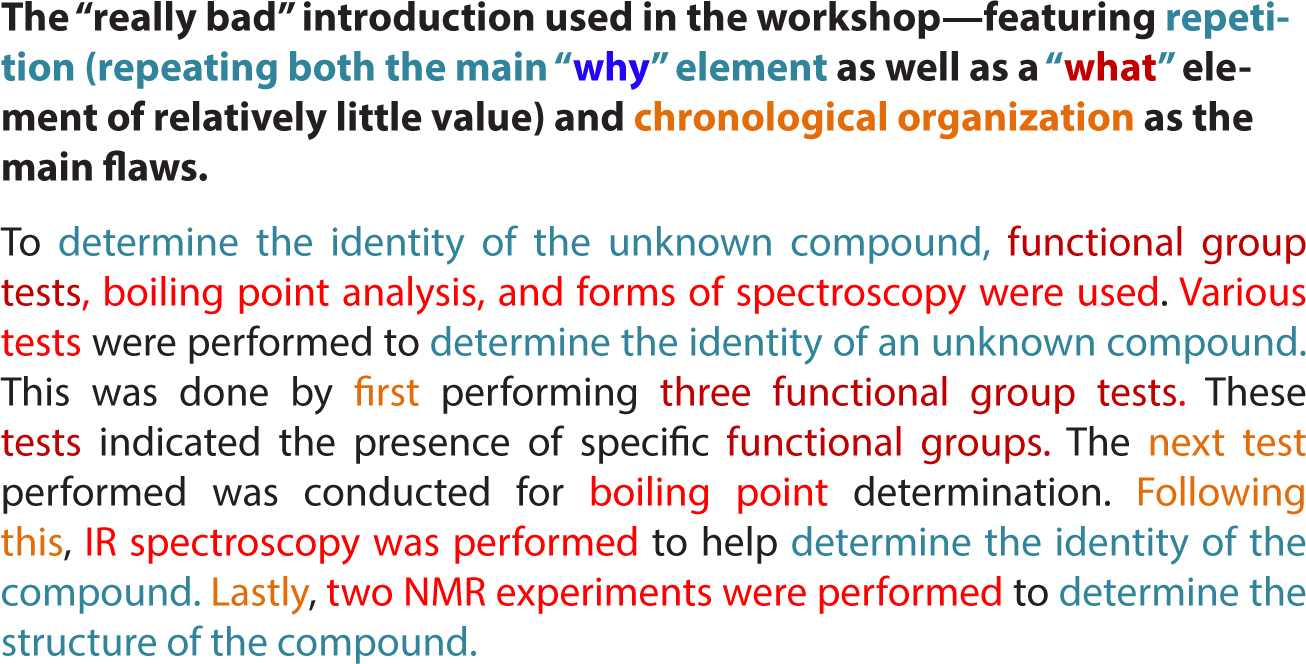

When grading a large stack of formal lab reports, a chemist (DJS) was struggling mightily with reports that contained no major scientific errors yet were nevertheless underwhelming. There were enough of these papers, and identifying the nature of their weaknesses was difficult enough (Sommers, 1982), that he sought help from a writing and rhetoric specialist (SKH). She compared many well-written results and discussion samples with poorly written ones and noticed a recurring rhetorical pattern, which she color-coded for easy recognition (Figure 1): Any combined results and discussion section must include both key results (or data) and, immediately following them, interpretation (inferences that follow from those results). The pattern is no surprise to any serious writer in the sciences (Robinson et al., 2008, p. 112), but seeing it color-coded was nonetheless revelatory for DJS. Even where the scientific data had been interpreted well, papers that did not contain the pattern were unconvincing, often relying on simple chronological structures or containing “data dumps” (Figure 2). The good papers, in contrast, revealed that the pattern was both necessary and sufficient: As interpretation clauses build on each other, eventually all the evidence leads to a cohesive, logically coherent conclusion.

The key insight for DJS, not apparent until color coding laid the pattern bare, is that interpretation clauses are inherently persuasive. SKH is not a chemist; she was color-coding purely on the basis of the rhetorical moves in the language. Good student writers (and science faculty) reflexively and subconsciously advance an interpretation of their data using patterns that can be reliably color-coded by readers without knowledge of the underlying chemistry; this is how we write persuasively. Although students usually have the ability to write persuasively, and usually do so in their concluding paragraph(s), they rarely use such moves elsewhere in their reports, even when a combined results and discussion section makes such moves necessary.

In the specific context of our assignment, which requires identifying a compound by nuclear magnetic resonance (NMR) spectroscopy and writing a report in the style of a journal article, the scientific task is challenging: (1) students are relatively inexperienced with the science (interpretation of various spectra), (2) the science is hard (students struggle to convert raw data files into worked-up spectra, let alone interpret those spectra well), and (3) the data students have collected are often confusing or, in extreme cases, contradictory. Unfortunately for students, identifying the compound, however difficult, remains the easier portion of their assignment: The harder portion is convincing an audience that their identification is the best possible explanation for their data (Lunsford, 2015), especially since that audience is professionally skeptical and will be looking for alternative explanations.

Illustrating what students should be doing and why

Because we wanted to reach students who lack deep knowledge of their task, we reworked the prompt for this assignment to stress the need for good argumentation (Walker et al., 2011) and persuasion (Osborne, 2010). Students’ task is now framed as using evidence to convict the compound in a court of law, an inherently persuasive task. Simultaneously, we created a writing and revision workshop devoted to using evidence well in formal scientific writing. We require students to submit a rough draft report before the workshop, so that they have already committed to an interpretation of their data. Next, after some analysis of persuasive moves (color-coded) in samples, we ask students to identify the patterns present in a peer’s paper, which are more easily spotted than those in their own work (Tinberg, 2015). This serves as training both in the desired approach to peer review (Guilford, 2001; Gerdeman et al., 2007; Topping, 1998) and in the capacity to seek out (and color-code) the desired patterns in their own work. Students benefit greatly from direct feedback that reveals their progress, and color-coded feedback makes that “reveal” especially vivid.

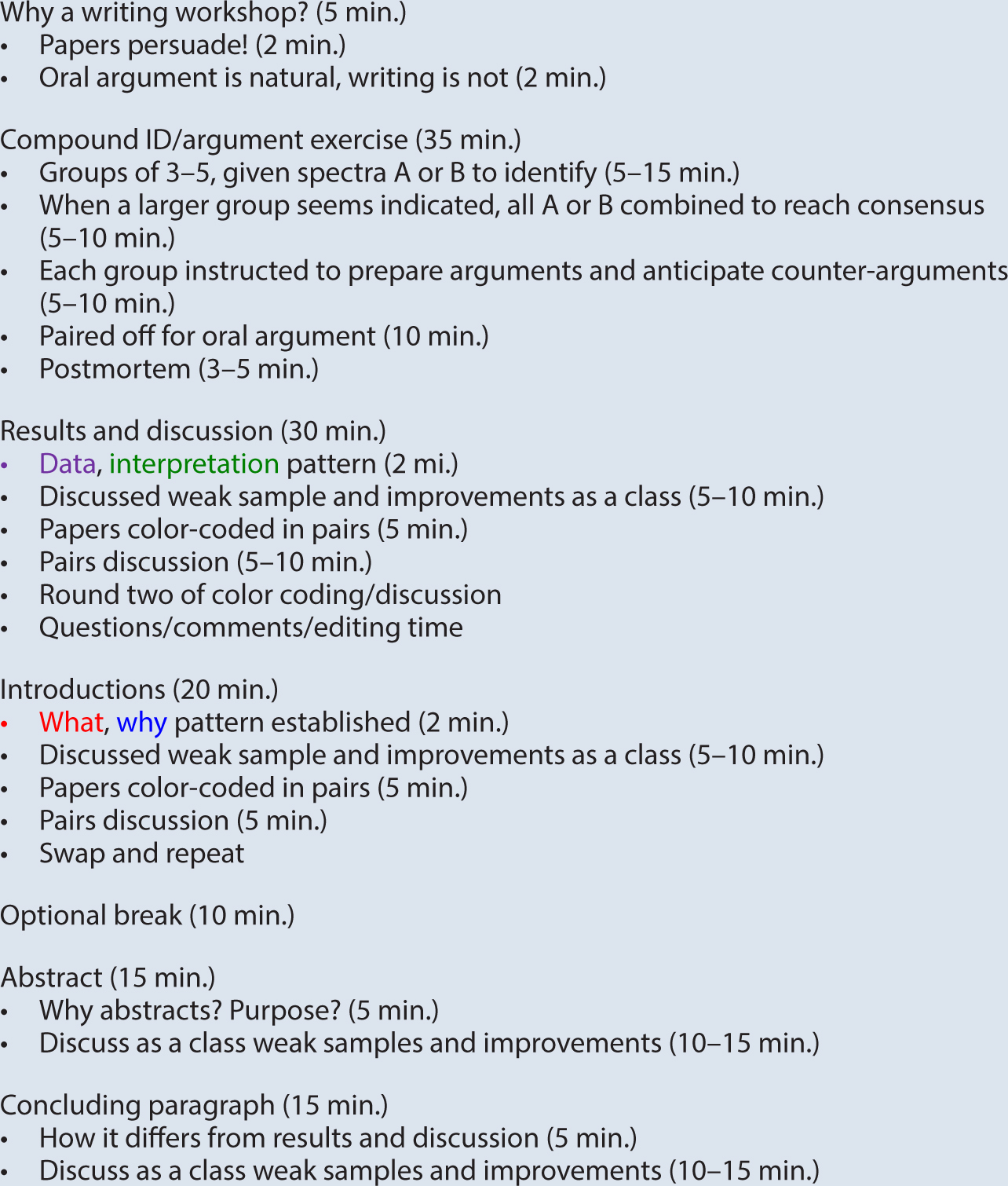

How we run the workshop

We open with the most important element of the workshop, the oral argument exercise, to illustrate the nature of the writing task and prospective audience for the reports. Although our workshop focuses on organic chemistry compound identification, nearly any other interpretive activity would fit in nicely here. We mean for this exercise to inculcate belief in the skeptical nature of any scientific audience (Lunsford, 2015) and show that the logic underlying many chemistry writing conventions stems from a need to persuade this professionally skeptical audience (Robinson et al., 2008). The specific details of the interpretive tasks are irrelevant as long as there are two such tasks that can be completed simultaneously in groups.

Students are divided into small teams (3–4 students) and given a fresh identification problem to work on, with half the groups receiving problem A and the other half receiving a somewhat more difficult problem B. After about 10–15 minutes, at least one team should have made significant progress or even arrived at a solution. This is an appropriate time to combine all “team A” students into one group and all “team B” students into another. With gentle nudging, if necessary, the larger groups usually settle on the correct solutions to their problems in short order. At this point, each team is tasked with preparing oral arguments as to the identity of its compound. Students are informed that they will be paired off and that each will be trying to convince a skeptical opponent, so their arguments must anticipate likely objections. Because both problems (A and B) were chosen so as to include a bit of difficult or confusing data, students can identify what the muddiest point is likely to be. With an odd number of students present, the science instructor must participate in the arguments that follow; mentioning to students that they may find themselves arguing with the instructor tends to concentrate their minds wonderfully as they prepare their arguments and counterarguments. Once the teams are ready, each student is paired with a counterpart from the other team, and the arguments begin. Students in the listening role are instructed to disagree with the given interpretation, to advance counterarguments, or to provide alternative explanations where possible. Naturally, the speaker’s role is to convince this skeptical audience. Then students switch roles.

Because arguing persuasively in an oral setting is hard-wired into our species, we know students will do this task well, which makes it an effective simulation and opening step. The oral exercise immediately illuminates the need for a formal report to be persuasive. Students also recognize how much we rely on nonverbal cues when we argue out loud. When the conversation partner does a good job of playing devil’s advocate, students experience the need to choose the simplest logical path when explaining their identification, and they see how complex data (which may be initially confusing) can later confirm what is believed to be true. Experiencing the complexity of oral argument in this exercise makes transparent the complexity of written argument and the careful crafting it requires, and throughout the workshop we refer back to the oral exercise as needed.

Arguing orally also primes students to recognize that science writing relies heavily on visual aids. Prior to this exercise, students tend to see visual aids as mere decorations, not as integral methods of presenting evidence in an argument. In rough drafts, students tend to lump all their visual aids together at the end; when arguing aloud, however, students point to the spectra they are holding and/or draw structures on the page as these become relevant. As workshop facilitators, we simply point out this behavior and mention that the text itself must serve as a guide to and through the visual aids. After the oral exercise, students understand the need to persuade as well as the need to use data and figures as evidence while they are arguing, a good first step toward writing better reports.

As we transition to the rhetorical patterns we wish to highlight, we note that a skeptical audience will distrust conclusions unless the groundwork has been effectively laid first. Our workshop is designed with a combined results and discussion section in mind (as is conventional in organic chemistry), and our primary goal is to help students understand and see the key pattern that they must employ in their own writing: data first, followed by interpretation (Figure 1). We show two samples: one with the desired pattern, and a longer, weaker sample (Figure 2) that contains only data (purple) and filler (black). Students are asked to analyze the difference between the two samples; the complete absence of the necessary interpretation in sample 2 is striking enough that students immediately observe that, when revising, they should ruthlessly remove filler and add interpretation. We end this sample analysis step by leaving the “somewhat improved” sample, with filler removed and interpretation added, visible as a model during peer review.

We now ask students to color-code a partner’s results and discussion section. After about 10 minutes, students almost always spontaneously start a discussion; if not, we simply ask them to start talking. When both the writing specialist and the chemist are in the room, a very useful and wide-ranging question-and-answer session tends to break out, invaluable both to students and to us in refining subsequent iterations of the workshop. As a “novice writer of chemistry,” the writing specialist can serve as an avatar for students and ask probing questions of the chemist, forcing the scientist to think carefully about elements of science writing previously “known” only at a subconscious level. We strongly advocate a “dual facilitator” model, especially in initial iterations of similar workshops.

Once the peer review activities and pace of questions slow, students are asked to write notes to guide their future revisions of their own report.

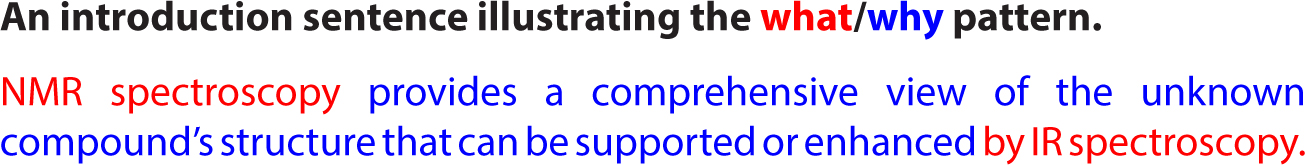

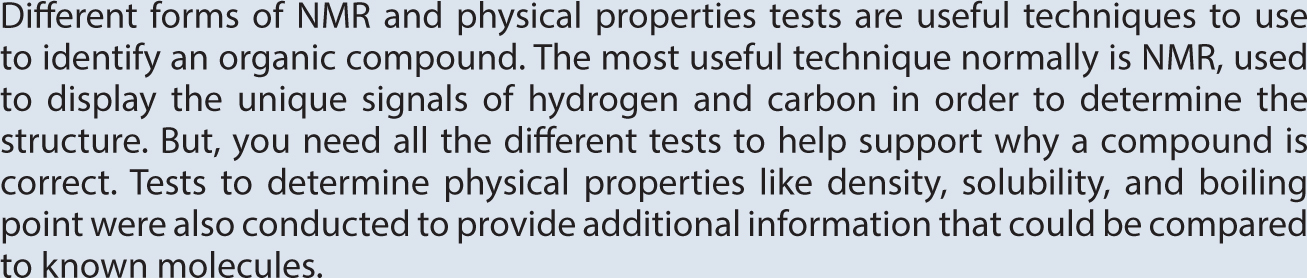

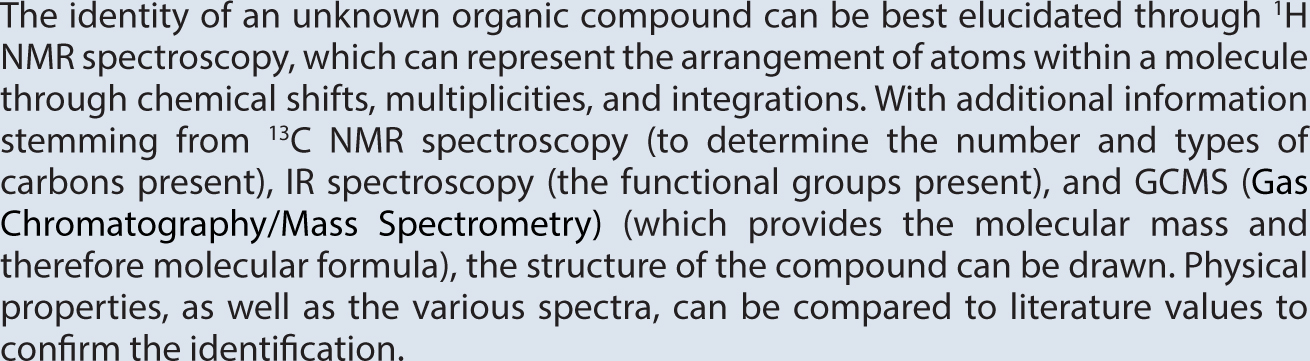

We then repeat the cycle of rhetorical pattern analysis and peer review for the introduction section, which requires a different rhetorical pattern built from “what you did” (identity of the technique employed) and “why you did it” (value of the technique) sentences (Figure 4). Here, again, weaker and stronger samples are color-coded to help students see the patterns, and we intend for sample quality to increase incrementally, going from “really bad” to “somewhat improved” to “better still.” Small increments make the mechanisms of improvement transparent: When a set of samples jumps too quickly from “bad” to “excellent,” students lose the opportunity to process how and why the improvement happens at the metacognitive level needed for transferable learning (Tinberg, 2015; Dryer, 2015). The weak sample in Figure 5, a composite written in the style of the formal reports that led to the creation of the workshop, illustrates two errors. First, it includes far too many “what was done” sentences while failing to address why those techniques were chosen (other than the obvious “why” represented by the overall goal of the paper). Second, it is organized chronologically, a logical but ineffective choice that fails to lay the groundwork for persuasion by identifying the relative importance of each technique and prioritizing the most valuable. With a clear rhetorical pattern (what/why) visible in a “somewhat improved” sample for students to refer to, we once again ask for a round of peer review and feedback, and for students to write notes ahead of revising their own reports.

To end the workshop, we put the rhetorical patterns of the introduction and results/discussion section into context by tackling the abstract and conclusion as a class: We discuss the rhetorical function of each and give key pointers before examining weaker student samples. Most students in their second or third college chemistry course have read very few (if any) scientific abstracts and have little appreciation for the rhetorical challenges inherent in these. Using an authentic sample to illustrate the “advertisement” function of a good abstract clarifies the conventions involved (Lerner, 2015).

The conclusion is also deceptively challenging for students to write well. Although few are confused about its rhetorical purpose, in journal article-style reports, and especially in this specific assignment, students must reach their key conclusion(s) partway through the results and discussion section and use the remainder of that section to confirm their identification. Therefore, a well-written results and discussion section paradoxically makes the conclusion much more difficult to write without repetition, a key point that can be stressed in a full-class sample discussion. While peer review might be of value here, we find that mid-level students are not yet able to give effective feedback on the abstract or conclusion sections, and students are cognitively fatigued by the long workshop at this point. We also choose not to ask students to review these sections at this stage, because doing so would undercut our message that the abstract and conclusion are best written, or at the very least revised, after the rest of the report is complete.

The workshop concludes at about the two-and-a-half-hour mark, and an improved draft based on findings from the workshop is requested within one week.

Other points of emphasis: “point last” versus “thesis first”

Throughout the workshop, we explicitly describe the rhetorical purpose of each subsection: (1) the abstract argues that the reader should purchase the paper, (2) the introduction argues that the reader should apply the paper’s approach when faced with a similar problem, (3) the results and discussion section reaches a conclusion as to the most probable identity of a compound, based on the data, and (4) the conclusion serves as a final, but nonrepetitive, summary of the entire paper. Each section in turn serves the overall goal of persuading the reader of a plausible identification.

As mentioned before, we rely on specific moments in the oral argument exercise to help reveal to students the need for these persuasive moves, but we also stress just how different a good results and discussion section is from students’ previous persuasive writing experience in other courses, which often encourage a “thesis first, support second” structure (Anson, 2015). Indeed, many students provide the identity of their compound at the outset and then provide interpretation (if any) only to support the claim they have assumed to be true. By contrast, because of the professional skepticism of the audience, we expect the data to be provided first, followed by interpretation of those data. In other words, the point or “thesis” of the result/interpretation sentences, paragraph, or paper comes last: purple precedes green. During the wrap-up of the oral argument and when establishing the rhetorical pattern of the results and discussion section, we explicitly highlight the need for data-heavy visual aids, and the associated interpretation of those visual aids, to come well before the figure that reveals the identity of the compound.

Beyond the content goals discussed, we mention to students that the workshop was created and implemented primarily to demand an additional draft while offering some feedback (Lim, 2009; Slade, 2017; Slade & Miller, 2017). We stress the need for revision (Downs, 2015; Sommers, 2006; Dusseault, 2006), while noting how effective peer review can be when students have been trained in what to look for (Osborne, 2010; Gragson & Hagen, 2010; Guilford, 2001).

Does it work?

Comparing original and revised student drafts shows modest but visible improvement in the results and discussion section: Students focus on adding green (interpretation) sentences, with variable success, but the attempt to interpret the data is the key process improvement we are looking for, regardless of the outcome. Improvements in organization and in correspondence between figures and text are also visible in some reports, especially those that previously lumped visuals at the end. Scientific errors present in original drafts, however, often remained unchanged, suggesting that (as expected) many students use revision opportunities only to make minor tweaks rather than more global changes to language, structure, or content (Sommers, 1980). Consequently, final drafts do not necessarily score much higher than original drafts on the scientific aspects of the rubric. However, because the peer-review process also routinely identifies a host of formatting errors that students then correct, student scores tend to improve as students follow the directions of the assignment more carefully.

Students more often attempt to improve their introduction sections by subtraction: removing filler phrases and sentences and removing chronological information (Figure 6). Postworkshop, most students organize by the relative importance of the techniques, which suggests that the main messages have been internalized. Students have also clearly heard the message to “be more specific,” as many err by including their specific results (and attempting to interpret them) in the introduction section. These well-intentioned (but flawed) introductions reveal the difficulty of adhering to the conventions of disciplinary writing. We are encouraged by the visible progress toward the goal and are not disheartened when the desired final outcomes (after a single additional draft!) remain aspirational for the median student.

The very best introduction sections are represented by the composite sample in Figure 7. A significant challenge in the introduction is to be specific about the techniques used, and their broad utility for a reader’s own problem, without simply diving into the results. Success in this task requires both a deep understanding of the scientific techniques used and an understanding of the disciplinary conventions described in the workshop. These examples (starting with the very weak work in Figure 5) represent something of a continuum, and far more students are able to improve by subtracting filler or repetitive text than are able to reorganize well or to add new, more specific text. Finally, student improvement was most visible in the introduction section for precisely the reason we have highlighted introduction samples in this manuscript: This section is the one most accessible to students without discipline-specific scientific knowledge.

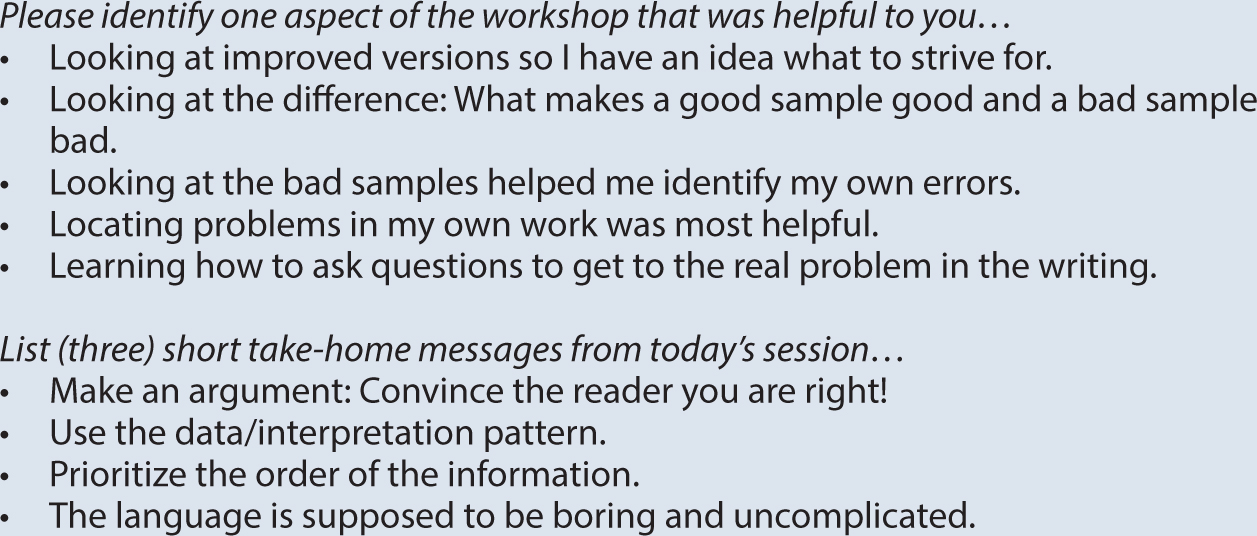

In brief postworkshop surveys (Figure 8), students stated that their initial confusion about how to write a formal report was a significant impediment to their success and that the workshop had clarified their task. Specific responses also signal a more nuanced understanding of the rhetorical moves within scientific writing. Overall, students (and faculty) perceived the workshop as valuable, even though (or possibly because) scientific writing is such a complex act that student performance overall changed only incrementally. We created this workshop to help students understand the persuasive moves a good lab report must make, including effective use of visual evidence; to require students to write a second draft based on effective feedback; and to train students in a specific peer review task and begin to inculcate scientific skepticism as an approach to their own work. We considered it a bonus when students thanked us for the opportunity to do more work to create their revisions.

David J. Slade (dslade@hws.edu) is the organic chemistry laboratory coordinator in the Department of Chemistry, and Susan K. Hess is a writing and teaching/learning specialist at the Center for Teaching and Learning, both at Hobart and William Smith Colleges in Geneva, New York.
