Research & Teaching

First Do No Harm

In-Class Computer-Based Exams Do Not Disadvantage Students

Journal of College Science Teaching—July/August 2022 (Volume 51, Issue 6)

By Deena Wassenberg, J.D. Walker, Kalli-Ann Binkowski, and Evan Peterson

Computer-delivered exams are attractive due to ease of delivery, lack of waste, and immediate results. But before moving to computer-based assessments, instructors should consider whether they affect student performance and the student exam experience, particularly for underrepresented student groups and students with exam anxiety. In this study, we gave students in an introductory biology class alternating computer and paper examinations and compared their performance and exam anxiety. On average, students did not perform differently on the two exam types. Additionally, the performance of different subgroups (e.g., non-native English speakers, White students, non-White students, first-generation college students, male and female students, and students who have exam anxiety) was not affected by exam type. Students overall and in different subgroups reported nearly identical amounts of test anxiety during paper and electronic exams. The average time to complete the exam was shorter for computer exams. Students were roughly evenly split in their stated preference for one exam format over the other and did not indicate that either format facilitated cheating more than the other. Based on these results, we believe computer-based exams can be an efficient and equitable approach to assessment.

Computer-based exams offer advantages over paper-based exams, including grading of certain question types automatically (e.g., multiple choice, fill-in-the-blank, matching), providing immediate feedback on exam performance, generating multiple versions of exams easily, reducing resources invested in the exam (in terms of paper, photocopying, and purchase and upkeep of automated grading machines such as Scantrons), administering exams in alternate settings outside of class time (home or elsewhere), embedding multimedia, utilizing computer tools or specialized programs (as outlined in Fluck, 2019), and reducing instructor workload for each exam, which can encourage more frequent, lower-stakes assessments (an approach that has been shown to improve student achievement; Roediger & Karpicke, 2006). These advantages and the availability of affordable proctoring software have led to computer examinations being implemented with increasing frequency in university courses (Fluck, 2019).

Questions and reservations about computer-based exams include concerns about technology glitches, student access to computers for taking the exam, whether this exam format disadvantages students who would perform better on paper-based assessments, and academic integrity (whether the computer format makes it easier to cheat; e.g., Carstairs & Myors, 2009; Dawson, 2016).

Some research has compared student performance on, and student perceptions of, computer exams. Earlier literature yielded mixed results, with some studies showing a “test mode effect” favoring paper exams, some favoring electronic exams, and some reporting no significant difference (Bunderson et al., 1989; Mead & Drasgow, 1993). Many of these earlier studies were concerned with the effects of student familiarity and experience with computer technology, a factor that can reasonably be expected to have declined in importance for American college students (Dahlstrom et al., 2013).

In more recent literature, several studies used an experimental design similar to the format we employed in this research—namely, a split-half design with random assignment. Ita et al. (2014) found no test mode effect when it came to student performance in an introductory engineering course, but students expressed a strong preference for the paper exams. Washburn et al. (2017) studied the effects of test mode among first-year veterinary students and found a small but significant test mode effect favoring electronic exams, along with a student preference for paper exams.

Other recent relevant literature includes a study of the effect of switching to a computer exam format in K–12 standardized testing (Backes & Cowan, 2019), in which the authors described a significant “online test penalty” in the first year of implementation and a smaller penalty in the second year. Boevé et al. (2015) examined student performance on, and perceptions of, a computer-delivered versus a paper-based exam in a university psychology course. While Boevé and colleagues found no difference in performance, students reported that they were significantly better able to take a structured approach to the exam, monitor their progress during the exam, and concentrate during the exam when taking it on paper. Additionally, more students preferred paper-based exams than computer-based exams. Bayazit and Askar (2012) found no significant difference in performance between computer and paper exams but did find that students taking the computer-based exam spent significantly more time on it. Some studies indicate that students’ prior experience with computer testing positively affects their perceptions of, and preferences for, computer testing in the future (Frein, 2011; Boevé et al., 2015).

As we looked to convert our examinations to computer examinations, we felt it was necessary to explore whether or not this new exam format would disadvantage students. We had four research questions for this study:

- Do computer-based exams affect overall student performance?

- Do computer exams disproportionately impact various subgroups of students in our classes¹ (as shown in some studies, such as Parshall & Kromrey, 1993)? We were particularly interested in the effect of computer exams on students who are traditionally underrepresented in the sciences (students from underrepresented racial minority backgrounds, first-generation college students, non-native English speakers, and female students), as we did not want to inadvertently add a barrier to science participation for these groups.

- How do computer-based exams impact the student experience of taking the exam in terms of feelings of anxiety during the exam? Exam anxiety disproportionately affects female students (Hannon, 2012; Ballen et al., 2017), and students who have high levels of exam anxiety are likely to face academic barriers (Chapell et al., 2005; Rana & Mahmood, 2010; Vitasari et al., 2010).

- What is the effect on student perception of the ease of student academic misconduct (cheating) during exams when switching to computer exams?

To address these questions, we designed a within-subjects experimental study in which students were given two (of four) exams in an introductory biology class on paper and two on the computer. Student results on the exam and their perceptions of their exam anxiety were analyzed, incorporating demographics of interest.

Methods

We chose to conduct this study in a relatively large enrollment (130–150 students) biology course for non-biology majors. This study took place over two semesters (fall 2018 and spring 2019), with identical setup in each course. Students were randomly assigned to lecture teams by laboratory section, such that, as much as possible, students were in lecture groups with individuals from their lab section. There were some students who did not take the laboratory component of the course, and these students were also mostly seated together.

Students were assigned to testing group A or B by their lecture team (odd teams were A, even teams were B). Students in group A were assigned to have computer exams for Exams 1 and 3. Students in group B were assigned to have computer exams for Exams 2 and 4. This course had four exams; three exams had equal value (11% of the lecture and lab total score), and the fourth exam had a higher value (16% of the total score).

All students were surveyed at the beginning and end of the semester and received a small extra credit incentive for completing the survey. Survey response rates were all above 80%. In the survey, students answered four exam anxiety questions adapted from the Motivated Strategies for Learning Questionnaire (MSLQ; Appendix 1 online; see Pintrich et al., 1991). The test anxiety questions showed good reliability (Cronbach’s alpha = 0.896 for the presemester survey and 0.888 for the postsemester survey). These questions were designed to measure “trait” anxiety (a person’s general tendency to feel exam anxiety; Sommer & Arendasy, 2014). We also measured “state” anxiety (the anxiety experienced during a particular exam) by administering, at the end of each exam, the same four questions adapted to ask students to reflect on the exam they had just taken (Appendix 2 online). In the postsemester survey, students were also asked about their preference for paper versus computer exams and their perceptions of the ease of cheating in the two exam formats, and they were invited to provide free-response comments on the exam format. This study was approved as an exempt study by the University of Minnesota Institutional Review Board (Study 00003608).
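For readers unfamiliar with the reliability statistic reported above, Cronbach’s alpha for k items is alpha = k/(k - 1) × (1 - sum of item variances / variance of total scores). The Python sketch below computes it from a respondents-by-items matrix. It is a generic textbook implementation, not the analysis code used in this study, and the example ratings are made up for illustration.

```python
from statistics import variance


def cronbach_alpha(responses: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents-by-items matrix.

    responses[i][j] is respondent i's rating on item j. Sample variances are
    used throughout; the ratio is unchanged with population variances as long
    as the choice is consistent.
    """
    if len(responses) < 2:
        raise ValueError("need at least two respondents")
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    if any(len(row) != k for row in responses):
        raise ValueError("every respondent must answer every item")

    item_variances = [variance([row[j] for row in responses]) for j in range(k)]
    total_variance = variance([sum(row) for row in responses])
    if total_variance == 0:
        raise ValueError("total scores have zero variance; alpha is undefined")
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


if __name__ == "__main__":
    # Made-up ratings from five respondents on four anxiety items (illustration only).
    ratings = [
        [2, 3, 2, 3],
        [5, 6, 5, 5],
        [4, 4, 3, 4],
        [7, 6, 7, 6],
        [1, 2, 2, 1],
    ]
    print(round(cronbach_alpha(ratings), 3))
```

Alpha rises toward 1 as the items move together across respondents, which is why it is read as a measure of internal consistency for a multi-item scale such as the four anxiety questions.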

Exams

All exams were multiple choice and had between 45 and 50 questions; students answered the same questions regardless of exam format. Each exam had four optional survey questions at the end relating to students’ experience with exam anxiety during that exam. Exams were proctored in person by at least two people who circulated the room, answered questions, and observed test-taking behavior to watch for possible cheating activity.

Paper exams were administered as a photocopied test packet with a Scantron answer sheet, on which students used a pencil to fill in the bubble corresponding to their chosen answer. Students wrote their names on both the test packet and the answer sheet, and answer sheets were identified by university-issued student identification numbers. Three forms of the paper exam were created, with the same questions in different orders. To discourage cheating, the three forms were mixed so that students sitting next to each other were unlikely to have the same form. Students filled in a bubble indicating their exam form so that the exam could be graded with the proper key. The time at which each student turned in a paper exam was recorded; time data for computer exams were obtained from the Canvas Quiz interface.
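The multiple-form scheme above (same questions in different orders, graded with the key for the form a student bubbled in) can be sketched as follows. This is a hypothetical illustration: the text does not say how the question orders were chosen, so random shuffling with Python’s `random` module is an assumption, as are the function names and the `(question_id, correct_choice)` data layout.

```python
import random


def make_forms(questions, n_forms=3, seed=None):
    """Return n_forms orderings of the same questions.

    questions: list of (question_id, correct_choice) pairs.
    Assumption: orders are produced by random shuffling; with few questions,
    two forms could occasionally end up in the same order.
    """
    rng = random.Random(seed)  # seeded generator so the forms are reproducible
    forms = []
    for _ in range(n_forms):
        order = list(questions)
        rng.shuffle(order)
        forms.append(order)
    return forms


def answer_key(form):
    """The answer key for one form is its correct choices in printed order."""
    return [correct for _, correct in form]


def grade(responses, form_index, forms):
    """Score a student's responses against the key for the form they marked."""
    key = answer_key(forms[form_index])
    if len(responses) != len(key):
        raise ValueError("response count does not match the number of questions")
    return sum(given == correct for given, correct in zip(responses, key))


if __name__ == "__main__":
    bank = [("Q1", "A"), ("Q2", "C"), ("Q3", "B"), ("Q4", "D"), ("Q5", "A")]
    forms = make_forms(bank, seed=2018)
    # A student who marked form 2 (index 1) and answered every question correctly:
    print(grade(answer_key(forms[1]), 1, forms))  # 5
```

Grading a sheet with the wrong form’s key would scramble the score, which is why the form bubble on the answer sheet matters.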

Computer exams were delivered in the classroom (alongside students taking the exam on paper) using the Classic Quiz function on the Canvas educational platform. Students taking the computer exams brought their own laptops or borrowed one from a supply we brought to class (most students brought their own). The program Proctorio was used as a remote proctor. Proctorio lockdown settings were set to force the exam to fill the entire screen, disallow multiple open tabs, log the students out of the exam if they navigated away from the open exam window, display on only one monitor, disable clipboard, disable printing, clear cache, and disable right-clicking (video and audio recording were not enabled). The exam was set to randomize the order of the questions.

Students assigned to paper exams were required to take the exam on paper. Students assigned to computer exams who had technical difficulties, or who had a disability accommodation and preferred paper, were given paper exams instead. For each exam, fewer than five students assigned to the computer format ended up taking the exam on paper.

Results

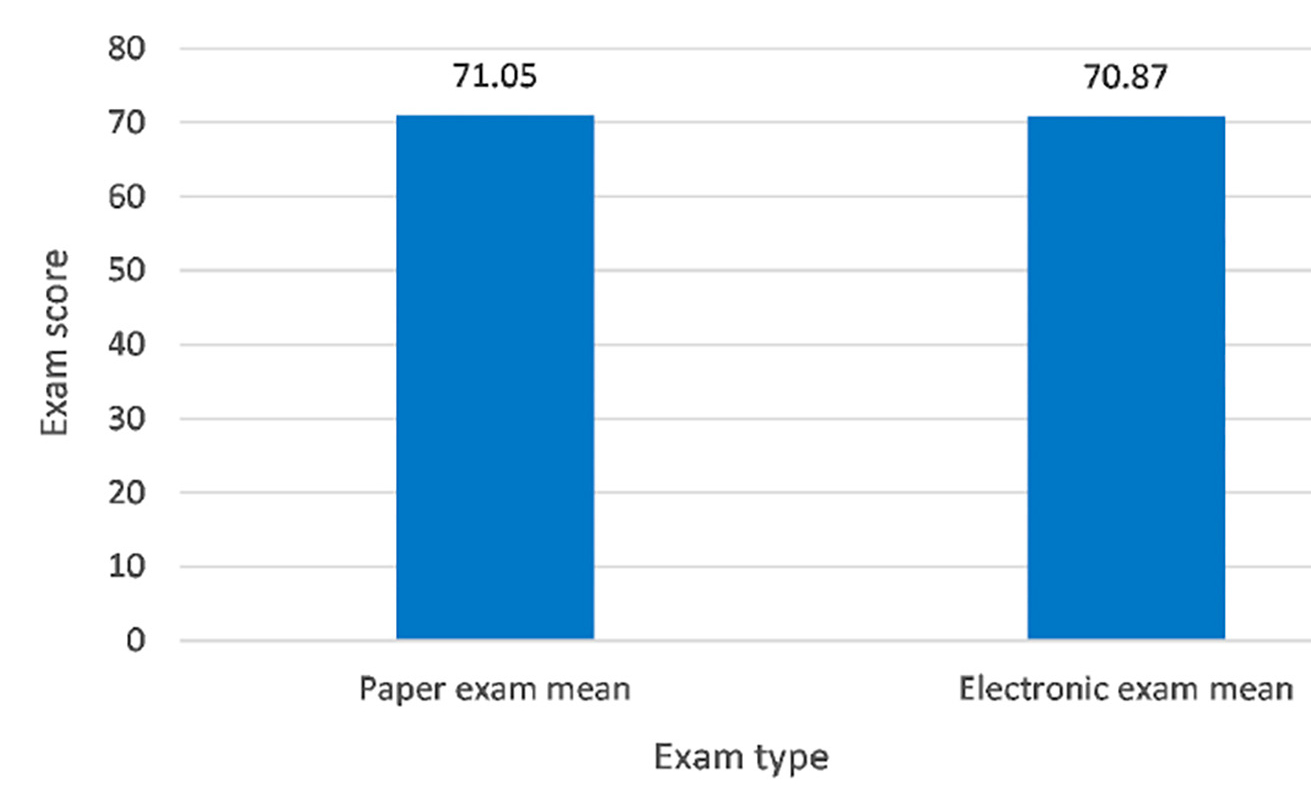

Students in the two semesters examined in this study did not differ significantly on any available demographic variable (e.g., gender, ethnicity, age) or performance metric (e.g., ACT score, grade point average), so data from the two classes were aggregated for analysis. Students in testing groups A and B also did not differ significantly on any available exogenous variable. A total of 186 students took all four exams in the course in the targeted pattern (two on paper and two electronically). Exam scores for these students were averaged and compared by means of two-sample t-tests. Exam format did not significantly affect student performance (Figure 1).

Figure 1. Mean exam score (out of 85 total points) by exam format.

Note. p = 0.634.
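As a sketch of the kind of comparison reported above, the Python function below computes a two-sample t statistic. It makes assumptions the text does not state: it uses Welch’s unequal-variance form with Welch-Satterthwaite degrees of freedom and, to stay within the standard library, approximates the two-sided p-value with a standard normal distribution, which is reasonable only for large samples. A library routine such as SciPy’s `scipy.stats.ttest_ind` (with `equal_var=False` for Welch’s test) returns an exact t-based p-value instead.

```python
from math import sqrt
from statistics import NormalDist, mean, variance


def welch_t_test(a, b):
    """Welch's two-sample t-test for samples a and b.

    Returns (t, df, p_approx), where df is the Welch-Satterthwaite degrees of
    freedom and p_approx is a two-sided p-value from a normal approximation
    (adequate only when both samples are large).
    """
    if len(a) < 2 or len(b) < 2:
        raise ValueError("each sample needs at least two observations")
    se2_a = variance(a) / len(a)  # squared standard error of mean(a)
    se2_b = variance(b) / len(b)  # squared standard error of mean(b)
    if se2_a + se2_b == 0:
        raise ValueError("both samples are constant; t is undefined")
    t = (mean(a) - mean(b)) / sqrt(se2_a + se2_b)
    df = (se2_a + se2_b) ** 2 / (
        se2_a**2 / (len(a) - 1) + se2_b**2 / (len(b) - 1)
    )
    p_approx = 2 * (1 - NormalDist().cdf(abs(t)))
    return t, df, p_approx


if __name__ == "__main__":
    # Made-up scores (out of 85), not the study's data. This toy sample is too
    # small for the normal approximation to be accurate; it only shows the call.
    paper = [62, 71, 58, 77, 69, 65, 73, 60]
    computer = [64, 70, 57, 78, 70, 63, 74, 61]
    t, df, p = welch_t_test(paper, computer)
    print(f"t = {t:.3f}, df = {df:.1f}, approximate p = {p:.3f}")
```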

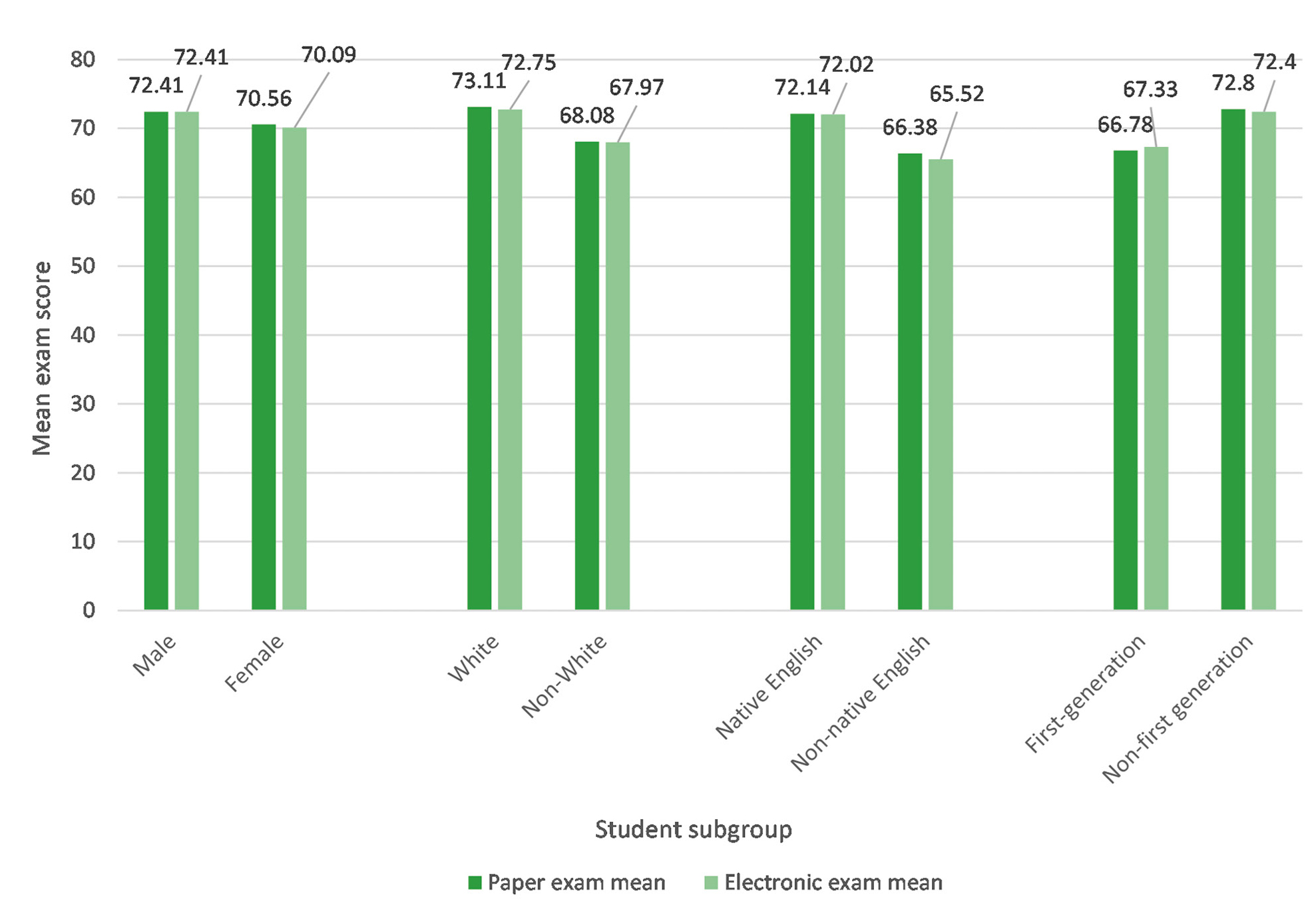

Performance by subgroup

We examined the performance of different student subgroups to determine whether paper versus electronic delivery had differential effects. The data are displayed in Figure 2. For males and females, native and non-native English speakers, first-generation and non-first-generation students, and White and non-White students, scores on paper and computer exams did not differ significantly (all p values > 0.375).

Figure 2. Mean score (out of 85 total points) for different student subgroups.

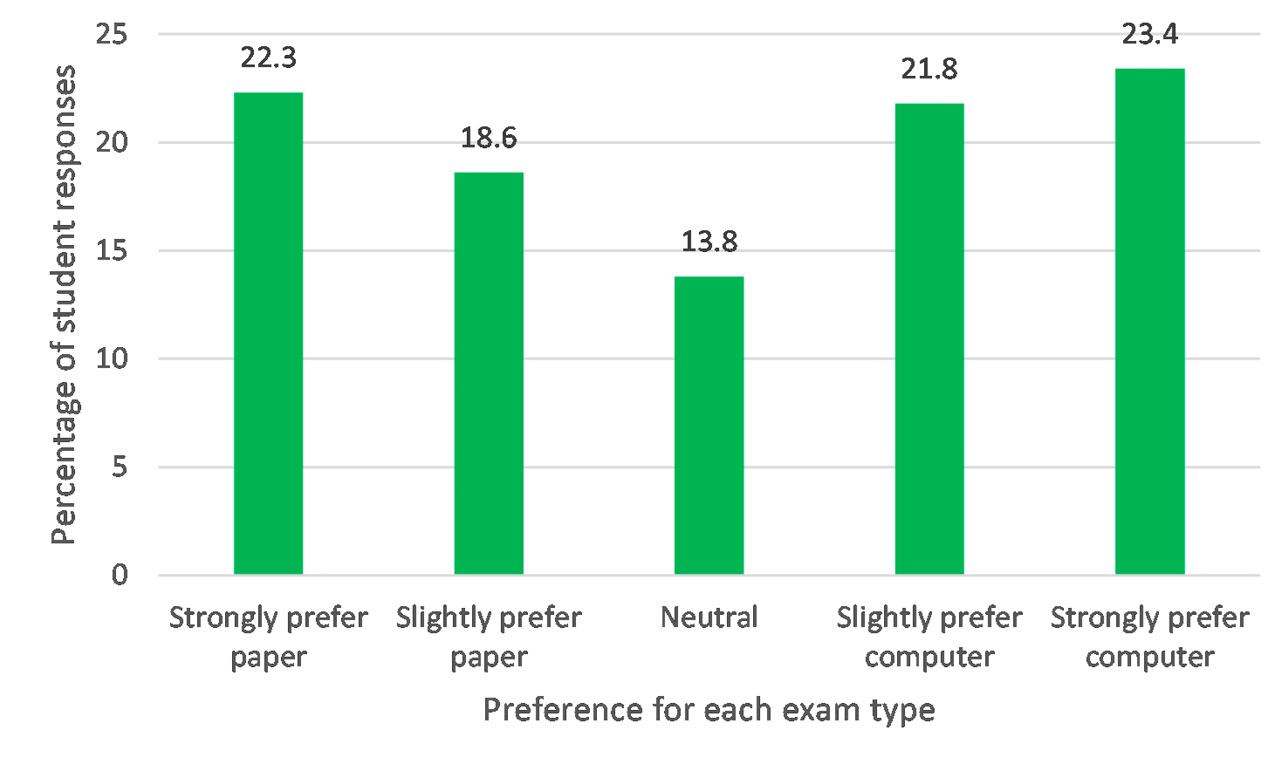

Student preferences

Relatively few students were neutral about exam format. As shown in Figure 3, in response to the question “This semester you took part in a study regarding computer versus paper multiple choice exams. Which exam format do you prefer?” 86% of students expressed a preference for one format over the other, creating an approximately symmetrical bimodal distribution. A small plurality expressed a preference, slight or strong, for computer-based over paper-based exams (45.2% for computer-based exams compared to 40.9% for paper-based exams). There was no relationship (r = 0.002) between exam-type preference and student trait anxiety.

Figure 3. Percentage of students who prefer each exam format.

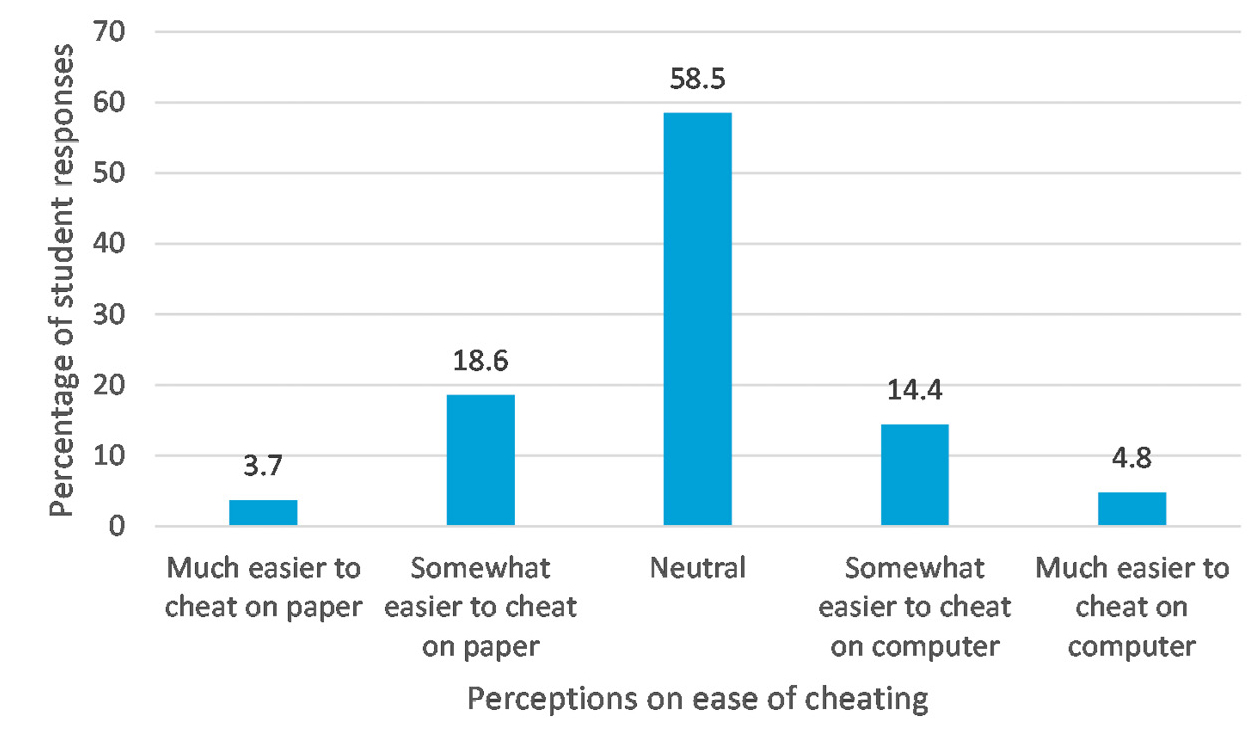

Cheating

A clear majority of students did not perceive either computer-based or paper-based exams as making cheating easier. In response to the question “When thinking about issues of academic integrity (cheating), do you perceive one format or the other as easier for cheating to occur?” the students who did perceive a difference were split roughly evenly between the two formats. As shown in Figure 4, 22.3% of students said paper exams make cheating easier, while 19.2% said the same about computer-based exams.

Figure 4. Student perceptions of the ease of cheating by exam type.

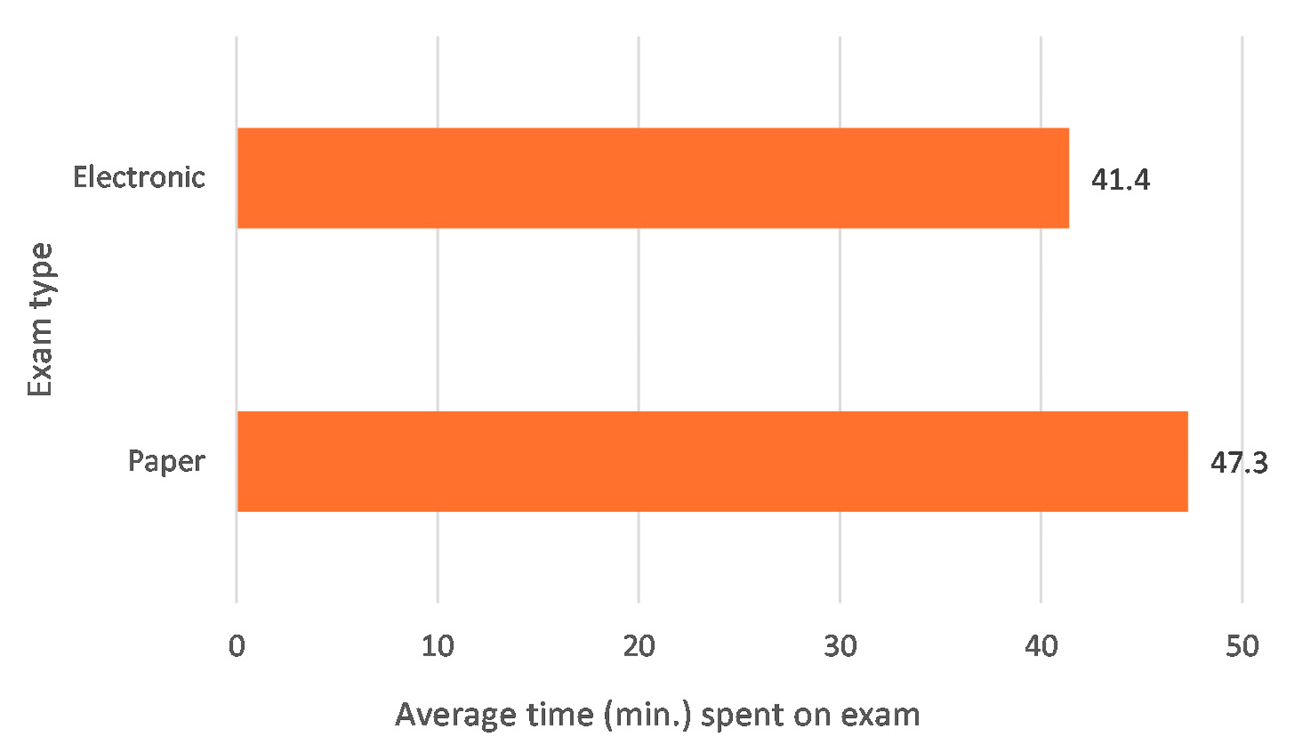

Time spent on exam

As shown in Figure 5, students took significantly less time to complete exams delivered in an electronic format than exams delivered on paper—an average of about 6 minutes less per exam (p < 0.001).

Figure 5. Average time to complete the exam (in minutes).

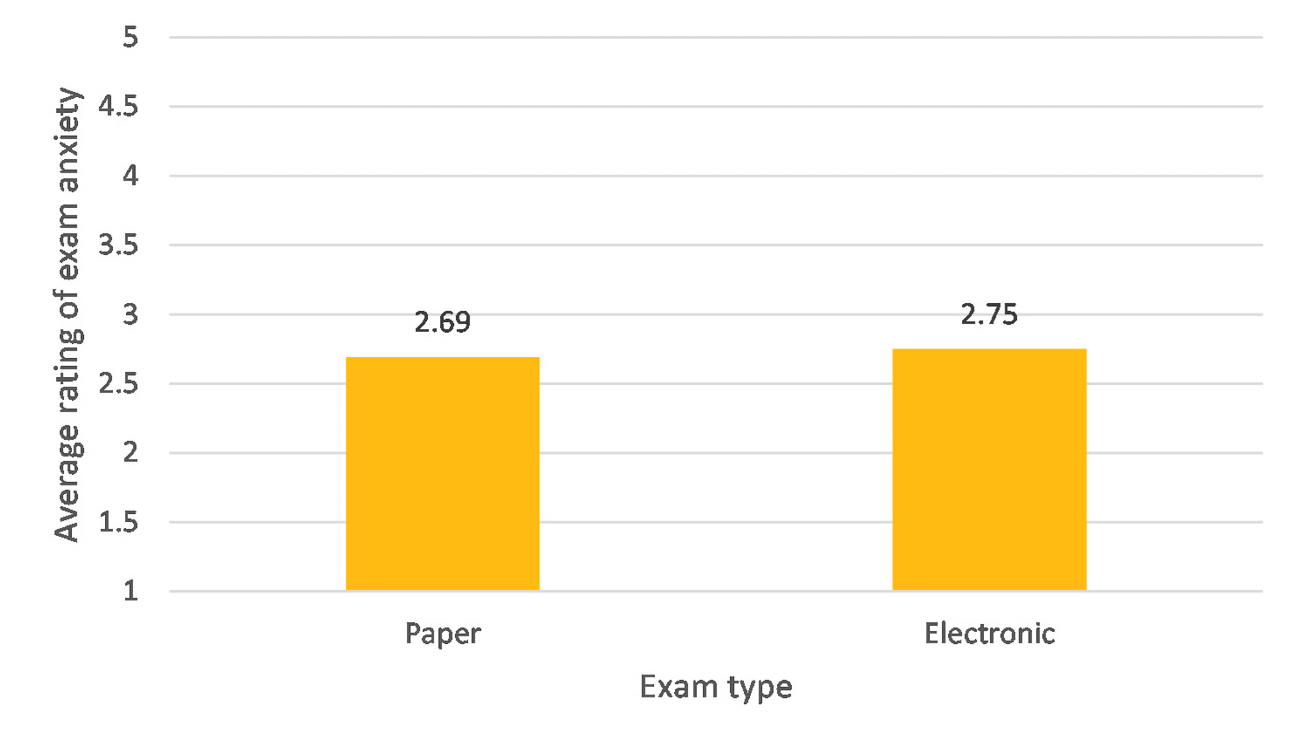

Test anxiety

State test anxiety was measured immediately after each exam, using the four questions that compose this construct from the MSLQ (adapted to reflect the way the student felt on the exam they had just taken). As shown in Figure 6, students reported equivalent levels of test anxiety following both the electronic exams and the paper exams.

Figure 6. Average exam anxiety score on four exam anxiety–related questions.

Note. p = 0.183.

Trait test anxiety was measured by delivering the same four MSLQ questions to students at the beginning of the semester. Trait test anxiety was negatively associated with average exam scores, to a similar degree for paper (r = -0.200) and for electronic exams (r = -0.172).
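The correlations reported here and in Table 1 relate trait anxiety to average exam scores, separately for paper and computer exams. The sketch below shows one way to compute them, optionally within subgroups such as gender. It is illustrative: the record field names (`trait_anxiety`, `paper_avg`, `computer_avg`, `gender`) are hypothetical, and we assume Pearson’s r, since the text reports r values without naming the coefficient.

```python
from collections import defaultdict
from math import sqrt
from statistics import mean


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences with at least two values")
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return sxy / sqrt(sxx * syy)


def anxiety_score_correlations(records, group_key=None):
    """Correlate trait anxiety with paper and computer exam averages.

    records: dicts with hypothetical keys 'trait_anxiety', 'paper_avg',
    'computer_avg', plus an optional grouping key such as 'gender'.
    Returns {group: (r_paper, r_computer)}; the group is 'all' when
    group_key is None.
    """
    groups = defaultdict(list)
    for rec in records:
        groups[rec[group_key] if group_key is not None else "all"].append(rec)
    result = {}
    for name, recs in groups.items():
        anxiety = [r["trait_anxiety"] for r in recs]
        result[name] = (
            pearson_r(anxiety, [r["paper_avg"] for r in recs]),
            pearson_r(anxiety, [r["computer_avg"] for r in recs]),
        )
    return result


if __name__ == "__main__":
    # Made-up records for illustration only; not the study's data.
    demo = [
        {"gender": "F", "trait_anxiety": 5.5, "paper_avg": 58, "computer_avg": 63},
        {"gender": "F", "trait_anxiety": 3.0, "paper_avg": 70, "computer_avg": 68},
        {"gender": "F", "trait_anxiety": 4.5, "paper_avg": 61, "computer_avg": 66},
        {"gender": "M", "trait_anxiety": 2.5, "paper_avg": 69, "computer_avg": 70},
        {"gender": "M", "trait_anxiety": 4.0, "paper_avg": 64, "computer_avg": 62},
        {"gender": "M", "trait_anxiety": 3.5, "paper_avg": 67, "computer_avg": 65},
    ]
    for group, (r_paper, r_computer) in anxiety_score_correlations(demo, "gender").items():
        print(f"{group}: paper r = {r_paper:.3f}, computer r = {r_computer:.3f}")
```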

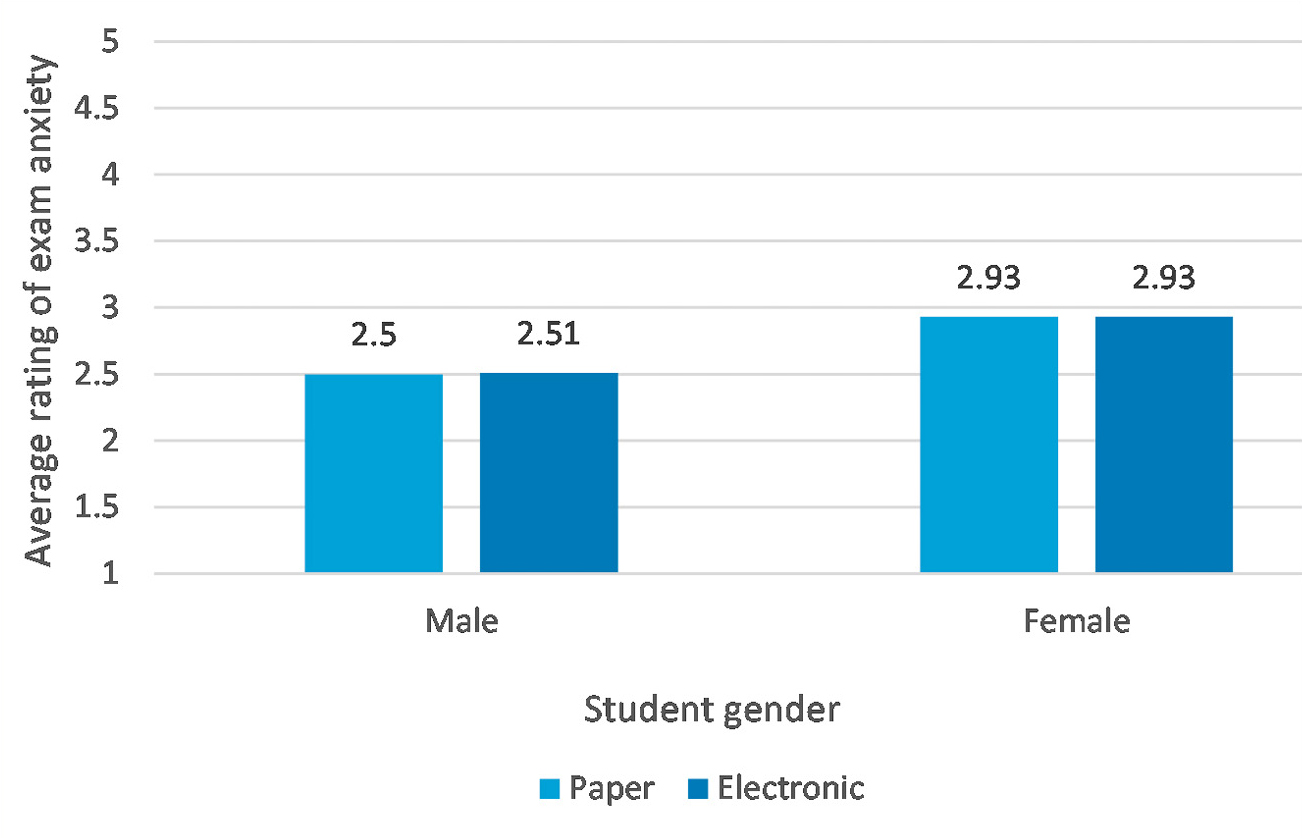

Because test anxiety is known to affect female students to a greater degree than male students (Hembree, 1988; Segool et al., 2014), we examined the relationship between state and trait test anxiety separately for male and female students.

As shown in Figure 7, female students reported higher levels of state test anxiety than male students, but both genders reported near-identical levels across exam formats (p values > 0.8).

Figure 7. Average exam anxiety score on four exam anxiety–related questions for male and female students.

Female students reported higher levels of preclass trait anxiety than male students (mean 4.43 vs. 3.46, p < 0.001). As displayed in Table 1, for male students, preclass anxiety had a similar, negative relationship with their performance on both paper and electronic exams, but for female students, the relationship between preclass anxiety and exam performance was substantially stronger for paper exams.

On the end-of-semester survey, students (n = 114) provided written comments about their perspective on online tests. Table 2 groups these comments into categories and reports each category as a percentage of all student comments (some students commented on more than one topic).

Table 1. Correlations (r values) between average exam scores and preclass trait anxiety for male and female students.

Table 2. Percentage of student comments in each category.

Discussion

For the first research question, “Do computer-based exams affect overall student performance?” the answer appears to be no. Students had statistically indistinguishable mean scores on the two exam formats, and there was no trend in either direction suggesting even a slight advantage or disadvantage for computer exams.

For the second question, “Do computer exams disproportionately impact various subgroups of students in our classes?” again the answer appears to be no. We found no evidence that exam format affects students differently based on their gender, race, first-generation status, or English as a second language status. Although we did not analyze our data for an overall effect of demographic group on exam scores (as this was not the focus of this study), there is a consistent trend in our data toward lower scores in underrepresented demographic groups. This trend has been reported elsewhere (e.g., Canning et al., 2019; Alexander et al., 2009), and evidence-based strategies have been reported to reduce these types of gaps (e.g., Casad et al., 2018; Haak et al., 2011). That students were not disproportionately affected by computer exams means only that this exam format does not exacerbate performance gaps; it does not remove the need to address the gaps we observed.

The third question, “How do computer-based exams impact the student experience of taking the exam in terms of feelings of anxiety during the exam?” has two parts, based on the distinction between state anxiety (measured immediately following each exam) and trait anxiety (measured by the presemester survey). For state anxiety, students reported indistinguishable levels of anxiety after the two types of exam, and this pattern did not vary by student gender. For trait anxiety, however, the relationship between this variable and performance on the two types of exam did vary by gender. Trait anxiety had a similar negative relationship with the performance of male students on paper and on electronic exams, but for female students, trait anxiety was more strongly negatively related to performance on paper exams than on electronic exams. This result may indicate that moving to electronic exams could remove a barrier to the performance of female students with higher levels of anxiety.

For the fourth question, “What is the effect on student perception of the ease of student academic misconduct (cheating) during exams when switching to computer exams?” the data do not clearly indicate that either format makes it easier to cheat. In trying to assess academic dishonesty, we were not confident that students would report their own conduct accurately, so we used student perceptions of academic dishonesty as a possible surrogate for actual dishonest activity. From our observations of student behavior during the exams, we are confident that the vast majority of students were not engaging in academic dishonesty, but we recognize that students may be more aware of suspicious activity around them than the exam proctors are. Most students did not perceive either format as easier for cheating, and slightly more students indicated that it would be somewhat or much easier to cheat on paper exams. We are cautiously reassured by these results: there is no indication that introducing computer exams created a new, easy way to cheat.

One unexpected finding was that students took significantly less time to complete the computer exams than the paper exams. This result contrasts with Bayazit and Askar (2012), who found that students spent more time on computer-based exams, and time differences were not noted in other direct comparisons that we found in the literature (e.g., Boevé et al., 2015; Frein, 2011; Fluck, 2019; Backes & Cowan, 2019). The shorter average time spent on computer exams was consistent across all of our exams. This result could reflect the computer exam format itself or the somewhat awkward task of finding and filling in the proper bubbles on the paper answer sheets. The delay between finishing the exam and turning in the paper exams (due to students gathering their coats, for example) could account for a portion of the observed difference, but several students commented that they noticed and appreciated that the computer exams were faster.

Study limitations

During the first and (to a lesser extent) the second of the eight exams included in this study (four exams in each of two semesters), there was a technical issue in which figures embedded in exam questions did not display in the computer format. This issue was handled on the spot by distributing blank paper exam copies and allowing students to look at the figures on paper. This slowed the computer-based exam takers down somewhat compared to other electronic exams but did not measurably increase the state anxiety they reported after the exam. That we observed shorter computer exam times and equivalent state exam anxiety in the computer testing group despite this mishap increases our confidence in the stability of these trends.

According to the written comments, concerns and anxiety about technical issues (whether realized or not) during a computer exam are significant. As just noted, during our first two exams using the computer format, images did not load on the computer. This situation was stressful, and the stress was reflected in the student comments (though not in the state anxiety measures). The comments also indicated that the mere potential for technical problems was a significant source of stress: students were worried about what would happen if their battery died or their internet connection was lost. Both issues suggest that faculty adopting computer exams need to be ready to work with students when technical issues arise and should invest time in explaining to students what will happen if a battery fails or an internet connection is lost. We believe some anxiety will be relieved if clear policies are in place, extra time is allowed in case of technical difficulties, and students can trust that they will not be disadvantaged by such difficulties.

The tests used in this study were all multiple choice. We are not sure whether the results observed in this study could be generalized to other exam types, such as exams requiring extensive calculations or essay exams.

This study was conducted in the academic year covering fall 2018 through spring 2019. This was prior to the massive shift to online learning that happened in spring 2020 because of the COVID-19 pandemic. This study tested the effect of in-classroom computer exams as opposed to the at-home computer exams that many university courses implemented in spring 2020. We do not know how generalizable these results are to at-home exam formats. In particular, in this study, there was no audio or video proctoring enabled. After the COVID-19–related large-scale switch to online and remote proctoring, students and others have voiced concerns about the impact and accuracy of remote video and audio proctoring and have highlighted racial biases in some of the surveillance programs (e.g., Mangan, 2021; Parnther & Eaton, 2021). From personal experience, student anxiety about potential technical issues and being falsely flagged for cheating was a significant issue during at-home exams, so some of the strategies suggested in the following section may also be helpful in a fully distance learning environment, but care should be taken not to extend the results reported here to remote surveillance exam techniques without further study.

Recommendations

Based on our experience implementing and studying the implementation of in-class computer exams, we have compiled some suggestions for faculty considering using computer-based exams. Where possible, we recommend that faculty do the following:

- Make sure there are written instructions on the computer format posted to the learning management system (LMS) page. Instructions should include how to install any required software (e.g., remote proctoring software) and how to get technical support.

- Prior to the exam day (but not too far in advance, as software updates can affect functionality), have students complete a zero-stakes practice quiz using the same settings that will be used for the actual exam.

- Clearly explain what will happen in the case of common technical difficulties (internet connection lost, laptop battery failure) in a way that instills confidence that students will not be disadvantaged if these problems occur.

- Build in extra time for the exam in case of potential technical difficulties.

- Have paper copies of the exam ready in case of trouble. We suggest having a full class set of paper exams for the first implementation. Once the computer testing has been successfully run, we suggest having about 10% of the class number available in paper copies.

- Have loaner laptops available. We found having around five laptops for a class of 130 (65 of whom were taking the test on computer) was sufficient to account for forgotten computers, chargers, and other technical issues.

- Have several people in the testing room with technical skills in the LMS and end-user support, especially on the first exam. These could include undergraduates who have been trained to troubleshoot common exam issues.

Conclusions

We found no evidence of computer testing negatively impacting student performance, including for groups that are currently underrepresented in the sciences. With regard to exam anxiety, we found no effect of exam format on state anxiety in the exam-taking situation, and we found a weaker relationship between trait anxiety and exam performance for female students when taking electronic exams, suggesting that the computer-based exam does not worsen test anxiety and may even lessen its effects for some students.

Based on these data, we cautiously propose that in-person computer testing can be effectively and equitably implemented in introductory courses. Given the relative ease of implementing computer exams and the flexibility of some of the computer exam platforms, we envision that using computer exams might enable the implementation of more evidence-driven methods to improve assessment, such as (a) increasing the number of assessments (thereby reducing the stakes of each individual assessment; Cotner & Ballen, 2017); (b) increasing the diversity of question type (with regard to Bloom’s hierarchy and mechanics of questions); and (c) allowing for immediate feedback on exam performance (Kulik & Kulik, 1988). We feel that computer testing can be used as an effective tool for assessment in college courses, and we find no evidence that the implementation of computer tests is disadvantageous for students underrepresented in the sciences or those who experience exam anxiety.

1. Demographic data used in this study derive from institutional sources, rather than from student self-reports. It is well established that gender does not exist as a binary variable but rather as a spectrum of identities. Unfortunately, at the time of this study our institution included only male and female options on its application, so we are limited to reporting only male and female as genders, which may mask other identities.

Deena Wassenberg (deenaw@umn.edu) is a teaching associate professor in the Department of Biology Teaching and Learning, J.D. Walker (jdwalker@umn.edu) is a research associate in the Center for Educational Innovation, Kalli-Ann Binkowski (kbink@umn.edu) is an academic technology coordinator in Research and Learning Technologies in the College of Biological Sciences, and Evan Peterson (evanp@umn.edu) is an instructional technologist in Research and Learning Technologies in the College of Biological Sciences, all at the University of Minnesota in Minneapolis, Minnesota.
