Model, Assess, Repeat!

A rubric for assessing student understanding of models in grades 3–5

When teachers first wrap their heads around scientific modeling, it can be a bit tricky to distinguish scientific models from diagrams and three-dimensional models. If a fifth grader draws and labels a food web, it’s a model. Right? Not necessarily. According to the Framework, students’ scientific models are intended “to represent their current understanding of a system (or parts of a system) under study, to aid in the development of questions and explanations, and to communicate ideas to others” (NRC 2012, p. 57). The key idea here is system. When creating a model, students should be representing a system inspired by an inquiry or phenomenon. Because models represent their current understanding, students’ models will differ from each other. Additionally, the models will change throughout the unit of study as students’ conceptual understanding matures. If students draw a textbook-perfect food web diagram, memorized from class resources, they’re not yet making a model. But a food web drawing with arrows and labels explaining what students think is happening between plants and animals, perhaps revised and changed as they learn more, is a scientific model.

After exploring digital models for a couple of years with my third graders, I began to wonder how I should assess and grade them. A shift in teaching has teachers assessing students through science and engineering practices (SEPs) and crosscutting concepts (CCCs), but it is not always clear how to do so. My first attempt at creating rubrics included only the science concepts from the unit. I realized this one-dimensional, disciplinary core idea (DCI) assessment didn’t give a full picture of student learning. To remedy this, I undertook the task of creating a more three-dimensional rubric to assess students’ digital models. I wanted a way to assess students’ conceptual understanding and their progress on the components of modeling, as well as their connections to the crosscutting concepts.

Literature about modeling and rubric creation informed development of this rubric along with heavy reliance on Appendix F (NGSS Lead States 2013) and the NGSS Evidence Statements (see Internet Resources). I used samples of student-created digital models alongside the literature to create rubric descriptors. A completed version of the rubric was sent to educators well versed in NGSS and technology integration. I used their feedback to make the rubric more user-friendly and clear (see Table 1 for a sample of one rubric component; the entire rubric is shared online; see NSTA Connection). The biggest change was the addition of student sentence frames to support modeling skills. I tested the rubric and sentence frames in my classroom. The sentence frame additions were immediately helpful, pushing students to higher levels of achievement in their models. In this article, I describe the digital modeling activity my students completed and then explore ways to use the rubric to assess their understanding.

Appendix F of the Next Generation Science Standards (NGSS; NGSS Lead States 2013) shows a clear progression for developing and using models from grades K–12. When K–2 students create science models, the NGSS ask students to make the connection that their model is a representation of something in real life. In grades 3–5, students begin revising their models to show changes in understanding over time (plus using them to make predictions and identify limitations of the model).

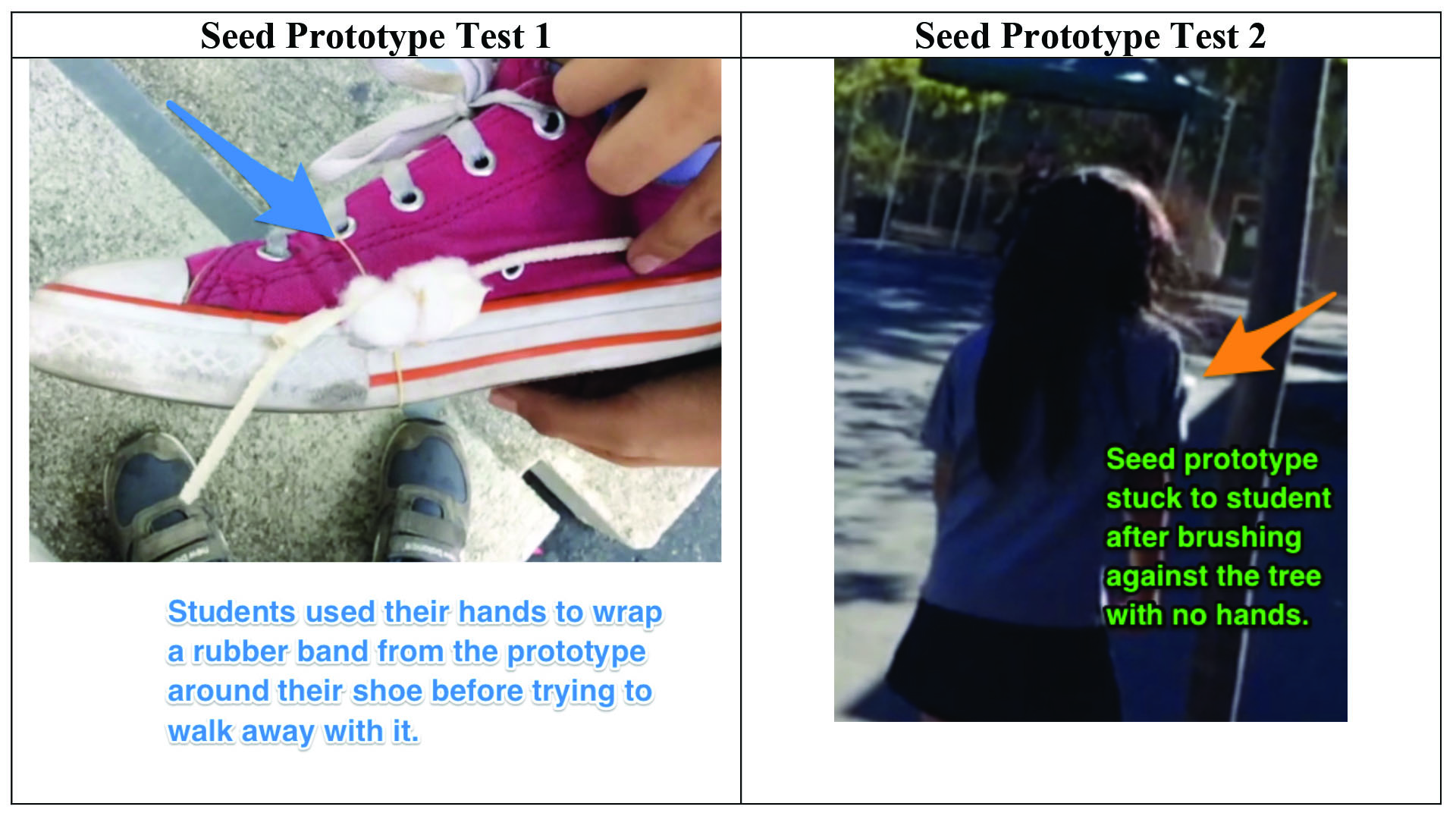

One common practice is to have students create initial models on whiteboards or paper using a single-color pen. After students explore a concept further, they revisit and revise their model in a different color pen. For example, every group in class might draw their initial models in a black pen, add their first revisions in a green pen, their second revisions in a blue pen, and so on. This allows both students and teachers to see how ideas change or thinking deepens. Students cannot erase initial ideas, but can strike through them as their understanding changes. This repeated cycle clearly shows students’ thinking and changes to their thinking.

Models can also be developed digitally. Apps like Educreations, ShowMe, or Explain Everything show a snapshot of student models on an interactive whiteboard slide. When students want to revise their model, they create a new slide. Eventually slides are combined and published as a single video. The final video showcases students’ thinking over time, and also allows students to access more tools to explain their thinking. For example, Figure 1 shows screen captures of third graders’ digital model showing a seed prototype they created, tested, revised, and tested again. They included video recordings of tests of their prototype in addition to photos explaining the prototype parts. Audio recordings are one of the most helpful modeling tools. Young students usually share more about their thinking through talking than through only drawing, labeling, and writing. Miller and Martin (2016) discuss how using multiple mediums (various digital tools) in digital science notebooks is especially helpful for young students to express their thinking. The Miller and Martin article also likens students’ audio recordings of their scientific thinking to the rehearsal stage of writing in the Writing Workshop model. Once a science unit is completed, students create a final slide explaining their most up-to-date understanding. Students then download the screencast as one complete video and share it with the teacher (via email, AirDrop on Apple products, or by uploading it to a classroom page such as Seesaw, ClassDojo, or Google Classroom). For more resources around creating digital models, see Internet Resources.

How to Use the Rubric

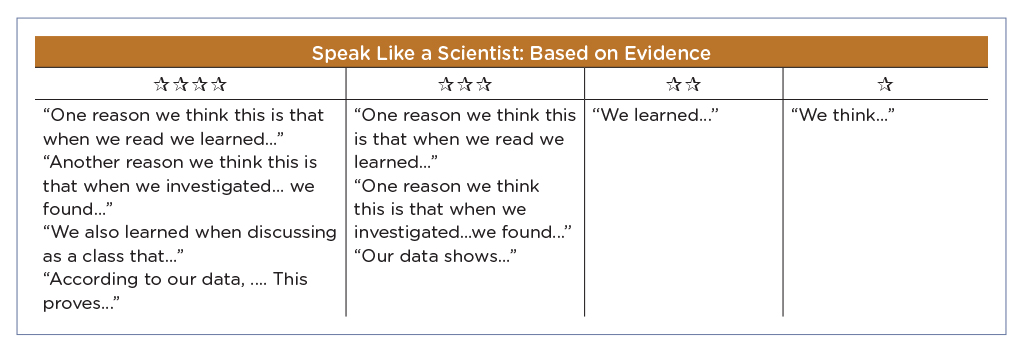

My first caution is not to get overwhelmed by the number of categories. The rubric includes everything in the grades 3–5 progression for modeling, which takes the entirety of the grade span for students to master, meaning third graders won’t have mastered all of the categories, even by the end of the year. I recommend teachers choose particular parts of the rubric on which to focus, instead of trying to address all components, especially when students are creating initial models. Once teachers have prioritized which categories/rows they want to address, they can teach the corresponding sentence frame language to their students. Each time students revise by adding to their digital models, teachers can explicitly teach language either from the next higher proficiency level, or for another category in the rubric they want to emphasize. This helps increase the clarity and the thoroughness of students’ explanations. I usually list the sentence frames for a couple categories on the board, using stars instead of 1, 2, 3, or 4 to show students how they can increase their sophistication of explanations (see Table 2).

Teachers can then focus on tailoring the rubric to the specific DCIs being taught by listing the key DCI components to be described in the model in the Components box of the rubric. (There is space just beneath the category title to list the key components.) Teachers can find these components in the NGSS Evidence Statements (NGSS Lead States 2013) if the DCI is part of a performance expectation that includes modeling. Otherwise, teachers can list key components by choosing the most important vocabulary, concepts, or relationships the model should explain. It is important to emphasize the key components of the DCI in instruction and for students to include them in their models. When preparing to use the rubric, teachers can add categories/rows to the rubric to fit their needs. For example, they may want to add a row based on Speaking and Listening Common Core Standards, or a row for English Language Development standards if they are working on a particular speaking or grammatical skill that can be integrated.

Just as I couldn’t include details for a DCI/concepts row in the rubric, I couldn’t include details for a CCC row, because the CCC employed during instruction will be specific to that particular lesson sequence and rubric. While Cause and Effect or Patterns are incorporated in the Relationships row of the rubric, other CCCs must be added so that students and teachers are mindful of, and evaluate, all three dimensions.

Finally, I recommend not assessing Technology Used to Enhance the Model on students’ very first digital model attempt. Students need time when using a new app. Accidentally deleting slides and exploring new tools are par for the course. It will be important to eventually teach students to cut out blank or accidental recording time in their screencast, so that the teacher does not waste grading time listening to accidental recordings that do not explain the model.

This rubric can easily be used for paper/pencil models by teachers who aren’t ready to teach digital modeling or prefer hard copies of models. Simply exclude the Technology Used to Enhance the Model category.

Grading Student Work With the Rubric

Once the rubric is personalized for a lesson/phenomenon, it’s ready to be used to assess student understanding. Teachers will need to watch each digital model video several times, likely watching once for each category they are assessing. Scoring becomes more efficient as teachers get more practice with the rubric. Teachers should be sure students are creating models in reasonably sized groups (I usually use groups of four students): large enough that there are not too many screencasts to watch and assess, but small enough that all students have an opportunity to participate. The length can vary, but digital model explanations from my third-grade students typically last four to five minutes.

While watching and assessing the digital model videos at the end of the unit gives a summative view of students’ understanding, teachers can use the rubric formatively as well. Slides in the interactive whiteboard apps can be converted to video at any point in the process. With updates to the model in the beginning and middle of the process, the teacher can monitor and identify which sentence frames to emphasize or which scientific concepts need more exploration. Figure 2 shows an example of scoring with the rubric.

Value of the Rubric

After creating the final version of this rubric, I tested it on existing digital models from my third graders. Before creating this rubric, I had graded students’ digital models with simple rubrics that had categories only for each concept covered in the unit. Questions arose when focusing only on the concepts. What should I do about groups who left out entire concepts? What about when students named concepts but couldn’t explain relationships between them? Which concepts get graded when students’ conceptual understanding changes? By focusing only on conceptual ideas (what we used to think of as the “content”), I realized that key elements of modeling (and associated CCCs) were missing.

When I reassessed the student models using the rubric in this article, I was ecstatic! I got a much stronger, holistic assessment of students’ work. After re-grading several models for the class, I knew immediately which sentence frames from the rubric I would focus on the next time we created models. I knew which categories were lacking and would need my focus during instruction in future units. The most important outcomes of using this rubric are that students have a tool to support their developing modeling skills, and teachers gain immediate insight into what they need to teach their students next to increase students’ three-dimensional science understanding and skills.