A Short, Course-Based Research Module Provides Metacognitive Benefits in the Form of More Sophisticated Problem Solving

By Caroline L. Dahlberg, Benjamin L. Wiggins, Suzanne R. Lee, David S. Leaf, Leah S. Lily, Hannah Jordt, and Tiara J. Johnson

This article describes how a short, course-based research module that provides metacognitive training in a high-enrollment biology course enhances complexity of student responses to a research-related problem-solving exercise.

Although completion of an undergraduate degree in STEM (science, technology, engineering, and mathematics) can take more than 5 years, there is no guarantee that STEM graduates will encounter courses that directly facilitate the development of problem-solving and research skills that are expected of professionals with degrees in STEM fields (President’s Council of Advisors on Science and Technology, 2012). In part, this is because traditional STEM courses often prioritize memorization of factual content and proper execution of lab techniques, rather than helping students to build skills that enable creative and constructive problem solving (American Association for the Advancement of Science, 2011; Brownell, Freeman, Wenderoth, & Crowe, 2014; Hoskinson, Caballero, & Knight, 2013; Lawson, Banks, & Logvin, 2007). One important problem-solving skill is the ability to use metacognition to self-reflect and recalibrate one’s actions. In scientific research, metacognition is regularly used through the process of choosing a research question, designing hypotheses, and running and analyzing experiments (Tanner, 2012). Despite its importance, metacognition is often implicitly conveyed, rather than directly taught, through extended apprenticeships in a research setting or graduate school (Schwartz, Tsang, & Blair, 2016).

At the undergraduate level, students can acquire research experience by participating in research projects led by science faculty or by participating in course-based undergraduate research experiences (CUREs; Kloser, Brownell, Chiariello, & Fukami, 2011; Lopatto, 2007). Faculty-led research in a laboratory is beneficial to students’ identities as scientists and often leads to interest in further scientific education (Brownell & Kloser, 2015; Gregerman, Lerner, von Hippel, Jonides, & Nagda, 1998; Russell, Hancock, & McCullough, 2007). However, independent research experiences are unavailable to many students due to a number of factors, including limited faculty laboratory space, funding, and mentoring time; limited student time; and the challenges of navigating academic hierarchy to find research opportunities. The latter two impediments are most salient for students from traditionally underserved populations and first-generation college students (Kuh, 2008). To mitigate these barriers, many undergraduate institutions work to bring research into their curriculum through CUREs (Brownell & Kloser, 2015; Hurtado, Cabrera, Lin, Arellano, & Espinosa, 2008; Kloser et al., 2011; Wei & Woodin, 2011). Although implemented over shorter time scales, CUREs typically incorporate several aspects of independent research, including investigation of the unknown. Students benefit from their CUREs by building stronger identities as scientists and by connecting content from previous coursework to “real life” experiences (Brownell, Kloser, Fukami, & Shavelson, 2012). It is important to note that, as is the case when students are involved in faculty-led research, CUREs offer a good venue for teaching the principles of metacognition in problem-solving in research early in students’ scientific development (Wei & Woodin, 2011).

Large-scale CUREs such as SEA-PHAGES and the Genomics Education Partnership (GEP) are examples of multi-institution CUREs or inclusive Research Education Communities (iRECs) that enable thousands of students to participate in research projects (Hanauer et al., 2017; Jordan et al., 2014; Shaffer et al., 2010; Staub et al., 2016). Single-institution CUREs are also valuable because they allow students to participate in relevant research at their own institution (Auchincloss et al., 2014; Brame, Pruitt, & Robinson, 2008; Brownell et al., 2015; Brownell et al., 2012; Jordan et al., 2014; Wiley & Stover, 2014). Yet in some departments and academic programs, developing new, free-standing CUREs is logistically complicated, and such courses can be difficult to insert into or renovate within existing STEM curricula (Bangera & Brownell, 2014; Shortlidge, Bangera, & Brownell, 2017). An attractive alternative is inserting research experiences as short modules into existing curricula (Howard & Miskowski, 2005). These shorter experiences could increase the depth of learning in STEM without introducing entirely new courses or completely redesigning existing courses.

It is not known how a short, embedded module might impact the development of student problem-solving skills, nor how research experiences in courses of any length could affect metacognition in biology students. We were thus interested in probing whether a short, modular CURE can alter student approaches to problem solving. If short modules can introduce and help hone metacognitive skills, as measured by changes in approaches to problem solving (Dye, Stanton, & Tomanek, 2017; Schraw, Crippen, & Hartley, 2006; Stanton, Neider, Gallegos, Clark, & Tomanek, 2015), it may be simpler for some programs to consider embedding them as part of an existing curriculum. If short modules are not sufficient to catalyze gains in student skills, then larger curricular redesign into course-length research experiences remains necessary.

As described here, we embedded a 3-week research module into a large-enrollment introductory Cell and Molecular Biology course (see Supplementary Document S1: Overview of Goals and Activities in the Authentic Research Module, available at ). Students were presented with a biological research problem: What might be the cellular effects of changes in DNA replication machinery? To enable students to address this question, our module provided a framework for meaningful experimental design, experimentation, and analysis in a format designed to spark metacognition and to run during part of an academic quarter.

Students who participated in the research module gave more sophisticated responses to a task-based problem-solving question that required self-direction and self-reflection compared with students who participated in a series of standard “cookie-cutter”-type laboratory activities. Moreover, through focus group interviews with students in the research module, we found that the opportunity to make independent choices was an important aspect of our research module, which helped students feel engaged and invested in the research experience. Together, our data suggest that even short research modules can provide measurable benefits to students.

Methods

This research was conducted at Western Washington University, a midsized regional university that serves ~14,500 undergraduate students, and ~700 master’s degree students. The 3-week research module ran during the last 3 weeks of a 10-week introductory Cell and Molecular Biology course. For this course, there are three classroom/lecture sessions per week, with an associated laboratory once per week. Two or three concurrent sections of 72–96 students run every academic quarter, and students are divided into laboratory sections of 24 students. Biology faculty lead the classroom sessions for each section (different instructors for each section), while master’s degree graduate teaching assistants (GTAs) from the Biology or Environmental Science departments lead the laboratory sessions. Annually, approximately 700 students participate in the course, which is a prerequisite for a variety of life science–related majors, and is open to nonscience majors.

IRB exemption approval was awarded under #EX16-023. Students were given extra-credit points for responding to a task-based question and for completing an online pre–post survey.

Research module intervention

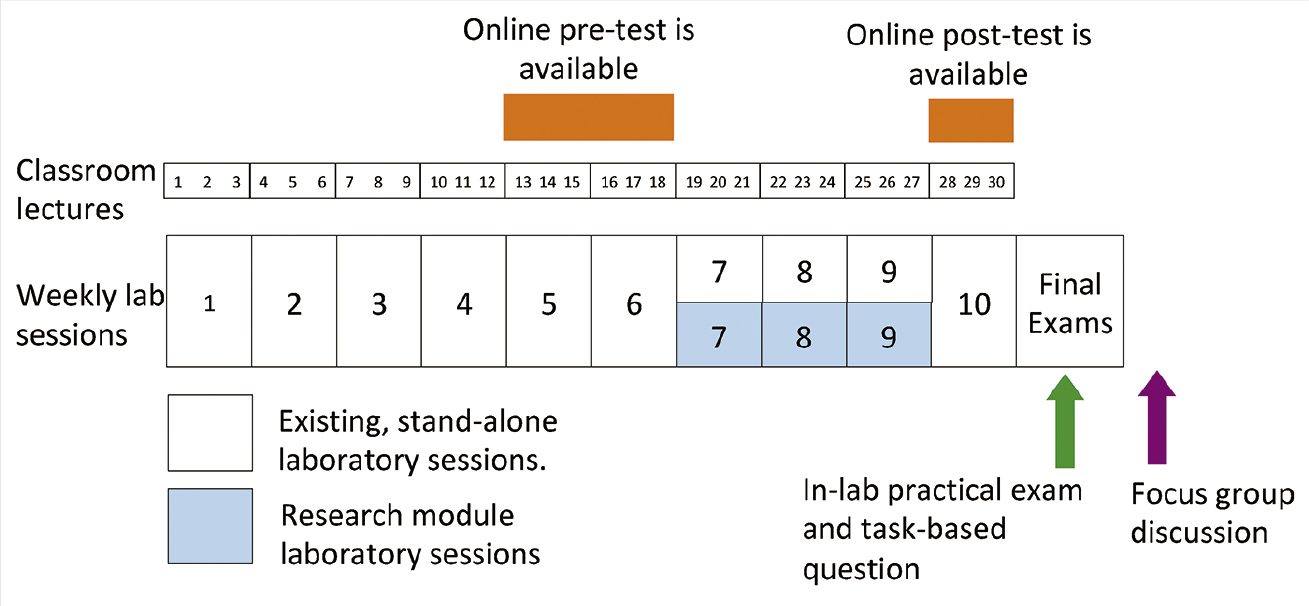

Students participated in either a short research module (79 participants), or in preexisting “recipe-like” technique-oriented laboratory sessions (73 participants) as part of their 10-week course. We introduced the short research module in one section of the course (see Supplementary Document S2: Description of the Research Module; also in revision, CourseSource; available at ), while the other section of the course ran without the intervention. For the first 6 weeks of the quarter, all laboratory sections used a laboratory manual that provided “recipe”-like experimentation, observation, and/or data collection, with minimal data analysis (Figure 1). The research module, which focused on the consequences of overexpression of a gene that is required to initiate DNA replication, ran during the last 3 weeks in one section of the course and included a computer-based DNA sequence analysis lab (see Supplementary Document S1: Overview of Goals and Activities in the Authentic Research Module, available at ); the other section of the course continued to use the original and topically similar laboratory manual, which included the same DNA sequence analysis lab, and one-day sessions on microscopy of mitotic chromosome structures and photosynthetic efficiency, respectively.

The research module required students to use prior knowledge of cell biology to formulate a testable hypothesis and design experiments to test their hypothesis to answer a research question. Throughout the module, prompts were provided to encourage students to actively self-reflect (“Where have you seen these genes before? How did you come to your conclusion?”) and to self-direct (“What tools will be the best for testing your hypothesis? What would a result of X mean with respect to your hypothesis?”) just as they would during scientific research (see Supplementary Figure S3: Metacognitive Prompts, available at ). Because learning metacognitive practices could be novel to many students, prompts were presented as low-stakes formative assessments and in-class discussion questions instead of more stressful summative assignments (Sawyer, 2006). Student grades were independent of formulating a preliminary “good” hypothesis or the “success” or “failure” of a student-designed experiment. To ensure that students took the metacognitive training seriously, students were graded on the completion and clarity of their responses to metacognitive prompts.

Task-based question

At the end of the quarter, students were challenged with a complex written problem related to laboratory experiments they had attempted in the course. The problem was presented as an extra-credit question on the laboratory practical exam; student responses to the question were graded on participation. The question consisted of a 168-word description of a research scenario that was written to be intentionally complex and ambiguous to solicit a wide variety of possible responses (see Supplementary Document S4: Task-Based Question, available at ). Increased complexity in the answers to this task-based question would be evidence of stronger metacognition (Schraw et al., 2006; Tanner, 2012).

Student responses were iteratively coded for a final set of 25 types of responses (see Supplementary Figure S5: Coded Answers to a Task-Based Question, available at ). Coders were blinded to treatment type and student identity. Interrater reliability on these codes was higher than 93%. The final codes were independently binned as complex, medium, or simple on the basis of the cognitive depth and understanding of research methods, intent, and troubleshooting. All student answers were then analyzed using this code to determine the number of discrete codes (total) and their complexity (simple, medium, complex) per student respondent. Total numbers of simple, medium, and complex codes were compared between students in the research module and traditional lab version of the course.
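The per-student tally described above can be illustrated with a minimal Python sketch. The code names and their bins below are hypothetical stand-ins (the actual 25 codes are in Supplementary Figure S5), and simple percent agreement is only an assumption about how interrater reliability might be computed; the text does not name the statistic used.

```python
from collections import Counter

# Hypothetical code-to-bin mapping; the real 25 codes are not reproduced here.
CODE_BINS = {
    "repeat_experiment": "simple",
    "ask_instructor": "simple",
    "check_reagents": "medium",
    "add_control": "complex",
    "revise_hypothesis": "complex",
}


def summarize_response(codes):
    """Count distinct codes in one student's answer, in total and per bin."""
    distinct = set(codes)  # each code counts once per respondent
    bins = Counter(CODE_BINS[code] for code in distinct)
    return {
        "total": len(distinct),
        "simple": bins["simple"],
        "medium": bins["medium"],
        "complex": bins["complex"],
    }


def percent_agreement(rater_a, rater_b):
    """Fraction of items to which two raters assigned the same code."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("rater lists must be non-empty and of equal length")
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return matches / len(rater_a)
```

Counting each code once per respondent matches the "number of discrete codes" wording; a chance-corrected statistic such as Cohen's kappa would be a stricter alternative to percent agreement.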

Focus groups

Focus group interviews are rich sources of qualitative data that allow researchers to access different points of view (J. Cameron in Hay, 2005). These rigorous conversations explore complicated experiential themes that impact the student experience but would be logistically impossible to control completely. For each of two focus groups, four to six students were recruited randomly from within each Introductory Cell and Molecular Biology course that used the experimental module. Additional targeted recruitment was used to ensure that each focus group had sufficient numbers of participants. Prompts for discussion focused on broad topics including “thinking like a scientist” and “learning how to think,” but students had leeway to explore and explain the themes they found most salient. These qualitative interviews were used to give depth to existing data, and we followed best practices for recruitment and interviewing, such as those described in Rubin and Rubin (2012). Note that our use of a sample of greater than 10% of the target population (students who participated in the experimental module) exceeds normal best practices. Transcripts were analyzed for themes of participation and metacognition and suggestions for improvement in the design of the lab module as in Wiggins et al. (2017).

Pre–post survey

A 26-question online Likert scale survey was administered to the participants from the control and intervention groups. Survey items probed student identification with statements on research, metacognition, and science education. Some of the questions were from the previously validated Colorado Learning Attitudes about Science Survey (see Supplementary Document S6: Pre–Post Survey Questions, available at ); others were novel but were confirmed for readability and concept clarity by students similar in level at the university to those who participated in this study. All students completed the presurvey before the beginning of the authentic research module (Figure 1). The corresponding postsurvey was completed within one week of completing the authentic research module (Figure 1). Gains in score between the pretest and posttest for Questions 1 through 24 were averaged per student. Questions that were negatively worded were reverse coded such that a gain always indicated an increased positive attitude toward that response item. We used a linear regression model to compare the average gain per student in the control and intervention groups. AIC (Akaike’s information criterion) was used to compare this model to one controlling for the impact of the instructor to determine which model best fit the data. Using AIC we also confirmed that the model fit was not improved by a censored regression (which accounts for ceiling effects should they exist). The model was fit in R version 3.3.3. The censored regression model was fit using the “censReg” R package version 0.5-26 [R (≥ 2.4.0), maxLik (≥ 0.7-3)] (Arne Henningsen, 2017; retrieved from ).
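As a rough illustration of the scoring and model comparison described above, the following Python sketch computes a reverse-coded average gain per student and fits a regression of gain on a 0/1 treatment indicator. It is not the authors' R code: the 5-point scale, the function names, and the item indices are assumptions made for illustration only.

```python
import math

SCALE_MAX = 5  # assumption: Likert items scored 1..5


def reverse_code(score, scale_max=SCALE_MAX):
    """Flip a negatively worded item so that higher always means more positive."""
    return scale_max + 1 - score


def average_gain(pre, post, negative_items=frozenset()):
    """Mean post-minus-pre gain across items for one student."""
    if len(pre) != len(post) or not pre:
        raise ValueError("pre and post must be non-empty and of equal length")
    gains = []
    for index, (before, after) in enumerate(zip(pre, post)):
        if index in negative_items:
            before, after = reverse_code(before), reverse_code(after)
        gains.append(after - before)
    return sum(gains) / len(gains)


def fit_treatment_model(gains, treated):
    """Least-squares fit of gain on a 0/1 indicator: (intercept, beta, aic)."""
    control = [g for g, t in zip(gains, treated) if t == 0]
    module = [g for g, t in zip(gains, treated) if t == 1]
    intercept = sum(control) / len(control)
    # With a single binary predictor, the slope is the difference in group means.
    beta = sum(module) / len(module) - intercept
    rss = sum((g - (intercept + beta * t)) ** 2 for g, t in zip(gains, treated))
    n = len(gains)
    k = 3  # intercept, slope, residual variance
    # Gaussian AIC up to an additive constant shared by models fit to the same data.
    aic = n * math.log(rss / n) + 2 * k
    return intercept, beta, aic
```

A model with an added instructor term would be compared by computing its AIC on the same data and preferring the lower value, which is the comparison the text describes.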

Results

Assessment of impacts: Task-based question

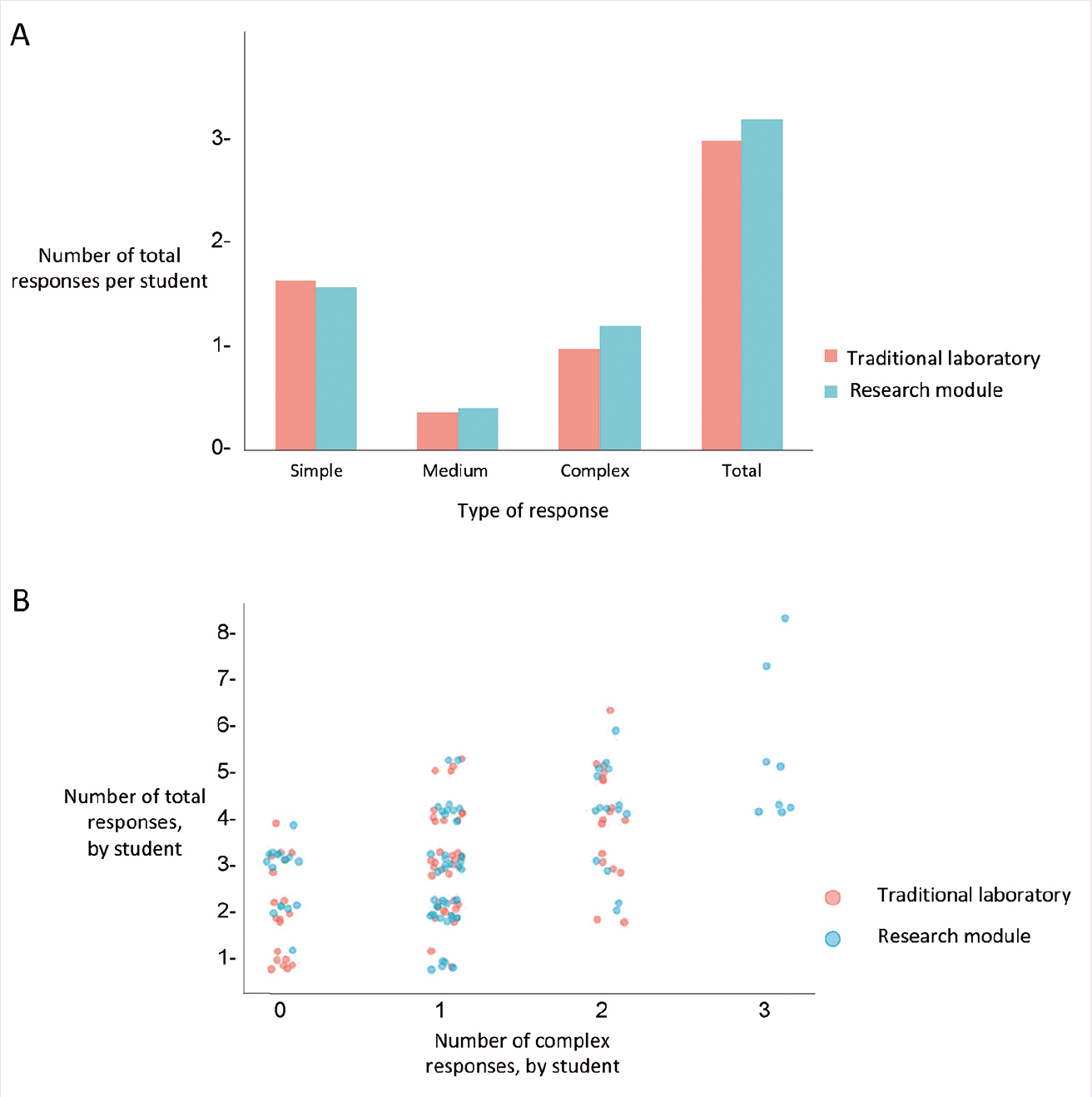

To directly assess whether students were more likely to solve problems differently after the research module, we provided students with a fictitious, but realistic, scenario representing an ambiguous and complex laboratory problem. Students were then asked to identify what they might do to overcome this problem. After transcribing and coding written student responses to this task, 25 student codes were binned into simple, medium, or complex, based on cognitive engagement with the material. All student answers were then analyzed using this code to determine the number of discrete codes (total) and their complexity (simple, medium, complex) per student respondent (Table 1). Students who took the research module tended to contribute a greater average number of response codes, though this was not significant at an alpha of 0.05 (3.16 codes per written response compared with 2.96 codes for the control group, p-value = .1, using a standard two-tailed t-test). Students in the research module contributed more complex responses (1.19 codes per response compared with 0.97 for the control group, p-value < .03, using a standard two-tailed t-test; Figure 2A). Although there were students from both the intervention and the control groups who contributed zero complex answers, only students from the intervention group contributed three complex responses; those students also tended to have higher numbers of total responses (Figure 2B).
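For readers unfamiliar with the comparison above, a two-sample t statistic on per-student code counts can be sketched as follows. This assumes the pooled-variance (Student's) form; the text says only "standard two-tailed t-test", so the variant is an assumption. The sketch stops at the statistic and degrees of freedom because the Python standard library has no t-distribution function for the p-value.

```python
import math
from statistics import mean, variance


def pooled_t(sample_a, sample_b):
    """Student's two-sample t statistic and degrees of freedom (equal variances assumed)."""
    n_a, n_b = len(sample_a), len(sample_b)
    df = n_a + n_b - 2
    # Pool the two sample variances, weighting each by its degrees of freedom.
    pooled_var = (
        (n_a - 1) * variance(sample_a) + (n_b - 1) * variance(sample_b)
    ) / df
    std_error = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (mean(sample_a) - mean(sample_b)) / std_error, df
```

The two-tailed p-value would then be read from a t distribution with `df` degrees of freedom, using a statistics package or a table.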

Table 1. Analysis of responses to the task-based question.

Assessment of impacts: Focus groups

To understand how students approached and experienced the research module, we recruited students for focus groups as outlined by Cameron (in Hay, 2005) and by Rubin and Rubin (2012). We specifically hoped to gain insight into how the research module impacted students, so we did not compare their answers with those of students who had not participated in the experimental module. Discussions with students who completed the research module coalesced around the major themes of openness, self-efficacy, and synthesis of course topics. Following are highlights of those discussions:

- Though only a limited number of opportunities for making research choices were given to students within the module itself, students felt that they had autonomy in their scientific decisions. When pressed, students revealed that the choice between only a small number of variables (two to four) would be enough to retain the feeling of autonomy and ownership over the experiment.
- Focus group participants expressed identification with (and in some cases pleasant surprise at) their ability to carry out a meaningful scientific experiment.
- We had expected to hear more discussion of troubleshooting as a salient example of metacognitive practice within the module, but students did not indicate that this was particularly important for them.
- Students also reported feeling more equal with their teaching assistants and supervisors, identifying as partners in a problem-solving team rather than participants in a course.
- This module was viewed as intentionally bringing together multiple topics. Students noted that their overall STEM knowledge and cross-topic conceptual understandings were most improved by instructor suggestions that were specifically focused on improving student execution of lab techniques.
- Students were strongly motivated to put extra effort into the project when they expected to make a real (if small) impact on professional science, and they did not report that examinations or grade pressure influenced their experience with the research module.

Assessment of impacts: Pre–post survey

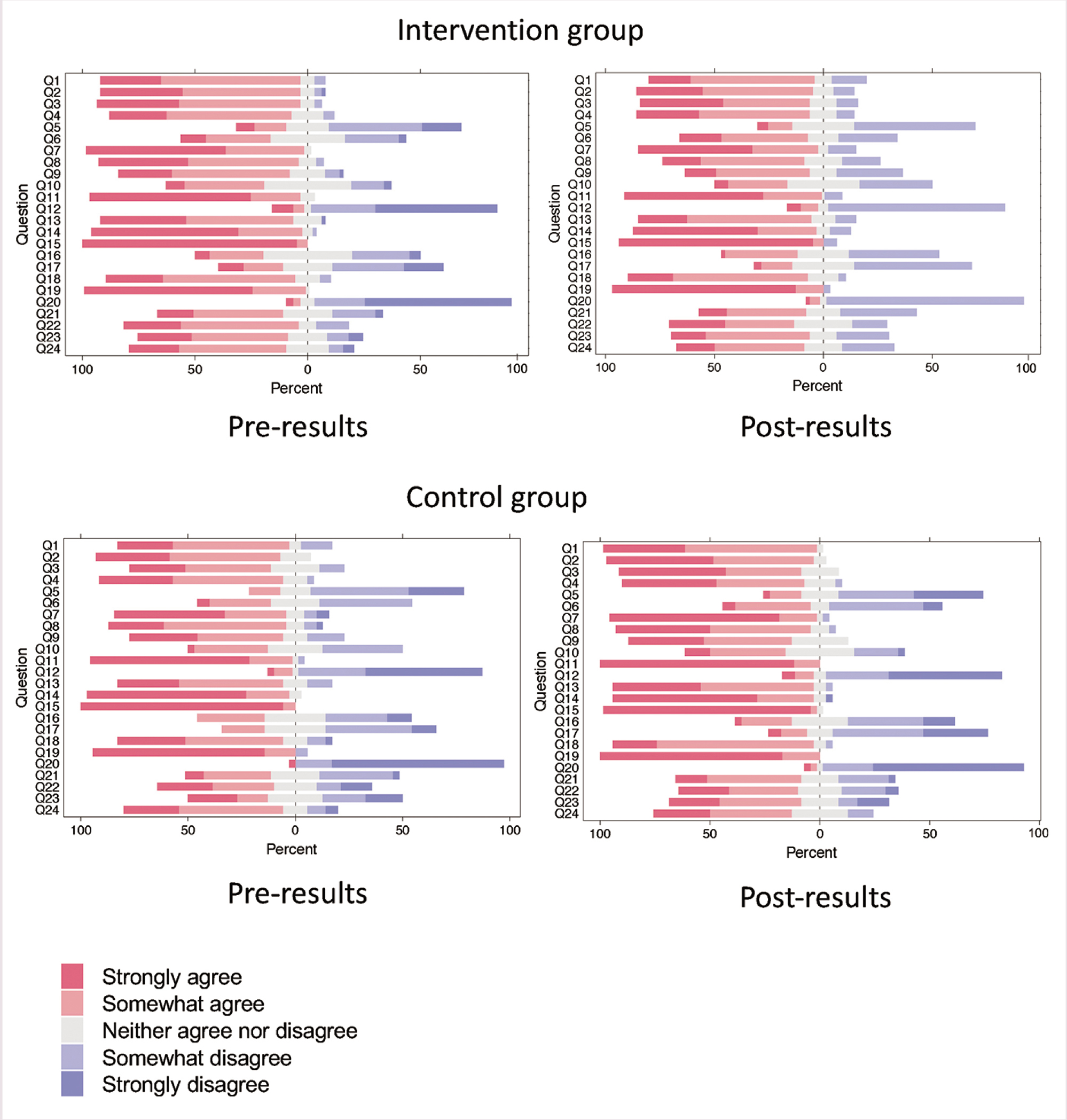

Students in the control and intervention groups were asked to complete an online survey before and after the 3 weeks that included the research module (Figure 1). Using linear regression, we determined that a student’s average gain per question was not impacted by participating in the authentic research module (β = 0.038 ± 0.044, p = 0.38). According to AIC (see Methods section), controlling for the impact of instructor improved the fit of our model to the data but did not affect our conclusion that the treatment had no impact on student gains on the survey (β = 0.019 ± 0.059, p = 0.75). Figure 3 shows the percentage of response types to each survey question for students who participated in the authentic research module (Figure 3, top panels) and those who did not (Figure 3, bottom panels).

Discussion

In the laboratory sciences, metacognitive skills are often built over time through apprenticed practice. Without the chance to participate in research projects during their college careers, undergraduates may not be introduced to, or find venues in which to hone, metacognitive skills. To introduce undergraduate students to metacognition in research, we developed a short CURE that ran as part of an existing introductory Cell and Molecular Biology course. Our research module includes metacognitive prompts that we expected could help train students in self-reflection and redirection during research (see Supplementary Figure S3: Metacognitive Prompts, available at ).

As seen from responses to a task-based question, students who participated in this research module showed more sophisticated approaches to problem solving in laboratory scenarios. Specifically, students who participated in the research module offered more complex problem-solving approaches than their counterparts (Figure 2), suggesting that they approached the task-based question with stronger metacognition (Schraw et al., 2006; Tanner, 2012).

Intriguingly, students who participated in the module did not significantly change their self-reported attitudes toward approaching problem solving in biology or the process of science, as judged from a pre–post survey (Figure 3), suggesting they may not be aware of any increased sophistication. One possible explanation is the divide between ability and self-perception of that ability (Dye et al., 2017; Kruger & Dunning, n.d.; Stanton, Neider, Gallegos, Clark, & Tomanek, 2015). Notably, the lack of differences in gains in student self-reported attitudes between the treatment and control groups suggests the research module did not negatively impact student attitudes.

Students who participated in the research module reported through focus group interviews that having choice in a research experience was important for their engagement. They also suggested that complete freedom is not a requirement, which is important for designing future modules that must have limited degrees of freedom to be logistically manageable. This is exciting because it provides evidence that students appreciate a realistic example of how and why many research laboratories focus on a few related questions. Future experimental data will be needed to characterize any correlation between freedom and positive student impacts.

We heard evidence of positive outcomes for self-efficacy, personal relevance, and synthesis across topics from focus groups of students who participated in the research module. All of these benefits have been observed previously in larger research experiences (Auchincloss et al., 2014). It is important that these benefits can be retained in smaller modules, because it suggests that more topics may be treated similarly without requiring wholesale course redesign. This is especially useful in the absence of curricular flexibility that would allow redesign of an entire course or suite of courses.

To summarize, our short research module serves as a potential model for others hoping to bring CUREs to large-enrollment courses. The module did not negatively affect student views on research or scientific identity, and it was successful in promoting deeper thinking toward open-ended problems. We anticipate that similar short modules providing course-based metacognitive training in additional biology courses taken by students could strengthen the effects that we saw. Introducing research to more advanced levels of the curriculum could also provide students with increasingly intricate research questions and enable students to go progressively deeper into the practice of research. In such a scenario, we would expect student gains to be even greater, which may ultimately make the gains apparent to students themselves.