Turning Tests Into Tasks

Learn how to build a summative performance assessment from a standardized test question.

Ten years ago, the award-winning educator James Manley called on science teachers to confront an increasingly standardized test–oriented climate with the courage of our conviction that inquiry-based science instruction promotes student learning (Manley 2008). Though today we speak in terms of the Next Generation Science Standards (NGSS) and three-dimensional learning (NGSS Lead States 2013), Manley’s challenge to fight for inquiry in the face of standardized testing still holds true. Fortunately, some states are beginning to build standardized tests that holistically integrate science and engineering practices, crosscutting concepts, and disciplinary core ideas (Achieve 2018). These new tests feature question sets that require students to apply their reasoning skills to a detailed problem-based scenario that is similar to a performance task. These “next generation” standardized tests hold promise for changing the assessment paradigm in science education. However, state-level shifts in testing move slowly, and many states remain committed to traditional test designs.

In the meantime, science teachers can reinvigorate their own assessment strategies by designing summative performance assessments to use in their own lessons. Performance assessments are “tasks conducted by students that enable them to demonstrate what they know about a given topic” (Flynn 2008, p. 33). These tasks not only increase the depth of insight that teachers have into students’ learning (Clary, Wanders, and Tucker 2014), but they can be especially transformative for how teachers evaluate students with limited English proficiency, as students can use multiple modes of communication (speaking, gesturing, interacting with physical materials) to demonstrate the true complexity of their scientific understandings.

Middle school science curricula often embed performance tasks within the investigations that students conduct during the Explore phase of a 5E lesson and have teachers use these tasks to formatively track student development. However, most curricula rely on traditional pencil-and-paper tests rather than performance-based approaches to evaluate student mastery of learning objectives at the end of a lesson. Because of this, teachers who want to use summative performance assessments often have to design their own tasks.

Creating a summative performance assessment from scratch can, at first glance, seem a bit overwhelming. Ironically, even traditional standardized test materials can serve as a starting point for teachers to design more sophisticated assessments, as many questions include actions or scenarios that can be adapted into student performances (Matkins and Sterling 2003). By using standardized test questions to inspire summative performance assessments, teachers can not only improve their evaluation of student learning, but they can also indirectly prepare students for success on state accountability measures (Flynn 2008).

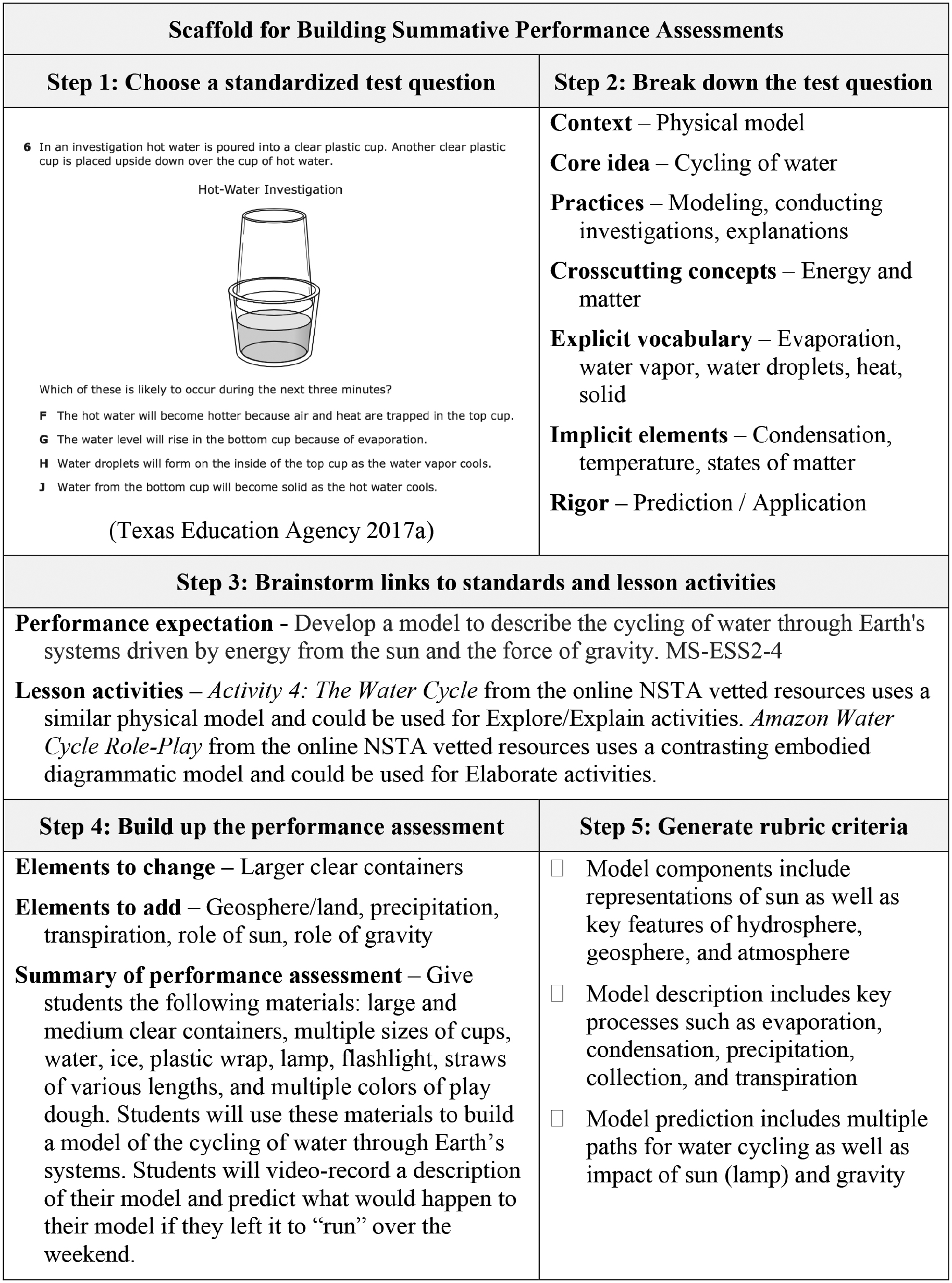

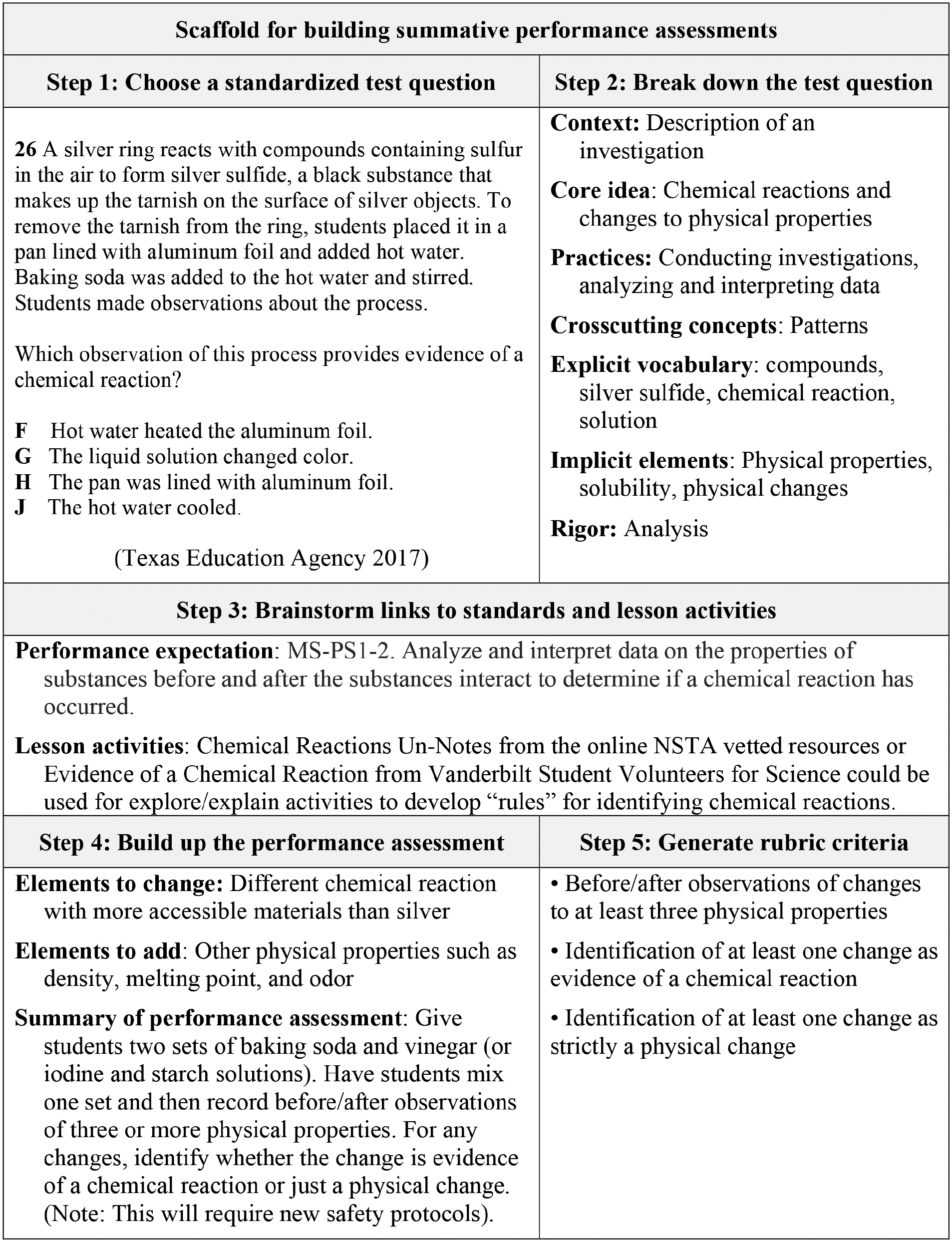

In this article, we share a five-step scaffold that we use to help teachers design their own summative performance assessments based on traditional standardized test questions (see Online Supplemental Materials). This scaffold uses the strategy of backward design (Wiggins and McTighe 1998) to build authentic classroom assessments.

Step 1: Choose a standardized test question for inspiration

Not every standardized test question is created equal. Some offer a stronger base for building performance assessments than others. We begin by looking for a question or collection of questions that includes a description of an activity or an investigation setup that we could adapt for a classroom setting. We also take note of any test items that include representations, pictures of physical models, complex contexts, or everyday experiences. These features can all be used to add depth to a performance assessment. Our eyes were immediately drawn to the water cycle test item analyzed in Figure 1 because it included a diagram of a physical model that we could replicate in a classroom. Likewise, the chemical reaction test item analyzed in Figure 2 caught our attention because the scenario in the question asked students to interpret observational data.

Although teachers may want to begin with standardized science tests designed by their state, district, or local curriculum, they should not feel bound to use only these resources. Often the questions in other state, national, or international science assessments address similar learning targets. Teachers can look for inspiration outside their own grade level as well. An item on a fifth-grade science test might include an everyday experience that can be adapted for use in a performance assessment for a seventh-grade lesson.

Step 2: Break down the test question

After finding a test question to inspire the performance assessment, we use multiple perspectives to dissect that question. This allows us to make sense of how test developers operationalized a given standard. It also enables us to start gathering features that we might want to include in our own performance assessment. Although there are many ways to break down a standardized test question, we focus on seven features: the context, disciplinary core idea, science and engineering practices, crosscutting concepts, explicit vocabulary, implicit elements, and rigor of action. We also note the extent to which the original test question assessed rote understanding of any of the three NGSS dimensions so that we can better integrate these dimensions in our revised performance assessment. The recently released “Criteria for Procuring and Evaluating High-Quality and Aligned Summative Science Assessments” (see Resources) offers detailed suggestions on how to design assessment tasks for authentic three-dimensional performance and can be a valuable tool for brainstorming how to integrate disciplinary core ideas, science and engineering practices, and crosscutting concepts instead of assessing them in isolation (Achieve 2018).

When we broke down the water cycle test item, we first noticed that the context of the question included a physical model that was described both in the text and in a diagram. We also saw that the test item aligned with the NGSS disciplinary core idea of water cycling; the crosscutting concept of Energy and Matter; and the science practices of Modeling, Conducting Investigations, and Developing Explanations. The explicit academic vocabulary used in the item included the terms evaporation, water vapor, water droplets, heat, and solid. However, students also needed to be fluent in other elements, such as condensation, temperature, and changes in the state of matter, that were embedded implicitly in the item but not overtly addressed. Finally, we noticed that the rigor of the action in this test item was fairly high, as it asked students to predict what would happen to the physical model over the next three minutes.

Step 3: Brainstorm links to standards and lesson activities

In this step, we shift our focus from analyzing the standardized test question to narrowing down what students should be able to do by the end of the lesson in which this performance assessment will be used. By aligning a question to a specific state standard or NGSS performance expectation, we can identify the elements of inquiry within the question that we might want to emphasize in students’ performances, as well as any important features that might be missing in the original scenario. For example, we found that the water cycle item aligns with the NGSS performance expectation MS-ESS2-4: Develop a model to describe the cycling of water through Earth’s systems driven by energy from the Sun and the force of gravity. Here, the original test question paired well with the modeling focus of the performance expectation, but failed to explicitly address the roles of the Sun in providing energy to drive the cycle and of gravity as the key force moving the water. Although many standardized test items are aligned by the test designers to one specific standard, we have found that the scenario underlying a question can often be used to build performance assessments that address additional standards as well.

During this step, we also research how the scientific understandings that are central to the test item have been supported in inquiry-based lesson activities within our district curricula or from trusted resources such as NSTA’s online NGSS Hub. This activity brainstorm provides inspiration for new scenarios, examples, and materials that could be used to transform the key features of a standardized test question into a student performance. It also challenges us to consider how the storyline of a lesson, from the lesson’s launch to students’ investigations to the final summative performance assessment, fits together. For the chemical reaction item, we used NSTA’s database of vetted classroom lessons, as well as a science lesson developed by the Center for Science Outreach at Vanderbilt University (see Resources), to find other chemical reactions that we could use safely in a classroom setting. During this brainstorm time, teachers often spontaneously think of new ways to infuse inquiry into other parts of their lessons as well. Consequently, we have successfully used this scaffold to reverse-engineer student performance tasks for small-group investigations and extension activities, as well as summative assessments.

Step 4: Build up the performance assessment

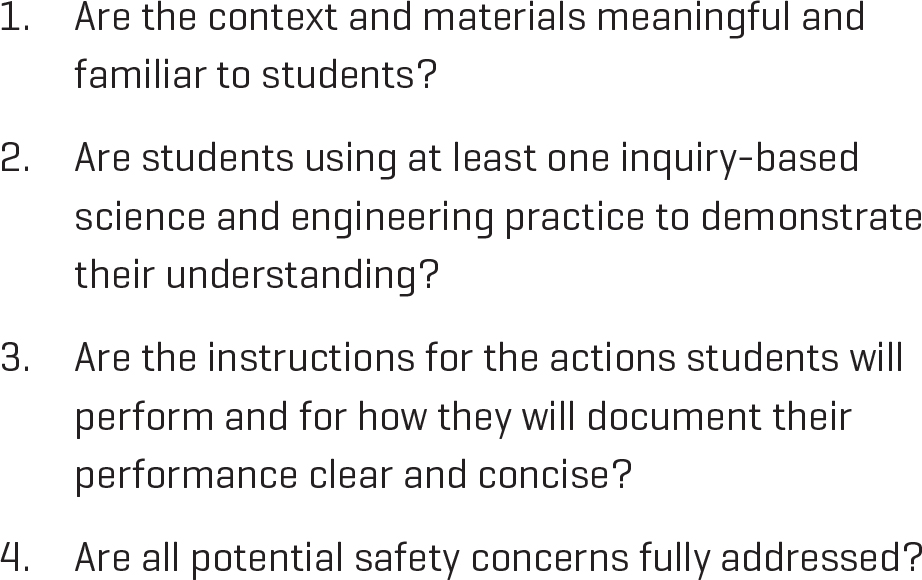

Building the summative performance assessment requires a balance of creativity and clarity in order to challenge but not confuse students. Here, we use the test item analysis from step 2 of the scaffold and the activity brainstorm from step 3 to narrow down which scenario, materials, science practices, core ideas, and vocabulary we want to emphasize. Often there are multiple ways a single test question could be transformed into an inquiry-based performance assessment. For example, the water cycle item could inspire an assessment in which students are given assorted materials and asked to construct a model of the water cycle and predict what would happen with that model over a series of days. Alternatively, students could be given a teacher-constructed model and asked to observe this model using their senses of sight and touch and to develop explanations for their observations. Both approaches can lead to strong performance assessments in which students use different practices of science to demonstrate their understanding of the water cycle. The scaffold includes initial space to brainstorm and summarize the assessment design. However, the final student instructions will likely need to be more detailed than this space allows. We have found the questions in Figure 3 useful during this step for self-assessing how we are designing our performance assessment.

Sometimes there are elements in the original standardized test question that will need to be changed or removed. Most often, this happens because the scenario in the test item is unsafe to duplicate within our classroom, the timescale of the phenomenon will not fit within the scope of a lesson, or the necessary materials are not readily available. For example, it might be difficult to gather enough tarnished silver to replicate the scenario presented in the chemical reaction test item. Because of this, when building a performance assessment based on this question, we might replace the original scenario with an alternative chemical reaction that also includes an unexpected color change (e.g., between a base and phenolphthalein). Whenever we swap out one material for another, we always double-check the safety protocols for the performance assessment to ensure that all the proper safety precautions are in place.

At times, elements also need to be added to the original standardized test question to better align the final performance assessment to a specific grade level or standard. For example, the model in the water cycle test item does not clearly include either the geosphere or the Sun. Because of this, we might add materials to the model, such as clay to form a landscape or a flashlight to represent the Sun, so that students could demonstrate their understanding of these aspects of the water cycle in their performance.

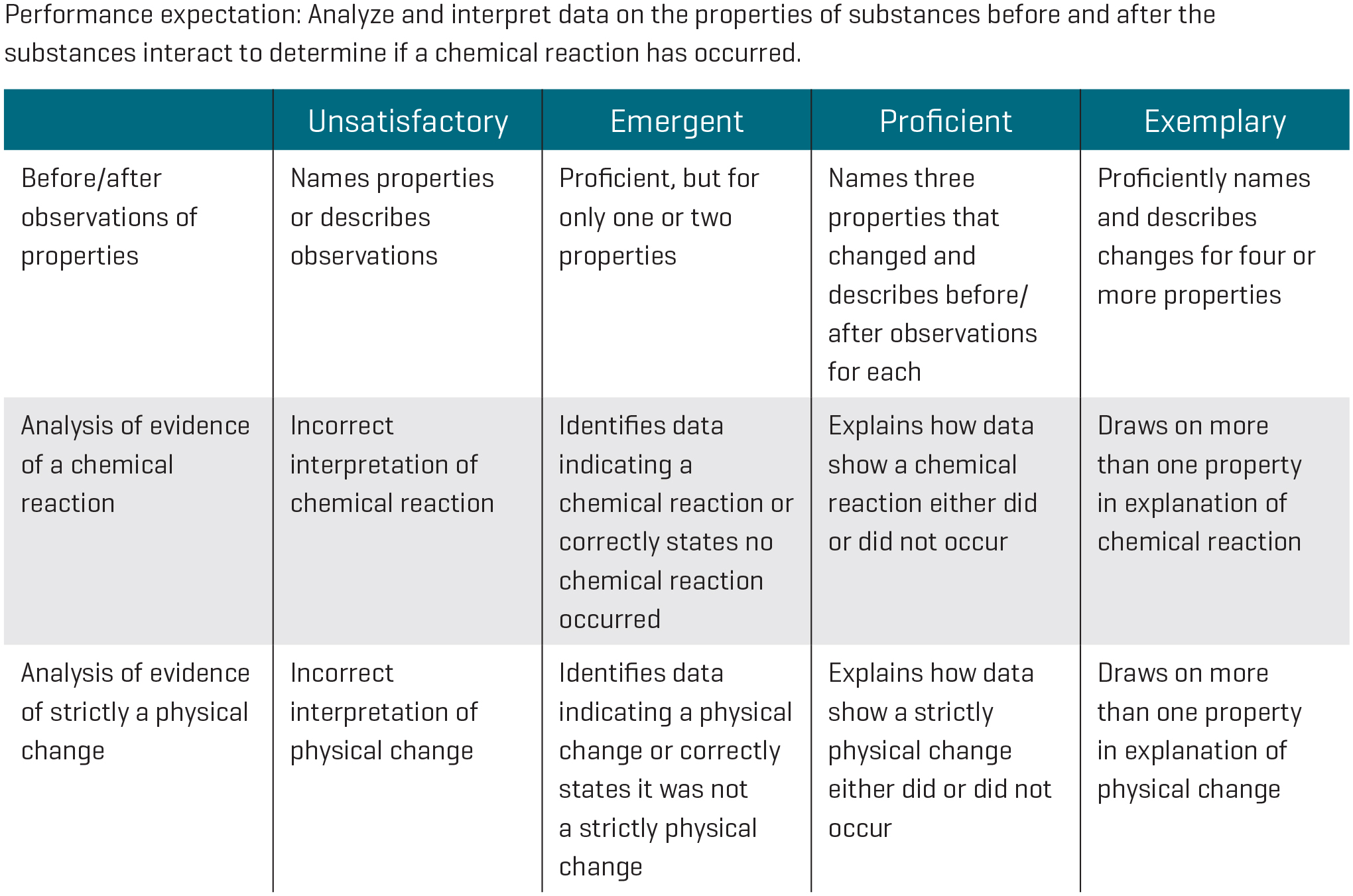

Step 5: Generate rubric criteria

Once we have designed a strong assessment task, we work to develop clear expectations as to what will count as a satisfactory student performance. We typically use rubrics to guide what we will be looking for in students’ actions and products. The time taken to write a quality rubric streamlines the assessment process during the lesson and helps ensure that we treat all students equitably in our evaluation of their performance. Rubrics also help us to focus on how students’ use of academic language aligns with their actions and to avoid becoming distracted by minor mistakes in nonessential vocabulary. We have found that performance assessments often allow for more equitable assessment than written tasks because students can leverage multiple modes of communication, such as speaking and gesturing, to demonstrate the true sophistication of what they know and are able to do. As students become more comfortable with conducting performance assessments, they can even use student-friendly versions of a rubric for self- and peer-assessment (Flynn 2008).

The performance assessment scaffold includes space to detail three or four rubric elements. Teachers often draw rubric elements from their language analysis in step 2 of the scaffold and their summary of the assessment in step 4. Later, teachers can use these elements in their final lesson plan as the rows of an analytic rubric for evaluating students’ performance. Figure 4 shows how we transformed the rubric elements from the chemical reaction item scaffold (see Figure 2) into a full analytic rubric. We recommend using an analytic rather than a holistic rubric for performance assessments (Carin et al. 2017). Holistic rubrics assign a single overall score to a student’s entire performance. These rubrics are often simpler to create, but their “big-picture” perspective can mask areas in which students still need to improve. An analytic rubric, on the other hand, scores a student’s performance across several independent criteria, which are typically listed in separate rows. These rubrics often take more time to design initially, but their detail allows us to pinpoint specific strengths and struggles for each student.

Taking a performance assessment to the next level

The scaffold explored in this article can help teachers kick-start their journey to using summative performance assessments to evaluate student learning in science. However, there are many other ways to add depth to performance assessments. For example, we have used the video camera on an iPad or a movie-generating app such as Flipagram to record our launch of a performance assessment and to provide oral directions that can be replayed instead of giving solely written instructions to students. Similar video technologies can also be used by students to record their actual performance during the assessment. Teachers can also differentiate the assessment according to student needs and strengths by adjusting the physical materials and instructions that they give to each student. For example, in the chemical reaction summative performance assessment, students could be given different initial substances so that they end up observing and interpreting different chemical reactions. Because the outcomes of some chemical reactions are more difficult to observe and interpret than others, this form of differentiation can be used to tailor the complexity of the task to the current level of each student without diluting the core performance within the assessment.

The process of turning tests into tasks has transformed both our and our students’ relationship with assessment. We are more effectively able to capture evidence of three-dimensional learning, and our students are excited to demonstrate their expertise in “doing science.” The indirect practice that students receive with the scenarios and academic vocabulary common on standardized tests is, for us, a side benefit to a solid assessment strategy.