Feature

Evaluating Nature Museum Field Trip Workshops, an Out-of-School STEM Education Program

Using a Participatory Approach to Engage Museum Educators in Evaluation for Program Development and Professional Reflection

Connected Science Learning July-September 2019 (Volume 1, Issue 11)

By Caroline Freitag and Melissa Siska

Out-of-school learning opportunities in science, technology, engineering, and mathematics (STEM) are an important context in which students learn about and do STEM. Studies have shown that in- and out-of-school experiences work synergistically to support learning. New education guidelines, particularly the Next Generation Science Standards (NGSS), place significant focus on increasing students’ competence and confidence in STEM fields, and the National Research Council notes that “STEM in out-of-school programs can be an important lever for implementing comprehensive and lasting improvements in STEM education” (NRC 2015, p. 8).

Figure 1

A museum educator holds a seed specimen for students to observe during A Seed’s Journey. In this workshop, students discover different methods of seed dispersal and participate in an investigation to determine the seed dispersal methods employed by a variety of local plants.

Effective out-of-school STEM programs meet specific criteria, such as engaging young people intellectually, socially, and emotionally; responding to young people’s interests, experiences, and culture; and connecting STEM learning across contexts. Effective programs also use evaluation as a strategy for improving the program and for understanding how it promotes STEM learning (NRC 2015). In the past decade, the National Research Council (2010) and the National Science Foundation (Friedman 2008) have published materials emphasizing the importance of rigorous evaluation and outlining strategies for evaluating informal science education programs. The Center for Advancement of Informal Science Education specifically aimed its 2011 guide at program leaders, to equip them to manage evaluations and work with professional evaluators.

In this article, a team of collaborators from the education department of the Chicago Academy of Sciences/Peggy Notebaert Nature Museum (CAS/PNNM) shares the outcomes of a recent evaluation of Field Trip Workshops, a STEM education program for school groups visiting the Nature Museum. The purpose of the evaluation was to collect data about the program’s impacts and audience experiences as a foundation for an iterative process of reflection and program development. We hope this case study offers ideas to other institutions.

Field Trip Workshops program

School field trips to cultural institutions provide rich opportunities for out-of-classroom learning. At CAS/PNNM, school groups can register for Field Trip Workshops to enhance their museum visit. During the 2017–2018 academic year, educators at the Nature Museum taught 900 Field Trip Workshops totaling over 23,500 instructional contact hours with classroom teachers and students. Field Trip Workshops account for approximately one-fifth of the total contact hours between the education department and Chicago schools and communities, making this program the department’s largest.

Program structure

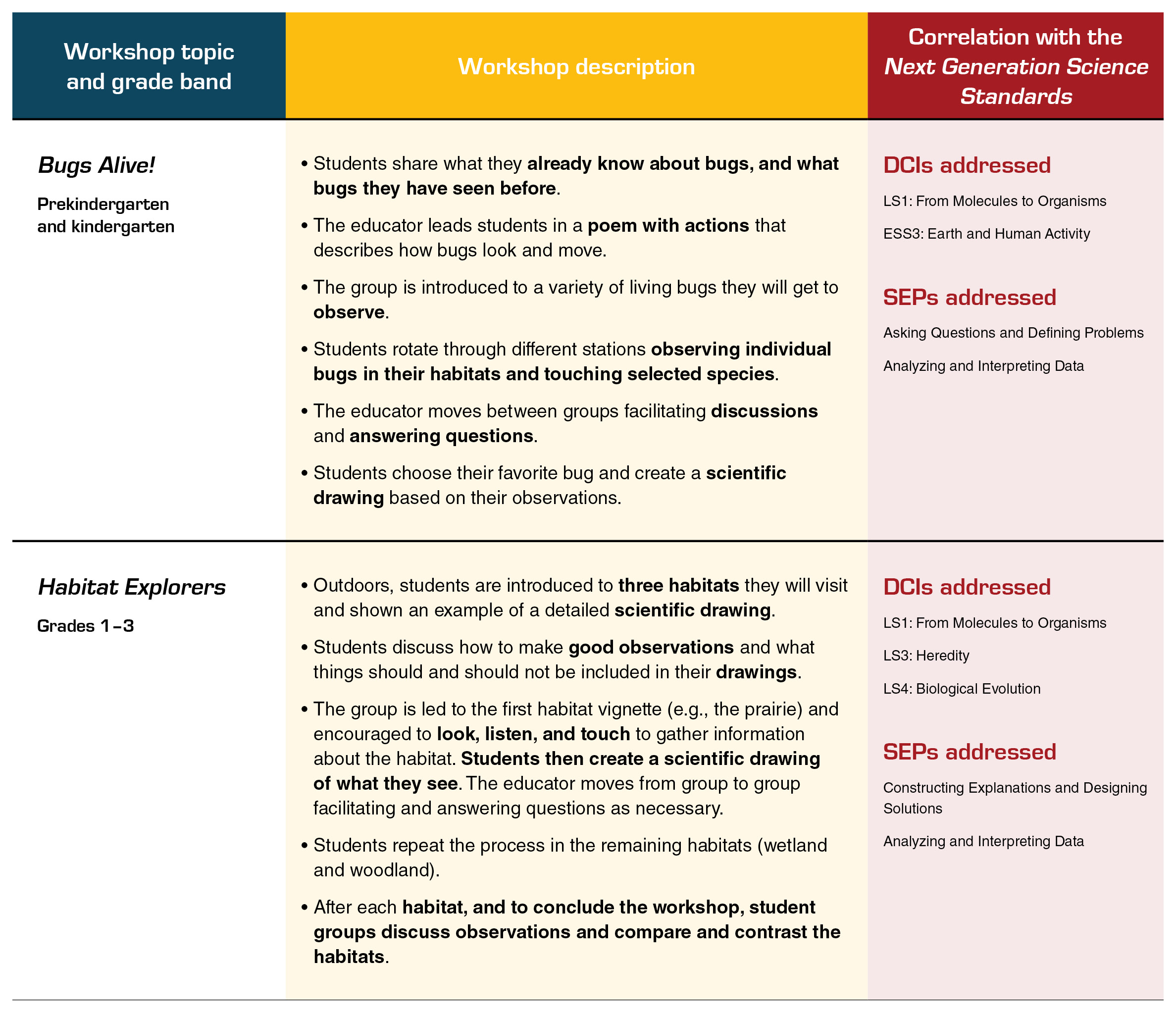

Field Trip Workshops are one-time, 45-minute programs taught by the museum’s full-time, trained educators, who use museum resources to enrich student learning. These hands-on, inquiry-based programs engage students with the museum’s collections and specially cultivated habitats on the museum grounds. Workshops connect to NGSS science content and practices while remaining true to the museum’s mission: to create positive relationships between people and nature through local connections to the natural world. Field Trip Workshops are designed for students in prekindergarten through 12th grade, with both indoor and outdoor workshops offered at each level. These workshops are an opportunity for students to learn science content, engage in science practices, and create personal connections to local nature through interactions with museum resources. Workshops highlight the museum’s living animals, preserved specimens, and outdoor habitats, and often discuss the conservation work done by the museum’s scientists.

All workshops emphasize students’ interactions with the museum’s living and preserved collections or outdoor habitats. Workshops are offered for different grade bands and correlate to the Next Generation Science Standards’ disciplinary core ideas (DCIs) and science and engineering practices (SEPs).

Education team

The education staff of the Nature Museum come from a variety of professional backgrounds. Several members hold advanced degrees in science, instruction, or museum education. Staff have experience in informal education settings and/or are former classroom teachers. Their diverse training and skills create a team with science content and pedagogical expertise.

Figure 2

Field Trip Workshop students in Exploring Butterfly Conservation inspect a case of mounted butterfly specimens while discussing their prior knowledge of common Illinois butterflies. Students are then introduced to two rare butterflies for deeper investigation.

Planning the evaluation

Setting the stage

Basic evaluation is a regular part of growing and developing the Field Trip Workshops program. Museum educators participate in team reflections, revise curriculum, and review workshop alignment with overall program goals on an ongoing basis. Because Field Trip Workshops are a fee-based program without a grant budget or a funder to report to, there had never been sufficient time or resources to support a comprehensive, mixed-methods evaluation. Still, the desire to more fully evaluate the program was present in the minds of the Nature Museum’s education department leadership and program staff. Thus, the in-depth evaluation of the Field Trip Workshops program reported here was an unprecedented opportunity.

In 2014 the Nature Museum developed and implemented an organization-wide 10-year plan. Evaluation of all programs is a key component of the education department’s plan. During a retreat in spring 2017, staff reflected on the department’s progress toward the plan’s goals and agreed that Field Trip Workshops needed detailed evaluation to grow and refine the program. Team members observed that, although staff worked diligently to align each workshop with the program’s goals, they had never obtained large-scale feedback from program participants to see whether those goals were truly being met.

An evaluation opportunity and its challenges

In the spring of 2018, a new museum volunteer with research experience expressed an interest in out-of-school STEM experiences and in learning more about the museum’s education programs. After careful consideration, education department leadership and the student programs manager chose to seize the opportunity, and they formed an evaluation team made up of the student programs manager, the volunteer, and two members of department leadership.

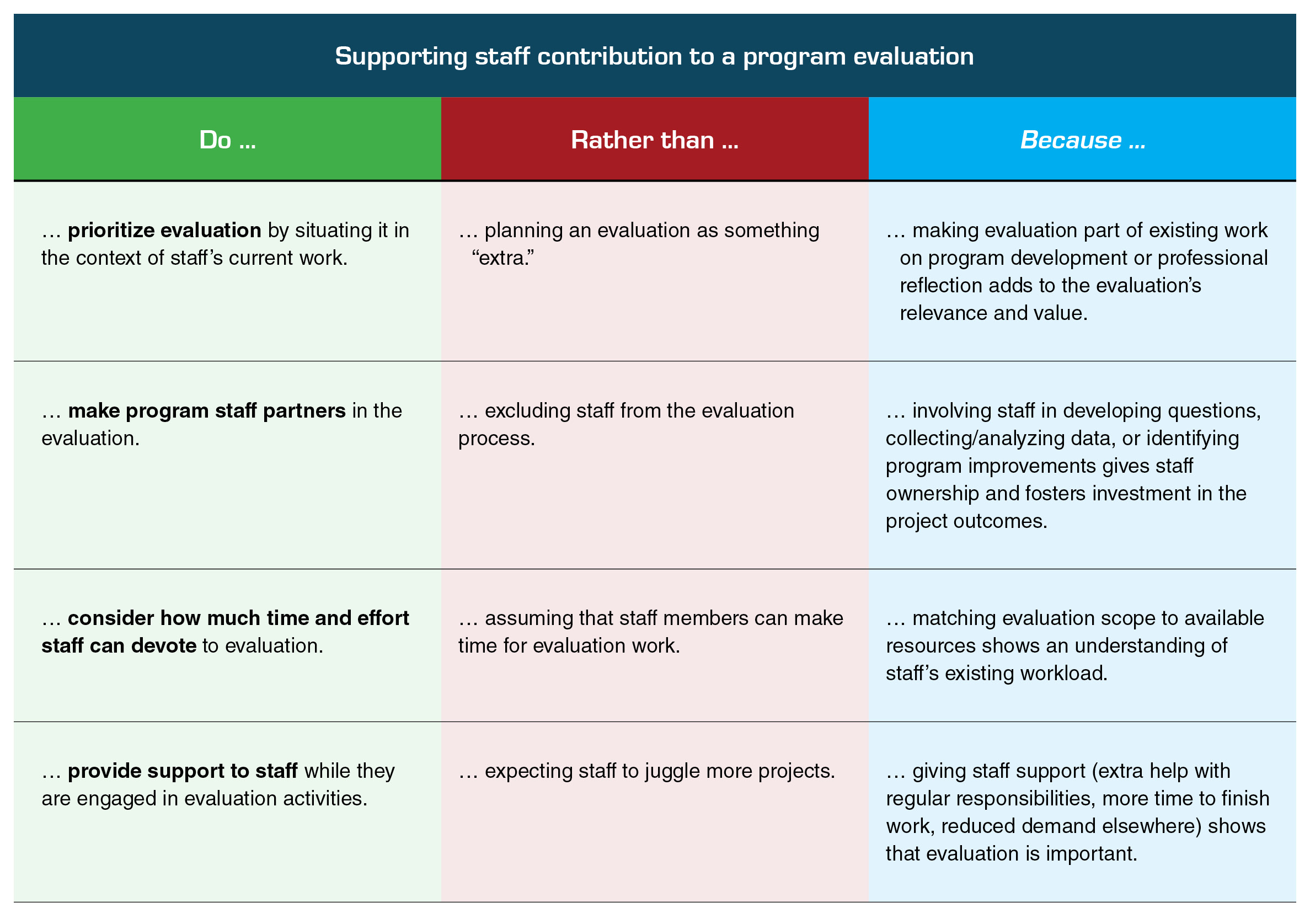

The education staff was interested in contributing to an evaluation, but it was clear that staff time would be limited. The team therefore realized that the evaluation would succeed only with achievable objectives (e.g., evaluating only the most popular workshops rather than the entire suite, and setting a six-week limit on data collection) and with staff buy-in (e.g., focusing on staff questions and priorities). The evaluation team kept a careful eye on the evaluation’s impact on education staff, asking them to be upfront about any concerns (e.g., disruptions to the normal schedule or difficulties with the evaluation or evaluator) and standing ready to make adjustments as necessary (e.g., rescoping or ending the evaluation).

Collaborating with experienced volunteers to facilitate the evaluation

Because a museum volunteer served as the primary evaluation facilitator, the evaluation could be broader in scope than would otherwise have been possible, while imposing minimally on staff members and programming. The volunteer had research experience in biology and psychology but was making a career change toward STEM education, and so was particularly interested in learning more about informal education and program evaluation. As a result, the collaboration gave the volunteer an opportunity to learn and develop new skills, while the museum benefited from the volunteer’s research experience.

Practical consideration: Finding volunteers

Finding volunteers with enough background to support evaluation can be challenging, and a volunteer’s skills, interests, and outlook should fit both the organization and the project. Volunteers may want to contribute to evaluation efforts out of interest in the project, out of support for the institution or program, or to gain experience. For example, graduate students or senior undergraduates may be interested in facilitating evaluation or collaborating on a larger project. Retired professionals or part-time workers may also have the necessary expertise and flexibility to donate their time to a project. Depending on background, skills, and time commitment, a volunteer may be able to help recruit participants, conduct interviews, distribute surveys, follow up with participants, and enter and visualize data. Volunteers may also help with tasks such as keeping records, scheduling meetings, and providing support for staff members.

Choosing a participatory evaluation approach

Participatory evaluation is defined as a collaboration between evaluators and those who are responsible for a program and will use the results of the evaluation. Participatory evaluation involves these people in the fundamental design, implementation, and interpretation of the evaluation. While the trained evaluator facilitates the project, the evaluation is conducted together with program staff (Cousins and Earl 1992). Participatory evaluation rests on the idea that evaluation is effective only if its results are used (Brisolara 1998). Utilization-focused evaluation further argues that evaluation should be useful to its intended users (Patton 1997), and usefulness can be limited if the results are not suited to those users (e.g., the evaluation questions are not relevant or the reports are not tailored to the audience). Participatory evaluation seeks to encourage use of evaluation results by involving those most interested in the outcomes in conducting the evaluation itself.

The Nature Museum’s education department was the primary intended user of this evaluation, as they implement the program and play a vital role in its development. The intent of the evaluation was to generate information that would aid in improving the program. Although this evaluation project did not use a professional evaluator, the volunteer’s research background was useful in designing the study. Education department leadership was also familiar with evaluation basics from collaborating with and training under the research and evaluation consultancy that conducts evaluations for the department’s grant-funded programs.

The evaluation team took inspiration from the participatory evaluation model specifically because it was a good fit for the education department culture. Museum educators already contribute to defining directions for programs and recommend curricular improvements. Thus, it made sense for these educators to contribute their insights to evaluation as well. Most importantly, educators’ involvement in evaluation gave them information about their highest priorities: identifying patterns of success, challenges in program implementation, and key characteristics of effective interactions with students.

Participatory approach: Museum educators’ experiences were an evaluation focus

At the start of the evaluation, the student programs manager held a meeting for all museum educators to discuss priorities for evaluating Field Trip Workshops. Together, they identified questions about student experiences and questions to ask of their classroom teachers. The educators also described factors that made them “feel like I taught a good workshop” and those that made a workshop feel less successful.

The evaluation team realized educator viewpoints would provide a critical third perspective alongside student and classroom teacher experiences, and that key insights might lie in the similarities and differences between how museum educators, students, and their classroom teachers perceived the same workshop.

Figure 3

Students gather at the sides of the walking path in the prairie to make scientific drawings of their observations of the habitat, while a museum educator discusses local plants and animals.

Participatory approach: Museum educators’ questions steered the evaluation direction

After the initial staff meeting, the evaluation team reviewed the educators’ evaluation questions and comments. They then organized the questions, reviewed possible methods for answering the questions, and considered the practicality of collecting data. Ultimately, the questions of highest priority that also met the practical limitations of data collection were selected. The remaining questions were set aside for a future opportunity.

The education staff and evaluation team articulated three evaluation questions:

- To what extent were classroom teachers and museum educators satisfied with their Field Trip Workshop, and to what degree did they think student needs were met?

- In what ways do Field Trip Workshops impact students during and after their experience, specifically (a) student engagement with and attitudes toward science and nature, (b) their science content and practice learning, and (c) the types of personal connections students made to science and nature (e.g., “I have a pet turtle like that!” or “We’re raising butterflies in class”)?

- In what ways do Field Trip Workshops connect to in-school science (e.g., the school science curriculum, the workshop’s relevance to in-school work, and similarities and differences between the types of learning experiences in the workshop and in the classroom)?

Conducting the evaluation

There were several practical considerations that affected the scope of the evaluation plan.

Practical considerations: Museum educators’ schedule impacted the evaluation scope

The evaluation team decided to include only the five most popular Field Trip Workshops in the evaluation. These workshops are taught frequently enough that sufficient data (10–12 sessions of each workshop) could be collected within the six-week data collection period, which avoided the complication of carrying the project over into the next academic year. In total, the evaluation included 13 museum educators, approximately 60 classroom teachers, and about 1,500 students.

The evaluation team chose data collection methods to fit not only the evaluation questions, but also the program schedule, staff responsibilities, and evaluation time frame. First, the team decided to have the evaluation volunteer sit in and observe workshops. Together, the evaluation team developed an observation protocol so that the volunteer could take notes about student learning and record student comments about personal connections to science and nature.

Then, the team wrote a short series of follow-up questions for museum educators to answer after each observed workshop. These included rating and free-response questions about their satisfaction, perception of student learning, and personal connections students shared with staff.

Practical considerations: Museum educators’ responsibilities affected the evaluation plan

The 45-minute workshops are scheduled back-to-back with a 15-minute “passing period,” during which museum educators reset classrooms for the next workshop. Because this left little time for educators to fill out written questions, the team decided that the volunteer would instead ask educators the follow-up questions in a brief interview. This way, educators could both prepare for their next group and participate in the evaluation. After each interview, the volunteer helped the educator prepare for the next workshop.

Finally, the evaluation team developed a follow-up survey for classroom teachers. To streamline the process, the team assembled clipboards holding a brief request-for-feedback statement and a printed sign-up sheet for teachers’ contact information, which education staff could use when approaching teachers. The evaluation team made sure the clipboards were prepared each day, rather than asking museum educators to organize survey materials in addition to their regular workshop preparation.

Program evaluation findings

The Field Trip Workshop evaluation explored three questions (listed above) by collecting data from students, their classroom teachers, and museum educators.

Overall, 75% of classroom teachers reported they were “very satisfied” with their workshops, largely because they thought the workshops and the museum educators who led them did a good job of meeting students’ needs. Classroom teachers attributed their satisfaction to their assessment of the staff’s teaching, the workshop curriculum, students’ learning and engagement, and their overall experience at the Nature Museum.

Museum educators were usually less satisfied than classroom teachers. Staff linked their satisfaction to their perception of the student experience, student learning outcomes, and reflections on lesson implementation. Staff often attributed their modest satisfaction to perceived imperfections in implementation and to their own high standards for student experience and learning. Positive teacher feedback encouraged museum educators to view their own work in a more optimistic light, and they found it valuable to learn more about the factors underlying teacher satisfaction.

The second evaluation question asked in what ways students were affected by their workshop, both on the day and at school in the days and weeks that followed. Nearly three-quarters of classroom teachers reported that their students’ engagement was “very high” during the workshop. Teachers also commented, in line with staff and volunteer observations, that engaging with the museum’s collections and habitats was a novel type of learning experience for many students.

After their Field Trip Workshop, teachers observed that in class and during free time, their students demonstrated improved awareness of living things and increased interest and curiosity. Classroom teachers also reported that student attitudes toward science and nature improved, a view shared by most museum educators. The education staff credited this change to reduced anxiety around living things and the outdoors, increased connection to living things, and growing confidence in new environments. The education team found it validating that these improvements in student attitudes were attributed to object-based learning with the museum’s unique resources.

The evaluation also explored workshop impacts on students’ science learning and practice. Although classroom teachers said their students typically learned the science content and practices explored in their workshop “excellently” or “well,” museum staff held a slightly different view. Museum educators reported reduced student learning in many Bugs Alive! workshops and in some outdoor workshops (Habitat Explorers, Ecology Rangers). A deeper exploration of these workshops revealed (1) the difficulties staff experienced in promoting effective chaperone behavior and (2) the challenges of teaching outdoors under variable conditions. These findings served as the basis for immediate reflection, group discussion, and program improvements.

The evaluation also asked classroom teachers and museum education staff what kinds of personal connections to nature and science students made during their workshops. Students made many personal connections, as evidenced by their comments to classroom teachers, classmates, museum educators, and the evaluation volunteer. Analysis showed that students made a variety of internal and external connections to science and nature through workshops. Internal connections link workshop experiences to students’ internal worlds, including prior emotional and sensory experiences or things the student knows and can do (e.g., “I’m thinking of being a zoologist when I grow up”; “It [wild bergamot] smells like tea!”; “I had a goose. It got caught, and we rescued it”). External connections are those students make between the workshop and people, places, and things (e.g., “We had a lizard and two turtles in our class”; “My group [project] at school is [on] woodlands”; “I have one of those [flowering] trees, it’s similar, at my house”). Although museum educators occasionally hear personal connections from students, they were delighted by the variety and depth of the student statements captured by the volunteer and reported in the evaluation.

The last question focused on connections between in-school science and Field Trip Workshops. Classroom teachers reported that most of the time they placed their field trip within their current science curriculum (as a unit kickoff, an in-unit activity, or a unit-capping activity). Only one in six workshops was a stand-alone experience (i.e., not explicitly connected to the science curriculum). Teachers also said they valued their workshop experience because it differed from classroom work by using resources they usually could not access: living things, preserved specimens, and outdoor habitats. Further, classroom teachers were glad their students were engaged in doing science: exploring, observing, and recording. Teachers were also pleased that some elements of the workshops resembled the classroom, including familiar teaching methods used by the trained museum educators. The education staff was happy to see evidence confirming their prior anecdotal observations that their workshops connect closely to what students experience in school.

Figure 4

On the Nature Walk during a stormy day, Habitat Explorers students make and record observations of the woodland habitat with guidance from a museum educator. Students take these “nature journals” home to share or to school for further development.

Engaging with the evaluation results to make recommendations for change

After data collection was complete, the evaluation volunteer entered and organized the data for the evaluation team and education staff to review. The student programs manager and department leadership decided that the best strategy for engaging with the evaluation data was to discuss results and recommendations with museum educators over a series of meetings. An unintended benefit of this approach was that the evaluation stayed in the minds of leadership and staff, and it continues to guide program growth.

The first evaluation discussion began with an overview of findings presented by the evaluation team. After this “big-picture” presentation, the entire group discussed the results the educators found most interesting, raised questions about the evaluation outcomes, and began to articulate recommendations for program changes and professional development.

Participatory approach: Museum educators’ views shaped the evaluation recommendations and changes in program implementation

About a month later, at the fall education department meeting, the student programs manager chose two topics for deeper consideration. In the earlier meeting, museum educators focused on challenges with chaperone behavior in workshops. With the evaluation volunteer’s help, the student programs manager assembled observation and free-response data about effective and ineffective chaperone behavior. During the meeting, educators reviewed this evidence, discussed classroom management, and brainstormed ways to address chaperone challenges. Afterward, the student programs manager selected recommendations for managing chaperones and included those in the Field Trip Workshops lesson plans.

The education team also proposed a deeper dive into the challenges of teaching outdoor workshops, such as the variable conditions introduced by inclement weather or seasonal changes to habitats. Indeed, evaluation findings showed greater variability of experience in outdoor workshops than in indoor ones. Together with the evaluation volunteer, the student programs manager compiled rating, observation, and free-response data from Habitat Explorers and Ecology Rangers. The education staff discussed the data and their experiences teaching outdoors. As a result, museum educators and the student programs manager updated the outdoor workshop curriculum to include options for teaching in inclement weather and specific guidance about which habitat content to observe and teach in different seasons.

Figure 5

Field Trip Workshop students use magnifying glasses to examine a turtle and record their observations in Animals Up Close.

Museum educators reflect on the impact of evaluation on their practice

As the evaluation drew to a close, the team asked museum educators to comment on their experiences participating in the evaluation and the ways it impacted their thinking and professional practice. Two educators’ insights are presented below.

Example 1

One museum educator, a two-year member of the education team with an MA in curriculum and instruction and an endorsement in museum education, wrote:

Having the opportunity to reflect directly following my implementation of a Field Trip Workshop with the evaluator was enriching to my teaching practice and allowed me to feel a sense of measurable growth. Especially when there was the opportunity to compare and contrast my various implementations of the same program, I felt growth in that I could identify goals with the evaluator and immediately assess if I had reached those goals.

For example, after teaching the workshop Metamorphosing Monarchs, I shared with the [evaluation volunteer] that in the next workshop, I wanted to limit my narration of a life cycle video to about five minutes in order to increase student engagement and [allot] more time for exploration of specimens. Following the completion of the next workshop, we were able to directly [assess] my progress towards that goal and more holistically discuss larger goals for my teaching practice.

Example 2

Another educator, a team member of over three years with a background in art history and an MA in museum education, explained:

I really enjoyed the overall experience with the evaluation and have found the results to be extremely helpful in informing my overall practice. [The evaluation volunteer] always made the process extremely easy for us—even during some of our busiest programming times. As an extremely self-reflective person, it was nice to spend a few minutes after each lesson that [the volunteer] observed reflecting on it … It allowed me to critically reflect on the lesson immediately after the program concluded while my thoughts were still fresh in my mind. I also feel like I benefited from rating each lesson on a scale since it pushed me to view each lesson [in a larger context], rather than getting lost in specific or smaller details or moments within [a workshop].

And now that the results, as it were, are in, it’s been especially helpful in informing my practice. I’ve found the direct feedback from [classroom] teachers, in particular, helpful as we often have very little, if any, direct contact with these teachers after the program is over. To be able to step back and see the big picture of our programs’ impact on our audiences has been very impactful and has made me begin to really think about how to help mindfully train our new hires with this all in mind.

Insights

This evaluation project benefited the program in several ways. First, the feedback received from the evaluation demonstrated how program goals were being met from a participant perspective. It also illuminated several patterns of experience where the team felt both the participant (student and teacher) and educator experience could be improved. These findings aligned with changes already on the minds of department leaders, provided additional evidence for specific improvements, and fostered support for those changes.

A valuable, if unintended, outcome of this evaluation’s participatory approach was the opportunity for staff to reflect on their teaching practice with the volunteer during data collection. Many team members found it helpful to reflect on their instruction immediately after a workshop and then make adjustments in the workshop that followed. This not only helped the staff holistically improve their teaching practice, but it also helped them become better supports for each other and more effective mentors to new team members.

Figure 6

As the rain clears, a group of Field Trip Workshop students make their way onto the fishing pier to observe the living and nonliving things in the wetland habitat.

Conclusions

Evaluation can be a powerful and important tool for building and maintaining effective out-of-school STEM programs. Evaluations need the support of both leadership and staff to be useful, but when done well they can yield insights and promote positive change for both programs and staff. Including staff in an evaluation (drawing on their knowledge of the program, their instincts, their questions, and their contributions) yields a stronger project and a positive evaluation experience. Further, involving education staff in the design and direction of the evaluation makes them more likely to value the results.

Evaluation is often not a final destination, but rather a process of evolution for programs and their staff. Thus, creating positive evaluation experiences can help build a culture of evaluation. This is key to creating and sustaining excellent out-of-school STEM experiences.

Caroline Freitag (caroline.freitag@northwestern.edu) is an evaluation specialist at Northwestern University in Evanston, Illinois. Melissa Siska (msiska@naturemuseum.org) is the student programs manager at the Chicago Academy of Sciences/Peggy Notebaert Nature Museum in Chicago, Illinois.
