Feature

Formative Assessment of STEM Activities in Afterschool and Summer Programs

Connected Science Learning July-September 2019 (Volume 1, Issue 11)

By Cary Sneider and Sue Allen

The positive impacts of STEM (science, technology, engineering, and math) afterschool and summer programs have been well documented and summarized in a number of review papers and books (e.g., Allen, Noam, and Little 2017; Krishnamurthi and Bevan 2017; NRC 2015). Growing awareness of STEM’s value in outside-of-school time (OST) has in recent years led education leaders to develop the STEM Ecosystem Movement, an effort to form collaborations among formal and informal educators with support from local businesses, universities, science centers, and other partners, with the goal of creating more effective ways of fostering student learning. At last count, 68 city and regional teams have joined StemEcosystems.org, a collaboration involving 1,870 school districts, 1,200 OST providers, and 4,350 philanthropic, business, and industry partners, serving more than 33 million preK–12 children and youth.

Of central importance in these new collaborations are partnerships between school teachers and facilitators of afterschool and summer programs. These partnerships have great potential to coordinate otherwise separate efforts in order to provide more engaging, meaningful, and educationally effective STEM experiences for youth. However, given the differences between the two distinct teaching environments, it is not always clear how teams can best collaborate. Preliminary evaluations have shown that one of the major challenges for these teams has been “finding time and trust to successfully navigate differences among formal and informal cultures, including language and terminology, education and experience, accountability and vision” (Traphagen and Trail 2014, p. 7).

In a nutshell, the idea we explore here is to engage formal and informal educators in collaboratively developing ways to use formative assessment—an instructional approach widely recognized for its value in schools—in afterschool and summer programs (Black et al. 2003; Black and Wiliam 2009; Yeiser and Sneider 2013). In formative assessment, teachers identify what they want their students to learn, and then provide activities that reveal which students are learning and which need more help. Teachers can then adjust their instruction to achieve their goals. Formative assessment activities can range from quizzes to hands-on activities to group discussions—whatever helps the facilitator see whether students are learning the targeted concepts and skills.

We were initially concerned that classroom teachers would have a greater focus on concepts and skills, whereas afterschool and summer camp facilitators would place a higher value on fun and engagement. However, as illustrated in the three case studies reported in this article, that did not appear to be a problem because the classroom teachers in this study recognized the special nature of STEM outside-of-school time, and the OST facilitators wanted their students to develop knowledge and skills that would be valued in school. Although our sample is small, we expect that mutual understanding and trust are achievable for participants in many STEM ecosystems.

In early 2016 we had an opportunity to work with four citywide teams (Boston, Massachusetts; Providence, Rhode Island; New York, New York; and Nashville, Tennessee) through the efforts of Every Hour Counts, a national coalition of organizations representing cities and regions across the United States that have formed STEM ecosystems (Traphagen 2018). In the sections that follow, we summarize a one-day workshop we offered on formative assessment, and then report results from three of the pioneering teams that approached the task of developing and testing a formative assessment activity in different ways. In sharing the results, we have drawn heavily from reports written by the teams as part of their reflections on their learning experiences. To preserve the privacy of individuals and schools, we have assigned pseudonyms and acknowledged their assistance as a group at the end of this article.

Workshop: Formative assessment in afterschool and summer programs

Thirty-one educators from seven citywide STEM ecosystems participated in the workshop, including the four that subsequently worked with us to develop and test formative assessment activities. The workshop involved a series of practical activities on formative assessment. We defined formative assessment as gathering information on students’ learning during instruction, and listed the many benefits of using it in informal as well as classroom learning settings. We then guided the participants through a simple hands-on activity intended to teach the distinction between criteria and constraints in the process of engineering design. The challenge was to build a tower to support a stuffed puppy (Figure 1). Participants were tasked with meeting the criteria of height and stability and the constraint of using a given number of index cards as construction materials. Much like youth in afterschool and summer STEM programs, the participants engaged in the activity with enthusiasm.

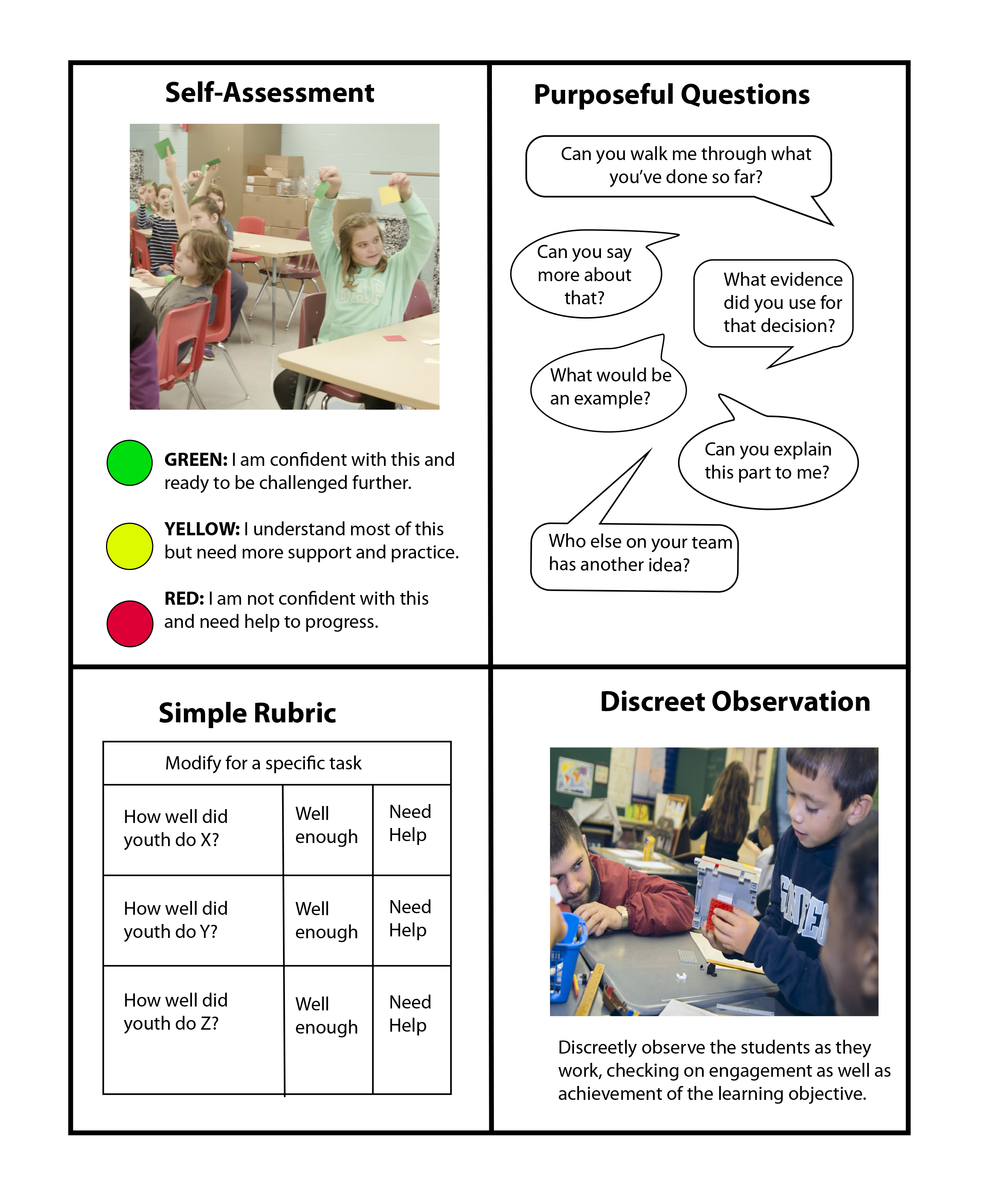

During the activity, we modeled the following formative assessment strategies:

- asking youth to flash colored sticky notes to self-report their level of understanding;

- making discreet observations of youth at work;

- asking youth open-ended, purposeful questions about their activity; and

- using a simple rubric.

These strategies were integrated into the activity, did not take a lot of time, did not disrupt the experience, and gave the facilitator a rough sense of whether most of the group had met the goal of the activity—to meet the criteria and constraints of the task—or needed more help. Because the larger goal of the workshop was to demonstrate the use of formative assessment, we ended by asking participants to share their experiences, summarizing the four strategies we used during the activity, and leading a short discussion about the pros and cons of each strategy. We gave the participants a handout (Figure 2) as a take-home reminder of the strategies.

Figure 2

Workshop handout showing four useful strategies for formative assessment in afterschool and summer programs.

We also provided a brief review of the Next Generation Science Standards’ science and engineering practices as a set of learning goals that would be of value to both formal and informal educators (NGSS Lead States 2013). Then, we assigned teams to select an activity they might do at their site that would align well with one or more NGSS practices, as if they were going to present the activity the next day. Teams left with the expectation that they would attempt to use formative assessment at their sites and report on their experiences. Although not all STEM ecosystems were able to commit to working with us to pilot this approach, four of the citywide STEM ecosystems did so, and we coordinated the work of eight teams (two teams in each of the four cities) of formal and informal educators via phone or Zoom videoconferencing. We selected three of the eight case studies for this article because they represent a diversity of OST settings.

Case study: Formative assessment in a summer school program

Compared with afterschool programs, summer programs provide much more time for students to engage in extended projects and thereby develop deeper skills. That was the case with the middle school Fashion Futures summer program, which ran for five weeks, five days per week, for three and a half hours each day. Tracey, an informal educator, and Mariel, a formal educator, designed the course. Although fashion design was not in their state’s standards, Tracey and Mariel recognized the value of the program for youth ages 11–14 to learn physical science concepts (properties of materials), mathematics skills (measuring and scaling), and practices of science and engineering (solving problems and arguing from evidence). As Tracey and Mariel later reflected:

During the course of the 2017 Fashion Futures program, youth engaged in many hands-on activities where they learned to measure, scale, manipulate, and create full garments. [We] expected youth to learn how to do all the things listed above through models and hands-on activities. We used various forms of formative and informal assessment during our day-to-day activities to ensure youth understanding. One of the most important aspects of our program was to make sure all youth were proud of their outfits and they could explain and show off their hard work. We did this through a fashion show at the end of the program on the last day of camp.

We completed two activities where youth needed to provide evidence to support their claims. The first formative assessment task was when youth tested over 20 pieces of material that could be used for clothing. Based on their designs, they would need to decide which fabric they would choose and provide evidence as to why this worked. For example, if a youth was creating a rain jacket, they would need to use evidence to support their claim that a plastic bag would be a good material for a rain jacket, [as] opposed to a white, cloth material. Youth did this individually and handed in their reflections.

The youth completed the second activity toward the end of the summer. They were asked to independently reflect on their fashion designs, providing evidence in support of their decisions.

During the first of the two assessment activities, most of the youth did a great job [of] providing evidence to support their claims. Those who did not complete this efficiently discussed the assessment with one of us to make sure they understood what they were supposed to do to show that their claims and decisions were supported by evidence. We then followed up with those youth later on. By the time the second formative assessment activity was given, this was a much easier process for the youth because they were already exposed to it during the first round, so these discussions were beneficial.

During the course of the summer, we learned that the informal assessments don’t have to be as thoughtfully planned as formal assessments. With this being said, it is important to make sure that [assessment] is happening continuously throughout class time in order to make sure all youth understand the concepts.

This example illustrates that the team did not have to change their planned activities. They ran their Fashion Futures class as they had in the past, but they shifted their thinking about the activities, seeing them not only as learning opportunities for students, but also as opportunities to learn about student thinking. They also thought differently about their learning goal. Arguing from evidence had always been an important goal, but as a focus of formative assessment, the teachers found ways to accomplish that goal through different means.

Case study: Formative assessment in a one-day field trip

The setting of the second case study was a nature preserve that offers single-day field trip experiences for middle school students (grades 6–8) from the city. Senior staff developed the program, which was then delivered by more junior facilitators, few of whom had a background in teaching or science. The focus of this lesson was on the core ideas of adaptation and natural selection, and the practice of constructing explanations. The senior facilitators later reflected:

The first lesson, Micro-wilderness, had the children find insects and describe their adaptations. The goal was for them to think purposefully about how structures and behaviors increase the [chances of] survival of the population. [Students] made claims about the function of an adaptation by recording evidence that they observed on worksheets and researching additional information about their insect. The worksheets had the following questions:

- Name a physical and a behavioral adaptation, and explain how it helps your insect survive in its environment. What is your evidence?

- Let’s say in the next year, there’s a new species of bird introduced to the island that eats your insect. Describe a new structure or a change in behavior your insect population could evolve and explain how it will help the population survive this new threat.

Most students were able to name a physical and/or a behavioral adaptation correctly. While some students only named the adaptation, many were able to explain how that adaptation enabled the insect to survive. In the children’s responses to the second question, about what would happen if a new species of bird were introduced to the island, many were able to name either a physical or behavioral adaptation, and some (but not all) were able to explain how the adaptation would help it survive.

The students then presented their organism in a convention format, fostering discussion, questions, and idea-sharing amongst their peers. The objectives of this lesson were for the students to describe how specific features—structural or behavioral—provide advantages to an organism’s survival, and to explain how environmental changes impact adaptations within a population over time. The (junior) facilitators had been coached to ask the following questions during the convention:

- Tell me about your insect’s adaptation. What is your evidence?

- How will that adaptation help your organism survive the new bird species/predator?

- Is your adaptation structural or behavioral? How can you tell the difference?

Our formative assessment methods were purposeful questioning and direct observations. We provided the facilitators with instructions for what to do if their observations during the convention suggested that the class was having difficulty understanding the concepts of adaptation and natural selection. This took the form of open-ended questions that would encourage the students to construct these core ideas on their own:

- Can someone remind us what a physical or a behavioral adaptation is, or give us an example? What does your insect need to be able to survive?

- If you were being hunted by a pterodactyl, what behaviors or body parts would you like to have in order to not be eaten?

- Put yourself in your insect’s place. What body part or behavior would help you survive if a bird were trying to eat you?

The facilitators interacted with the children while they were working on … responses, making observations and asking purposeful questions to provide guidance. In the future, we expect to ask our facilitators to model correct responses by sharing out loud some of the most interesting observations and ideas that they heard. This can help promote more and better responses from the group as a whole.

In summary, we learned the following about informal assessments in our particular setting:

Pros

- Formative assessments are useful to focus the facilitator on the major objective of the lesson.

- They provide the program with a closer look at the quality of the lesson in meeting [the] objective(s).

- They can serve as a tool to provide feedback to the facilitator.

- They can be used to scaffold student learning and guide them back on track.

- They can provide information as to how the lesson may be revised.

Cons

- The limited time we have with children allows little time for … modifications for those [who are] not [“getting” the lesson].

- We don’t have the luxury of addressing what [students] didn’t [“get”] since there is no follow-up lesson.

- Our facilitators do not necessarily have a background in teaching and/or science and therefore must undergo extensive training prior to the beginning of each season. Because of the nature of this industry, we have a high turnover of facilitators from season to season. We develop [in facilitators] the necessary skills over time to apply formative assessments, and then they move on and we must start over with new facilitators. For these reasons, it is imperative that we develop well thought-out scripted lessons that the facilitators can deliver in a timely fashion that leaves little margin for error during delivery.

This was a particularly interesting case because the “pro” bullets show that formative assessment can work in out-of-school settings, even with facilitators with little or no background in teaching or science. Regarding the first two “con” bullets, we note that this lesson plan had additional activities built into it to help children who were struggling. The third “con” bullet is also an important lesson for leaders of afterschool and summer programs. In this case, “scripted lessons” did not mean providing information to be memorized and delivered, but rather good questions to ask so as to determine how well students are learning.

Case study: Formative assessment in an afterschool program

In our third case study, both formal and informal educators ran the afterschool program together, and both had considerable teaching experience. Louise taught all of the science classes in her urban elementary school of nearly 600 students. Her partner, Frances, taught preschool and afterschool.

In this case, the team decided to focus their afterschool program for grades 2–4 on a specific physical science standard—“Energy and matter interact through forces that result in changes in motion” (NGSS Lead States 2013)—rather than a more general science or engineering practice. Their plan was to provide experiences for their students to address this standard through three different activities that engaged students in applying the same core ideas. They also wanted to use formative assessment to determine how to modify each activity based on prior student performance. Each of the activities described below requires approximately four one-hour lessons with youth (see Figure 3).

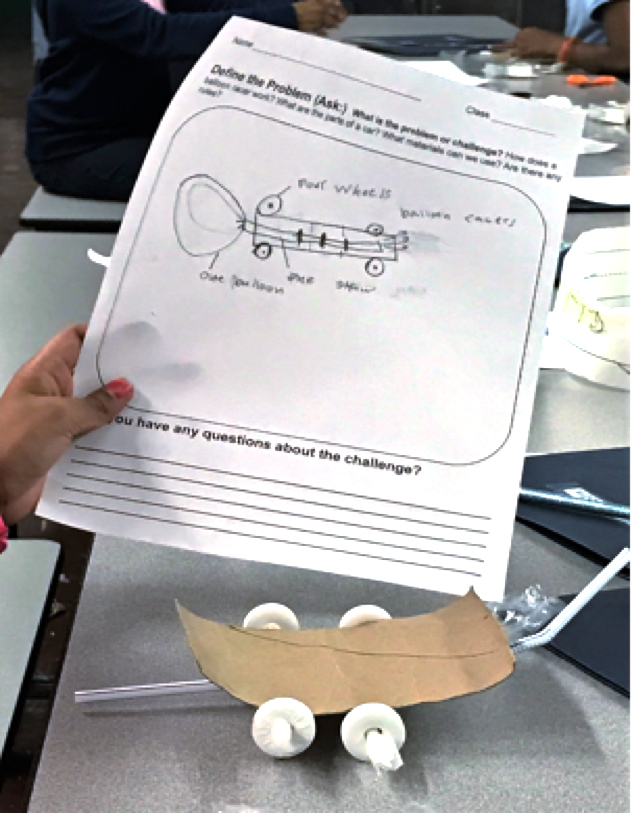

- Balloon racer: Our goal was to support the learning that goes on during the day by challenging students to use their understanding of concepts taught in school to solve real-life problems. The first challenge was to build a balloon racer that would use air in a balloon to push the racer forward. The first step was to have students do a 30-minute investigation pushing a toy car around the room, and make observations. Next, the students researched air-powered cars to draw inspiration for their own racer. Then they built and tested their racers. We had to stop at one point when we realized they had trouble with wheels and axles. They were taping the wheels to the racer, so they were not turning … [and] were sliding [instead of] spinning. Once the students solved the problem with the wheels, they discussed other ways to improve their designs. These discussions among the students served as our formative assessment. Some suggested having two straws from the balloon to the racer would let more air flow. Others thought a bigger balloon would give them more force. They also learned that heavier racers required a greater force than lighter racers. In the end, 50% of the racers were successful, but all of the students appeared to understand the idea that more force gets the cars to go farther, and many also realized that heavier cars require more force than lighter cars to go the same distance.

- Catapult: The children designed a catapult from rubber bands and spoons to hurl a marshmallow as far and accurately as possible. This second challenge helped them become more independent builders, so that we didn’t have to troubleshoot as often.

- Playground slide: The third activity was to plan and conduct experiments with a playground slide to see how the force of gravity affects objects of different weights.

My partner and I learned several things about ourselves and our students from these afterschool activities. One is that kids have comments and questions that are completely unpredictable and catch you off guard. Second, we really love creating a fun, interactive place to learn about science. We are very supportive of one another.

Figure 3

Two afterschool activities designed to help students develop understanding of force and motion

Keeping in mind that the purpose of these activities was for students to learn that “Energy and matter interact through forces that result in changes in motion,” the teachers’ deliberate attention to students’ discussions as they tested and redesigned their balloon racers led the teachers to conclude that “all of the students appeared to understand the idea that more force gets the cars to go farther, and many also realized that heavier cars require more force than lighter cars to go the same distance.”

For a teacher in the formal school system, this may not provide sufficient evidence that all students were taking important steps toward achieving the standard. However, this was not a science class in school, and requiring each learner to respond to a written quiz could easily have dampened any enthusiasm students had developed for experimenting with force and motion. What is most important in this setting is that by listening to their youth talk with each other, the educators became attuned to what to emphasize in the two subsequent activities, which ideas to reinforce, and how to deepen children’s understanding of the relationships among energy, matter, force, and motion.

Conclusion: What we learned about formative assessment in out-of-school time

Although the STEM ecosystem movement is still young, a survey of ecosystem leaders revealed some of the challenges of forming partnerships between classroom teachers and afterschool and summer program providers. The study found a common desire among leaders to encourage assessments for continuous improvement, but also difficulty in achieving a common vision of what that means.

“In the words of one leader: ‘Useful assessment and evaluation always require a stable environment in which to assess[;] agreement on important goals, methods and techniques of assessment[;] carefully selected instruments upon which the various constituencies agree and approve[;] and the development of a common language/purpose of assessment’” (Allen and Noam 2016, p. 9).

The goal of engaging classroom teachers and OST facilitators in working together to develop formative assessments has been to help them achieve agreement on important goals, methods, and techniques of assessment that work in both environments, as well as a common language. In the afterschool and summer environment, that has meant teaching and assessing STEM more systematically, without losing the fun and engaging quality of STEM outside of school—some call it the “special sauce”—that has inspired so many of today’s scientists and engineers. Although we do not claim this should be the only approach to assessment, we did find that it is one way to help formal–informal educator teams work together to improve STEM education.

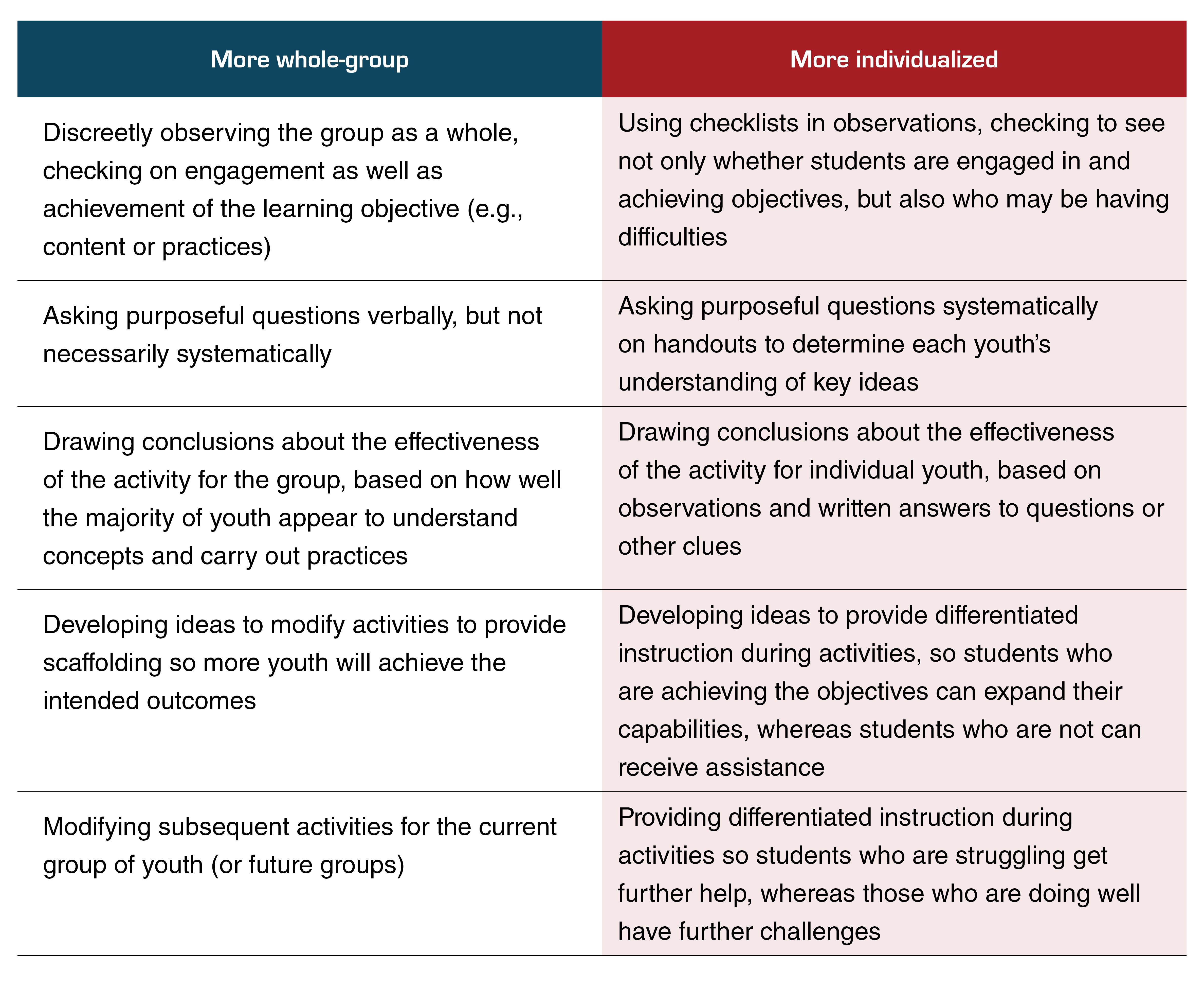

Looking across all eight of the case studies (three of which are reported above), we saw a continuum of approaches, ranging from a more whole-group focus to a sharper focus on individual youth. Table 1 illustrates these differences. These are emphases rather than distinct differences. Both extremes begin by specifying a clear and specific learning objective, and engaging youth in activities that can reveal their thinking. Which approach to emphasize depends on the setting, the number of youth, the experience of the facilitators, and the particular activity, including its learning goals and structure.

Table 1

Continuum of formative assessment methods

We commend the participants who designed and carried out these case studies for their willingness to explore new formative assessment approaches and share their experiences with us. We hope these examples will inspire readers to collaborate with their colleagues, take a fresh look at their lesson plans for the coming months—whether in school, afterschool, or summer—and design new ways of understanding how students are thinking and learning so that they can continually adjust their teaching to meet students’ needs.

Acknowledgments

We are indebted to the pioneering teachers who volunteered their time to develop, implement, and write case studies on formative assessment in afterschool and summer programs: Olga Feingold, Steve Green, Sarah Abramson, and Kathleen Wright from Boston, Massachusetts; Cassie Deas, Anne Gensterblum, Eleanor Carter, Ebony Weems, Shayla Humphreys, Lauren Buford, and Rachel Amescua from Nashville, Tennessee; Ruth Levantis and Angeli Lowe from New York, New York; and Audra Cornell, Allyson Trull, Ali Blake, and Hillary Genereaux from Providence, Rhode Island. We also thank Jessica Donner and Sabrina Gomez of Every Hour Counts and Saskia Trail of ExpandEd Schools for their leadership and insightful comments on this paper, as well as Kathleen Lodl, Page Keeley, and Sarah Michaels, whose ideas inspired our instructional methods, and the STEM Next Opportunity Fund for financial support of the STEM Ecosystem project of which this study was a part.

The handouts shown in Figures 1 and 2 were created as part of the ACRES project, supported by grants from the National Science Foundation and STEM Next Opportunity Fund, which offers coaching and professional development materials to afterschool educators in rural settings. You can find more information about the ACRES project online.

Cary Sneider (carysneider@gmail.com) is a visiting scholar at Portland State University in Portland, Oregon. Sue Allen (sallen@mmsa.org) is a senior research scientist at the Maine Mathematics and Science Alliance in Augusta, Maine.
