Fact-Checking in an Era of Fake News

A Template for a Lesson on Lateral Reading of Social Media Posts

Connected Science Learning May-June 2021 (Volume 3, Issue 3)

By Troy E. Hall, Jay Well, and Elizabeth Emery

As all science teachers know, the rate of scientific advancement is accelerating, far outpacing the ability of teachers or students to master it. Nevertheless, scientific understanding is crucial to address contemporary social and environmental challenges, from climate change to food supply to vaccines. Citizens must be able to interpret scientific claims presented in the media and online to make informed personal and political decisions. Informed decision-making requires scientific literacy, the ability to distinguish fact from fiction, and a willingness to engage in open-minded, productive discussions around contentious issues. Scientific literacy does not come naturally for most people; these skills need to be taught, practiced, and honed (Hodgin and Kahne 2018). Such scientific literacy skills are recognized specifically in the science and engineering practice of Obtaining, Evaluating, and Communicating Information described in the Next Generation Science Standards (NGSS; NGSS Lead States 2013). However, these skills can be difficult to integrate into lessons: although these practices have been identified as important, it is not well understood how to teach them in the digital age.

This article describes a biology lesson we developed that incorporates a relatively new approach to teaching middle and high school students how to fact-check online information. This lesson emerged out of a partnership between school science teachers, an academic unit at Oregon State University (OSU), and OSU’s Science and Math Investigative Learning Experiences (SMILE) program. SMILE is a longstanding precollege program that increases underrepresented students’ access to and success in STEM (science, technology, engineering, and math) education and careers. For more than 30 years, the program has provided a range of educational activities, predominantly in rural areas, to help broaden underrepresented student groups’ participation in STEM and provide professional development resources to support teachers in meeting their students’ needs. Our lesson focuses on social media posts about genetic engineering (GE) of plants, but this promising approach to digital literacy can be adopted for other scientific topics and internet information sources.

Challenges in Curating Online Information Sources

Internet sites have expanded as sources of all types of information, including scientific research. Today, digital sources outcompete traditional news and information sources among the general public, including U.S. adolescents, who rely heavily on the internet for information (McGrew et al. 2019) and school assignments (Hinostroza et al. 2018). However, many internet sites have no gatekeepers to monitor the integrity of their content, and sophisticated producers can mask false or misleading claims as factual analysis (Wineburg and McGrew 2019). Many studies have shown that students lack the ability to adequately search for and assess online information (Hinostroza et al. 2018), which can leave them unprepared to think critically about issues raised in their classes and in society at large.

In the case of controversial socio-scientific issues like GE, social media serves as an outlet for both ardent proponents and objectors, and it can be difficult for even trained fact-checkers to locate and evaluate the quality of sources. In such cases, students can encounter biased or partial information, depending on how they search online. It is well known that people gravitate toward information that resonates with their preexisting attitudes, a phenomenon known as confirmation bias (Sinatra and Lombardi 2020). Indeed, some research has shown that students judge claims that align with their prior views to be true, regardless of the actual validity of the claims (Kahne and Bowyer 2017). Adolescents trust favored search engines, often clicking on the first links that appear, unaware that such sites may contain sponsored content and unable to separate such content from objective facts (Breakstone et al. 2018; Hargittai et al. 2010; Walsh-Moorman, Pytash, and Ausperk 2020). Unless prompted to be more critical, students may rely on intuitive assessments of online information to judge content validity or the credibility of the source (Tandoc et al. 2018). Unfortunately, students may be unwilling to make an effort to critically evaluate content if they judge the task as having low stakes or being about something that does not personally interest them (Hinostroza et al. 2018).

Although partisan internet sites often portray an issue or technology like GE in black-or-white terms, it is rare for any significant issue of public concern to be such a simple matter. For example, GE has been used to create vaccines and insulin, which few would argue are bad. On the other hand, using GE technology to improve agricultural crops involves many complex environmental and ethical considerations; for example, GE can be used in applications to increase the global food supply but may promote herbicide resistance in weeds or allow modified genes to flow to non-GE crops. Given myriad controversial considerations, it is important to assess GE agricultural products individually and resist the tendency to engage students in lessons tasking them to evaluate GE agriculture as a single, monolithic entity.

Teaching Students to Become Fact-Checkers

In the face of such multifaceted issues, educators strive to shape students to become citizens and consumers who can carefully consider different dimensions of an issue, locate scientifically credible information, and critically evaluate both sources and content (Hodgin and Kahne 2018). Science teachers must spend adequate time cultivating these lifelong learning skills that today’s students will need in their adult lives. This is as much about learning how to learn (i.e., obtain, evaluate, and communicate information) as it is about mastering scientific content.

Considerable scientific study has identified the types of cues that signal the quality of an information source, such as the author’s credentials (Stadtler et al. 2016). However, the source is only one cue to the quality of information provided. In addition, one should evaluate the recency of information, look at the URL, evaluate the language used, and investigate the sponsorship of the site (Breakstone et al. 2018). Such items are often included in “checklist” approaches used to teach students how to evaluate online information. However, scholars have questioned the efficacy of checklists because internet sites promoting misinformation are becoming so prevalent and convincing that they pass these checklist tests (Fielding 2019; Sinatra and Lombardi 2020). Moreover, checklists do not teach students broader digital literacy skills, such as how different search terms generate different results. For example, “genetically modified organism” is a common search term that links to polarized information. However, “genetically engineered agriculture,” a related but less-common search term, links to less polarized information. This type of nuance is problematic for students who may rely on common search terms. Teachers need new strategies to address NGSS practices and give students a strong ability to evaluate the information they find when searching.

One promising alternative to assessing digital credibility with checklists is “lateral reading” (Wineburg and McGrew 2019; Walsh-Moorman et al. 2020). This technique, pioneered by the Stanford History Education Group (SHEG), is modeled after professional fact-checkers’ source evaluation strategies. Lateral reading involves validating unfamiliar sites by looking outside the site itself—using the power of web searches to cross-reference information in the unfamiliar site until its trustworthiness and credibility can be established. SHEG’s materials and evaluation have been developed for university students with a focus on social issues, as opposed to science, so there is an opportunity to refine them for different audiences and materials. Recently, Walsh-Moorman et al. (2020) called for more exploration of how educators could use lateral reading in middle schools, especially in ways that might be efficiently embedded in the curriculum. Our lesson adapts the process of lateral reading for science education with a K–12 audience.

In the following section, we describe this lesson, which was developed as one part of a larger curriculum for middle and high school science students on the science and social issues associated with GE agricultural applications. Our overall goal was to develop educational materials that encourage open-minded thinking about the breadth of social issues surrounding specific GE agricultural products, rather than understanding the basic science of GE or debating whether GE as a whole is good or bad. This particular lesson focuses on fact-checking information presented in social media using lateral reading.

The Fact-Checking Lesson

Lesson development

Knowing that GE is controversial, with multiple social, environmental, and economic dimensions, we invited middle and high school SMILE club teachers to participate in focus groups and surveys intended to understand their interest in and knowledge of teaching about GE, their self-assessed capacity and comfort in delivering this type of material, their students’ interests and prior attitudes about the topic, and the need for our material to connect to NGSS. Thirty-nine teachers from 26 schools completed surveys, and 16 high school teachers participated in four focus groups lasting approximately one hour each.

To our surprise, these assessments showed that teachers did not consider the controversial nature of GE to be a barrier to teaching this type of material. Additionally, their interest in teaching about the environmental, food, and economic aspects of GE was relatively high. However, based on their self-reports and a quiz-like assessment, their knowledge about these associated aspects was moderate to low, suggesting that they would benefit from a tool to help students assess the validity of information they find when studying these topics.

In addition to teacher surveys and focus groups, we examined the GE curriculum teachers were referencing and using at the time. Teachers were generally teaching about GE as an applied lesson connected to an introductory genetics unit. Commonly, GE curriculum involved a class debate where students argued for or against GE technology over one or two class periods. As preparation, students developed lists of pros and cons from digital media sources. Because students are generally not skilled at fact-checking, this often resulted in their lists containing misinformation that might not be validated by teachers until the actual debate, if at all. This had the effect of confirming misinformation in students’ minds.

We identified two main concerns about these existing lessons. First, they assume GE can productively be discussed as good or bad. However, some of the many agricultural applications have been shown to have few adverse impacts, while the impacts of others are more significant. Students need to understand the differences among specific GE agricultural products, including their various environmental, societal, scientific, and economic considerations, to be able to have a productive debate about whether a specific GE product should be used. Students should not be encouraged to think about all GE products as involving the same considerations.

Second, many existing lessons are outdated and do not reflect current understandings of the nuances of GE agriculture. Links embedded in curricular materials quickly become outdated, as advances in GE are so rapid. Therefore, rather than using static materials that contain old links, students need to be able to identify contemporary information resources regarding specific GE agricultural products. In addition to building digital searching skills, this will enable them to develop meaningful lists of the pros and cons associated with a specific GE product.

Thus, through our review and consultation with teachers, it became clear that students need the skills to curate information about GE agriculture and teachers need tools to formatively assess their students’ information-gathering skills. This led us to build upon SHEG’s work on lateral reading in our lesson.

Traditionally, fact-checking lessons involved what is called “vertical” reading, in which students systematically explore and critique elements within a source. Many students are familiar with and have used checklists based on vertical reading to determine whether a piece of information is credible, such as assessing the source’s authority, purpose, accuracy, currency, and relevance. However, in the digital age, verifying a source through vertical reading can be quite difficult, as some internet sites are deliberately designed to be misleading. Additionally, now that most students can access information via the internet, they should not be restricted to vertical reading. Instead, current recommendations suggest teaching students to read laterally, that is, by examining other sources and triangulating findings. SHEG’s approach to this involves validating a target source using six steps: investigate the source’s author, perform keyword searches, verify information and quotations, research citations, look up organizations cited, and analyze sponsorship or ads (Walsh-Moorman et al. 2020).
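For teachers who track student work digitally, the six steps listed above could be kept as a simple checklist. The following Python sketch is illustrative only and is not part of the published lesson materials; the names `LateralStep`, `remaining_steps`, and `group_log` are hypothetical and were chosen for this example.

```python
from enum import Enum


class LateralStep(Enum):
    """The six source-validation steps listed in the text (Walsh-Moorman et al. 2020)."""
    INVESTIGATE_AUTHOR = "investigate the source's author"
    KEYWORD_SEARCH = "perform keyword searches"
    VERIFY_QUOTES = "verify information and quotations"
    RESEARCH_CITATIONS = "research citations"
    LOOK_UP_ORGANIZATIONS = "look up organizations cited"
    ANALYZE_SPONSORSHIP = "analyze sponsorship or ads"


def remaining_steps(completed):
    """Return the steps not yet carried out, in the order listed in the text."""
    done = set(completed)
    return [step for step in LateralStep if step not in done]


# Hypothetical example: a group has looked up the author and run a keyword search.
group_log = [LateralStep.INVESTIGATE_AUTHOR, LateralStep.KEYWORD_SEARCH]
for step in remaining_steps(group_log):
    print("Still to do:", step.value)
```

A list like this only records which steps a group attempted; judging the quality of the reasoning at each step still depends on the teacher and the rubrics.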

Lesson overview

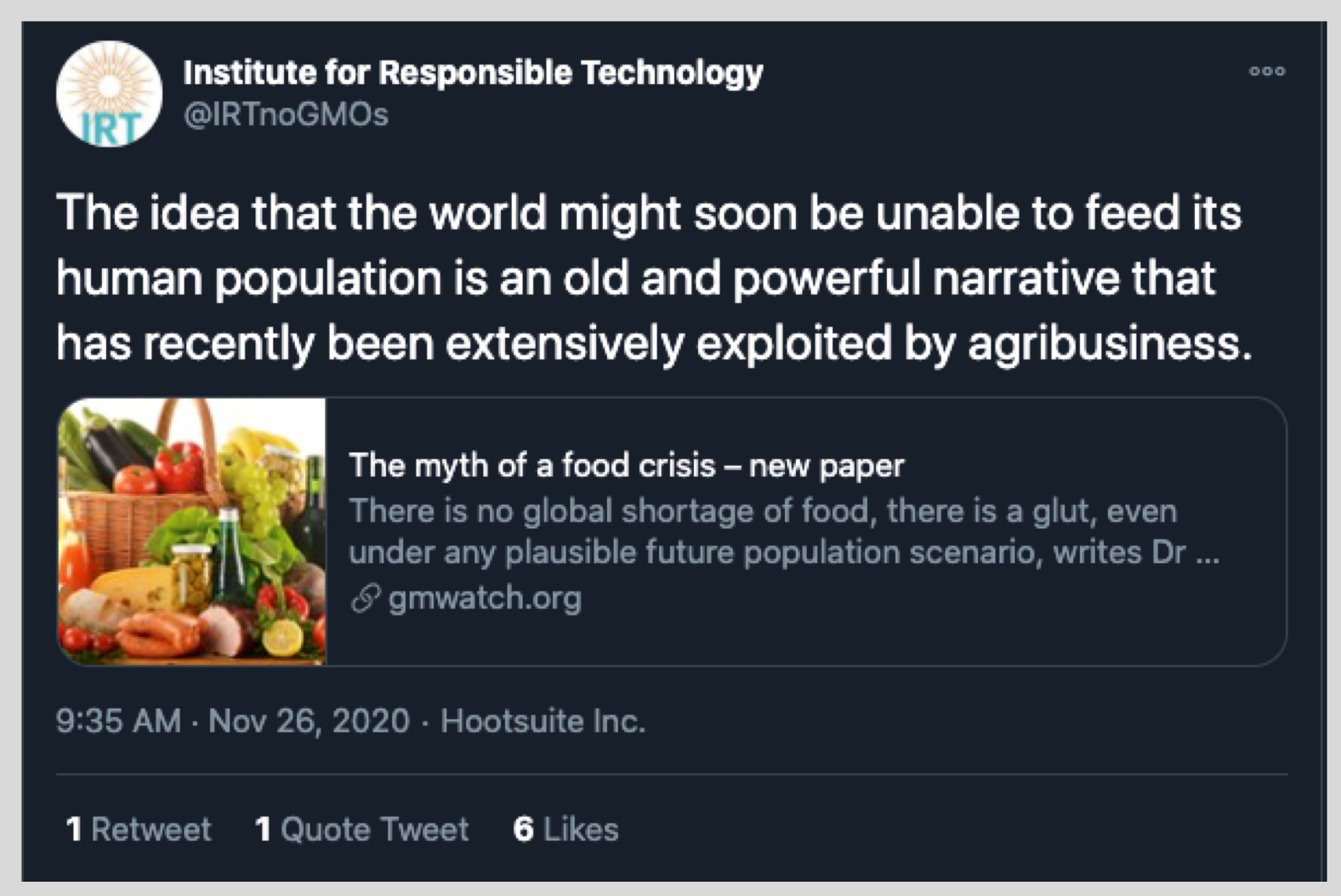

Our lesson promoted the basics of lateral reading in the context of topic-specific social media posts about GE agriculture. We drew from tweets, YouTube videos, and Facebook posts, because historical posts and pages are publicly available, easily accessible, and contain the types of elements students should learn to assess, such as the domain, the numbers of likes or retweets, and links to primary sources. The lesson has been designed to be completed in a single 60-minute class period but provides students lateral-reading skills they can build on in future units and lessons, regardless of the content area.

The lesson (see Supplemental Resources) begins with a classroom discussion about the term “fake news,” including where it comes from, its purpose, and its role in society. To aid this discussion, we provide resources for teachers to highlight how the process of developing news has changed over time, how advances in technology have made it easier for anyone to develop “news” or “user-created content,” and how fake news can quickly proliferate through social media networks.

This productive discussion about “fake news” encourages students to think about the importance of validating information and reflect on how they currently do this. At this point in the lesson, teachers introduce lateral reading as a strategy to validate sources of information. This introduction focuses on three key components: (1) the source, (2) the evidence, and (3) whether other reputable sources agree with the claims under scrutiny. Additionally, teachers highlight the importance of searching to confirm the information outside of the original source.

After introducing the basics of lateral reading, teachers use case studies we developed about specific GE agricultural products (Figure 1). In small groups, students review a case study, discuss the three lateral reading components, and determine whether the post is credible. If the students have access to the internet, they are encouraged to base their reasoning on information they found regarding each of the components, such as information about the author of the material. If students do not have access to an internet-connected device, they can describe the types of searches they would perform. Reconvening as a large group, teachers capture the students’ reasoning and categorize it into the key components of lateral reading. By doing this as a class, students can see the variety of ways the example source could be validated using the lateral reading strategy.

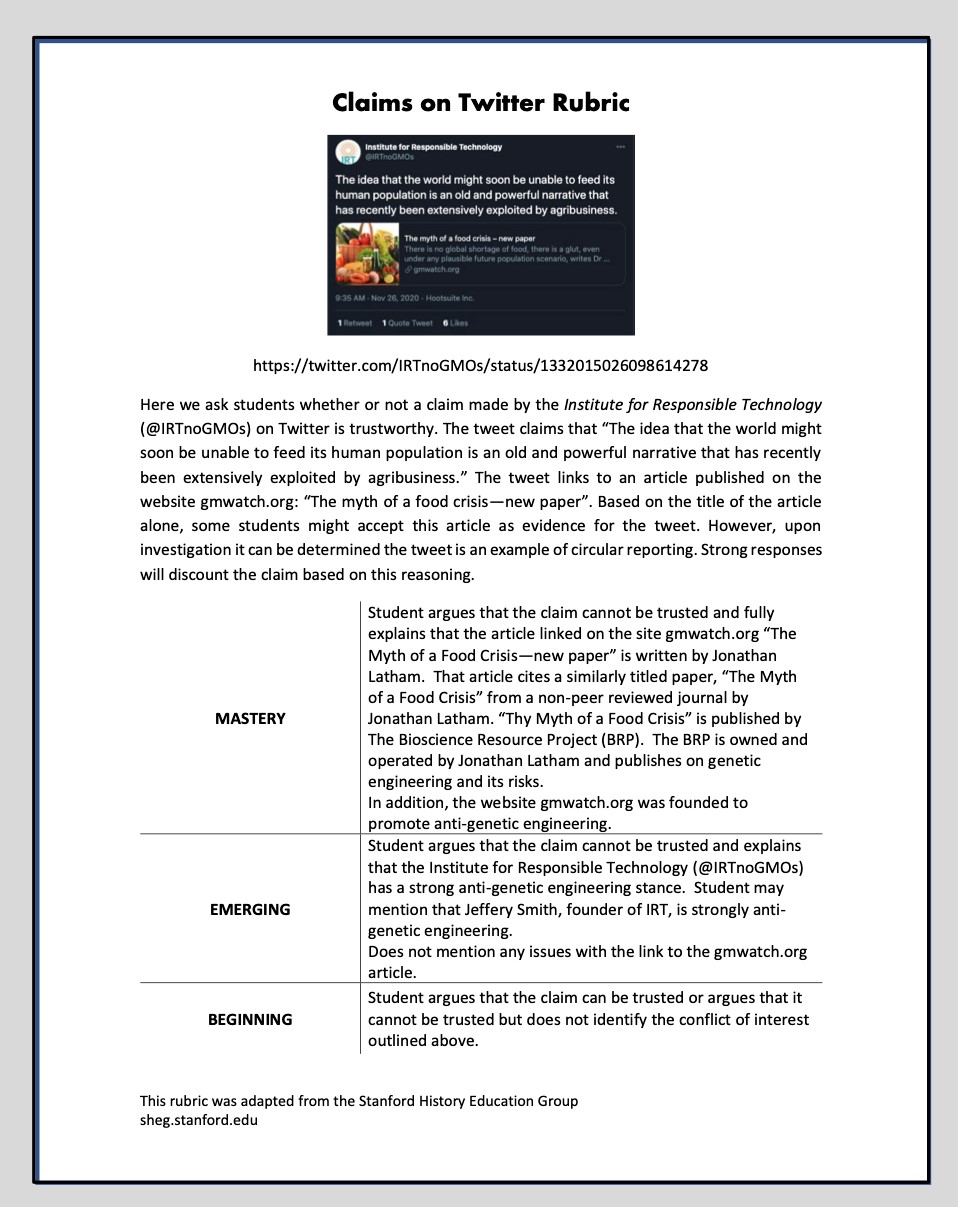

While lateral reading is often more accurate than vertical reading, it is less straightforward and often more cumbersome, requiring students to engage in complex reasoning and nuanced appraisals. Teachers and students need a way to assess their understanding and application of the skill of lateral reading as it develops. To assist in this, each case study has an associated rubric to evaluate competency as beginning, emerging, or mastery (Figure 2). For each skill level, an example of source validation reasoning is provided as a quick reference for teachers and students to assess and improve their lateral reading skills. These rubrics enable students to get quick feedback and provide opportunities to practice skills in an authentic way before searching for information on their own.

Once students have been introduced to lateral reading and the rubrics as a class, they work independently in small groups to complete similar evaluations of new topic-specific case studies from a variety of social media sources. Students assess each source’s validity and provide a short written analysis. Afterward, groups pair up and share their reasoning for each case study. At this time, teachers provide students the rubrics specific to these cases, so students can determine how they can improve their lateral-reading skills.

To debrief the lesson, groups are provided a list of discussion questions that focus on how they consume and produce information, their responsibilities in checking the validity of the information they use and share, and when they would use lateral reading. During this debrief students reflect on their personal goals for validating sources and their skills around doing so.

Assessment of the lesson

Using SMILE’s statewide network of teachers, we provided professional development about this lesson to 17 middle school and 11 high school teachers, who then piloted it in their afterschool STEM clubs. This provided teachers a low-risk environment to experiment with the lesson and provide authentic feedback via teacher logs, ongoing professional development sessions, and personal conversations.

In a follow-up teacher workshop five months later, teachers reported that the lesson was well-received by their students. When paired with additional lessons about GE agriculture that required students to collect online information, teachers said that the lateral reading exercises led to more productive classroom discussions. This lesson was the most highly rated among the seven we provided in the GE agriculture unit; teachers reported that they planned to use it with other discussion-based science lessons in their classrooms. Further, teachers described how the lesson highlighted their students’ struggle to assess the accuracy of online information.

Despite the overall positive reaction to the lesson, some aspects of lateral reading were difficult for both students and teachers. Many of the teachers reported that they normally use vertical reading checklists to teach digital literacy skills, the same checklists that SHEG reports as problematic. Teachers were apprehensive about shifting away from them because the vertical reading checklists provide a concrete, straightforward approach to analyzing a source. They tend to be easier for novice students to follow than the less-defined, more-nuanced skill of lateral reading. Teachers reported that lateral reading required a higher degree of critical reasoning and that, if it was not scaffolded properly, some students became confused and frustrated and gave up on the process.

The most success was obtained with tweets that were clearly true or false. In this context, students were able to demonstrate the validity of the source and provide reasoning to support their determination using one or two quick internet searches. However, it was more difficult for students to assess the validity of other social media posts with more nuanced misinformation that was harder to check. Additionally, reading laterally sometimes took students to scientific journal articles or other dense sources that provided contradictory claims. To validate the source and provide accurate reasoning, students needed considerably more time for reading or searching for other references. In these cases, students were less successful and often gave up on the process. Thus, it was clear from the feedback that this lesson is not a panacea and students need to practice the skills of lateral reading to be able to use them effectively and efficiently.

Discussion

Fake news and scientific misinformation are rampant on the internet. Science education must therefore expand from teaching primarily about scientific content to teaching how to obtain, evaluate, and use scientific findings. Existing approaches—such as vertical reading or lessons based around materials curated at a single point in time—are outdated and inadequate. Students do most of their information gathering and communicating in online environments using a wide variety of sources. The lateral reading approach we described builds on recent recommendations and may be more suitable for addressing NGSS recommendations in the dynamic digital landscape.

Using this lesson framework allows teachers to build fact-checking skills among their students that can be used in future exercises and provides teachers with a way to formatively assess students’ skills. This assessment can be valuable when conducted prior to students using information from the internet in a debate exercise and allows for a more productive, accurate debate.

Our partnership with teachers was critical to the development and refinement of the lesson. It also revealed some unforeseen challenges. Some of our assumptions were incorrect, such as our expectation that teachers would be reluctant to address a socially controversial topic. Other assumptions were more accurate; for example, teachers felt unprepared as content experts in this domain. The experienced STEM teachers provided feedback that was used to refine lessons over time.

While we see great benefit in teaching lateral reading, our experience suggests that it should be carefully planned, as it requires skills that many students have not yet developed. Thus, it is important to scaffold for students’ needs. The initial lateral reading sources and assessments need to be content-specific, straightforward, and clear to help students understand the process and begin to build their skills. However, most socio-scientific issues are not straightforward, and lateral reading of online posts can prove challenging. This reveals a tension between the need to simplify a skill to teach to novices and the need to develop higher-level critical-thinking skills. Similar to how the NGSS recommends developing science and engineering practices among students over time, lateral reading is a skill that needs to be continually practiced for students to gain mastery.

To scaffold our lesson for science students, we recommend taking a long-term approach to skill progression. We suggest that teachers build students’ critical-thinking skills over an entire year through multiple applied lessons using lateral reading in which students curate information from a variety of internet sources. Initially, checklists could be used to introduce students to analyzing online sources. These early examples should be selected to illustrate checklists’ limitations and to support the transition to the lateral reading technique. We also suggest that teachers carefully select initial “case studies” that can be readily evaluated through lateral reading to help students self-assess their skill development. As students build this skill over the course of a school year, they can begin independently using lateral reading to critically evaluate online sources that they find on their own.

The lesson we developed can easily be applied to online information sources about many topics. Often, platforms such as Facebook, YouTube, or TikTok point to websites where additional information about the source or topic can be accessed. The goal of our lesson is for students to determine if these are quality sources. Only after making that determination should they consume any of the information provided.

Conclusion

Research has shown that media literacy education can be effective (Hodgin and Kahne 2018), specifically in improving students’ abilities to judge the accuracy of online posts (Kahne and Bowyer 2017). In this article we have described the development of a lesson and approach based on contemporary recommendations for increasing media literacy among youth by teaching the critical-thinking skills of lateral reading.

Acknowledgments

We thank the National Science Foundation Plant Genome Research Program (IOS # 1546900) for support of this project. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of the National Science Foundation.

Troy E. Hall (Troy.Hall@oregonstate.edu) is Professor and Head of Oregon State University’s Forest Ecosystems and Society Department, Corvallis, Oregon. Jay Well is Associate Director of Precollege Programs and Science and Math Investigative Learning Experiences (SMILE) at Oregon State University, Corvallis, Oregon. Elizabeth Emery is Environmental Campaign Manager at the Association of Northwest Steelheaders, Portland, Oregon.

citation: Hall, T.E., J. Well and E. Emery. 2021. Fact-checking in an era of fake news: A template for a lesson on lateral reading of social media posts. Connected Science Learning 3 (3). https://www.nsta.org/connected-science-learning/connected-science-learning-may-june-2021/fact-checking-era-fake-news
