Engineering Essential Attributes of Cooperative Learning and Quality Discourse in a Large Enrollment Course by Leveraging Clicker Devices

Journal of College Science Teaching—March/April 2020 (Volume 49, Issue 4)

By Christopher Bauer

This article describes how clickers (student response systems) may be used to assess and support the development of productive process skills and discourse patterns within student teams during class periods. Clicker questions may poll the class about specific features of the internal workings of teams, such as role rotation, helpful or distracting behaviors, and the richness or evenness of discourse. Displaying polling results to the class sets up a teachable moment about developing effective team communication. One question per class session provides several dozen opportunities over a course to raise student awareness of effective team communication and potentially to improve those skills. This innovation may be particularly advantageous for large classes, where a single instructor cannot efficiently monitor student team activity. This is the first report of the use of clickers to assess and support the learning of team process skills.

The clicker (also known as an “audience,” “classroom,” or “student” response system) has become ubiquitous in STEM classrooms (Beatty & Gerace, 2009; Chen, Zhang, & Yu, 2017; Goldstein & Wallis, 2015). A search of the Web of Science database identified about 800 articles across 80 journals with a title or abstract containing the word “clicker.” Clicker systems poll students about their ideas and project the aggregated data, informing both the instructor and the students about what the class is thinking. Wireless service has improved to the point where clicker hardware and software are straightforward to use, are often supported by campus technology services, and are integrated with course management systems. Clicker devices typically allow multiple-choice responses, although some allow text or graphics. Both free and fee-based polling services are available for cell phones. Despite this wide integration, studies of chemistry faculty in higher education suggested that the user pool a decade ago was dominated by early adopters (Emenike & Holme, 2012) and that the utilitarian decisions of individual faculty currently limit implementation to large-enrollment, entry-level courses (Gibbons et al., 2017). Nevertheless, it is now rare to find a colleague who does not know what a clicker is.

Something is notably missing from studies of clicker implementation: using clickers to assess and promote effective team interaction and discourse. Nearly all of the literature focuses on content, not process (Freeman & Vanden Heuvel, 2015). This gap is surprising, since a frequent purpose of clickers is to spark student interaction and discussion. Although there is increasing interest in how clickers may create (or impede) opportunities for students to interact and co-construct knowledge (see citations below), there have been no reports of clickers used directly to assess team process, to encourage productive team behaviors, or to support effective team discourse. This article describes the strategic design and implementation of clicker questions with the express purpose of revealing and improving these important characteristics of effective team learning (Hodges, 2017; 2018).

An abundant literature on clickers has accumulated over the past two decades. Citations here are limited to higher education STEM and psychology, but there are many reports in business, health, and other social sciences, as well as in pre-college settings. Some reports are personal case studies in which faculty describe and encourage implementation (Cotes & Cotua, 2014; Hodges et al., 2017; King, 2011; Koenig, 2010; Milner-Bolotin, Antimirova, & Petrov, 2010; Ribbens, 2007; Sevian & Robinson, 2011; Skinner, 2009). Other articles describe empirical or theoretically grounded studies of implementation strategies, ranging from quasi-experimental to more rigorously controlled designs (Adams, 2014; Brady, Seli, & Rosenthal, 2013; Buil, Catalan, & Martinez, 2016; Fortner-Wood, Armistead, Marchand, & Morris, 2013; Gray & Steer, 2012; Knight, Wise, & Sieke, 2016; Kulesza, Clawson, & Ridgway, 2014; Mayer et al., 2009; Morgan & Wakefield, 2012; Niemeyer & Zewail-Foote, 2018; Oswald, Blake, & Santiago, 2014; Pearson, 2017; Smith, Wood, Krauter, & Knight, 2011; Solomon et al., 2018; Terry et al., 2016; Turpen & Finkelstein, 2009; Van Daele, Frijns, & Lievens, 2017; Wolter, Lundeberg, Kang, & Herreid, 2011). A handful of status reviews and meta-analyses have recently appeared (Castillo-Manzano, Castro-Nuno, Lopez-Valpuesta, Sanz-Diaz, & Yniguez, 2016; Chien, Chang, & Chang, 2016; Hunsu, Adesope, & Bayly, 2016; MacArthur & Jones, 2008; Vickrey, Rosploch, Rahmanian, Pilarz, & Stains, 2015).

Fewer studies have addressed metacognition by probing specifically for judgments of knowing (Brooks & Koretsky, 2011; Egelandsdal & Krumsvik, 2017; Herreid et al., 2014; Murphy, 2012; Nagel & Lindsey, 2018). Several articles, through observation of student teams, have examined patterns of student discourse and decision-making (Anthis, 2011; James, Barbieri, & Garcia, 2008; James & Willoughby, 2011; Knight, Wise, Rentsch, & Furtak, 2015; Knight, Wise, & Southard, 2013; Lewin, Vinson, Stetzer, & Smith, 2016; MacArthur & Jones, 2013; Perez et al., 2010). These mechanistic studies explore whether students engage with each other, what their conversation involves, and how they decide on a clicker response. Based on these observations, the authors have offered advice to students and faculty (James & Willoughby, 2011; Knight et al., 2013; MacArthur & Jones, 2013).

This article takes a more direct approach and describes for the first time an explicit strategy for using clickers to assess these team process and communication behaviors, with concomitant reporting of results to the class, in order to encourage improvements in these behaviors.

Improving process skills (Stanford, Ruder, Lantz, Cole, & Reynders, 2017) and understanding discourse patterns (Knight et al., 2013; Kulatunga, Moog, & Lewis, 2014; Moon, Stanford, Cole, & Towns, 2017) are two current interest areas for active learning research and curriculum reform in STEM. Process skills include intra-team dynamics (management, teamwork, communication). Development of these skills has been a key reason for using cooperative learning. Research on cooperative learning establishes the importance of five critical features: face-to-face promotive interaction, positive interdependence, individual accountability, team process assessment, and team skill development (Johnson, Johnson, & Smith, 1991). Clickers may be used to assess student behaviors and provide evidence for how well course structure supports these critical features.

Student discourse (what they say, to whom, and how) is also of interest because particular types of discourse seem to encourage engagement and thinking (Chi, Kang, & Yaghmourian, 2017; Christian & Talanquer, 2012; King, 1990; Michaels, O’Connor, & Resnick, 2008; Moon et al., 2017; Young & Talanquer, 2013). Clicker questions can provide insight regarding discourse patterns and behaviors. This strategy may be particularly fruitful in large classes, where a single instructor would have difficulty monitoring and facilitating improvements in team behavior by direct engagement with every team.

Setting

Student participants were enrolled in a first-year general chemistry course at the University of New Hampshire from 2016 to 2018. The population consisted primarily of 150 to 200 first- and second-year students in the biological and health sciences; about 60% were female, and fewer than 5% were non-native English speakers or members of underrepresented groups. The class format consisted of frequent student-centered activities: content explorations (Process-Oriented Guided Inquiry Learning [POGIL]), think-pair-share, and group quizzes. The room was theater style, with fixed seats, one projection screen, and whiteboards on the front wall.

Clicker questions were used for content review, to gauge progress and outcomes from POGIL explorations, and to complement slide-projected presentations. Clickers contributed 3% toward the course grade, and credit was awarded for each clicker response. Nearly all clicker events were “low stakes”; that is, students were not penalized for choosing “wrong” options. High-stakes questions tend to undercut the goal of encouraging deeper and more equitable discussions (James et al., 2008). Clicker questions regarding team processes were asked within the last two minutes of class. The instructor provided continual verbal support for clicker use and reminders about the purpose of process questions. Process questions were introduced with “I’m really curious about …. Please give me an honest response.”
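The participation-credit rule can be made concrete with a short calculation. The sketch below is hypothetical: the article states only that clickers counted for 3% of the grade and that credit was given for each response, so the proportional proration, the function name, and its parameters are assumptions rather than the scheme actually used.

```python
def clicker_grade_points(responses_given: int, polls_offered: int, weight: float = 3.0) -> float:
    """Course-grade percentage points earned from clicker participation.

    Assumption (hypothetical): any answer earns credit, so "wrong" choices are
    never penalized, and the 3% weight is prorated by the fraction of polls answered.
    """
    if polls_offered <= 0:
        raise ValueError("polls_offered must be positive")
    # Clamp to the valid range so bad records cannot exceed the full weight.
    answered = min(max(responses_given, 0), polls_offered)
    return weight * answered / polls_offered


print(clicker_grade_points(90, 120))  # 2.25
```

Because correctness never enters the calculation, the scheme rewards answering honestly, which is what the team-process questions depend on.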

Positive incentives (contributions to the grade) were used to leverage behaviors that supported a stronger cooperative structure, particularly promotive interaction and positive interdependence. Team functions were set up to become routine behaviors. Given the large class size, some team management responsibilities and record-keeping tasks were moved from the instructor to the student teams. At the start of each class, a slide instructed students to form teams of three to four, assign team roles, obtain materials, and prepare for clicker use. Team membership was not assigned, and students were welcome to work with whomever they wished. This allowed teams to adjust membership to deal with absences. Regular roles within each team included, at minimum, a Recorder to make a collectable team record and a Spokesperson to report on behalf of the team, either orally or by writing on the board. Each day, a team report form was completed with “things learned,” “questions arising,” or specific answers to questions posed in class. Each team listed the names and roles of its members. Individuals could earn final exam bonus points for serving as Recorder or Spokesperson on at least one quarter of the class days. Thus, teams had to rotate roles so that everyone would have a chance to earn the bonus. A more prescriptive stance can be taken regarding team membership and role assignments (e.g., fixed group membership based on prior knowledge of student characteristics, and role rotation plans to ensure equitable opportunities) (Hodges, 2018; Simonson, 2019), but this requires additional instructor management. After several class periods, the daily reminders were minimized because everyone knew what to do.
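The role-rotation bonus can be checked mechanically from the collected team report forms. In this hypothetical sketch, each form is flattened into (day, name, role) records, and a simple round-robin rotation (an assumption; the article let teams rotate however they chose) illustrates why a team of three or four rotating two roles comfortably meets the one-quarter threshold. All names and functions are illustrative.

```python
from collections import defaultdict
from fractions import Fraction

# Roles that count toward the final exam bonus, as described in the text.
BONUS_ROLES = {"Recorder", "Spokesperson"}


def bonus_eligible(records, total_class_days, threshold=Fraction(1, 4)):
    """Names of students who held a bonus role on at least `threshold` of class days.

    records: iterable of (day, name, role) tuples (a hypothetical format for the
    daily team report forms). Days are counted once per student, even if a
    student appears on more than one form that day.
    """
    days_in_role = defaultdict(set)
    for day, name, role in records:
        if role in BONUS_ROLES:
            days_in_role[name].add(day)
    needed = threshold * total_class_days
    return {name for name, days in days_in_role.items() if len(days) >= needed}


def rotate_roles(team, days, roles=("Recorder", "Spokesperson")):
    """Hypothetical round-robin: on day d, role i goes to member (d + i) mod team size."""
    return [(d, team[(d + i) % len(team)], role)
            for d in range(days)
            for i, role in enumerate(roles)]


team = ["Ana", "Ben", "Cy", "Dee"]  # hypothetical team of four
print(sorted(bonus_eligible(rotate_roles(team, 40), 40)))
```

With four members sharing two roles, each member holds a role on half of the days, so the one-quarter threshold leaves room for absences.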

Results and discussion

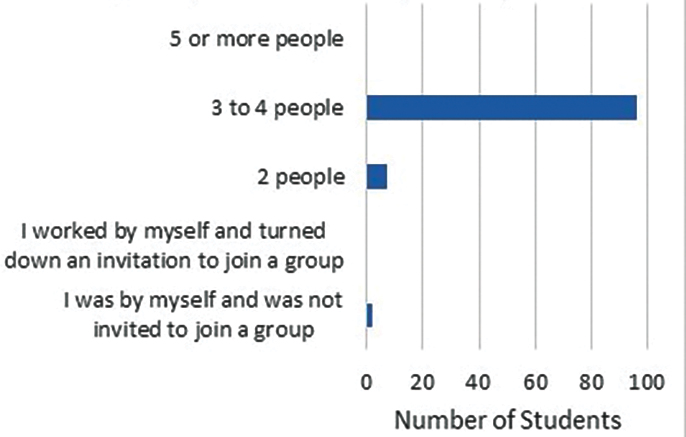

The argument here is that clickers may be used to check the status of and to promote improvements of key components of effective cooperative learning. Figure 1 shows a question asked at the end of one of the first class periods. It provided evidence that students joined together in teams of three or four, as requested. Three-member teams were suggested for this theater-style room because three in a row can see and refer to working materials in front of the center person. Teams of two were infrequent, foursomes were not reported, and singletons were rare. This team membership question can be asked multiple times early in the semester to provide formative feedback. It also confirms for the instructor that recommendations on team size were followed, and it shows students that compliance was nearly universal. A parallel question can be asked at the end of the semester as summative assessment. In this class, most students (about 80%) stayed with the same one or two teams all semester.

Figure 1. Response to “I worked in a group of how many today”

Two choices in Figure 1 also send a message concerning lone individuals. The choice “I was by myself and was not invited to join a group” suggests to everyone that inviting individuals to join one’s group is encouraged. In other words, it is not just the responsibility of lone individuals to reach out; it is everyone’s responsibility in this class to be inclusive. The potential for exclusion has arisen as an issue in team-oriented instructional settings (Eddy, Brownell, Thummaphan, Lan, & Wenderoth, 2015; Hodges, 2018), so this positive messaging and feedback may help mitigate the problem. The choice “I worked by myself and turned down an invitation to join a group” subtly encourages students to accept invitations to join a group. Although responses are self-reports and may be subject to social desirability bias, the anonymity of clicker responses should mitigate that effect (Paulhus & Reid, 1991). Students report appreciating anonymity (Fallon & Forrest, 2011), which suggests that they are inclined to report honestly.

The language of questions and choices was chosen to describe behaviors without being evaluative or judgmental: the goal is “Here is what I see” rather than “Here is what I judge about the behavior.” The designers of the Classroom Observation Protocol for Undergraduate STEM (COPUS) (Smith, Jones, Gilbert, & Wieman, 2013) faced the same issue, because items involving value judgments were more likely to lead to bias or conflict. The tone of each clicker question was intended to encourage appropriate behaviors without being stigmatizing or embarrassing. Lastly, because clicker responses counted toward the course grade, it was important that every student see an option they could choose for each question. Results were displayed to the class, allowing the instructor to reflect on the frequency of productive behaviors or to comment on changes in behavior that would be improvements. In the case of Figure 1, students were thanked for organizing (for the most part) into groups of effective size, and the “singleton” issue was mentioned as something to be aware of in the future (i.e., you should invite people in). Thus, this one slide provided an opportunity both to assess team performance and to support the building of teamwork skills, which correspond to two of the five critical features of cooperative learning: team process assessment and team skill development.
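Polling software performs this aggregation and display automatically. Purely as an illustration, the sketch below shows the computation behind a results slide, including one design point from the text: every choice is listed even when few or no students select it, so options such as the “singleton” choices stay visible to the class. The choice labels, the sample responses, and the function names are hypothetical, not data from the course.

```python
from collections import Counter


def summarize_poll(responses, choices):
    """Percentage of respondents selecting each choice, in display order.

    Choices nobody picked are kept at 0% so the full option set is shown.
    """
    counts = Counter(responses)
    unknown = set(counts) - set(choices)
    if unknown:
        raise ValueError(f"responses not among the choices: {sorted(unknown)}")
    total = len(responses)
    return [(c, 100.0 * counts[c] / total if total else 0.0) for c in choices]


def render(summary, width=40):
    """Print a simple text bar chart, one line per choice."""
    for choice, pct in summary:
        bar = "#" * round(pct / 100 * width)
        print(f"{choice:<20} {bar} {pct:.0f}%")


# Hypothetical labels and responses, for illustration only.
choices = ["alone, not invited", "alone, declined", "2", "3", "4"]
render(summarize_poll(["3", "3", "2", "3"], choices))
```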

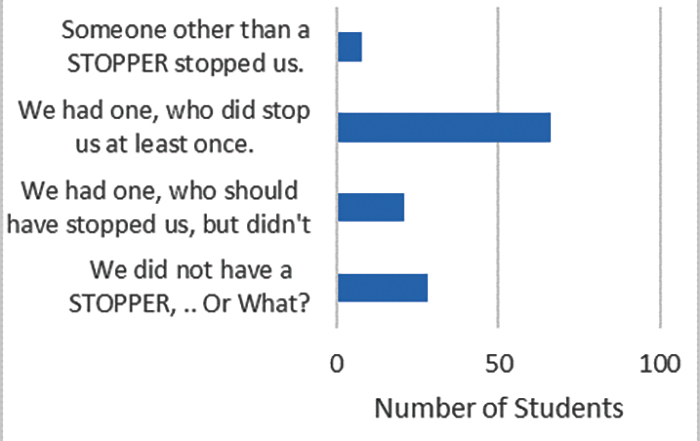

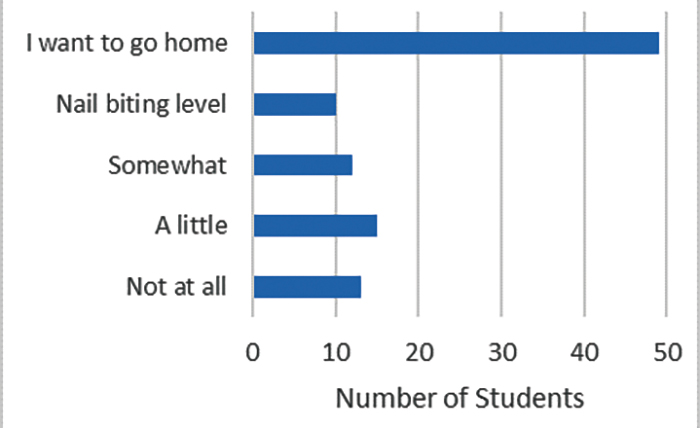

Figures 2 and 3 show questions regarding role implementation and perception (to support positive interdependence). Figure 2 looks at student use of a new role, the “Stopper.” If the conversation seemed to be leaving anyone behind, the Stopper was to intervene and “stop” the conversation. This question was a fidelity check on the use of that role. Figure 3 asked about the role of Spokesperson, who may have to report verbally or write on the board. As Figure 3 shows, it is typically not a favorite role. The wording was intended to ease Spokesperson anxiety, to open a dialogue about the value of the role, and to signal that the team was responsible for supporting its Spokesperson. It also reminded students of the final exam bonus points.

Figure 2. Check for fidelity of implementation of role of “Stopper” in group

Figure 3. Response to “When you play role of spokesperson, how anxious are you really?”

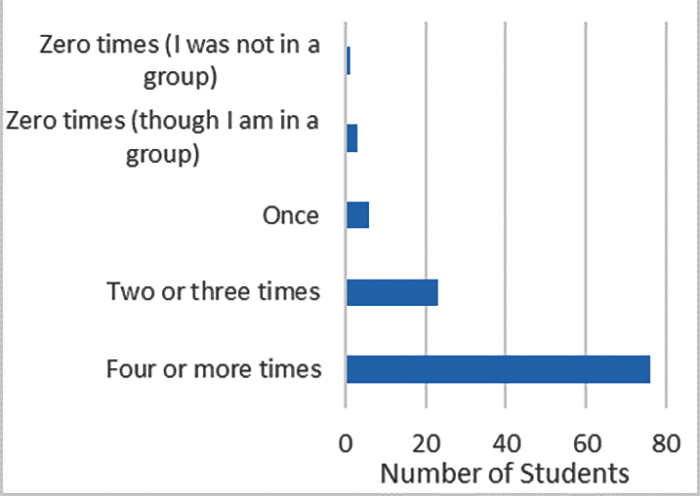

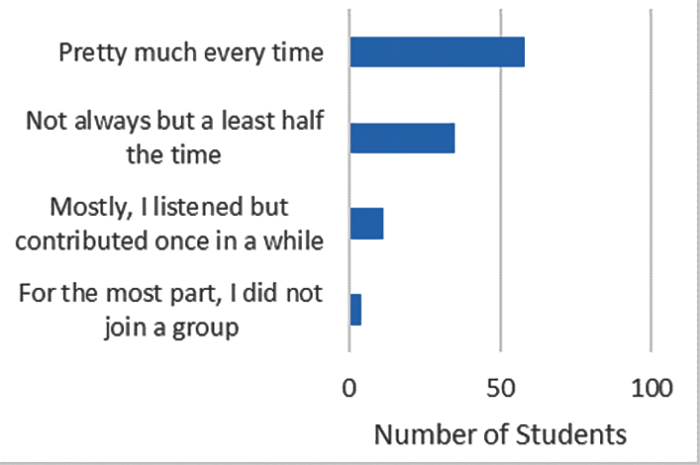

Questions can interrogate the patterns of talk occurring within teams. Inequity of contributions within teams has been noted in previous research (James et al., 2008). Figure 4 explores how often each person reported contributing to the conversation. Figure 5 asks a similar question, but in summative perspective over the semester. Results suggest that most students were participating.

Figure 4. Response to “So far today, how many times did you say something within your working group?”

Figure 5. Response to “Over the semester, how often during group discussion did you say something (observations, ideas, suggestions...)”

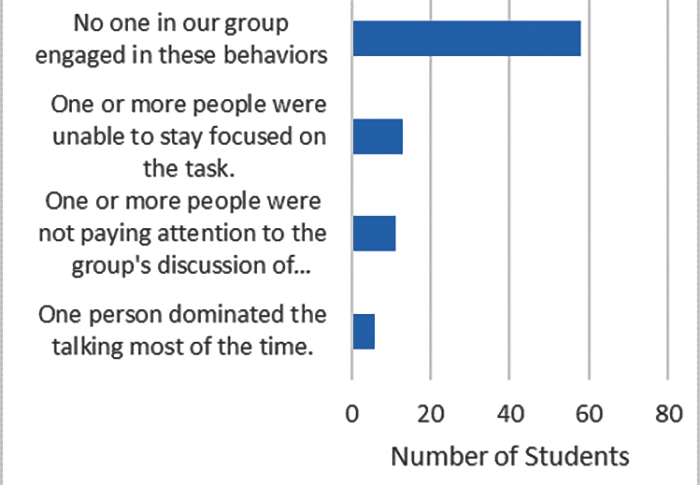

Figure 6 shows information regarding ineffective team behaviors. This question indicates that most teams were not distracted, but about 30 students reported team members who were off task or dominating the conversation. Assuming that reports came from all members of each affected team and a team size of three, one can estimate that perhaps ten teams (out of about 30 in class) had some challenges of this nature. The instructor praised team function as a whole and pointed out behaviors to avoid. Anonymity may have encouraged honest reporting of this “bad behavior.”
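The estimate of ten teams divides the number of reporting students by an assumed team size. A small sketch makes that assumption explicit and also gives the opposite extreme, in which each report came from a different team; the function name and the cap at the total number of teams are illustrative additions, not part of the original analysis.

```python
import math


def affected_team_range(reporting_students: int, team_size: int, total_teams: int) -> tuple[int, int]:
    """Bounds on how many teams a count of anonymous reports could represent.

    fewest: every member of each affected team reported (the estimate in the text).
    most:   each report came from a different team, capped at the number of teams.
    """
    fewest = math.ceil(reporting_students / team_size)
    most = min(reporting_students, total_teams)
    return fewest, most


print(affected_team_range(30, 3, 30))  # (10, 30)
```

Under the three-member assumption, about 30 anonymous reports are consistent with anywhere from roughly ten teams to every team in the room, so the figure of ten sits at the low end of what the data allow.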

Figure 6. Response to “One of the following behaviors happened during our group work”

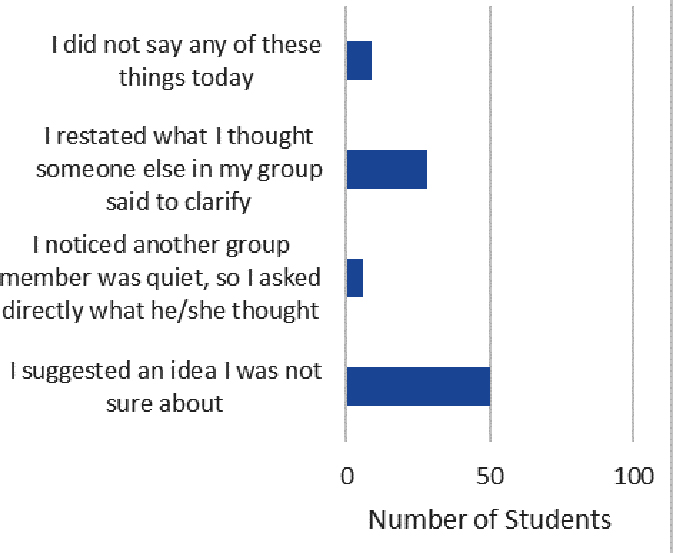

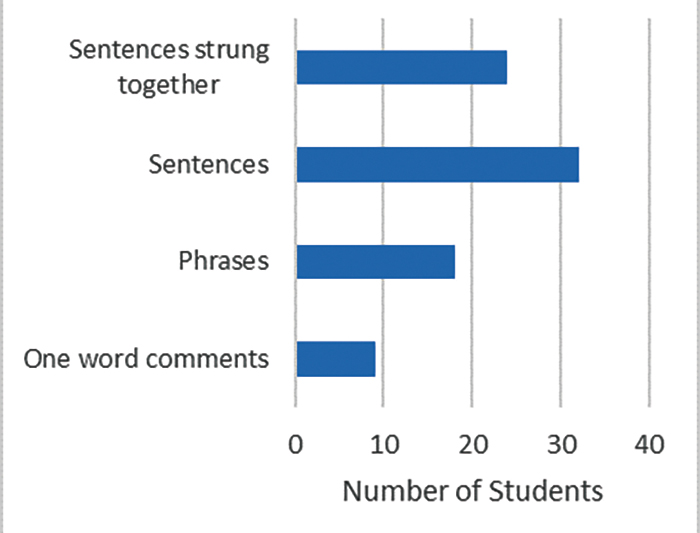

Clicker questions can also encourage talk that is more intellectually rich. The question in Figure 7 provides insight into communication moves that are valuable for promoting productive and equitable discussion (rephrasing, inviting, proposing). The question in Figure 8 takes a different perspective, that of an observer reporting on the overall discourse structure of the whole team. This question sought insight into whether students were expressing complete thoughts and (hopefully) arguments, as opposed to just identifying answers without much rationale. Single-word utterances would be evidence of lower-level recall or recognition activity; more frequent use of full sentences would be evidence of higher-level thinking, explanation, and argument-building. (Research on student discourse in teams typically requires extended utterances to establish that an argument is being built and justified.) Furthermore, simply asking students about their verbal communication patterns in a clicker question reminds them to engage in thinking, and evidence shows that explicit reminders can lead to deeper reasoning (Knight, Wise, & Southard, 2013). Additionally, because clicker responses count toward the grade, this accountability may nudge students to engage in better discussions (Knight et al., 2016).

Figure 7. Response to “(If you worked with a group), did you do any of these things?”

Figure 8. Response to “If someone were listening to your group today, they would have heard mostly...”

What are the relative affordances and costs of adding clicker questions to monitor and encourage team process? Students can read, process, and respond to a single question in about 30 seconds. Instructor comments and recommendations may take another 30 seconds. Questions can be repeated on subsequent days as reminders and to monitor for change. One question a day provides 30 to 40 opportunities in a semester to assess and improve team dynamics. Thus, a small investment of class time, using a technology that is likely already in place, can direct substantial attention to improving student team process skills. At the same time, the instructor will gain substantial insight concerning how students communicate, helping to guide decisions about how to structure discussion tasks.
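The time-cost argument reduces to simple arithmetic. The sketch below uses the 30-second estimates from the text; the 50-minute class period is an assumption added only to express the cost as a share of class time.

```python
def process_question_cost(sessions: int, respond_s: float = 30, comment_s: float = 30,
                          period_min: float = 50) -> tuple[float, float]:
    """Return (total minutes per semester, fraction of each class period) for one
    team-process question per session. period_min is an assumed period length."""
    per_question_min = (respond_s + comment_s) / 60
    return sessions * per_question_min, per_question_min / period_min


for n in (30, 40):
    total, share = process_question_cost(n)
    print(f"{n} sessions: {total:.0f} min per semester, {share:.0%} of each period")
```

On these assumptions, 30 to 40 minutes per semester, about 2% of each period, buys 30 to 40 structured prompts about team process.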

Conclusions and implications

One approach to implementing cooperative learning in STEM higher education is to alter the physical teaching space, for example, the SCALE-UP model (Foote, Knaub, Henderson, Dancy, & Beichner, 2016). Rooms are designed with seating conducive to discussion, means for visible sharing of work products, and pathways that allow student and instructor movement. The capacity of these rooms tops out at about 100 students (10 to 25 groups, depending on seating). Because many larger institutions have course capacities two to six times larger than this, moving to a SCALE-UP format poses significant challenges: creating or renovating space, assigning more faculty to smaller-enrollment classes, and aligning schedules. A compromise approach implements hierarchical instructional facilitation (e.g., Lewis & Lewis, 2005; Yezierski et al., 2008). Students in class are organized into clusters of small teams, each cluster located near one graduate or undergraduate facilitator who mediates between the teams and the course instructor. This approach can use traditional rooms, class sizes, and schedules, but it requires staffing and training of student facilitators and fortuitous schedule overlap. The logistic and financial challenges are nontrivial and perhaps unsustainable.

A third model is possible: one that works within the constraints of a single instructor and a large-enrollment classroom and, through design, establishes features that emulate the important research-based characteristics of a cooperative learning environment. For a class of 200, where there may be 40 to 60 teams, it is impossible to give more than a few teams the direct attention they need. Too much time may be spent monitoring team membership, addressing absentee issues, or cajoling individuals to get together as a team. In such a setting, it is also difficult to facilitate with good humor rather than frustration. Students are adults and respond positively to “suggest and expect” rather than “demand and punish.” Consequently, the goal is to enact management actions that are true to the principles of cooperative learning cited previously but that avoid increasing complexity or time demands for the instructor or students. In particular, class processes must:

1. Be simple for the instructor to implement and simple for students to understand, so that the processes become routine. Devices or procedures that require more than a few minutes to explain, or that require repeated instructions, end up misinterpreted, which interferes with the intended learning process and wastes time.
2. Minimize extra work for the instructor yet leverage important features that support learning outcomes for students. Clicker questions that support assessment of team communication skills fit this criterion.
3. Turn some management responsibility over to students (e.g., team formation, role rotation), incentivize it by awarding course points, and then check for implementation via clickers.

This article presents a proof of concept supported by a research-based rationale. Data have been presented from a real classroom, along with implementation guidance. However, there is not yet evidence indicating whether the suggested questions or approach actually lead to the desired improvements in team communication. Certainly, the clicker questions and choices presented here could be expanded and improved, and research on their efficacy pursued.

The literature review conducted for this article suggests that the pedagogic vision for clickers has been constrained by thinking of them only as an electronic extension of multiple-choice content testing (Beatty & Gerace, 2009). This article steps outside of that box by arguing that clickers can be used to monitor and direct improvement in team behaviors. Other authors have also stepped outside the box with innovative applications or approaches. For example, Bunce, Flens, and Neiles (2010) studied student attentiveness patterns during lecture and active-learning class periods. Cleary (2008) replicated classic psychology experiments in perception and recall. Organic chemists devised clever text string responses to allow a broader array of choices for students to describe molecular structures, synthetic sequences, or mechanistic reaction pathways (Flynn, 2011; Morrison, Caughran, & Sauers, 2014). More good ideas are out there.

Acknowledgments

Thanks to Dr. Kathleen Jeffery for comments on the manuscript.

Christopher Bauer (Chris.Bauer@unh.edu) is a professor in the Department of Chemistry at the University of New Hampshire in Durham, New Hampshire.
