Research & Teaching

Exploring Barriers to the Use of Evidence-Based Instructional Practices

Journal of College Science Teaching—November/December 2021 (Volume 51, Issue 2)

By Grant E. Gardner, Evelyn Brown, Zachary Grimes, and Gina Bishara

Recent reform documents in postsecondary science, technology, engineering, and mathematics (STEM) fields recommend the use of evidence-based instructional practices (EBIPs) in the classroom. National surveys in the United States suggest a continued reliance on instructor-centered practices that have little evidentiary support for long-term impacts on student learning. This study utilized a cross-sectional survey research design to explore the awareness and use of EBIPs by STEM instructors at two large universities (n = 104) in the southeastern United States. We explored the individual and situational barriers that participants perceived as impairing their use of EBIPs. We also examined how these barriers may be related to faculty use of these instructional practices. Results indicate high awareness of many EBIPs but little subsequent classroom use. Results also suggest that STEM faculty’s gender and pedagogical beliefs may mediate what they perceive as significant barriers to EBIP implementation.

STEM faculty use of evidence-based instructional practices

Recent reform documents in the United States related to postsecondary science, technology, engineering, and mathematics (STEM) education have reinforced the need for classroom enactment of evidence-based instructional practices (EBIPs) to support student STEM learning (e.g., AAAS, 2011; NRC, 2003). EBIPs are defined as peer-reviewed classroom instructional strategies that have both theoretical and empirical support for improving student outcomes (e.g., Freeman et al., 2014; Michael, 2006; Prince, 2004). Many EBIPs in STEM fields are student-centered (Arthurs & Kreager, 2017; Chi & Wylie, 2014; Engle & Conant, 2002). Despite the extensive evidence for EBIPs, STEM faculty continue to support instructional decisions with anecdotes and personal experiences (Eagan, 2016; Lammers & Murphy, 2002; Stains et al., 2018).

Lecture methods continue to dominate postsecondary STEM instruction (Eagan, 2016; Lammers & Murphy, 2002; Stains et al., 2018); however, closer analyses suggest that STEM faculty are integrating other practices into lecture-heavy instruction as well (Campbell et al., 2017; Stains et al., 2018). Although previous studies explore STEM faculty use of EBIPs, it is likely that these studies overestimate EBIP use (Cutler et al., 2012; Froyd et al., 2013; Henderson et al., 2012). Response bias may lead to an inaccurate representation of the population, with faculty who are aware of or using EBIPs being more likely to respond to a survey that they perceive as relevant (Froyd et al., 2013). In addition, STEM faculty’s self-reported use of EBIPs may not reflect the fidelity of implementation of the practice (Ebert-May et al., 2011; Henderson & Dancy, 2007; Stains et al., 2018; Turpen & Finkelstein, 2010). These limitations are not cited in an attempt to invalidate previous research, but rather to highlight that the use of EBIPs continues to be low among STEM faculty nationwide, despite these methods’ empirical support.

Why do many STEM faculty not use EBIPs?

It is tempting to assume that a lack of awareness of EBIPs by postsecondary STEM instructors leads to limited implementation (Froyd et al., 2013; Henderson et al., 2012). However, studies of faculty use of EBIPs in physics, engineering, and geoscience demonstrate that awareness, but not implementation, of at least some EBIPs can be upwards of 80% (Froyd et al., 2013; Henderson et al., 2012; Macdonald, 2005). What barriers exist, either real or perceived, that might explain this awareness-to-practice gap? Why do instructors continue to rely on anecdotal or personal evidence instead of research-based empirical evidence when making instructional decisions in STEM (Andrews & Lemons, 2015)?

The extant literature loosely categorizes barriers to implementation as situational/contextual or individual (Henderson & Dancy, 2007; Lund & Stains, 2015). Situational barriers are “aspects outside of the individual that impact or are impacted by the instructors’ instructional practices” (Henderson & Dancy, 2007, p. 10). Historically, research has focused on these barriers, over which instructors have, or perceive that they have, no control (Brownell & Tanner, 2012; Sunal et al., 2001). Individual characteristics that might serve as barriers to implementation of EBIPs are “instructor’s conceptions (i.e., beliefs, values, knowledge, etc.) about instructional practices” (Henderson & Dancy, 2007, p. 10). Henderson and Dancy (2007) suggest that both types of barriers must be considered when attempting to predict faculty use of EBIPs.

Brownell and Tanner (2012) argue that the “big three” barriers to implementation of EBIPs amongst STEM faculty are a lack of (a) training in evidence-based pedagogies, (b) perceived time to effectively implement evidence-based pedagogies, and (c) institutional support and implementation incentives. In this article, we consider the second and third barriers as situational barriers. The first barrier can be defined as situational if the lack of training is due to institutional structures that do not provide opportunities for training. Other situational barriers can include perceived lack of time for proper preparation and implementation of EBIPs, lack of institutional resources and incentives to appropriately implement EBIPs, and perceived resistance to EBIP use by colleagues. Empirical research has supported the claim that perceived lack of time is one of the most common situational barriers for some groups of STEM faculty (Dancy & Henderson, 2010).

Individual characteristics as barriers in EBIP implementation have only recently been explored in the literature (Gibbons et al., 2018). The most commonly studied individual barrier is instructor belief about teaching and learning, due to its strong connection to instructional practice (Gibbons et al., 2018; Norton et al., 2005). However, the link between more reform-oriented beliefs and instructional practices remains tenuous (Gibbons et al., 2018; Idsardi et al., 2017). For this study, we relied on a conception of reform-oriented beliefs articulated by Luft and Roehrig (2007) and Addy and Blanchard (2010). These authors conceptualize beliefs on a scale of traditional (a focus on information, transmission, structure, and/or sources) to reform based (a focus on mediation of student knowledge or interactions).

Another individual characteristic relevant to faculty engagement in EBIPs is their motivation to engage in learning about and implementing EBIPs (Bouwma-Gearhart, 2012). Some work has shown that STEM faculty are only extrinsically motivated to engage in teaching professional development due to factors such as weakened professional ego (Bouwma-Gearhart, 2012). Other researchers have used expectancy-value theory (a theory of motivation) to examine why participants engage in EBIPs (Matusovich et al., 2014). These authors found that expectancy of success in using EBIPs, perceived cost of using EBIPs, and the utility value of EBIPs in their own classrooms were important to participant decision-making. Collectively, these papers indicate that there is a complex interaction of both situational/extrinsic and intrinsic motivational variables that might impact what instructors choose to do in their classrooms.

Achievement goal theory has proven useful to understanding student motivation (Midgley, 2002; Butler, 2007; Retelsdorf et al., 2010). Butler (2007) claims that the classroom constitutes an achievement “arena” not only for students but for teachers as well. Instructors may have one of three common orientations:

- Learning approach refers to an individual who wants to master a task and develop their abilities for personal satisfaction.

- Performance approach refers to an individual who seeks positive reinforcement from peers.

- Performance avoidance refers to an individual who is motivated by avoiding negative judgments.

There is some work showing that teachers’ goal orientations can impact their instructional practices (Abrami et al., 2004; Retelsdorf & Günther, 2011), but this research is in its infancy and these constructs have never been examined in higher-education contexts. We utilized survey items from previous studies to assess the instructional goal orientations of our participants (Abrami et al., 2004; Retelsdorf & Günther, 2011).

Study goals and research questions

This study seeks to better understand undergraduate STEM instructors’ perceived situational and individual barriers to EBIP implementation (Lund & Stains, 2015). While several studies have focused on instructors’ perceived situational barriers, few have focused on individual barriers to STEM faculty use of EBIPs. The following research questions guided this study:

- Research Question 1: What are the levels of awareness and use of EBIPs for STEM faculty?

- Research Question 2: What situational and individual barriers to adoption of EBIPs do STEM faculty report, and how does the magnitude of these perceived barriers compare?

- Research Question 3: What demographic characteristics as well as situational and individual barriers predict STEM faculty members’ use of EBIPs?

Methods

Context and participants

This study utilized a cross-sectional survey research design, with the survey distributed to all instructional and tenure-track faculty of basic and applied sciences at two large southeastern universities. These institutions are classified as doctoral/research universities (DRU) and are the second- and third-largest institutions in terms of undergraduate enrollment in their respective states. We received a total of n = 104 responses with some or all data completed across both universities, resulting in a 47.93% response rate. We analyzed each construct or group of items independently. As long as a respondent completed all the items within a “construct” set, they were included in the analysis of that construct.

Survey instrument design and analysis

The survey instrument was adapted from previous instruments. To measure levels of awareness of EBIPs, participants were asked to rate their experience with 13 different EBIPs along a 6-point scale. The response coding protocol was the same as that used by Froyd et al. (2013). This yielded a measure of instructor awareness of specific EBIPs along the following scale: 1 = faculty were unaware of the strategy; 2 = faculty had heard about the strategy “in name only” but had never used it; 3 = faculty had heard about the strategy but did not think it was appropriate for their class; 4 = faculty had heard about the strategy and were planning on using it in their class; 5 = faculty currently used the strategy; and 6 = faculty had discontinued use of the strategy.

In line with previous research (Froyd et al., 2013), if a participant selected any value of 2 through 6 on the item, it indicated they were aware of the strategy. The same scale items were used to indicate faculty use of EBIPs. A selected value of 2 through 4 indicated that faculty were aware but had never used the strategy, 5 indicated a faculty member was currently using the strategy, and 6 indicated a faculty member was aware but had discontinued use of the strategy. Representativeness and content validity of provided EBIPs were aligned with previous studies and confirmed through a review of recent policy documents (AAAS, 2011; Froyd et al., 2013).
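The coding rules above can be sketched in a few lines of Python. This is a minimal illustration rather than the analysis script used in the study: the function names and the sample responses are hypothetical, and only the 1 through 6 response coding is taken from the text.

```python
from collections import Counter
from typing import Dict, List

# Response codes on the 6-point scale described above.
UNAWARE, NAME_ONLY, NOT_APPROPRIATE, PLANNING, CURRENT, DISCONTINUED = range(1, 7)


def is_aware(code: int) -> bool:
    """Any response of 2 through 6 counts as awareness of the strategy."""
    return NAME_ONLY <= code <= DISCONTINUED


def use_status(code: int) -> str:
    """Map a response code to the use categories described in the text."""
    if code == UNAWARE:
        return "unaware"
    if code in (NAME_ONLY, NOT_APPROPRIATE, PLANNING):
        return "aware, never used"
    if code == CURRENT:
        return "currently using"
    if code == DISCONTINUED:
        return "discontinued"
    raise ValueError(f"response code out of range: {code}")


def summarize(responses: List[int]) -> Dict[str, float]:
    """Percent aware (of all respondents) and percent currently using (of aware respondents)."""
    aware = [r for r in responses if is_aware(r)]
    counts = Counter(use_status(r) for r in aware)
    pct_current = 100 * counts["currently using"] / len(aware) if aware else 0.0
    return {
        "pct_aware": 100 * len(aware) / len(responses),
        "pct_current_of_aware": pct_current,
    }


# Hypothetical responses from ten instructors for a single EBIP.
example = [1, 2, 5, 5, 3, 4, 6, 5, 1, 2]
print(summarize(example))  # {'pct_aware': 80.0, 'pct_current_of_aware': 37.5}
```

Separating awareness from use status mirrors the way the two measures are reported: awareness as a share of the whole sample, and use as a share of those who were aware of the practice.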

To measure situational barriers to adoption of EBIPs, a comprehensive list of hypothesized responses was gleaned from the literature. These barriers included eight commonly cited situational barriers: (a) perceived lack of training, (b) perceived lack of class time for implementation, (c) perceived lack of preparation time for implementation, (d) perceived lack of evidentiary support for EBIPs, (e) anticipated resistance to EBIPs from students, (f) anticipated resistance to EBIPs from administration, (g) lack of institutional incentives, and (h) lack of institutional resources for using EBIPs.

The perceived strength of each barrier was measured along a 5-point Likert scale, with 0 = no barrier to 5 = the most significant barrier to the use of EBIPs. The survey also asked respondents to rate perceived institutional supports at the departmental and college levels. Perceived supports were rated by participants on these final two items along a 5-point Likert scale from “a lot of support” to “almost none.” Descriptive statistics for Likert scores for each situational barrier across respondents were calculated in order to determine a relative rank of situational barriers, as well as to create variables for the regression analysis.

Two potential individual constraints on adoption of EBIPs were examined in this study: instructor reform-oriented beliefs and instructor goal orientations for engaging in EBIPs. Reform-oriented beliefs were measured using Luft and Roehrig’s (2007) Teacher Beliefs Interview (TBI), which measures epistemological beliefs about teaching and learning, converted to survey items. Items place instructor perspectives along a scale running from traditional through instructional, transitional, and responsive to reform based. Generalizability, reliability, and validity information were reported by Luft and Roehrig (2007; see also Addy & Blanchard, 2010; Gardner & Parrish, 2019). Luft and Roehrig (2007) indicated that items 1, 3, 6, and 7 refer to what faculty believe about student learning, and items 2, 4, and 5 refer to what faculty believe about instructor knowledge. The frequency of individual responses across the seven items was used to calculate a weighted sum that served as a proxy variable for the strength of reform-oriented beliefs. Individual respondent weighted beliefs scores could range from 7 (which would indicate all traditional-level responses, with Likert value = 1 across the seven items, or 1 × 7 = 7) to 35 (which would indicate all reform-oriented responses, with Likert value = 5 across the seven items, or 5 × 7 = 35).
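The weighted sum can be illustrated with a short Python sketch. The text fixes only the endpoints (traditional = 1, reform based = 5); the intermediate weights of 2 through 4, the function name, and the example respondent are assumptions made here for illustration.

```python
# Weights for the five belief categories, ordered from traditional to reform based.
# Only the endpoints (1 and 5) are stated in the text; 2 through 4 are assumed.
WEIGHTS = {
    "traditional": 1,
    "instructional": 2,
    "transitional": 3,
    "responsive": 4,
    "reform-based": 5,
}


def belief_score(item_codes):
    """Weighted sum across the seven TBI-derived items (possible range 7 to 35)."""
    if len(item_codes) != 7:
        raise ValueError("expected one code for each of the seven items")
    return sum(WEIGHTS[code] for code in item_codes)


# Hypothetical respondent: belief codes for items 1 through 7.
respondent = [
    "responsive", "transitional", "reform-based", "instructional",
    "transitional", "responsive", "responsive",
]
print(belief_score(respondent))            # 4 + 3 + 5 + 2 + 3 + 4 + 4 = 25
print(belief_score(["traditional"] * 7))   # lower bound: 7
print(belief_score(["reform-based"] * 7))  # upper bound: 35
```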

Instructor motivations to implement EBIPs were measured using a modified, nine-item Goal Orientation Toward Teaching (GOTT) scale as described by Kucsera et al. (2011). Items measured the subconstructs of learning approach goal orientation, performance approach goal orientation, and performance avoidance goal orientation. Means and standard deviations of Likert scores for each goal orientation across respondents were calculated for analysis. We characterized individual faculty goal orientations utilizing a cluster analysis protocol, with the three goal orientation subconstructs as clustering variables (Fortunato & Goldblatt, 2006).

Regression analysis

We generated regression models to examine the relationship between faculty use of EBIPs and potential individual and situational barriers to EBIP adoption. The outcome variable used for all predictive models was the EBIP engagement indicator, which we calculated using the sum of the frequencies of reported current use of all EBIPs surveyed. In other words, an individual with a high EBIP engagement indicator would be using a lot of different evidence-based practices (as listed in the survey) and using them frequently in their classrooms. Other variables included in the models were taken from the survey items and included years of teaching experience, respondent’s self-identified gender, highest degree earned, typical teaching load, professorial rank, perceived departmental support, percentage of time allocated to teaching, goal orientations cluster membership, goal orientations (performance avoid/approach, learning approach), and reform-oriented beliefs scales. Perceived college support was not used, as it was assumed that perceived departmental support would carry more weight for faculty (also see Figure 4). The perceived barrier index was calculated by taking the average of the Likert scores for each reported barrier item (listed in Figure 3).
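The two derived variables described above can be sketched as follows. This is an illustration under stated assumptions, not the study's code: the engagement indicator is interpreted here as the count of surveyed EBIPs with a "currently use" response (code 5), and the function names and example respondent are hypothetical.

```python
from statistics import mean

CURRENT_USE = 5  # response code for "currently use the strategy"


def engagement_indicator(ebip_responses):
    """Number of surveyed EBIPs the respondent reports currently using (assumed reading)."""
    return sum(1 for code in ebip_responses.values() if code == CURRENT_USE)


def barrier_index(barrier_ratings):
    """Mean Likert rating across the barrier items the respondent rated."""
    return mean(barrier_ratings.values())


# Hypothetical respondent; only a few of the surveyed EBIPs and barriers are shown.
ebips = {"PBL": 5, "collaborative learning": 5, "JiTT": 1, "clickers": 6, "POGIL": 2}
barriers = {"preparation time": 4, "class time": 4, "training": 2, "student resistance": 1}

print(engagement_indicator(ebips))  # 2
print(barrier_index(barriers))      # 2.75
```

Under this reading, a respondent who has discontinued a practice (code 6) does not contribute to the engagement indicator, since only current use is counted.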

Results

Description of sample

The respondents were from a diversity of STEM fields: 37.5% from life sciences, 24.0% from engineering, 17.3% from chemistry, 16.3% from mathematics, 2.9% from computational science, and 1.9% from physics/astronomy. With regard to education, 71.1% had completed a doctoral degree in their field of study, 17.3% had completed a master’s degree, 7.7% had completed a bachelor’s degree, and 1.9% indicated completion of some other degree. As for the current position, 23.1% of the respondents were graduate teaching assistants, 9.6% were non-tenure-track instructors, 16.3% were tenure-track assistant professors, 23.1% were associate professors, and 26.0% were full professors.

When asked about teaching responsibilities, 12.6% responded that their full-time duties were related to undergraduate instruction, 19.4% spent about three quarters of their workload teaching, 44.7% indicated that about half their workload involved undergraduate instruction, 18.4% indicated that a quarter of their workload involved instruction, and 4.9% indicated that they had no teaching responsibilities whatsoever. As for teaching load, 2.9% taught less than 3 credit hours per semester, 37.7% taught in the range of 3 to 6 credit hours per semester, 26.1% taught 6 to 9 credit hours per semester, 18.8% taught 9 to 12 credit hours per semester, 10.1% taught 12 to 15 credit hours per semester, and 2.9% taught more than 15 hours per semester, with 1.4% choosing not to respond. Most of the respondents taught classes consisting of 15–30 students (53.3%), with 50–100 students (21.3%), 30–50 students (17.3%), 100–150 students (5.3%), and 0–15 students (2.7%) being the next most common.

Research question 1

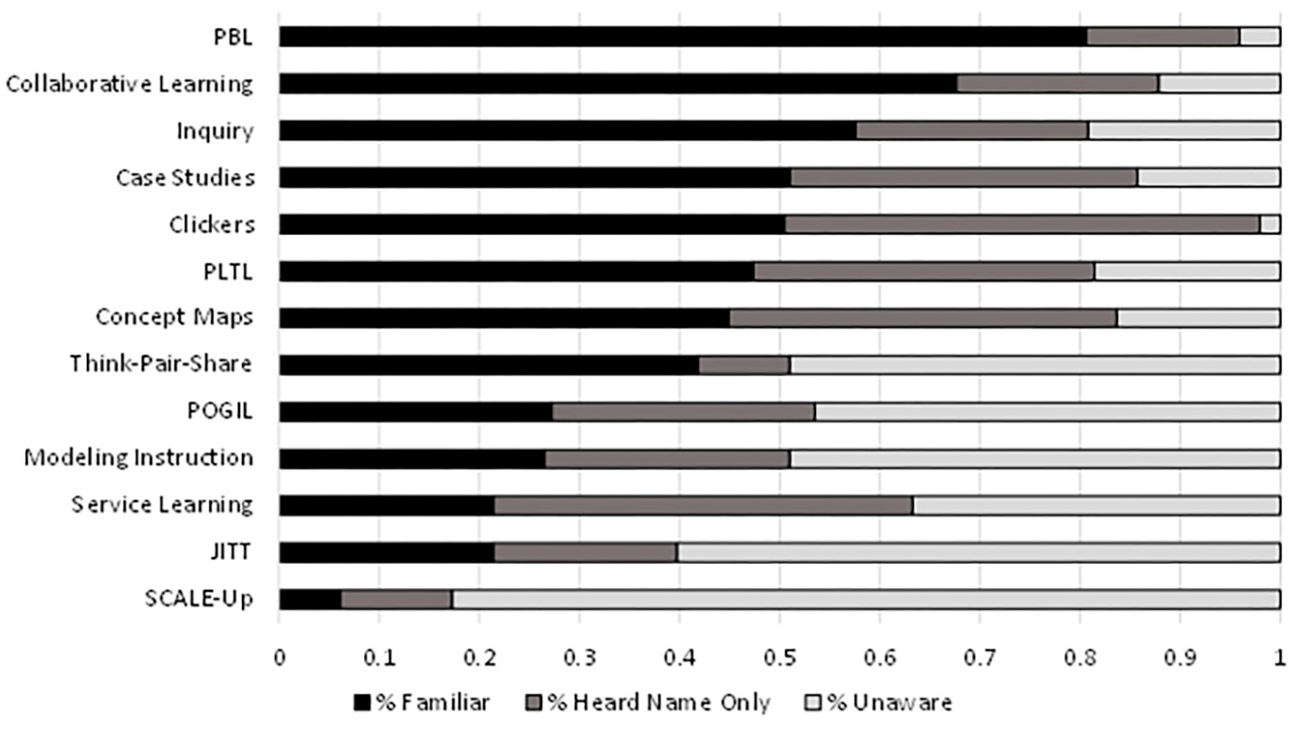

Figure 1 lists surveyed EBIPs as well as the reported awareness of each practice by responding faculty. The practices most familiar to the faculty sample are at the top of the figure (indicated by the black-shaded bars) and include Problem-Based Learning (PBL) (80.61%), collaborative learning (67.68%), and inquiry learning (57.58%). This included any faculty who selected any of the following responses: that they had heard of the practice but felt it wasn’t appropriate for their class (a response of 3), that they had heard of it and planned to use it (a response of 4), that they currently used it (a response of 5), or that they had used it before and discontinued use (a response of 6). The practices of which faculty were most often completely unaware (pale-gray bars) include Think-Pair-Share (48.98%), Modeling instruction (48.98%), Just in Time Teaching (JiTT) (60.20%), and Student-Centered Activities for Large Enrollment (SCALE)-Up classroom learning (82.65%). The gray bars indicate the percentage of faculty who had heard about a particular practice in name only but had never used it (a response of 2). The pale-gray bars indicate the percentage of faculty who were completely unaware of a particular instructional practice prior to taking the survey (a response of 1).

Reported percentage of awareness (% of total sample that was familiar, % of total sample that had heard name only, or % of total sample that was unaware) of EBIPs by all participating faculty.

Note. PBL = Problem-Based Learning, PLTL = Peer-Led Team Learning, POGIL = Process Oriented Guided Inquiry Learning, JITT = Just in Time Teaching, SCALE-Up = Student Centered Active Learning Environment. The black bars represent the percentage of the total sample who were familiar with the practice (a response of 3 through 6). Gray bars are the percentage of the total sample who had heard of the practice in name only (a response of 2). The pale-gray bars are the percentage of the total sample who were unaware of the practice (a response of 1).

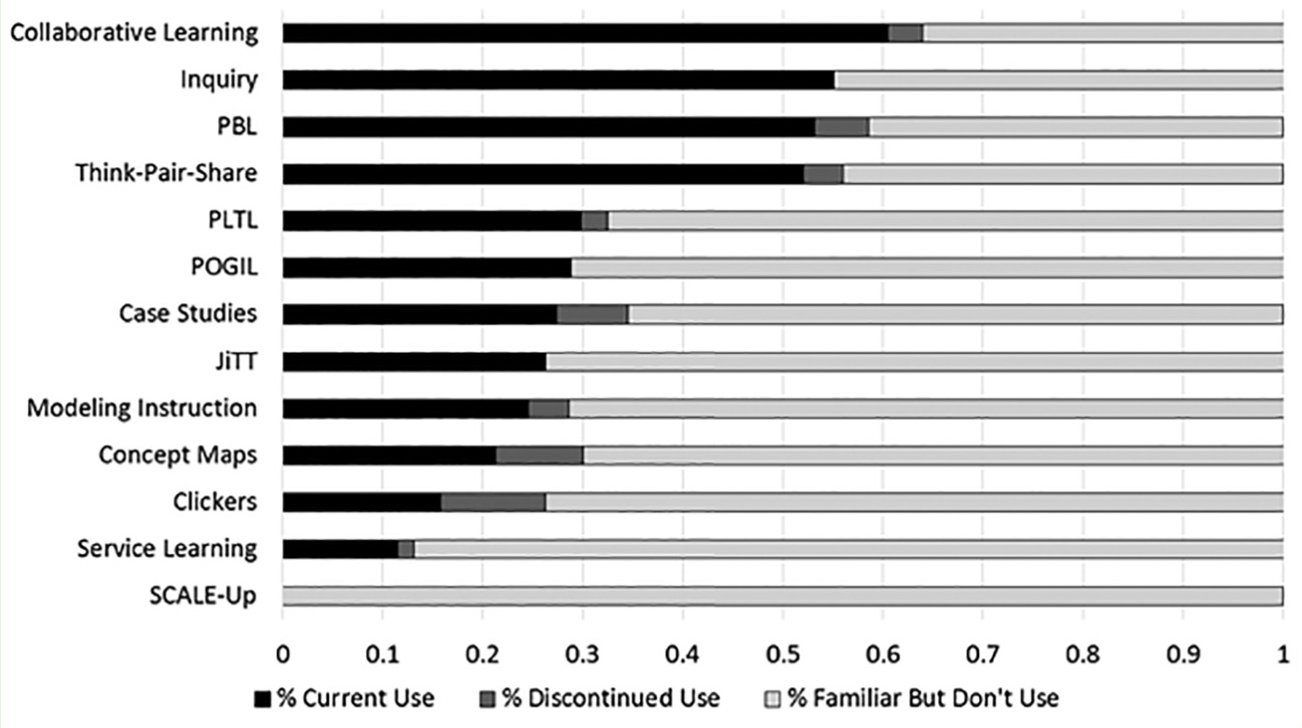

Figure 2 indicates respondents’ use of the same instructional practices as noted in Figure 1, but it excludes data from those faculty who responded that they were unaware of the particular practice (a response of 1). In this case, the practices most frequently in current use among respondents who had at least heard of them were collaborative learning (60.47%), inquiry instruction (55.12%), and Problem-Based Learning (53.19%). The practices that faculty were aware of but used least often were SCALE-Up classrooms (0.00%), service learning (11.47%), and handheld response devices (clickers; 15.79%). The dark-gray bars indicate the percentage of faculty who had tried a particular instructional practice but discontinued use, and the pale-gray bars indicate the percentage who were familiar with a practice but had not used it.

Reported percentage of use of EBIPs by participants with awareness of particular practices.

Note. PBL = Problem-Based Learning, PLTL = Peer-Led Team Learning, POGIL = Process Oriented Guided Inquiry Learning, JITT = Just in Time Teaching, SCALE-Up = Student Centered Active Learning Environment. The black bars represent the percentage of the aware sub-sample who currently use the practice. Dark-grey bars are the percentage of the aware sub-sample who discontinued use of the practice. The pale-grey bars are the percentage of the aware sub-sample who are familiar with the practice but have not used it.

Research question 2

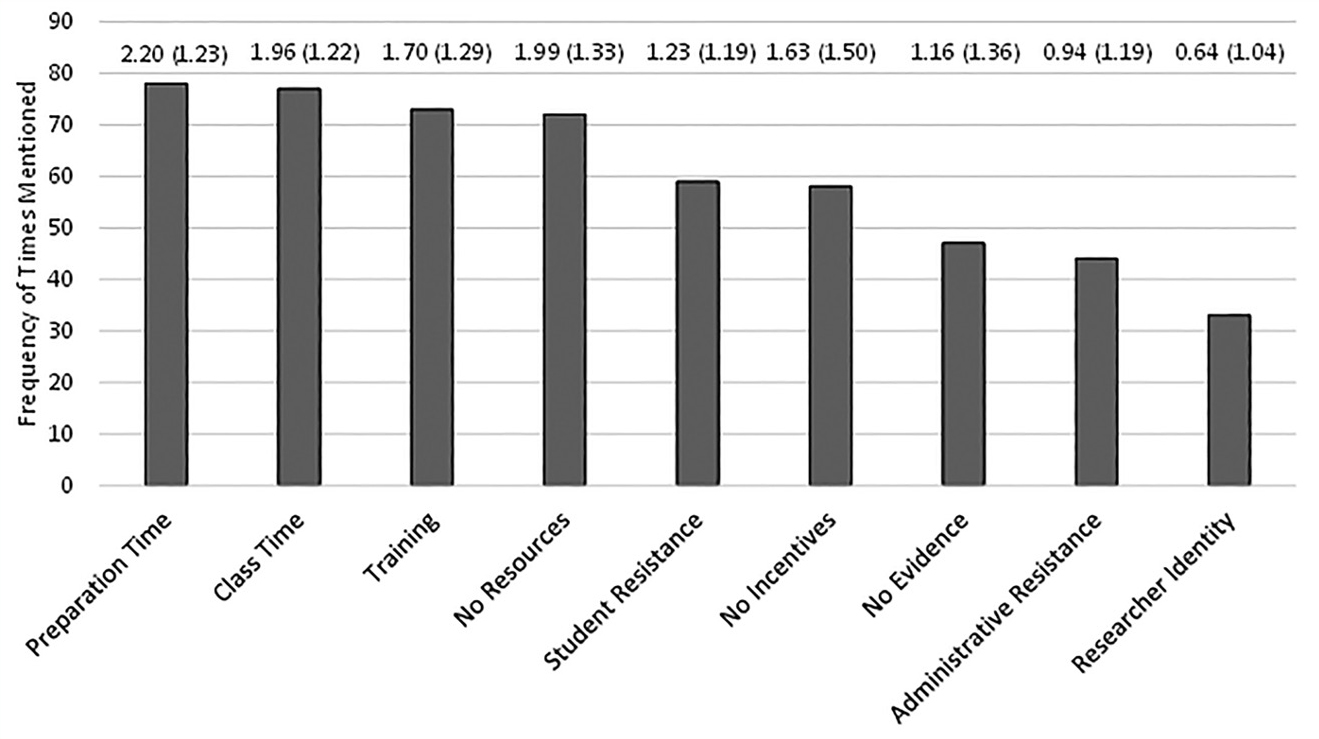

Figure 3 indicates how often particular implementation barriers were cited by respondents. The two most frequently cited barriers to implementation were related to perceived preparation time (75.00%) and perceived in-class time required to implement practices (74.04%). The least frequently cited barriers to implementation of EBIPs were lack of administrative support (42.31%) and prevalence of a researcher over teacher professional identity (31.73%). To give a collective sense of how these barriers were rated across the sample, means and standard deviations of the Likert ratings of each barrier are shown above the bars in Figure 3.

Frequency of times mentioned and Likert means and standard deviations for particular barriers to adoption of EBIPs cited by all participating faculty.

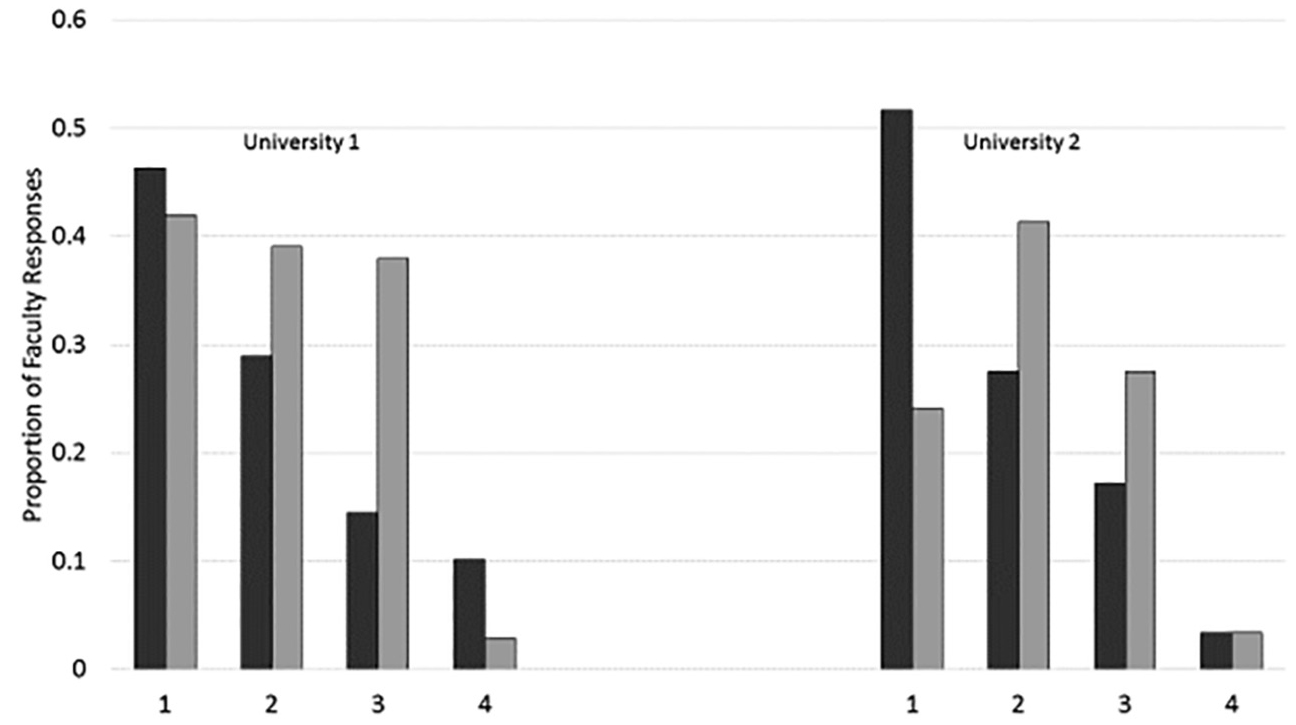

Participants perceived high levels of support and encouragement for use of EBIPs within their particular institutions (Figure 4). The two universities are largely alike in their DRU status, general regional location, and undergraduate enrollment demographics, but their respondents reported somewhat different levels of perceived support. At University 1, 46.37% of respondents perceived high levels of support at the departmental level and 42.03% perceived high levels of support at the college level. At University 2, 51.72% of the respondents perceived high levels of support at the departmental level, but only 24.14% perceived high levels of support at the college level. To determine if there were any differences in the frequency distributions of the selected responses between the two universities, a chi-square test of independence was used. Chi-square analyses indicated that the universities did not differ significantly by perceived support at the departmental [χ²(3) = 1.36, p = .175] or college level [χ²(3) = 3.28, p = .351].

Perceived levels of departmental and college support for EBIPs at the two sampled universities
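For readers unfamiliar with the chi-square test of independence used for the comparison in Figure 4, the computation can be sketched with the Python standard library. The counts below are hypothetical (the study's cell counts are not reported here), the function names are illustrative, and the p-value helper is a closed form valid only for 3 degrees of freedom, which is what a table of two universities by four support levels would give.

```python
import math


def chi_square_statistic(table):
    """Pearson chi-square statistic and degrees of freedom for an r x c table of counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count under independence of row and column variables.
            expected = row_totals[i] * col_totals[j] / grand_total
            statistic += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    return statistic, df


def p_value_df3(statistic):
    """Upper-tail probability of a chi-square variable with exactly 3 degrees of freedom."""
    return (math.erfc(math.sqrt(statistic / 2))
            + math.sqrt(2 * statistic / math.pi) * math.exp(-statistic / 2))


# Hypothetical counts: rows are the two universities, columns are four support levels.
counts = [
    [30, 20, 12, 7],
    [15, 8, 4, 2],
]
stat, df = chi_square_statistic(counts)
print(df)  # 3
print(round(stat, 2), round(p_value_df3(stat), 3))
```

A large p-value from such a test means the observed response distributions are compatible with the two universities sharing a single underlying distribution of perceived support.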

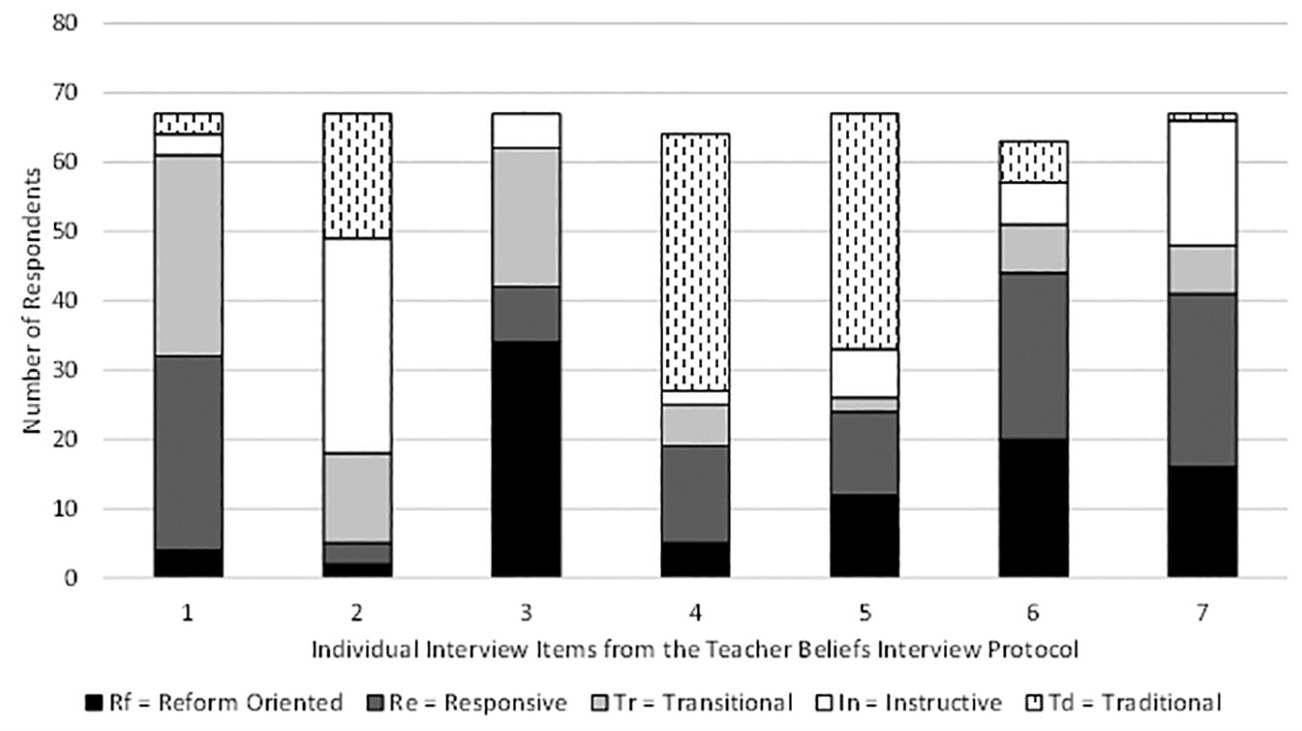

Figure 5 shows the frequency with which sample respondents’ answers to each Teacher Beliefs Interview (TBI) item (listed as 1 through 7 on the x-axis) aligned with a particular teacher beliefs code. Overall, participants had weighted reform-oriented belief scores ranging from 9.00 to 30.00 (mean = 21.64, SD = 4.52), with 7.35% reform-oriented, 41.2% responsive, 41.2% transitional, 8.2% instructional, and 0.0% traditional. The black and dark-gray bars indicate a more reform-oriented or responsive instructional belief as measured by the particular item. Items 1, 3, 6, and 7 relate to student learning in the classroom and qualitatively appear to have higher proportions of reform-oriented and responsive codes. Items 2, 4, and 5 relate to teacher knowledge and qualitatively appear to be more transitional or instructional in nature (Luft & Roehrig, 2007). A Teacher Beliefs Index was calculated by taking the weighted sum of the items, with a higher weight given to those responses more aligned with reform-oriented instruction.

Frequency of sample with particular teacher beliefs for each TBI item.

Goal orientation profiles for individual instructors were created using a two-step cluster analysis as described in Fortunato and Goldblatt (2006). The ratio of cluster sizes was 1.68, and the analysis indicated a “fair” cluster model resulting in two clusters (containing 37.3% and 62.7% of the sample, respectively). Cluster 1 had high performance avoidance (Likert mean = 4.67) and learning approach (Likert mean = 4.49) orientations and moderate performance approach orientations (Likert mean = 3.93). These results indicate that individuals in this cluster were more likely to seek out opportunities to learn about new instructional methods while avoiding judgment by their peers than those in the other cluster. Cluster 2 had moderate performance avoidance (Likert mean = 3.89) and learning approach (Likert mean = 3.83) orientations and low-moderate performance approach (Likert mean = 3.11) orientations. An analysis of variance (ANOVA) indicated statistically significant differences at the p < .0001 level for all three orientation subconstructs, adding validity to the cluster model.
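The reported ratio of cluster sizes follows directly from the two cluster shares. The short check below assumes the ratio is defined as the largest cluster divided by the smallest, which is a common convention in cluster-analysis output; the variable names are illustrative.

```python
# Cluster shares reported in the text, as percentages of the sample.
cluster_shares = {"Cluster 1": 37.3, "Cluster 2": 62.7}

# Ratio of the largest cluster to the smallest cluster (assumed definition).
size_ratio = max(cluster_shares.values()) / min(cluster_shares.values())
print(round(size_ratio, 2))  # 1.68
```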

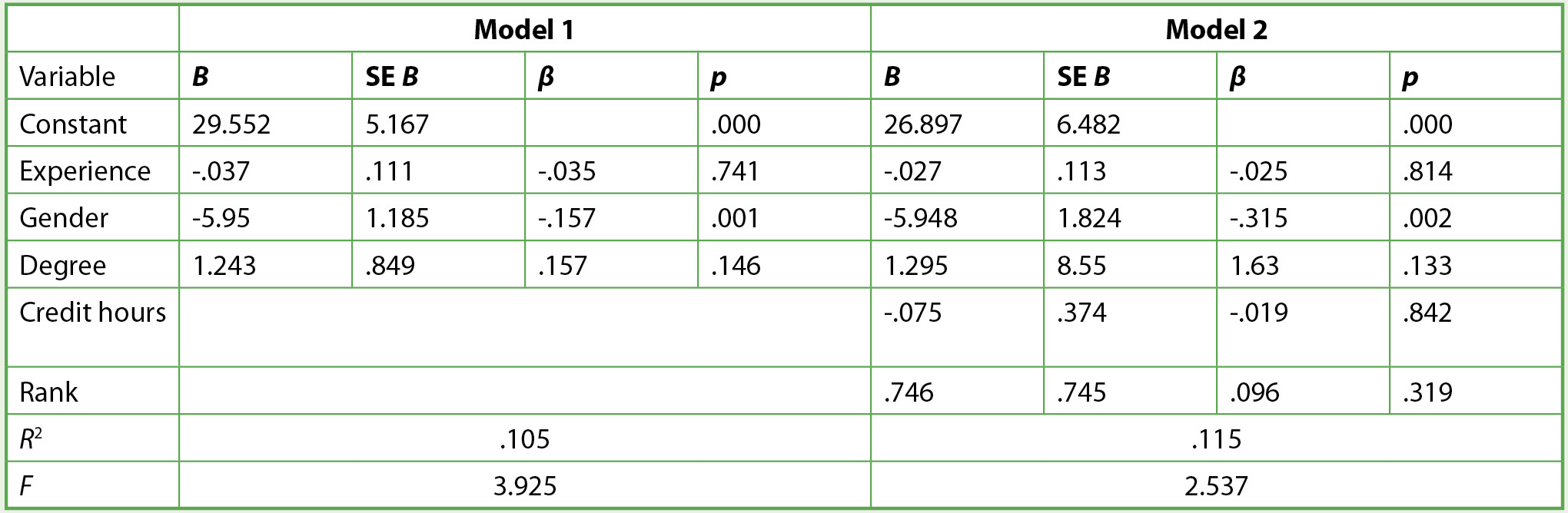

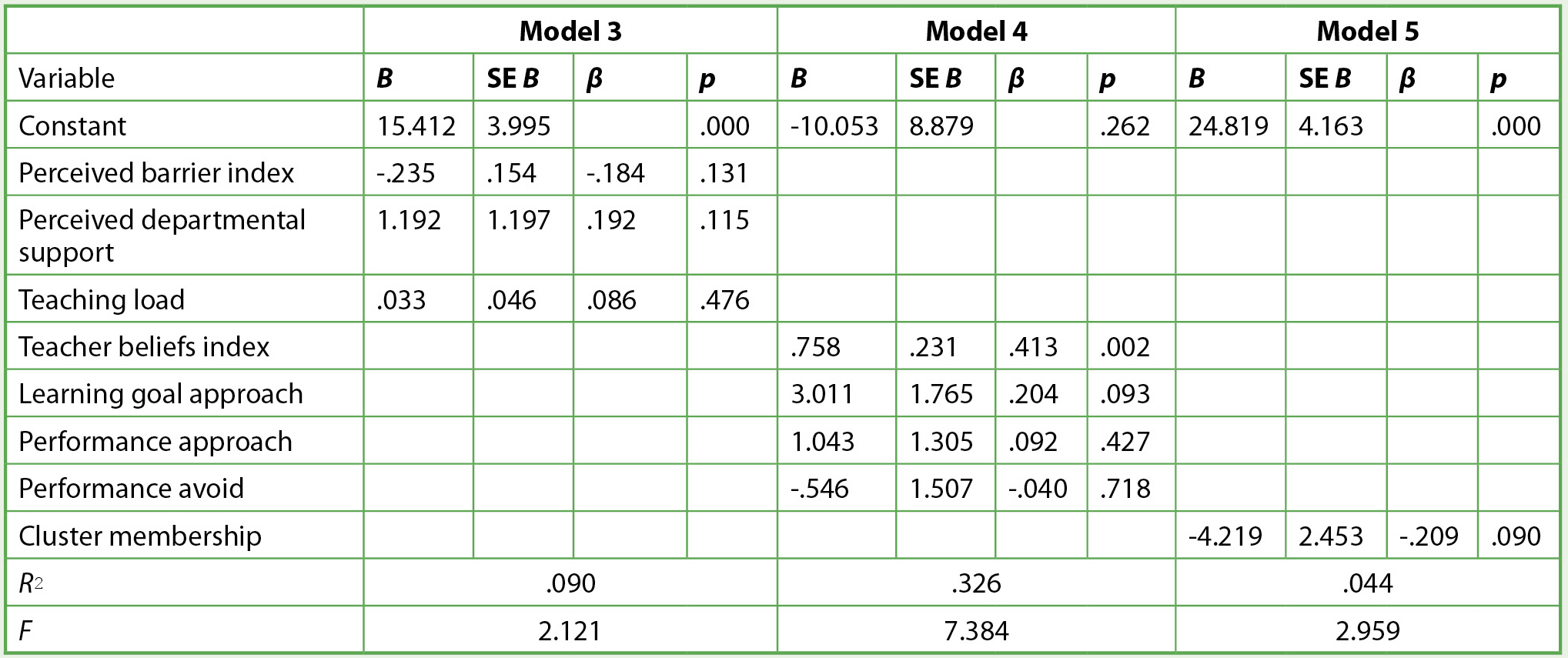

Research question 3

Correlation and multiple regression analyses were conducted to examine the relationship between engagement in active learning practices and various potential individual and situational barrier predictors (see Table 1 and Table 2). In regression models 3 and 5, there were no significant predictor variables. In models 1 and 2, gender was a significant predictor (β = -.157, p = .001; β = -.315, p = .002, respectively). This indicated that female instructors were more likely than male instructors to utilize EBIPs. In regression model 4, only the Teacher Beliefs Index was a significant predictor of engagement in active learning (β = .413, p = .002).

Regression models for demographic predictors.

Regression model for intrinsic and situational predictors.

Discussion and conclusion

The goal of this study was to explore STEM faculty’s situational and individual barriers to adoption of EBIPs at two institutions. We recognize that this population of STEM faculty may not be statistically representative of STEM faculty across the United States, but we believe this study provides guidelines toward understanding STEM faculty perspectives on barriers to use of EBIPs. This is the first study that we know of that has attempted a comprehensive use of regression models to better understand the predictive strength of situational and individual barriers on faculty use of EBIPs.

Our data examining STEM faculty align with previous studies that have collected awareness and use of EBIPs data (Cutler et al., 2012; Froyd et al., 2013). Awareness of many practices in our sample is high, with unsurprising variability in awareness depending on the strategy. STEM faculty seem to report awareness of broadly titled practices, such as collaborative learning, inquiry learning, and clickers, without being aware of more specific practices such as SCALE-Up, Just in Time Teaching (JiTT), or Process Oriented Guided Inquiry Learning (POGIL). In addition, in previous studies (Cutler et al., 2012; Froyd et al., 2013), awareness of a particular EBIP was highly correlated with use. Although we did not specifically calculate that relationship in this study, the data in Figures 1 and 2 suggest a similar relationship.

Unlike in previous studies, very few respondents reported that they had tried and discontinued using a particular strategy. Henderson and Dancy (2007) demonstrate that roughly a quarter of their sample (23%) had tried particular EBIPs and then discontinued use. Our sample largely either tried a particular strategy and sustained use or did not try it at all. It could be that this sample is not in the “innovators” or “early adopters” category of Rogers’s (1995) technology adoption curve.

Henderson and Dancy (2007) note that a sample of physics faculty most often blamed their inability to implement EBIPs on their situational characteristics. They propose that situational barriers are more powerful indicators of faculty use of EBIPs and ask, “Why are situational barriers there, and how can they be overcome?” Faculty in their sample seemed to have more evidence-based, student-centered views about teaching and learning, but these views did not translate to classroom practice. It is clear that many faculty in our data perceive numerous situational barriers to their effective implementation of EBIPs as well. However, recent studies suggest that perceived situational barriers rarely manifest as actual barriers. Practices such as lecture are predominant no matter what the situational setting (Stains et al., 2018). This indicates that the perceived barriers may be ephemeral and that individual characteristics are more predictive of EBIP use.

Perceived lack of time seems to be a particularly onerous barrier to implementation of these practices (Brownell & Tanner, 2012; Froyd et al., 2013). According to Froyd et al. (2013, p. 397), “The literature varies in its claims of how much class time various EBIPs will take, in part because this depends on how faculty members choose to implement them.” The perception and the reality of these barriers are not always in alignment. Future research as well as professional development efforts might focus on mediating faculty members’ perceptions of time required to implement EBIPs effectively and balance the costs and benefits associated with classroom improvement.

When examining individual barriers to implementation of EBIPs, respondents tend to hold epistemologies that are primarily responsive or transitional. The pattern that “student learning” teacher-beliefs items were more frequently coded as reform-oriented than “teacher knowledge” teacher-beliefs items may indicate that faculty want students to learn in an evidence-based way but do not have the pedagogical content knowledge (PCK) to implement such instruction (Norton et al., 2005), a PCK barrier.

The teacher beliefs indicator variable and gender were the only two factors that statistically predicted level of engagement with EBIPs in the regression model. What is clear from this regression model and our previous data is that the connections between beliefs and practices are complex (Gibbons et al., 2018; Kane et al., 2002; Stains et al., 2018). What is it about gender that helps predict whether a particular higher-education instructor will implement and sustain use of EBIPs? With issues of gender equity in STEM fields in particular, it is unclear why women would be more likely to engage in EBIPs considering both the real and perceived barriers related to these practices, compounded with the barriers women already face in the field.

With the growing evidence for use of EBIPs in STEM classrooms, understanding faculty awareness, use, and barriers (both real and perceived) to implementation of these practices is a key first step to dissemination of EBIPs to the wider higher education community. Although several studies do exist, more research is needed to understand the current landscape of the field in more detail. More in-depth qualitative data and large-scale predictive models in future studies are also recommended to gain a better understanding of how and why these evidence-based practices are or are not implemented more widely.

Grant E. Gardner (Grant.Gardner@mtsu.edu) is an associate professor in the Department of Biology and doctoral faculty in the Interdisciplinary Mathematics and Sciences Education Research PhD program at Middle Tennessee State University. Evelyn Brown is the director of extension research and development at North Carolina State University. Zach Grimes is an assistant professor of natural sciences at Crowley’s Ridge College. Gina Bishara is an undergraduate student in the Department of Biology at Middle Tennessee State University.
