Teacher to Teacher

Three-Dimensional Classroom Assessment Tasks

Science Scope—March 2019 (Volume 42, Issue 7)

By Susan German

Practical advice from veteran teachers

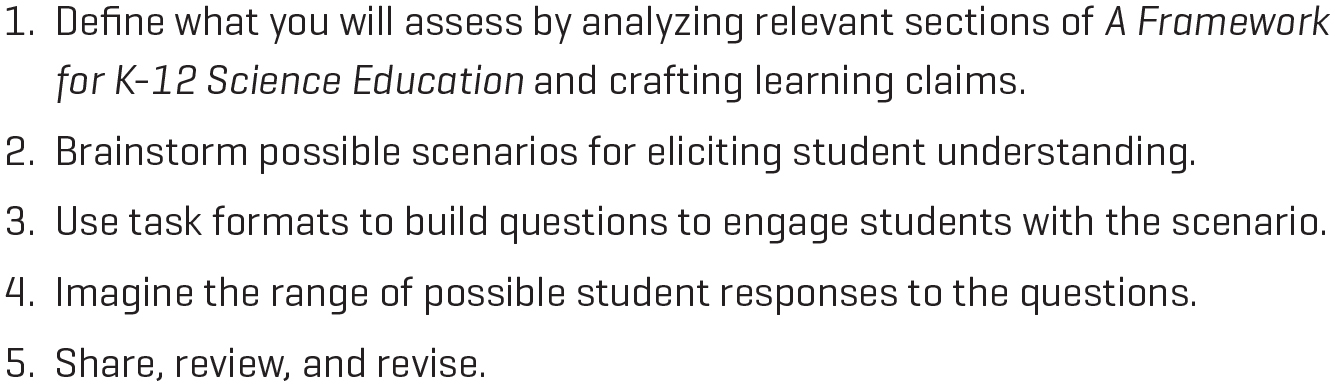

Assessment tasks present an opportunity for students to demonstrate their proficiency with disciplinary core ideas (DCIs), science and engineering practices (SEPs), and crosscutting concepts (CCs) in an integrated format (Penuel, Van Horne, and Bell 2016). For the purpose of this column, assessment tasks are defined as multicomponent assessments that use authentic contexts and incorporate multiple, related questions that allow students to create, perform, or produce something similar to what would be expected in a real-world situation. The website STEM Teaching Tools (see Resources) contains a series of tools, or Practice Briefs, that can assist classroom teachers in writing assessment tasks. I use the five steps outlined in STEM Teaching Tools Practice Brief 29 (see Figure 1) as a guide for getting started with the development of an assessment task.

Define the assessment

The first step of creating an assessment task is for the teacher to determine what students should be able to know and do related to the concepts being assessed. Practice Brief 29 suggests using A Framework for K–12 Science Education (NRC 2012) as the basis for developing a learning claim. A learning claim can be one or more Next Generation Science Standards performance expectations or a statement created by the teacher that incorporates the targeted DCI, SEP, or CC (Penuel et al. 2016). I find that once you understand the intent of the NGSS, you start thinking about the DCIs, SEPs, and CCs as being in three different cups. As a teacher, you make your own performance expectations and learning claims or targets using the NGSS dimensions from one or more of the cups.

Learning claim example

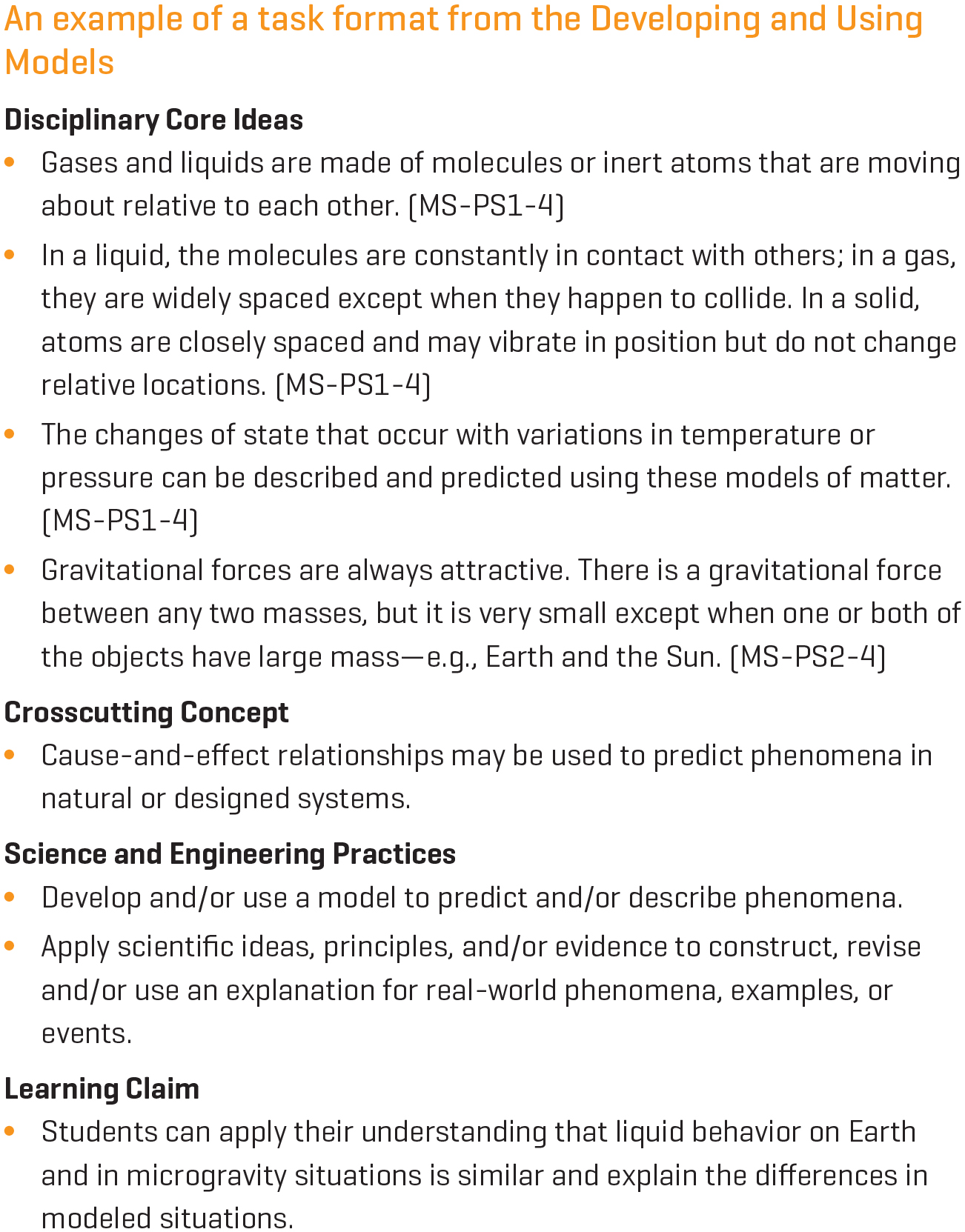

One lesson-level phenomenon my students worked to explain is why more drops of water fit on a penny than expected. While using the internet to research how to explain the phenomenon, students discovered a slow-motion video of a water balloon popping and the water within retaining the shape of the balloon briefly before collapsing. After a lively class discussion, we determined that the water holds the shape of the balloon briefly after the balloon is popped because polar molecules are attracted to one another. However, the balloon-shaped water mass quickly falls apart because of the force of gravity. When writing the learning claim, I included DCIs related to properties of matter and gravity (Figure 2). Note that the learning claim refers to the effects of gravity but ignores the role the mass of objects plays in the strength of the force. Figure 2 lists each of the three dimensions related to the task on the left-hand side and the learning claim created from the listed dimensions on the right-hand side.

Brainstorm possible scenarios

The next step is to brainstorm possible scenarios for eliciting student understanding. A reasonable scenario provides some information to students without answering any of the questions (Penuel, Van Horne, and Bell 2016). Because we included gravity in our discussion of why a penny can hold more drops of water than expected, I used a NASA video of astronaut S. Auñón-Chancellor demonstrating the behavior of liquids (lemonade) in microgravity environments (see sidebar, p. 31). In the video, she squeezes a drink pouch of lemonade to form a large bubble at the top of the straw. Then, she scoops the bubble of lemonade off the straw and onto her hand, showing how the lemonade sticks to her hand (see Resources for a link to the video). This scenario fits well with my learning claim because the International Space Station provides an authentic situation for students to observe the behavior of liquids in microgravity.

Using task formats

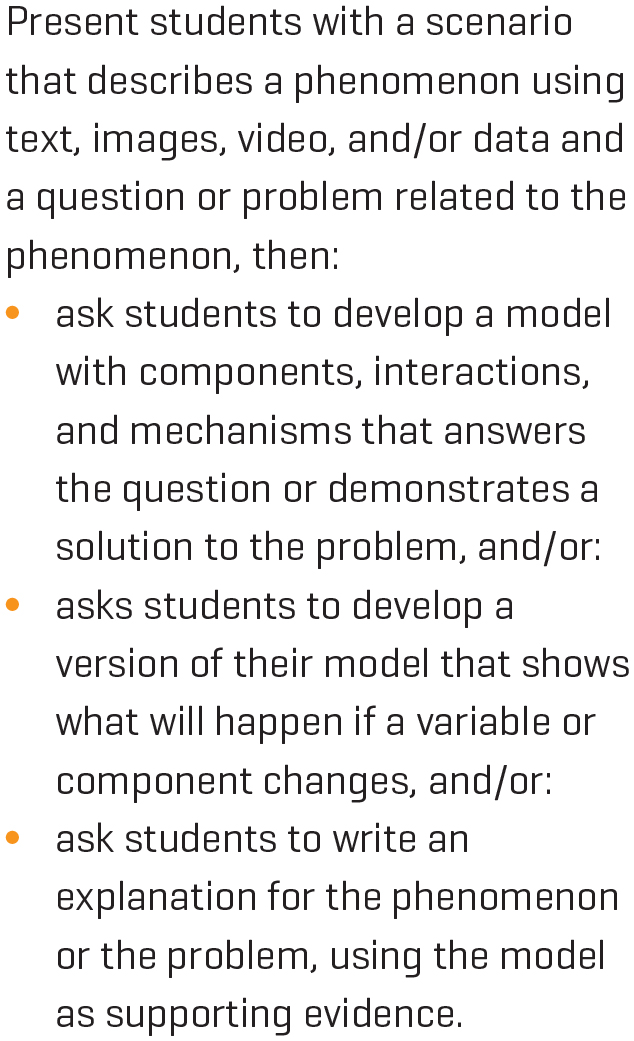

Next, I use Practice Brief 30 (Van Horne, Penuel, and Bell 2016), which lists potential task formats for each of the SEPs. STEM Practice Brief 30 suggests teachers first “present students with a scenario that describes a phenomenon using text, images, video, and/or data” then ask students to apply the identified SEP to the phenomenon. Figure 3 is one example of the numerous task formats available via Practice Brief 30.

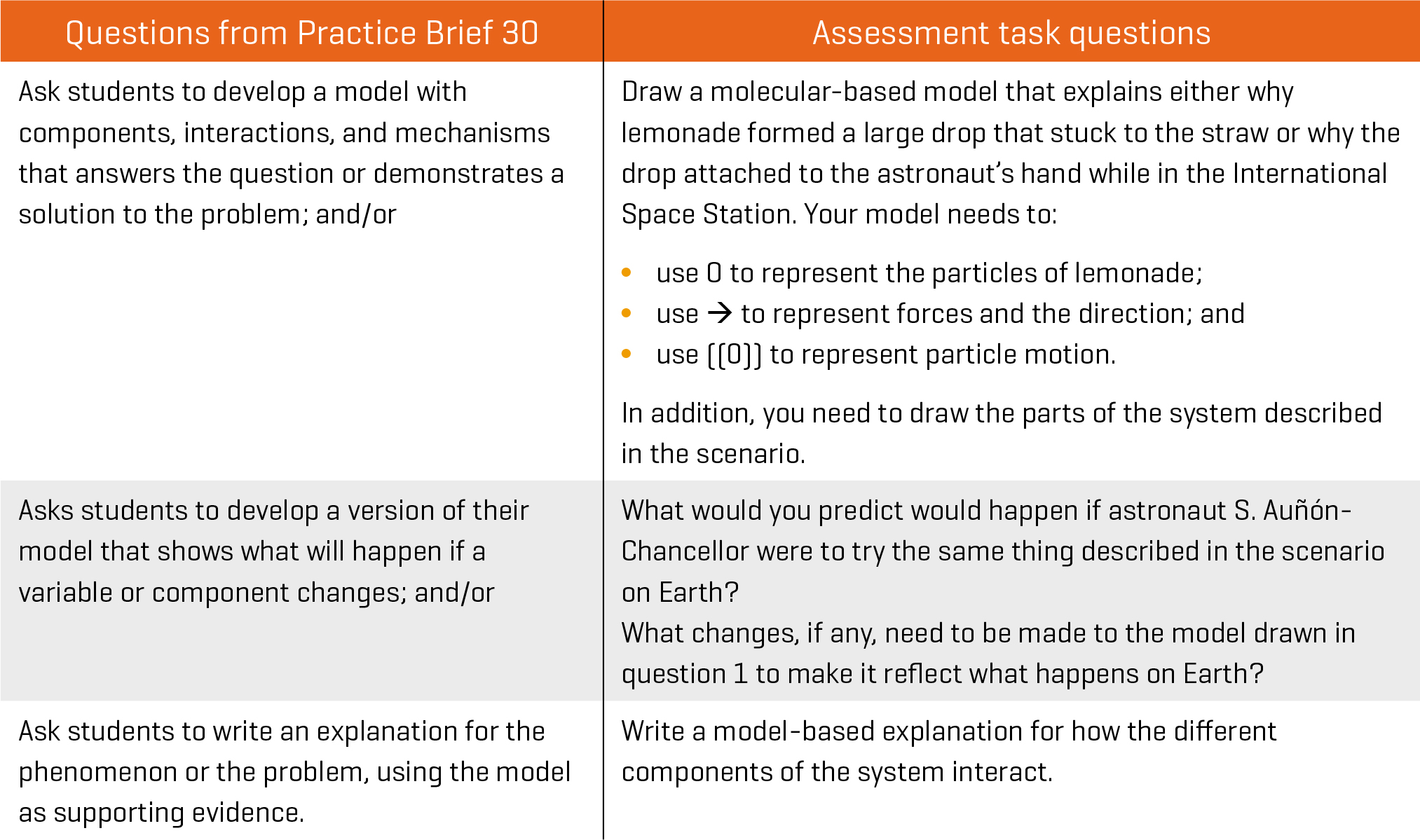

For our task, students refer to screenshots taken from astronaut S. Auñón-Chancellor’s video to develop a model explaining why lemonade behaved as it did on the International Space Station, then describe how things would be different if the same thing were tried on Earth. I included questions using the SEPs of Developing and Using Models and Constructing Explanations and Designing Solutions in my assessment task. Figure 4 provides a side-by-side example of the question provided by the task formats in Practice Brief 30 and my assessment task questions.

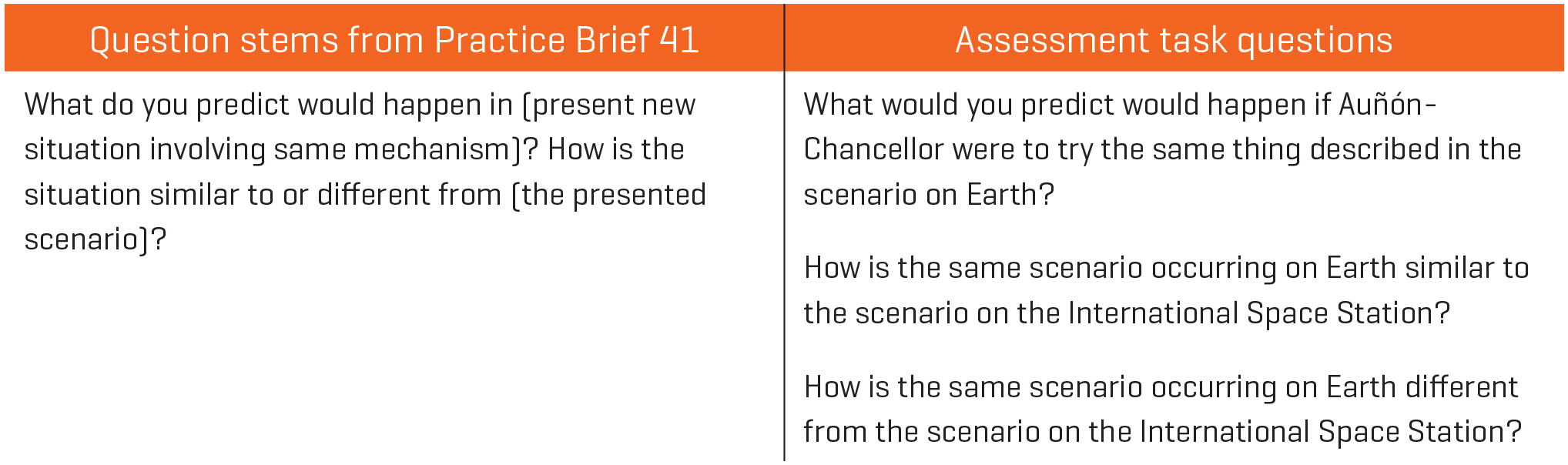

When writing an assessment task, I also borrow heavily from Practice Brief 41 (Penuel and Van Horne 2016), which contains question stems for the CCs. The question stem I selected asks for similarities and differences between situations. Figure 5 provides a side-by-side example of the question stem provided by Practice Brief 41 and my assessment task questions.

Imagine the range of possible student responses

Once you have written the assessment task, attempt to place yourself in the shoes of your students and write all the possible answers that students may come up with. When doing this, it is essential to consider your English Language Learners and students who have Individual Education Plans to ensure the questions make sense and the information provided in the scenario is sufficient (Penuel, Van Horne, and Bell 2016). An earlier version of my task asked students to draw a molecular-based model that explains why lemonade formed a large drop stuck to the straw and explain why the lemonade sticks to the astronaut’s hand while in the International Space Station. In the revised version of my task, students draw a molecular-based model for one of the explanations, eliminating the need to draw redundant models.

I thought that many of my students who struggle with science would overlook the effects of microgravity in their model because our classroom focus was on Earth-based situations. After giving the task to students and analyzing their answers, I found that approximately 75% of my students were missing any reference to gravity in their models, which indicated the need to revise the task. Ninety-three percent of my students, however, included gravity as one difference between the scenario occurring on Earth and what was happening on the International Space Station. These data indicate that students understand that the force of gravity differs between the two situations but do not include it in their models, which suggests a lack of practice in developing more sophisticated models as part of daily lessons.

Share, review, and revise

The final step of writing an assessment task is to share, review, and revise the assessment task. If possible, share questions with other educators for constructive feedback or pilot test items with small groups of students for response data to use in the revision process (Penuel, Van Horne, and Bell 2016). I incorporated the analysis of the response data into my revision of the task (see sidebar). To solve the problem of students omitting any reference to gravity in their model, I reorganized the list of questions to elicit student thinking regarding cause and effect before asking students to create a model. Additionally, I asked students to define the system as part of their model. This process made me realize that a student response or question will often prompt additional revision, which makes assessment writing a highly iterative process.
