idea bank

Three Steps to Making Assessment Simpler and More Relevant

Evaluating information is a science practice listed in the Next Generation Science Standards (science and engineering practice #8), but did you know it’s also a practice in the revised science curricula in provinces like British Columbia and Ontario? In a society where there are concerns over fake news and fringe theories, we can see why teaching students how to evaluate information effectively is so important. But how do we assess their ability to do it, especially on a science test? Evaluating information requires that students analyze information presented to them, determine the validity and accuracy of that information, and then generate a response to it. Multiple-choice test questions aren’t useful in assessing this practice because teachers don’t see what students are thinking when choosing a multiple-choice response. For all we know, students may simply be guessing at random. Written-response questions are better, but even then, what are we looking for in a poor, fair, or good response?

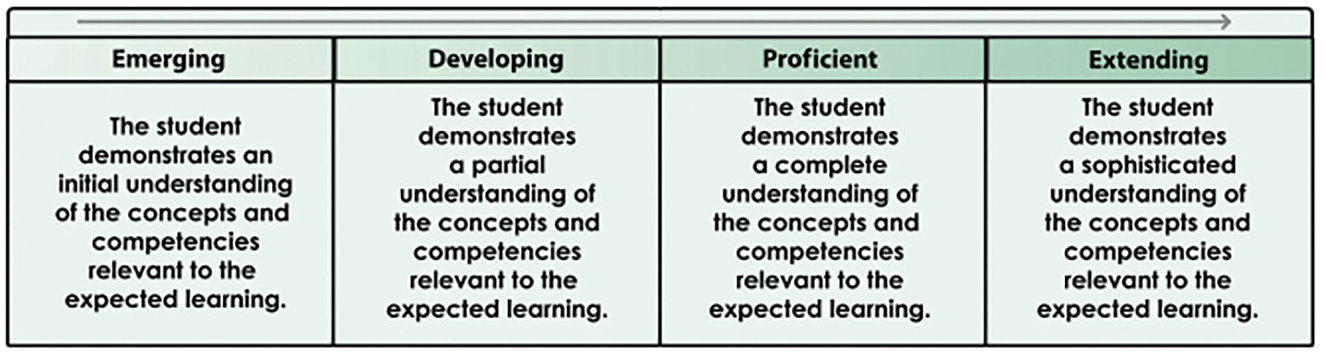

Teacher education programs suggest using marking rubrics to solve this problem. A rubric recently published on our school report card shows four increasing levels of proficiency (see Figure 1). On this rubric, a student at the entry (i.e., emerging) level of proficiency demonstrates an “initial” understanding of how to evaluate information, while a student at the highest (i.e., extending) level demonstrates a “sophisticated” understanding. However, even with this language, what does “initial” or “sophisticated” look like when it comes to evaluating information? Neither descriptor is very specific. It is easy to see why assessing this skill is so difficult and why many of our colleagues struggle with it.

Figure 1. Increasing levels of proficiency.

This past year, I dove into solving this problem with my students, and I received some positive results.

Teaching background

I teach in a public school in British Columbia where science teachers must assess students on their science practices (known as competencies in my province) using standards-based assessment. In my classroom, quizzes and assignments out of 5, 10, or 15 marks have been replaced by those that assess a student’s proficiency in a skill as being emerging, developing, proficient, or extending.

This past year, I focused on finding ways to assess science practices like evaluating information on formal science tests. First, I avoided using test banks and, instead, generated my own questions. Yes, there was much trial and error in phrasing the questions so that I was assessing students on how to evaluate information and not something else. And there were many times when the responses students gave were far different from what I was expecting. But, in the end, I found three strategies that consistently helped me assess students’ ability to evaluate information.

Strategy 1: Give students an opinion, model, or hypothesis to evaluate

When I first started assessing students on evaluating information, they often did not understand what it meant to “evaluate information.” Students often asked, “What am I evaluating? And, what do I have to write down when I’m evaluating it?” I realized that students needed me to help them break down the evaluation process into smaller, manageable parts. I also realized that the easiest way to do that was to make it relevant to them.

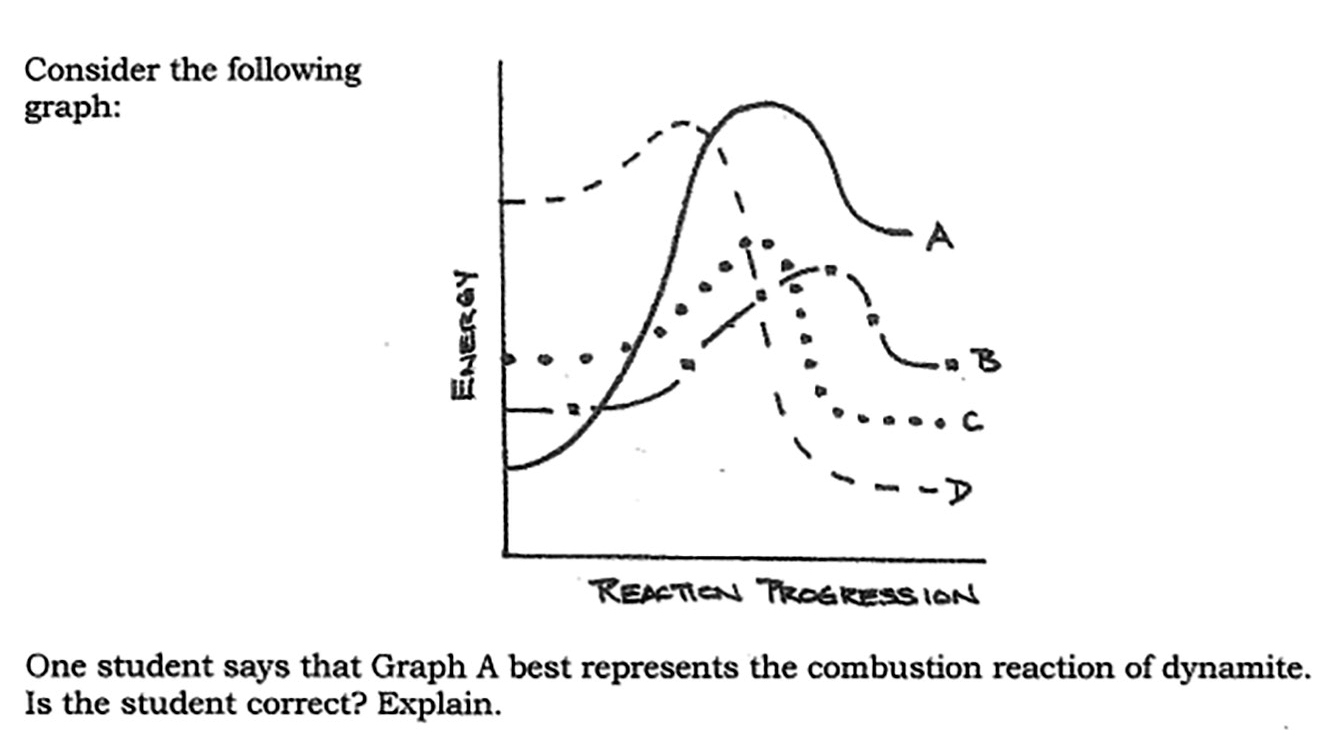

Today, I do this by giving students an opinion or hypothesis and asking them whether they personally agree with it, and why or why not. For example, a question on my science quiz on Endothermic vs. Exothermic Reactions (see Figure 2) shows students a graph with four energy diagrams followed by an opinion from a student. The student’s opinion is that graph A is the energy diagram for the combustion of dynamite. Students are then asked, “Do you agree with the student’s opinion? Provide a scientific reason as to why or why not?”

Figure 2. Detail from a question on a science quiz on endothermic vs. exothermic reactions.

Instantly, this gives students some background knowledge to draw from (i.e., dynamite explodes and gives off heat), something tangible to evaluate (i.e., a student’s opinion as it relates to a diagram), and a structure to their response (i.e., First, do you agree? Then, why or why not?). This makes evaluating information a little bit more manageable for students. This is a good start, but students still need to know the specific parts that make up an excellent response.

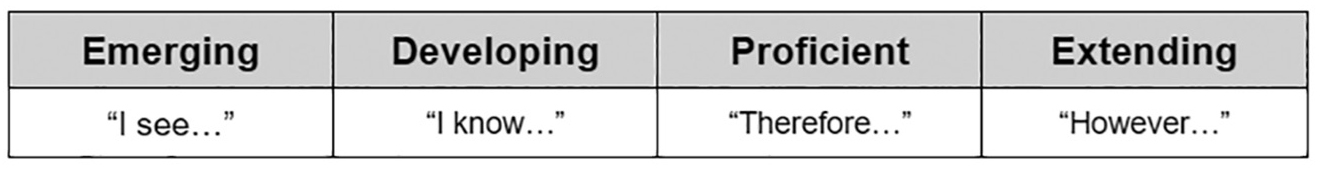

Strategy 2: Tell students the four prompts that make up an excellent response

Students also struggled to understand what made up an excellent response when evaluating information. After spending hours marking responses and collaborating with colleagues, I realized that many teachers look for the same things in a strong response. They want a student to

- identify what they saw in the information presented,

- connect it to what they have learned,

- determine whether it agrees with what they know, and

- consider alternatives.

Today, instead of saying all this to my students, I offer four prompts to follow: (1) I see, (2) I know, (3) Therefore, and (4) However. Whether a student’s answer includes all or only some of these prompts helps me determine that student’s level of proficiency (see Figure 3). For example, when answering the question on the Endothermic vs. Exothermic quiz, one student wrote, “No, the student is not correct because graph A represents an endothermic reaction where energy is absorbed from the environment which results in a positive change of heat. Graph B would also be incorrect … [for the same reason]. While both graphs C and D are exothermic reactions where energy is released into the environment, the combustion reactions of dynamite would be graph D because there’s a greater change in heat in graph D than C.” Although the student did not use the prompts in order, the answer has all the elements that make up an excellent response. This student started with a conclusion (“therefore”) and then wrote what they saw in graph A, tied it back to what they knew about the combustion of dynamite, and then considered the other graphs. This was an excellent, or extending, response.

Figure 3. Student prompts.

Today, for most of my quizzes, I include the prompts so that students don’t need to focus on the wording of their response and can focus on the content instead. It also makes marking student responses much easier because the expectations are clearly laid out for students.

Strategy 3: Have students practice

Today, when teachers look at my tests, many ask, “How do your students know to give the response you’re looking for?” To which I respond, “We practice beforehand, a lot!” During each unit and before each test, I give students scenarios and opinions to practice on with their lab partners. For example, in the lead-up to the chemical reactions quiz, my students spent a couple of classes answering two practice questions using the evaluation process: one in which students evaluated which method of cooking cooked an egg the fastest, and another in which students evaluated whether heating a lemon before juicing releases more juice. For each question, I gave students the four points I was looking for, and I collected their responses so I could review them. We even spent time going over their responses as a class. This practice gets students comfortable with evaluating information. It also gives them the confidence to do it regardless of the question or context because they now have definite steps they can follow in the evaluation process.

In conclusion, evaluating information, like all skills, can be practiced to get a better result. And, as with all skills, students and teachers inherently know what makes up a good performance or a poor one. If we can communicate what makes a good response and provide relevant and meaningful ways for students to practice generating good responses, then students will be evaluating information at an extending level in no time.

Kent Lui (realsciencechallenge@gmail.com) is the Science Department Head at Burnaby South Secondary School, Burnaby, British Columbia, and founder of REAL Science Challenge. Kent is passionate about teaching and assessing STEAM and SEPs in more meaningful ways. Kent shares his work and the many strategies and resources he’s developed at realsciencechallenge.com.
