
Comparing Student Performance and Satisfaction Between Face-to-Face and Online Education of a Science Course in a Liberal Arts University

A Quasi-Experiment With Course Delivery Mode Fully Manipulated

Journal of College Science Teaching—November/December 2021 (Volume 51, Issue 2)

By Hongyan Geng and Mark McGinley

This study presents a quasi-experiment to assess differences in student performance and satisfaction between two different delivery modes—online and face-to-face education. We collected data from 747 (373 face-to-face cohort, 374 online cohort) students enrolled in a general education science course at a liberal arts university. There was no self-selection of delivery mode by students, since this course is required, and delivery mode of one of the cohorts changed to online education due to the outbreak of the COVID-19 pandemic. We compare the learning outcomes of the two major course assessments (midterm test and research project) and student perception between the two delivery modes using quantitative and qualitative analyses. There was no statistical difference in the student scores on the development of medium-order analytical skills (i.e., midterm test) between the two delivery modes. However, online students scored statistically higher on the development of high-order analytical skills (i.e., research project), but they scored statistically lower on measures of student satisfaction. Our study suggests that online education, although currently unfavored by students, is equally or more effective in the achievement of the learning outcomes than face-to-face education.

Online education is a process in which students and teachers communicate and interact with course content via internet-based learning technologies (Curran, 2008). This education mode was originally embraced by higher education institutions as an opportunity to meet the diverse needs of students (Taormino, 2010; Gornitsky, 2011; Baran et al., 2013). Traditionally, students who take online courses have work and family obligations and live off-campus (Bergstrand & Savage, 2013; Parcel et al., 2018). However, recent studies have found a change in this trend, with students preferring to take online courses while living on campus to optimize their school schedules (Parcel et al., 2018).

Numerous studies have been conducted comparing online and face-to-face (F2F) education. Some studies criticize online education since it leads to less effective learning (Bergstrand & Savage, 2013; Xu & Jaggars, 2013, 2014), requires more study time due to the lack of physical teaching and learning interactions (McClendon et al., 2017; Parcel et al., 2018), and reduces the amount of quality teacher-student time and may lead to more frustration and less motivation (McClendon et al., 2017). However, other studies have identified strengths, such as that it provides flexibility and accommodates diverse learning styles (Logan et al., 2002; Zhang et al., 2004; Parcel et al., 2018), achieves student-centered learning (Logan et al., 2002; Parcel et al., 2018), and produces better learning outcomes (Sitzmann et al., 2006; Parcel et al., 2018). The advantages and disadvantages of online education have also been examined for introductory science courses (Ritter, 2012; Smith, 2017; Law et al., 2020).

Previous studies have provided tremendous insight into online education, but the generalizability of the findings is limited for two reasons. First, many previous studies do not provide adequate details regarding the course or instructor practices (Kaupp, 2012). Without proper details, it is unclear whether these practices influence the results. Second, these studies are unable to control for students’ self-selection of delivery mode. This is a vital issue because certain types of students may tend to choose one delivery mode over the other, making it impossible to know whether differences in learning outcomes are a result of delivery mode or self-selection.

In this study, we compare student performance and satisfaction between F2F and online education using quantitative and qualitative analyses. We focus on a general education science course at a liberal arts university. Due to COVID-19, we had the unique opportunity to compare two cohorts of the same class while controlling for self-selection. There was no self-selection because this course is a graduation requirement and students (and teachers) did not know the course would be taught online. We build on previous studies by providing adequate details on the course and, to our knowledge, being the first of such studies to fully manipulate the course delivery mode.

Background

Context of Lingnan University

Lingnan University (LU), the only liberal arts university in Hong Kong, offers undergraduate, taught postgraduate, and research postgraduate programs in the areas of arts, business, and social sciences. There are no science majors, which distinguishes LU from other liberal arts universities. The university has received recognition in two international university ranking exercises: Top 10 Liberal Arts College in Asia by Forbes in 2015 and second worldwide for quality education in the Times Higher Education University Impact Rankings 2020 (Forbes, 2015; Times Higher Education, 2020). LU is characterized by a fully residential campus and close faculty-student relationships. The current total undergraduate enrollment is 2,641, with 20% being international exchange students and a majority of the students being female (64%). LU employs 210 academic staff, with 72% of faculty members having an international education background.

About the course

LU has four common core courses (courses that all students must take to graduate), which are designed to provide undergraduate students with a broad and balanced foundation, irrespective of their major. One of these core courses, the Process of Science, provides students with fundamental knowledge for developing analytical and critical thinking abilities and making scientifically informed judgments.

The course hosts around 400 students each semester. Each week, students attend two 1-hour lectures and one 1-hour tutorial. The lecture, delivered in a big-lecture setting (all students in one lecture hall), develops students’ understanding of how science works and the role of science in the world and introduces today’s great challenges in science and technology. The tutorial, aligned with the lecture, allows students to discuss scientific issues in small-class settings (maximum of 20 students) and provides the opportunity to conduct an independent research project.

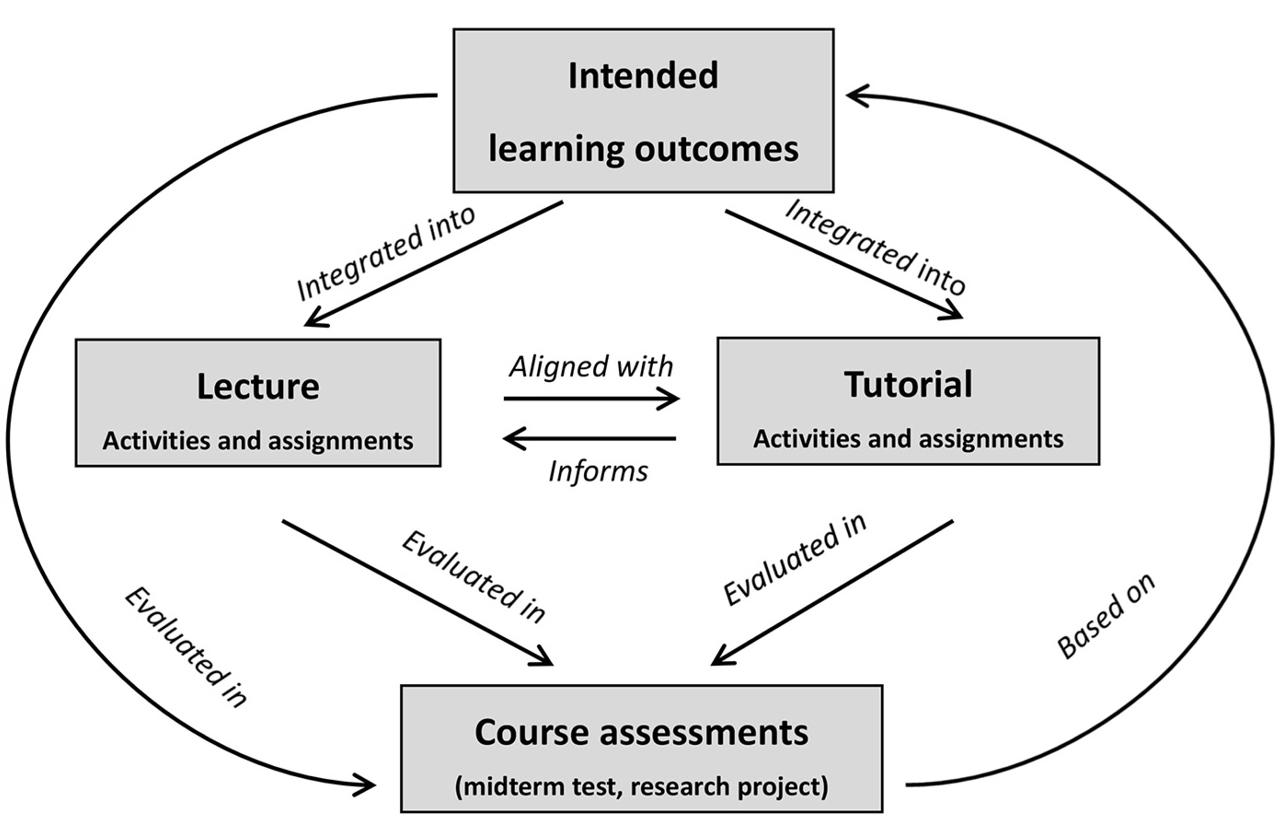

Figure 1 illustrates the organization of the course. At the start of the course for both cohorts, we reviewed the syllabus with the students, clearly communicating the goals, objectives, policies, requirements, and structure of the course. In particular, the intended learning outcomes were discussed in detail with the students. Both the lecture and the tutorial assignments and activities were designed based on the intended learning outcomes. Tutorial assignments and activities, designed to inform the lecture assignments and activities, followed up on the lectures to further reinforce the intended learning outcomes. For example, thanks to the small classroom setting, tutorials helped instructors identify learning blind spots, which were then re-explained in the following lecture sessions. Guided by the intended learning outcomes, the lecture and tutorial assignments and activities contributed to the major assessments (i.e., midterm test and research project). Finally, we developed the grading rubrics based on the intended learning outcomes.

Figure 1. Organization of the course.

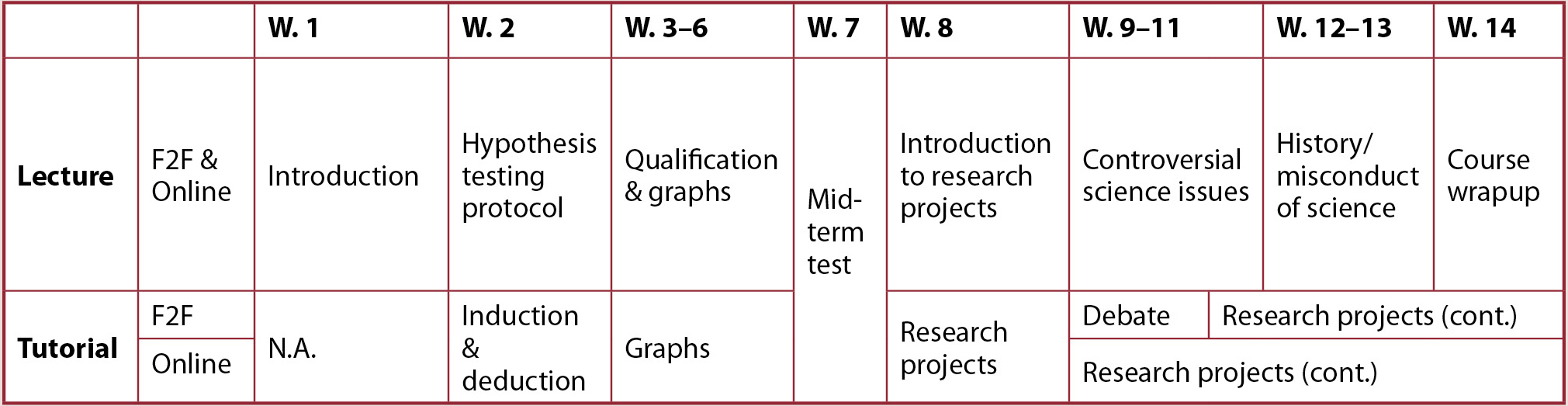

Course delivery: F2F in fall 2019 and online in spring 2020

In fall 2019, the course hosted 373 students and was delivered mostly (>80%) F2F. In the last 2 weeks of fall 2019, the delivery mode switched to online due to social unrest in Hong Kong. We consider the contribution of online education in fall 2019 to be minor for two reasons. First, the lecture topics covered online in the last 2 weeks were the history of science and misconduct of science (Table 1). Both topics involve straightforward material, so it is reasonable to assume students could easily learn the material by themselves. Second, the online tutorial sessions mainly continued the research project (Table 1) and focused on helping students finish their research projects. In this study, we consider the teaching in fall 2019 to have been done via F2F delivery mode and refer to it as the F2F cohort.

Table 1. Timeline of the course (F2F = face-to-face; W. = week).

In spring 2020, the course hosted 374 students. The course started with 1 week of F2F classes, which were used to introduce the course and review the syllabus. Due to the outbreak of COVID-19, all teaching activities were shifted to online delivery mode (Table 1). In this study, we consider the teaching in spring 2020 to have been done via online delivery mode and refer to it as the online cohort.

Study design

We employ the two major course assessments (i.e., midterm test and research project) to measure students’ achievement in the course because these tasks were carefully designed to assess student learning, with specific grading rubrics to maintain objective, consistent grading. The other two course assessments (i.e., lecture and tutorial assignments) are formative assessments based directly on the lecture and tutorial notes and cannot effectively test student learning. We use the Course Teaching and Learning Evaluation (CTLE) to evaluate students’ satisfaction with the course.

Midterm test

The midterm test for both semesters was an open-book, take-home exam consisting of seven open-ended questions. These questions required students to understand and apply the theories they learned in lectures and tutorials. To maintain a comparable level of difficulty across semesters, we designed the test using the same types of questions but with different data sets. A standard rubric was used to grade the questions, and the maximum score on the midterm test was 30.

Research project

The research project allowed students to explore a topic of their own interest by either using existing data sets or collecting data with low-tech approaches. First, students chose a science question that interested them. Then, students designed an experiment and collected and analyzed data. The project culminated in a written research report, which was graded using a standard rubric. The maximum score on the research project was 30.

CTLE

We used the university-administered course survey, CTLE, to collect data on students’ perceptions. The CTLE is conducted online at the end of each semester and asks questions about the quality of the teaching and the course. There are 20 questions graded on a 6-point Likert scale, followed by one open-ended question. All responses were anonymous and confidential, and students took approximately 10 minutes to answer all questions.

Since students were used to F2F education and inexperienced with online education, we hypothesized that students’ achievement would be statistically better in the F2F cohort than in the online cohort and that students would be more satisfied with the learning experience in the F2F cohort. By comparing student performance on the midterm test and research project and students’ perceptions from the CTLE, this study aims to answer the following questions:

- Is student achievement statistically better in the F2F cohort than in the online cohort?

- Is student satisfaction statistically better in the F2F cohort than in the online cohort?

Data collection

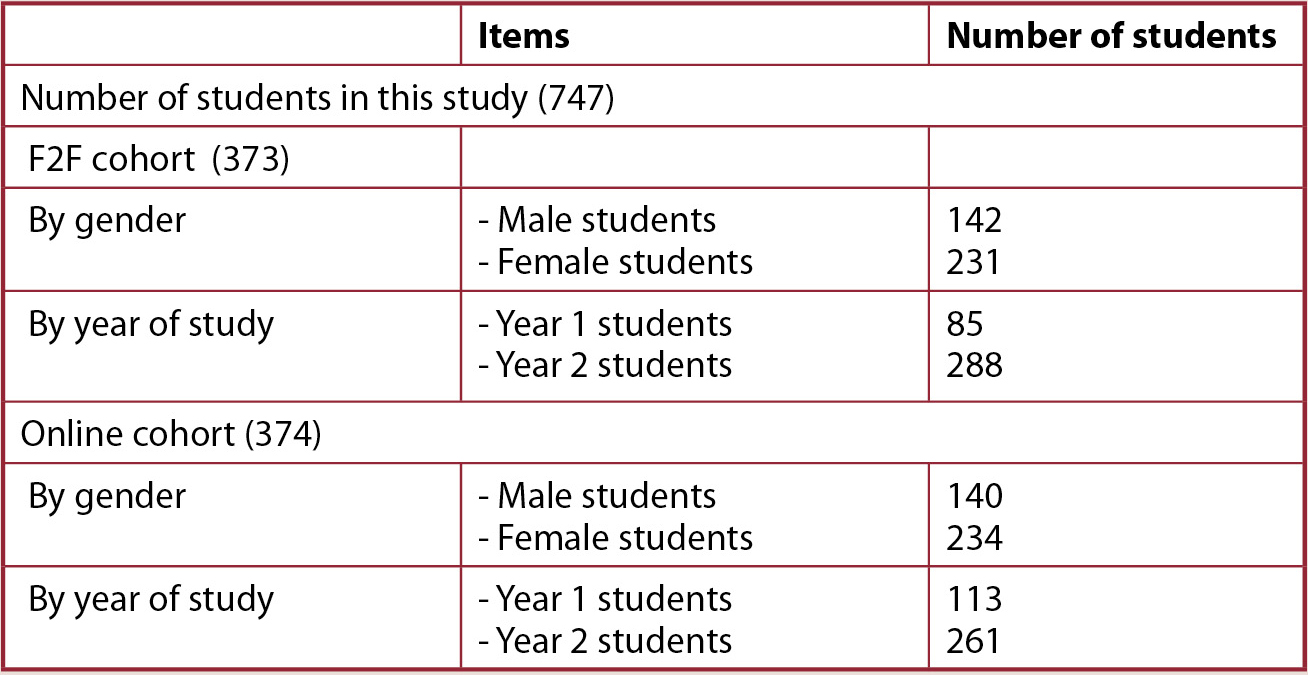

Enrollment was 380 students in the F2F cohort and 385 in the online cohort. Because the majority of students were Year 1 and Year 2 students (98% and 97% for the F2F and online cohorts, respectively), we excluded the senior students (7 and 11 students for F2F and online, respectively) to remove any potential influence of experience with studying at the university. This left 373 students in the F2F cohort and 374 in the online cohort. Demographics of students in this study are included in Table 2.
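The cohort sizes used in the analysis follow directly from the enrollment and exclusion counts reported here. The short Python sketch below only redoes that arithmetic; the variable names are illustrative and not taken from the study.

```python
# Enrollment and exclusion counts as reported in the text.
enrolled = {"F2F": 380, "online": 385}
excluded_seniors = {"F2F": 7, "online": 11}

# Analysis sample = enrolled students minus the excluded senior students.
analyzed = {cohort: enrolled[cohort] - excluded_seniors[cohort] for cohort in enrolled}

# Share of Year 1 and Year 2 students among all enrolled students, in percent.
share_year_1_2 = {cohort: 100 * analyzed[cohort] / enrolled[cohort] for cohort in enrolled}

for cohort in enrolled:
    print(f"{cohort}: n = {analyzed[cohort]}, Year 1-2 share = {share_year_1_2[cohort]:.1f}%")
```

The resulting sample sizes (373 and 374) and rounded shares (98% and 97%) match the figures given in the text.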

Table 2. Demographics of students in this study (F2F = face-to-face).

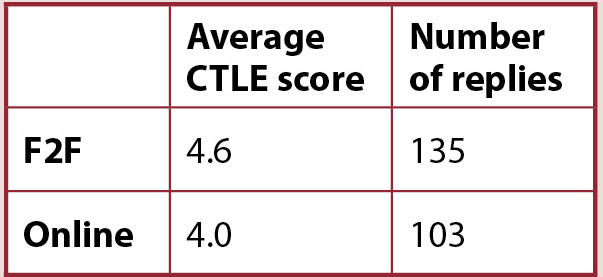

We received 135 and 103 replies on the 6-point Likert-scale questions of CTLE for the F2F and online cohorts, respectively. Among them, 69 and 58 students shared their opinions and comments on this course in the open-ended question of CTLE. We calculated the average scores of the 135 (for F2F) and 103 (for online) replies for the Likert-scale questions to estimate student satisfaction and categorized the 69 (for F2F) and 58 (for online) replies to the open-ended question to understand student perceptions.

Results

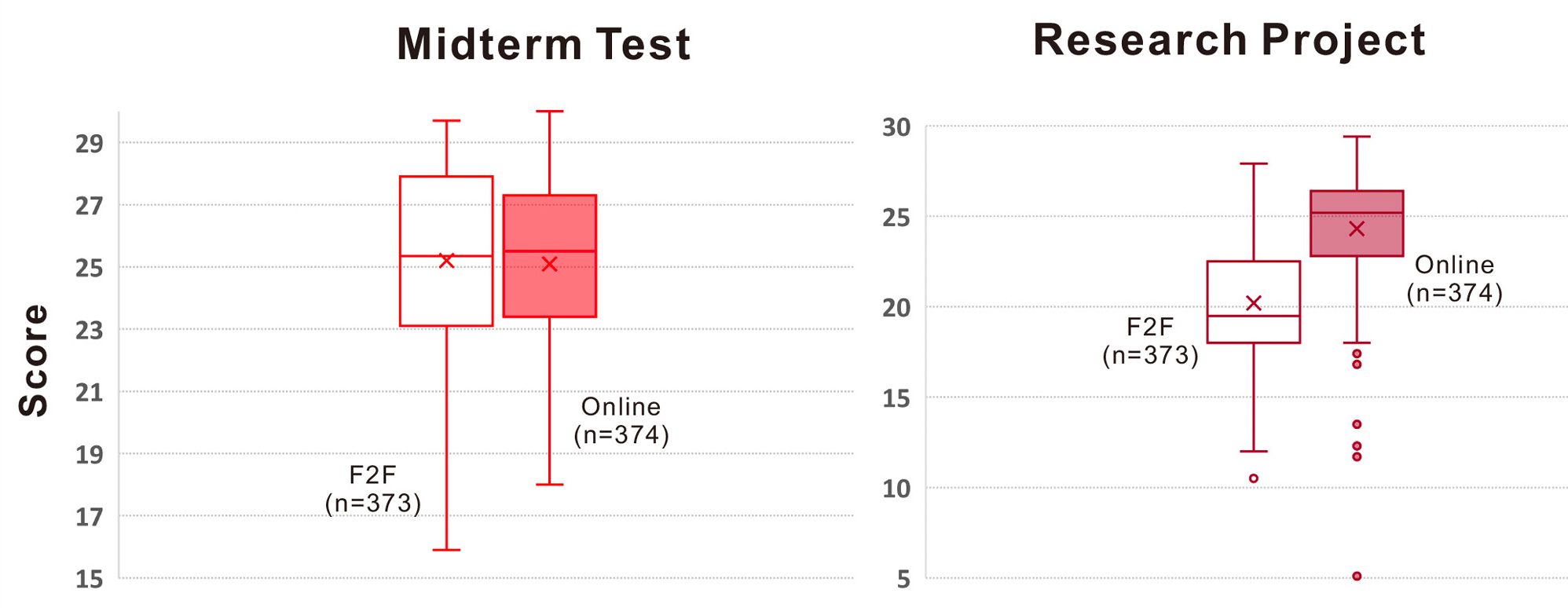

All students

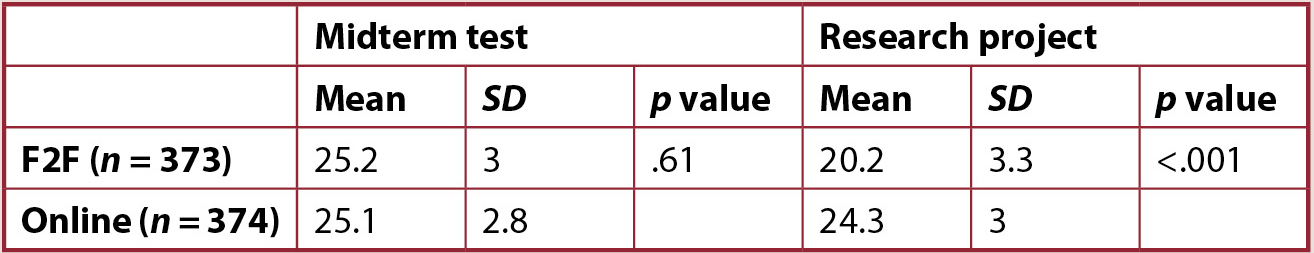

There were a total of 373 students in the F2F cohort and 374 in the online cohort. The average midterm test score for the F2F cohort was 25.2 out of 30 (84%, SD = 3.0; see Figure 2 and Table 3), while that of the online cohort was 25.1 (83.7%, SD = 2.8; see Figure 2 and Table 3). The midterm test scores of the two cohorts were not statistically different (p = .61; see Figure 2 and Table 3). The average research project score of the F2F cohort was 20.2 out of 30 (67.3%, SD = 3.3), whereas that of the online cohort was 24.3 (81%, SD = 3.0). The research project scores of the two cohorts were statistically different (p < .001; see Figure 2 and Table 3).
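The text does not state which statistical test produced these p-values. As a rough plausibility check, the sketch below assumes a Welch-type two-sample comparison computed from the reported means, SDs, and cohort sizes, with a normal approximation for the p-value (reasonable for cohorts of this size). The function name is hypothetical, and because the inputs are rounded summary statistics, the output will not exactly reproduce the reported p = .61.

```python
import math

def welch_z_test(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Welch statistic from summary data with a normal-approximation two-sided p-value."""
    # Standard error of the difference in means, without assuming equal variances.
    standard_error = math.sqrt(sd_a**2 / n_a + sd_b**2 / n_b)
    statistic = (mean_a - mean_b) / standard_error
    # Two-sided tail probability of the standard normal: 2 * (1 - Phi(|z|)).
    p_value = math.erfc(abs(statistic) / math.sqrt(2))
    return statistic, p_value

# Midterm test: F2F (25.2, SD 3.0, n 373) vs. online (25.1, SD 2.8, n 374).
midterm = welch_z_test(25.2, 3.0, 373, 25.1, 2.8, 374)
# Research project: online (24.3, SD 3.0, n 374) vs. F2F (20.2, SD 3.3, n 373).
project = welch_z_test(24.3, 3.0, 374, 20.2, 3.3, 373)

print(f"midterm: statistic = {midterm[0]:.2f}, p = {midterm[1]:.2f}")
print(f"project: statistic = {project[0]:.2f}, p = {project[1]:.2g}")
```

Under these assumptions the midterm difference is far from significant and the research project difference has a p-value well below .001, in line with the reported conclusions.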

Scores on midterm test and research project for all students from F2F.

Midterm test and research project for all students (F2F = face-to-face).

Predictor variables

Since year of study or gender may influence the learning outcome, we examined the influence of these two predictor variables.

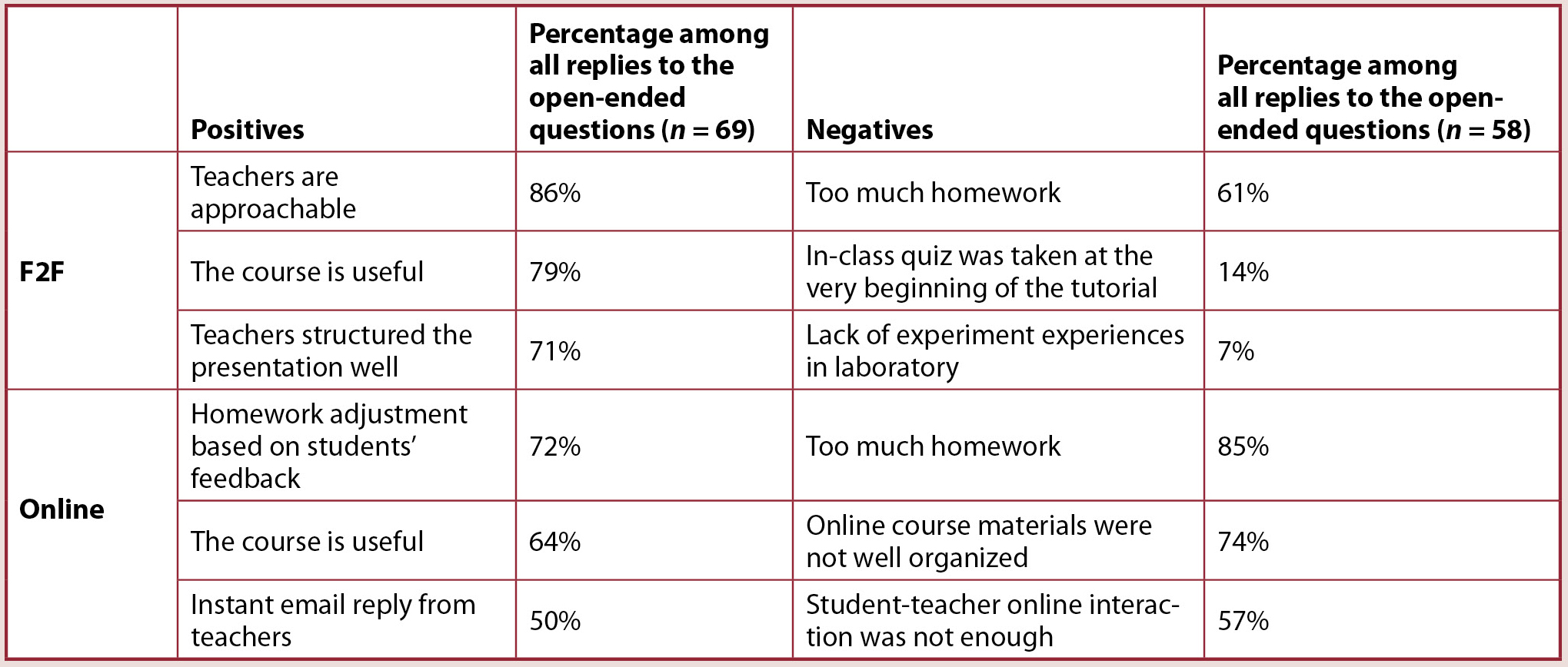

Year of study

There was no statistical difference in midterm test scores between the F2F and online cohorts for either Year 1 (p = .45) or Year 2 (p = .35) students (see Table 4 and Figure 3). For the research project, both Year 1 and Year 2 students from the online cohort received statistically higher scores (mean = 23.8, SD = 4 for Year 1 students; mean = 24.7, SD = 2.8 for Year 2 students) than those from the F2F cohort (mean = 19.7, SD = 2.6 for Year 1 students; mean = 20.7, SD = 3.3 for Year 2 students).

Midterm test and research project scores of students by year of study and gender.

Scores on midterm test and research project by year of study or gender.

Gender

Neither male (p = .21) nor female (p = .76) students showed a statistical difference in midterm test scores between the F2F and online cohorts (see Table 4 and Figure 3). For the research project, online male students earned statistically higher scores (p < .001; mean = 23.9, SD = 2.7; see Table 4 and Figure 3) than F2F male students (mean = 19.6, SD = 3.5). Similarly, female students obtained statistically higher research project scores in the online cohort (p < .001; mean = 24.5, SD = 3.1; see Table 4 and Figure 3) than in the F2F cohort (mean = 20.5, SD = 3.2).

To summarize, students from the F2F and online cohorts received comparable scores on the midterm test irrespective of year of study or gender, but the online cohort received statistically higher scores on the research project in comparison to the F2F cohort for all groups (Year 1 and Year 2, male and female).
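To give a sense of how large the research project differences are, the sketch below computes a standardized mean difference (Cohen's d) for each subgroup from the means and SDs reported above. This is an illustration rather than part of the original analysis. Subgroup sizes are not given in the text, so the code assumes an equal-weight pooled SD, which is only appropriate if the two groups being compared are of similar size; the function and variable names are hypothetical.

```python
import math

def cohens_d_equal_weight(mean_online, sd_online, mean_f2f, sd_f2f):
    """Standardized mean difference with an equal-weight pooled SD (assumes similar group sizes)."""
    pooled_sd = math.sqrt((sd_online**2 + sd_f2f**2) / 2)
    return (mean_online - mean_f2f) / pooled_sd

# (online mean, online SD, F2F mean, F2F SD) for the research project, as reported in the text.
research_project = {
    "Year 1": (23.8, 4.0, 19.7, 2.6),
    "Year 2": (24.7, 2.8, 20.7, 3.3),
    "Male": (23.9, 2.7, 19.6, 3.5),
    "Female": (24.5, 3.1, 20.5, 3.2),
}

effect_sizes = {
    group: cohens_d_equal_weight(*stats) for group, stats in research_project.items()
}

for group, d in effect_sizes.items():
    print(f"{group}: d = {d:.2f}")
```

With these inputs, d exceeds 1 in every subgroup, above the 0.8 threshold conventionally described as a large effect.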

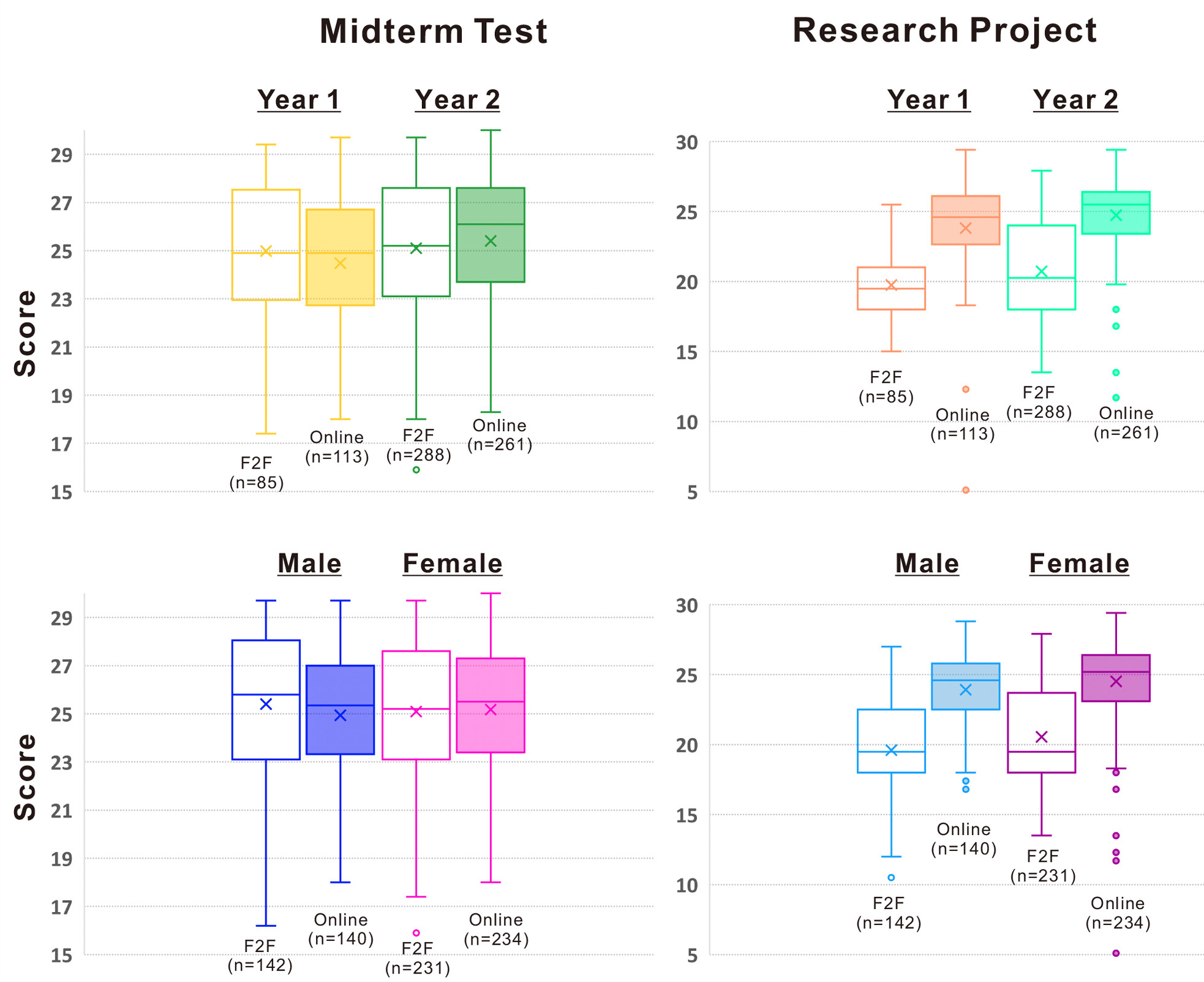

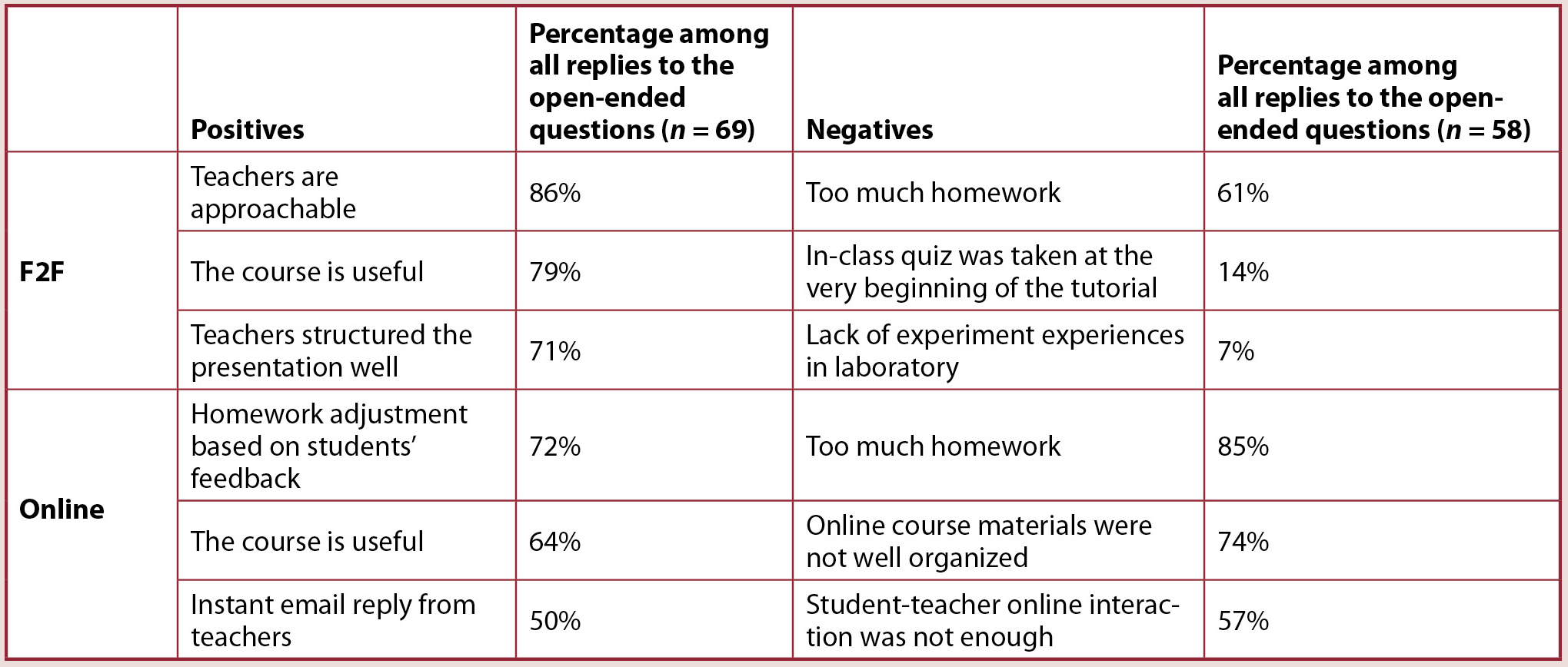

CTLE

In the F2F cohort, the average CTLE score was 4.6 out of 6 (Table 5). For the open-ended question, positive feedback included the following: teachers are approachable (86% among all replies [Table 6]), the course is useful (79%), and the teacher’s presentation is well structured (71%). The negative feedback included the following: too much homework (61%), an unfavorable view of the in-class quiz at the very beginning of the tutorial (14%), and a lack of laboratory experiment experience (7%).

Table 5. Summary of replies to the 6-point Likert-scale questions on the CTLE.

Note. CTLE = Course Teaching and Learning Evaluation; F2F = face-to-face.

Table 6. Summary of replies to the open-ended question on the CTLE.

The online cohort gave a lower average CTLE score (4.0) compared to the F2F cohort (4.6; Table 5). The positive feedback included appreciation of homework adjustment based on students’ feedback (72%), course usefulness (64%), and instant email replies (50%), while negative feedback included too much homework (85%), unsatisfactory organization of the course material (74%), and lack of student-teacher interaction (57%).

To summarize, the F2F cohort reported higher satisfaction with the course than the online cohort according to the average CTLE score. Based on the open-ended feedback, we identified two important factors leading to lower satisfaction with online education: disorganization of the course material and less student-teacher interaction.

Discussion

Manipulation of the delivery mode

We consider our study a quasi-experiment with the course delivery mode fully manipulated because (a) all LU students are non-science majors, and we expect that the students in the two cohorts have comparable levels of science knowledge and skills, which was confirmed by the similar performance regardless of year of study or gender; (b) we observed comparable withdrawal rates for this course (i.e., 4.0% and 4.5% for F2F and online, respectively) because students are required to take this course; (c) spring 2020 unexpectedly shifted to online delivery mode due to COVID-19, and students (and teachers) had not known the course would be taught via online delivery mode; and (d) the course materials, teachers, and grading standards were the same for both cohorts. Therefore, we believe there was little to no self-selection of delivery mode in our study.

Interpretation of the data

Based on the goals of a course assessment, the assessment can be connected to a type of analytical skill following Bloom’s taxonomy (Bloom et al., 1956). The midterm test examines students’ understanding and application abilities, both of which are medium-order analytical skills. The midterm test scores for the F2F and online cohorts were statistically indistinguishable, irrespective of the two predictor variables we tested (year of study or gender). Therefore, we regard F2F and online delivery modes as comparably effective in promoting medium-order analytical skills.

The research project requires students to integrate knowledge with analysis, evaluation, and synthesis skills, all of which belong to the category of higher-order analytical skills. Compared to the F2F cohort, the online cohort’s score was statistically higher, suggesting that the online delivery mode is more effective at promoting the higher-order analytical skills. Our findings echo those of Parcel et al. (2018), who revealed that students engaged in online education earned higher scores on an essay exam and were more effective at building up higher-order analytical skills.

In general, we received a low response rate for the CTLE (36% for F2F and 28% for online), which may indicate poor representation of students’ sentiments. However, considering the large class size (around 370 students in each cohort), some sensible conclusions about students’ satisfaction can be drawn based on the available responses. We found that the F2F cohort had higher satisfaction with the course than the online cohort, as seen in the average scores (F2F = 4.6, online = 4.0 [see Table 5]). This is consistent with the study by Bergstrand and Savage (2013), which revealed that students rated F2F lessons higher since they felt they were treated with more concern and respect. This also echoes a recent study in Hong Kong, which revealed that only 27% of university students were satisfied with their online learning experiences (Mok et al., 2020).
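The response rates quoted here can be recovered from the reply counts in the Data collection section and the cohort sizes. The sketch below only redoes that arithmetic; the variable names are illustrative.

```python
# Reply counts and cohort sizes as reported in the text.
likert_replies = {"F2F": 135, "online": 103}
open_ended_replies = {"F2F": 69, "online": 58}
cohort_size = {"F2F": 373, "online": 374}

# CTLE response rate: Likert-scale replies as a percentage of the cohort.
response_rate = {c: 100 * likert_replies[c] / cohort_size[c] for c in cohort_size}

# Share of respondents who also answered the open-ended question, in percent.
open_ended_share = {c: 100 * open_ended_replies[c] / likert_replies[c] for c in cohort_size}

for c in cohort_size:
    print(f"{c}: response rate = {response_rate[c]:.1f}%, "
          f"open-ended share = {open_ended_share[c]:.1f}%")
```

Rounded to whole percentages, the response rates come out at 36% and 28%, matching the values stated above.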

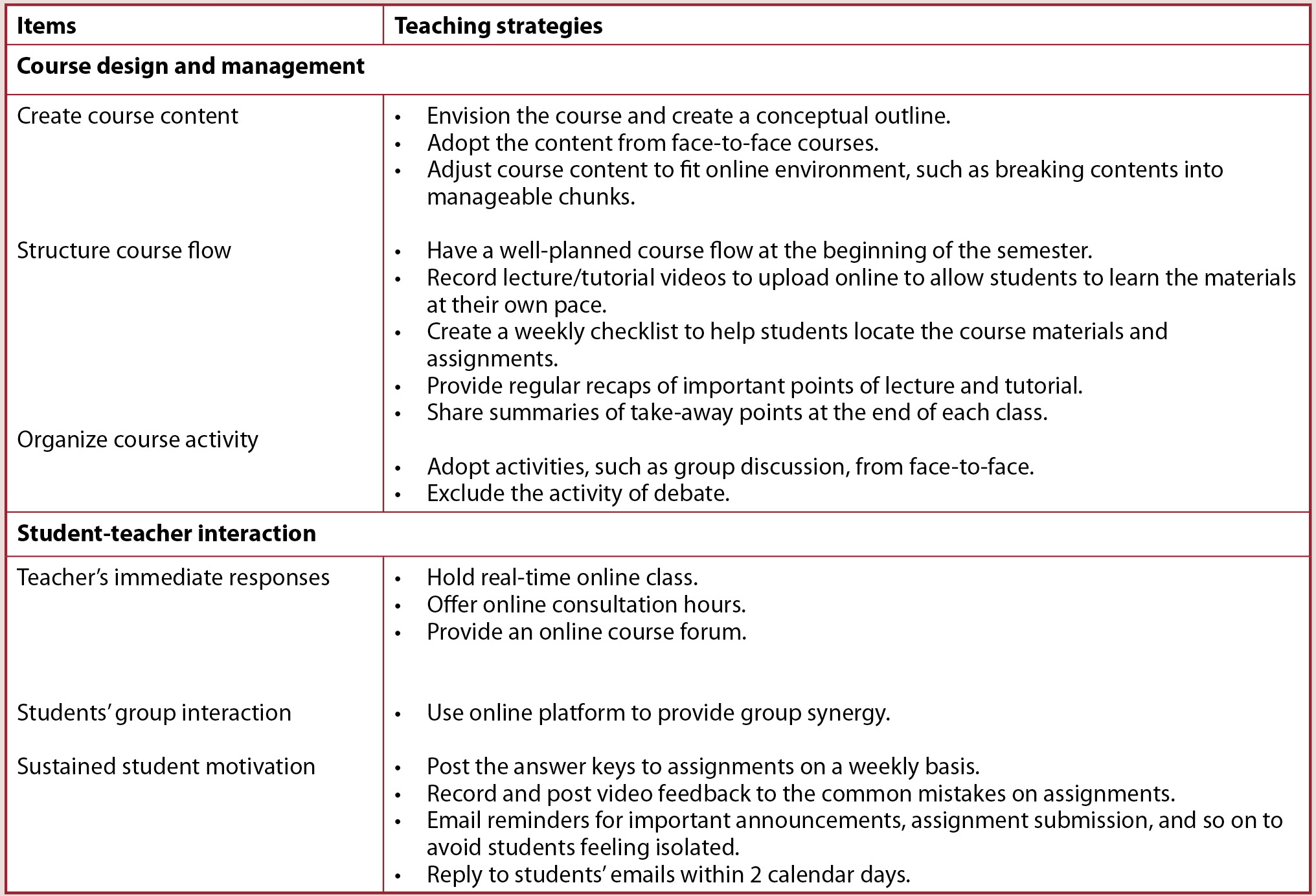

Good practices in online education

Although the fundamental principle of quality pedagogy is constant regardless of delivery mode, translating those elements into the online environment presents a unique challenge (Driscoll et al., 2012). Based on our experiences (see Table 6), given sound course content, we identify two fundamental elements for effective online education: well-structured course design and management, and frequent and instant student-teacher interactions. We discuss each of these in detail below.

Well-structured course design and management

When moving to online education, teachers usually cannot copy what they do in traditional F2F classrooms and expect equal effectiveness. Instead, teachers need to redesign the course to adapt to the online environment (McKenzie et al., 2000), which includes reorganizing course content, restructuring course flow, and redesigning course activities.

Since the outbreak of COVID-19 was unexpected, all courses in spring 2020 at LU were abruptly switched to the online mode, which created a challenge when redesigning an F2F class to be taught online. From our experience, we found it helpful to reorganize course content by taking a big-picture view of the course (Table 7). This approach echoes the study by Baran et al. (2013), which revealed it is helpful to envision the entire course from initiation to completion. We maintained the majority of the course content from the F2F cohort (Table 1). This turned out to be an effective practice, especially when faced with a limited amount of preparation time. However, we modified the content to fit an online environment (Table 7), such as by breaking the content into short learning modules to help students locate the materials more easily.

Table 7. Practical teaching strategies for online education.

While restructuring the course flow, there is always tension between flexibility and structure (Kanuka et al., 2002). Some teachers believe that course content and activities need to be structured early to improve student learning and efficiency (Coppola et al., 2002), while others prefer flexibility to suit students’ learning progress (Conceição, 2006). At the onset of online teaching, we attempted to preserve flexibility by providing some additional reading materials to facilitate students’ online learning. These materials, however, proved disruptive and confused students. Therefore, in the context of a large-capacity course, a well-structured rather than a flexible course flow turned out to be more effective (Table 7). We also used the following approaches to structure the course flow. First, both the lecture and tutorial materials were uploaded online to allow students to learn in their own time and at their own pace (Table 7). Second, we provided weekly checklists to help students locate the course materials and assignments, which students mentioned was helpful (Table 7). Third, we intentionally recapped the important points at the end of lectures and tutorials (Table 7), which not only strengthened students’ learning but also helped them understand how this course was designed.

As for the course activities, we kept some of the activities (such as group discussion) but excluded debate (Table 1) because it was ineffective in promoting group interactions in the online setting. In the online cohort, we hosted group discussions via the breakout room function in Zoom Cloud Meetings. One of the major differences we found between F2F and online group discussions was that shy students spoke more frequently in the online setting.

Frequent and instant student-teacher interactions

Student-teacher interaction is regarded as essential for the development of students’ cognitive and social skills and is also a significant predictor of students’ perceived learning and satisfaction (Thurmond et al., 2002; LaPointe & Gunawardena, 2004; Russo & Benson, 2005). To improve student-teacher interaction, at the very beginning of the semester for both cohorts, we took time to explain the course organization, intended learning outcomes, and assessments to help students get an overview of the course. Such an approach has been shown to help alleviate students’ anxiety. Three common critiques arise for student-teacher interaction in online education: lack of immediate conversation, difficulty in conducting student group interaction, and difficulty with sustaining student motivation. We tried to avoid these problems in our online cohort by employing some practical measures.

Immediate conversation, such as teachers’ ability to see and interpret students’ reactions and gauge students’ levels of understanding, is critical in teaching (Parcel et al., 2018). In F2F settings, teachers easily see student reactions and can estimate their level of understanding (Brennan & Lockridge, 2006). In online settings, teachers cannot immediately see students’ reactions since most students tend to keep their cameras off and use the chatroom to communicate with teachers (Baran et al., 2013), and typed messages may result in misunderstandings because they lack emotion and sensory cues. To circumvent this problem, we encouraged students to switch on their cameras in the small-classroom tutorials (Table 7). We felt this approach helped build rapport between teachers and students by initiating immediate conversation between teacher and students. After we discovered the benefit of immediate conversation, we provided regular online consultation hours to reply to students’ questions, as well as an online course forum for students to ask questions and voice opinions anytime (Table 7).

To maintain student group interactions, we employed the breakout room function of Zoom Cloud Meetings, where students join different groups and have a private group discussion. This function also allows teachers to join and leave any breakout room during the discussion (Table 7), and it helped stimulate meaningful group interaction. We found that students were more willing to participate in group discussions in the online setting, which may be due to this generation’s familiarity with and preference for computer-mediated communication.

One major criticism of online education has been that it cannot sustain student motivation (Summers et al., 2005; Mok et al., 2020). We believe this may be because students cannot receive instant feedback from teachers and students feel isolated or not cared for. To improve this situation, we tried to post answer keys to assignments right after the submission date and make videos providing feedback for students’ common mistakes. We also tried to repeat the important course announcements, remind students of deadlines, and reply to student emails in a timely manner (within 2 days). These approaches turned out to be very effective (Table 7).

Limitations

Our study indicates that most students favor F2F education, although online education turns out to be equally or more effective in achieving learning outcomes. However, students were relatively dissatisfied with the online learning experience. This may be due to the education tradition of LU, which has an intimate learning community and close student-teacher relationships. Because our study is based on an introductory science course for non-science majors in the context of a liberal arts university, additional studies of different universities and classroom settings will be needed to evaluate the generalizability of our findings.

Conclusions and further studies

We took a quantitative and qualitative approach to compare student performance and satisfaction between F2F and online learning modes. Based on quantitative analysis, we found that online education, compared to F2F education, is equally effective in developing medium-order analytical skills (i.e., understanding and application skills) and more effective in developing higher-order analytical skills (i.e., analysis, evaluation, and synthesis skills). Student responses to the course survey revealed that students favored F2F learning, which may be due to LU’s tradition of close student-teacher relationships.

Our study allows us to refine traditional teaching approaches with a new orientation, blended learning (Boda & Weiser, 2018; Mok et al., 2020): incorporating online learning modules to accommodate different learning styles while retaining F2F sessions to provide quality student-teacher interaction. This echoes recent work by Mok et al. (2020), who found that 65% of Hong Kong university students favored blended learning over F2F or online learning. Moreover, despite the rapid growth of and high demand for online technologies in higher education, online education has not been treated as a new educational experience with its own conditions and affordances (Levine & Sun, 2002; Garrison & Anderson, 2003). It is therefore important to ground and drive online courses based on sound online pedagogy, rather than apply suboptimal F2F ideas. We look forward to seeing the developments in this exciting new field.

Acknowledgments

This work was supported by the Teaching Development Grants (102454, 102498) of Lingnan University. We owe thanks to Professor Jonathan Fong for the constructive feedback on and polishing of this article. We thank the anonymous reviewers for the constructive comments on this article.

Hongyan Geng (helengeng@ln.edu.hk) is a lecturer and Mark McGinley is a professor of teaching, both in the Science Unit at Lingnan University in Hong Kong.
