Practical Research

Utilizing Technology to DiALoG Classroom Speaking and Listening

Science Scope—January/February 2022 (Volume 45, Issue 3)

By J. Bryan Henderson and Amy Lewis

Engaging in argument from evidence is a core practice of the Next Generation Science Standards (NGSS Lead States 2013). This is, in part, because evidence suggests that science learning can be enhanced by giving students opportunities to “construct evidence-based, explanatory hypotheses or models of scientific phenomena and persuasive arguments that justify their validity” (Osborne 2010, p. 465). Osborne qualifies this by noting that “although this may happen within the individual, it is debate and discussion with others that are most likely to enable new meanings to be tested by rebuttal and counter-arguments” (p. 464). Indeed, standards for effective speaking and listening (including “following rules for collegial discussions,” being able to “demonstrate understanding of multiple perspectives through reflection and paraphrasing,” and having the ability to “delineate a speaker’s argument and specific claims, distinguishing claims supported by reasons and evidence from claims that are not”) are important parts of the Common Core State Standards Initiative (NGAC and CCSSO 2010).

However, while speaking and listening may be fundamental to debate and discussion with others, reading and writing typically receive more attention in classroom assessment. As a result, teachers have access to relatively few valid and reliable assessments of speaking and listening, and almost none focused on the construction and critique of scientific arguments. For better or worse, how assessments are designed is strongly associated with how teachers structure classroom practice (Desoete 2008). If few speaking and listening assessments are available to teachers, classroom opportunities for students to articulate and listen to scientific arguments may be rare.

Therefore, one objective of our collaboration has been to develop a simple, practical technology that can overcome some of the challenges of assessing the quality of speaking and listening during classroom arguments. The technology is named DiALoG (Diagnosing Argumentation Levels of Groups), and this article focuses on how we have been attempting to integrate it into a middle school science argumentation curriculum. To help readers visualize the synergy of theory and practice in our collaboration, the theoretical perspective of the researcher and the practical approach of the teacher are indicated by black and green text, respectively.

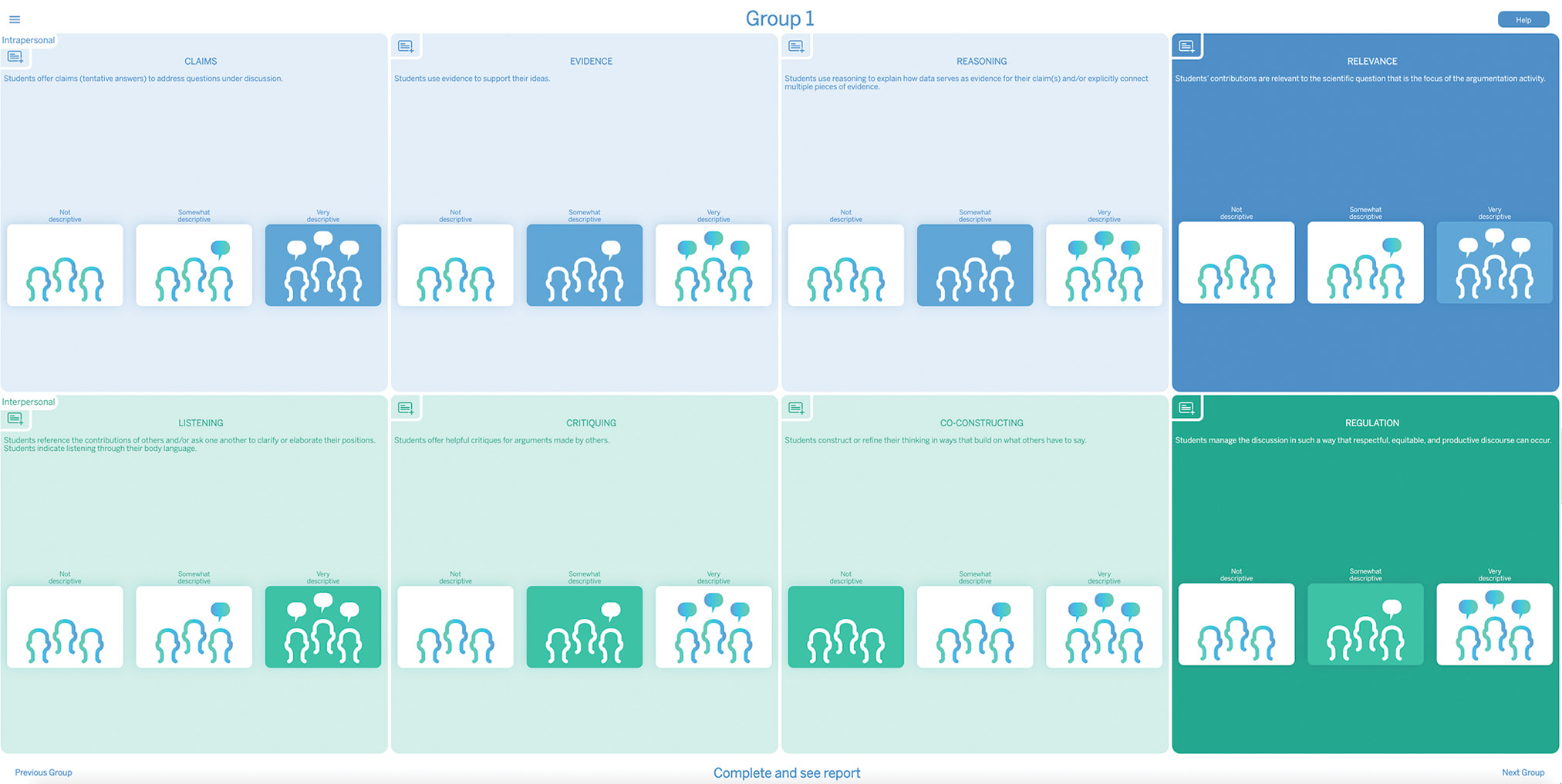

Teachers use a touch screen to score groups of students on up to eight important aspects of oral argumentation. As detailed in Henderson et al. (2021), these eight aspects fall under two primary dimensions: the Intrapersonal dimension covers the aspects of oral argumentation that take place inside the heads of individual learners, while the Interpersonal dimension covers the ways in which oral argumentation is also a dynamic social process. The Intrapersonal dimension consists of four assessment items: Claims, Evidence, Reasoning, and Relevance. The Interpersonal dimension contains four more: Listening, Critiquing, Co-Constructing, and Group Regulation. Each of the eight assessment items has a descriptive statement (e.g., “Students use evidence to support their ideas”), and teachers rate how well that statement describes what they have just observed in their classroom (not descriptive, somewhat descriptive, or very descriptive). The “complete and see report” option at the bottom of the screen (see screenshot in Figure 1) allows teachers to export the scores in PDF or CSV format. The Digital Scoring Tool can be used with groups as small as two students or as large as a whole-class discussion. The key is that scores are assigned to the group dynamic as a whole, not to individual contributions. Teachers can choose to spend enough time observing a group to score all eight aspects of oral argumentation or, instead, to focus on a smaller subset of aspects across a larger number of groups.
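To make the structure of this scoring model concrete, here is a minimal Python sketch of how group-level ratings on the eight items might be recorded and exported as CSV. It is a hypothetical illustration, not DiALoG’s actual implementation: the function names, the numeric coding of the three rating levels, and the CSV column layout are all assumptions.

```python
import csv
import io
from enum import IntEnum

# Hypothetical model of the rubric described above: two dimensions with four
# assessment items each. Item names follow the article; the structure is assumed.
DIMENSIONS = {
    "Intrapersonal": ["Claims", "Evidence", "Reasoning", "Relevance"],
    "Interpersonal": ["Listening", "Critiquing", "Co-Constructing", "Group Regulation"],
}
ITEM_TO_DIMENSION = {item: dim for dim, items in DIMENSIONS.items() for item in items}


class Rating(IntEnum):
    """How descriptive an item's statement is of the observed group (assumed 0-2 coding)."""
    NOT_DESCRIPTIVE = 0
    SOMEWHAT_DESCRIPTIVE = 1
    VERY_DESCRIPTIVE = 2


def score_group(group: str, ratings: dict[str, Rating]) -> dict:
    """Record one group-level observation; any subset of the eight items may be rated."""
    unknown = set(ratings) - set(ITEM_TO_DIMENSION)
    if unknown:
        raise ValueError(f"unknown items: {sorted(unknown)}")
    return {"group": group, "ratings": dict(ratings)}


def to_csv(observations: list[dict]) -> str:
    """Export observations as CSV, one row per (group, item); unrated items are omitted."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["group", "dimension", "item", "rating"])
    for obs in observations:
        for item, rating in obs["ratings"].items():
            writer.writerow([obs["group"], ITEM_TO_DIMENSION[item], item, rating.name])
    return buffer.getvalue()


# Example: a teacher focuses on two Interpersonal items for one group.
observation = score_group("Group A", {
    "Listening": Rating.VERY_DESCRIPTIVE,
    "Critiquing": Rating.NOT_DESCRIPTIVE,
})
print(to_csv([observation]))
```

Keying each row by group and item, rather than by student, mirrors the point that scores describe the group dynamic as a whole; leaving items out of `ratings` corresponds to a teacher scoring only a subset of aspects.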

Figure 1. Screenshot of the DiALoG Digital Scoring Tool.

DiALoG is an assessment system

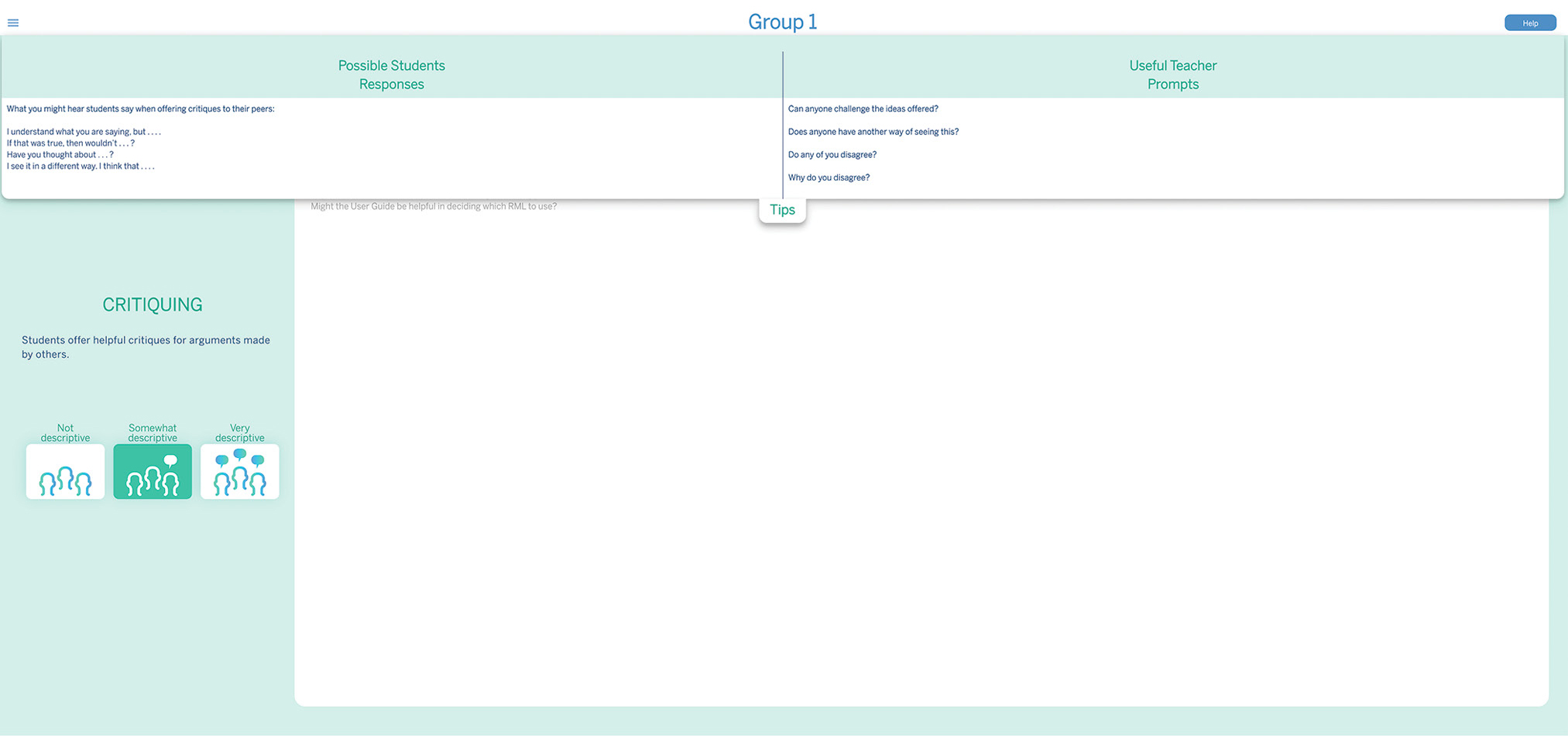

DiALoG is a digital assessment system that teachers can access with any web browser. We characterize it as a system because it is designed to do more than merely assign scores for various aspects of classroom argumentation. The system begins with the Digital Scoring Tool (Figure 1), allowing teachers to score the eight important aspects of oral argumentation described previously. For each aspect of classroom argumentation that teachers score with DiALoG, a User Guide (see screenshot in Figure 2) built into the software provides hints about student catchphrases to listen for as well as instructional prompts that can be used to raise the level of student engagement.

Figure 2. Screenshot of a DiALoG User Guide.

The DiALoG User Guide clearly explains how the Digital Scoring Tool can be used. While I was teaching a unit on adaptations, my students developed two different claims for why fish lost their armor and started swimming faster. When they were unable to reach a decision on their own, I used the drop-down tab of suggested instructional prompts to spark more co-construction. The prompts kept the students engaged and focused on comparing two possible drivers of the adaptation: the fish’s need to escape predators and its need to obtain prey.

A key feature in the design of the DiALoG system is that it enables teachers to act on the scores recorded by the Digital Scoring Tool. When teachers touch one of the eight components of the Digital Scoring Tool, they can annotate their observations and adjust the score as they do so. To visualize this, imagine a teacher looking at the eight aspects of argumentation depicted in Figure 1 who wishes to focus on the Critiquing assessment item. When the teacher presses the upper left-hand corner of the Critiquing box (Figure 1), the Digital Scoring Tool opens a User Guide (Figure 2) that provides space to annotate observations about the extent to which different student groups are able to respectfully critique each other. The current score on the item is displayed next to the annotation space, and teachers are free to update the score during annotation and reflection. The User Guide also contains a drop-down menu that supports this reflective process with suggested tips pertinent to the component being scored. For example, the User Guide depicted in Figure 2 lists things teachers might listen for as indications that student critique is taking place (e.g., “I understand what you are saying, but …”; “Have you thought about …?”), as well as prompts teachers might use to encourage more critique among their students (e.g., “Does anyone have another way of seeing this?”; “Do any of you disagree? Why?”).

For the scores teachers assign, the DiALoG system suggests Responsive Mini-Lessons (RMLs), which have been created for both low and moderate scores on each of the eight aspects of oral argumentation scored by DiALoG (16 RMLs total). These RMLs are intended as near-term, follow-up activities for aspects of classroom argumentation that the DiALoG system has revealed to need further support. For example, the RMLs for components like Listening and Group Regulation give students guidance on how to work with their classmates to create safe spaces for oral argumentation. Thus, using the DiALoG system involves more than just assigning scores: repeated use can refine what teachers look for during classroom arguments, and RMLs allow teachers to act on the scores they assign.
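The count of 16 RMLs follows from pairing each of the eight aspects with the two score levels that have mini-lessons (8 × 2 = 16). The Python sketch below shows one hypothetical way such a lookup could work. The mapping from the three rating levels to “low” and “moderate” scores, and the placeholder RML titles, are assumptions rather than details of DiALoG itself.

```python
from typing import Optional

ITEMS = [
    "Claims", "Evidence", "Reasoning", "Relevance",
    "Listening", "Critiquing", "Co-Constructing", "Group Regulation",
]

# Assumed mapping from the three-level rating to the RML score bands.
RATING_TO_BAND = {
    "not descriptive": "low",
    "somewhat descriptive": "moderate",
    "very descriptive": None,  # assumed: no follow-up mini-lesson is suggested
}

# One placeholder RML per (item, band): 8 items x 2 bands = 16 entries.
RML_CATALOG = {
    (item, band): f"{item} RML ({band} score)"  # hypothetical titles
    for item in ITEMS
    for band in ("low", "moderate")
}


def suggest_rml(item: str, rating: str) -> Optional[str]:
    """Return the placeholder RML for a scored item, or None if no RML applies."""
    band = RATING_TO_BAND[rating]
    if band is None:
        return None
    return RML_CATALOG[(item, band)]


print(len(RML_CATALOG))  # 16
print(suggest_rml("Critiquing", "not descriptive"))  # Critiquing RML (low score)
```

Keying the catalog by (item, band) keeps the lookup a single dictionary access, so a teacher’s low score on Critiquing, as in the Borneo example, maps directly to its follow-up lesson.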

While studying cause-and-effect relationships in Borneo, my students were struggling to critique one another. They either agreed with everything or gave unhelpful feedback such as “That just sounds stupid” or “That is a dumb idea.” To spark more helpful critique, I used my Digital Scoring Tool results and the suggested RML for a low score on Critiquing. The RML took only 10 minutes. The class then resumed its argument about the causes and effects of applying pesticides in Borneo, and students applied what they had learned as the argumentation about these relationships continued.

Findings from classroom integration of DiALoG

Training workshops, classroom observations, interviews, and surveys have revealed several major themes in our attempts to integrate DiALoG into the classroom. One is that the DiALoG Digital Scoring Tool, the User Guide, and the accompanying RMLs helped teachers shift from a teacher-centered to a more student-centered form of instruction. With the Digital Scoring Tool in their hands, teachers interjected in student conversations less readily than they were accustomed to, and when they did interject, their interjections were based more on feedback from the assessment.

While I was teaching a lesson on genetic engineering, we discussed the idea of cloning pets. I caught myself interjecting information after every student spoke. On recognizing this, I quickly grabbed the DiALoG Digital Scoring Tool. It reminded me to keep myself out of the discussion so that students would discuss their ideas with one another rather than with me. The whole lesson shifted, and the students began to talk with one another. I could hear new information backed by evidence and data. By using the Digital Scoring Tool to monitor the discussion rather than participating in it, I allowed students to converse and draw on their own experiences rather than just listening to mine.

Another trend that has emerged is that teachers noticed gaps in their understanding of more nuanced aspects of student discussion. For example, teachers felt that DiALoG called their attention to student critique and co-construction, classroom activity they had not previously thought to look for, and improved their ability to recognize it.

One day, I had not planned an argumentation activity, but an opportunity presented itself. Two students had opposing viewpoints about trying to live on Mars, and the class started a discussion. The students were able to push the discussion beyond Claims, Evidence, and Reasoning (three of the aspects of argumentation that the DiALoG system helps me assess). Other classmates joined the discussion, adding useful Critique (another aspect of argumentation assessed with DiALoG) along with additional Evidence and Reasoning. We never reached a conclusion that satisfied all sides, but the process of argumentation, with its multiple facets, was evident.

Development of the DiALoG system continues as we receive more valuable feedback from attempts at classroom integration. Because practicality and usefulness for teachers are central to the mission of the DiALoG project, our intention is to make the DiALoG system available for free in perpetuity. The most current version of the system is freely accessible (see link to DiALoG Science Argumentation Tool in Online Resources).

We encourage feedback from new teacher users, just as feedback from practical use has been central to our attempts to integrate DiALoG into the classroom. To see the tool in action, watch the informational video documenting our classroom collaboration with DiALoG (see DiALoG NSTA in Online Resources).

Acknowledgments

This research has been made possible through the generous support of the following grants: Supporting Teacher Practice to Facilitate and Assess Oral Scientific Argumentation: Embedding a Real-Time Assessment of Speaking and Listening into an Argumentation-Rich Curriculum (National Science Foundation, #1621496 and #1621441); Constructing and Critiquing Arguments in Middle School Science Classrooms: Supporting Teachers with Multimedia Educative Curriculum Materials (National Science Foundation, #1119584); and Constructing and Critiquing Arguments: Diagnostic Assessment for Information and Action System (Carnegie Corporation of New York, #B-8780).

Online Resources

DiALoG Science Argumentation Tool—http://tinyurl.com/dialogtool

DiALoG NSTA—https://tinyurl.com/DiALoG-NSTA-Full

J. Bryan Henderson (jbryanh@asu.edu) is an associate professor of science education at Arizona State University’s Mary Lou Fulton Teachers College in Tempe, Arizona. Amy Lewis is a teacher at Peoria Unified School District 11 in Glendale, Arizona.
