Research to Practice, Practice to Research

The Common Instrument Suite

A Means for Assessing Student Attitudes in STEM Classrooms and Out-of-School Environments

Connected Science Learning July-September 2019 (Volume 1, Issue 11)

By Cary Sneider and Gil G. Noam

The fundamental ideas in John Dewey’s 1913 essay, Interest and Effort in Education, are as true today as they were when he published it more than a century ago. His key point was that interest can motivate students to undertake efforts that may not be immediately engaging, and once they are engaged, they will start to develop skills and knowledge, leading to intellectual growth and development. The importance of interest and motivation is reflected in A Framework for K–12 Science Education, which states that “Learning science depends not only on the accumulation of facts and concepts, but also on the development of an identity as a competent learner of science with motivation and interest to learn more” (NRC 2012, p. 286).

Figure 1

Image courtesy of Museum of Science, Boston

This emphasis on students’ attitudes and self-concept is certainly not a surprise to teachers. Both classroom teachers and afterschool and summer program facilitators know that engaging their students’ interest is essential for learning to occur. Yet only cognitive learning is routinely assessed. One reason attitudes are rarely assessed is that they are not generally included in education standards. The Next Generation Science Standards acknowledge the importance of attitudinal goals (NGSS Lead States 2013) but do not include them as capabilities for assessment. Nonetheless, even though attitude changes are not valued in the same way as cognitive accomplishments, there are good reasons for assessing them. That is especially true in afterschool and summer programs, where getting kids interested in STEM (science, technology, engineering, and math) is often the primary goal. But it is also important in classrooms, so that teachers can find out which activities and teaching methods inspire their students.

Although it is common to “take the temperature” of the class by observing the level of activity in the room and listening to students’ conversations, assessing changes in each individual student’s interest, motivation, and identity as a STEM learner is uncommon. Observing student engagement alone does not pick up subtler changes in attitudes, differences between boys and girls, or the views of quieter students. The Common Instrument Suite for Students (CIS-S) was designed to capture exactly these kinds of individual changes. Although it was initially developed for use outside of school, it is of equal value in the classroom.

The Common Instrument Suite for Students

One way to know what young people are thinking or feeling is to ask them using a self-report survey. There are many such instruments in the literature that use various formats and types of questions, usually designed to evaluate a particular program. To help program leaders and evaluators take advantage of the tools that have already been developed, The PEAR Institute: Partnerships in Education and Resilience, located at McLean Hospital, an affiliate of Harvard Medical School in Boston, Massachusetts, collected existing assessment instruments and made them available through a website: Assessment Tools in Informal Science (ATIS). Each of the 60 tools on the ATIS website has been vetted by professional researchers, briefly described, categorized, and posted so that it is searchable by grade level, subject domain, assessment type, or custom criteria. In addition, the site links to the papers in which the actual instruments appear, so users can easily access a tool once they have chosen one that could be useful.

ATIS is a free service developed by The PEAR Institute with support from the Noyce Foundation. Although ATIS removed one burden, the need to develop a new measurement instrument for every program evaluation, there was another problem that ATIS alone could not solve. At the time, the Noyce Foundation was providing millions of dollars in funding to several large youth organizations to infuse STEM into their camps and clubs. Each organization had its own evaluator, and each evaluator used a different tool to measure impact. As long as different programs were evaluated with different instruments, it was not possible to compare results and determine which programs and approaches were most effective at getting kids interested in STEM and helping them develop an identity as STEM learners. Ron Ottinger, executive director of the Noyce Foundation (now called STEM Next), asked an important question: Why not bring together the directors and evaluators to see whether they could agree on one of the instruments from the ATIS website?

In July 2010, we (Sneider and Noam) facilitated a two-day meeting of several grant directors and evaluators to examine the instruments on the ATIS website and see whether we could agree on one to measure the impact of every program. The participants agreed that they all wanted youth to develop positive attitudes toward engaging in STEM activities, but none of the existing instruments was acceptable: most were too long or applied only to specific programs. Eventually the group developed a new 23-item self-report survey of student engagement. One of us (Noam), working with The PEAR Institute, tested and refined the instrument on behalf of the team, eliminating questions that did not contribute significantly to its reliability. The final result was the Common Instrument (CI), a brief but highly valid and reliable self-report survey. Now only 14 items long, it takes only five minutes to complete, yet it captures students’ degree of engagement by asking them to indicate their level of agreement or disagreement with statements such as “I like to participate in science projects” (Noam et al. 2011).
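The article does not say which reliability statistic guided the trimming from 23 items to 14. A widely used option is Cronbach's alpha, together with an "alpha if item deleted" check that flags items whose removal would raise a scale's internal consistency. The sketch below assumes a 1-4 agreement scale and uses invented responses from five students to four items; it illustrates the general technique, not PEAR's actual procedure.

```python
from statistics import pvariance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of item scores."""
    k = len(responses[0])  # number of items
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Population variances are used throughout; the n/(n-1) correction
    # would cancel in the ratio below, so the result is the same either way.
    item_vars = [pvariance([row[i] for row in responses]) for i in range(k)]
    # Variance of each respondent's total score across all items.
    total_var = pvariance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


def alpha_if_deleted(responses):
    """Alpha recomputed with each item left out in turn."""
    k = len(responses[0])
    return [cronbach_alpha([row[:i] + row[i + 1:] for row in responses]) for i in range(k)]


# Hypothetical responses: 1 = strongly disagree ... 4 = strongly agree.
responses = [
    [4, 4, 4, 1],
    [3, 3, 4, 4],
    [2, 2, 1, 2],
    [1, 1, 2, 3],
    [4, 3, 4, 2],
]

print(round(cronbach_alpha(responses), 2))       # 0.64
print(round(alpha_if_deleted(responses)[3], 2))  # 0.94
```

Here the fourth item barely tracks the other three, so dropping it raises alpha from about 0.64 to about 0.94. Real item refinement would use far larger samples and would also weigh whether the remaining items still cover the intended content.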

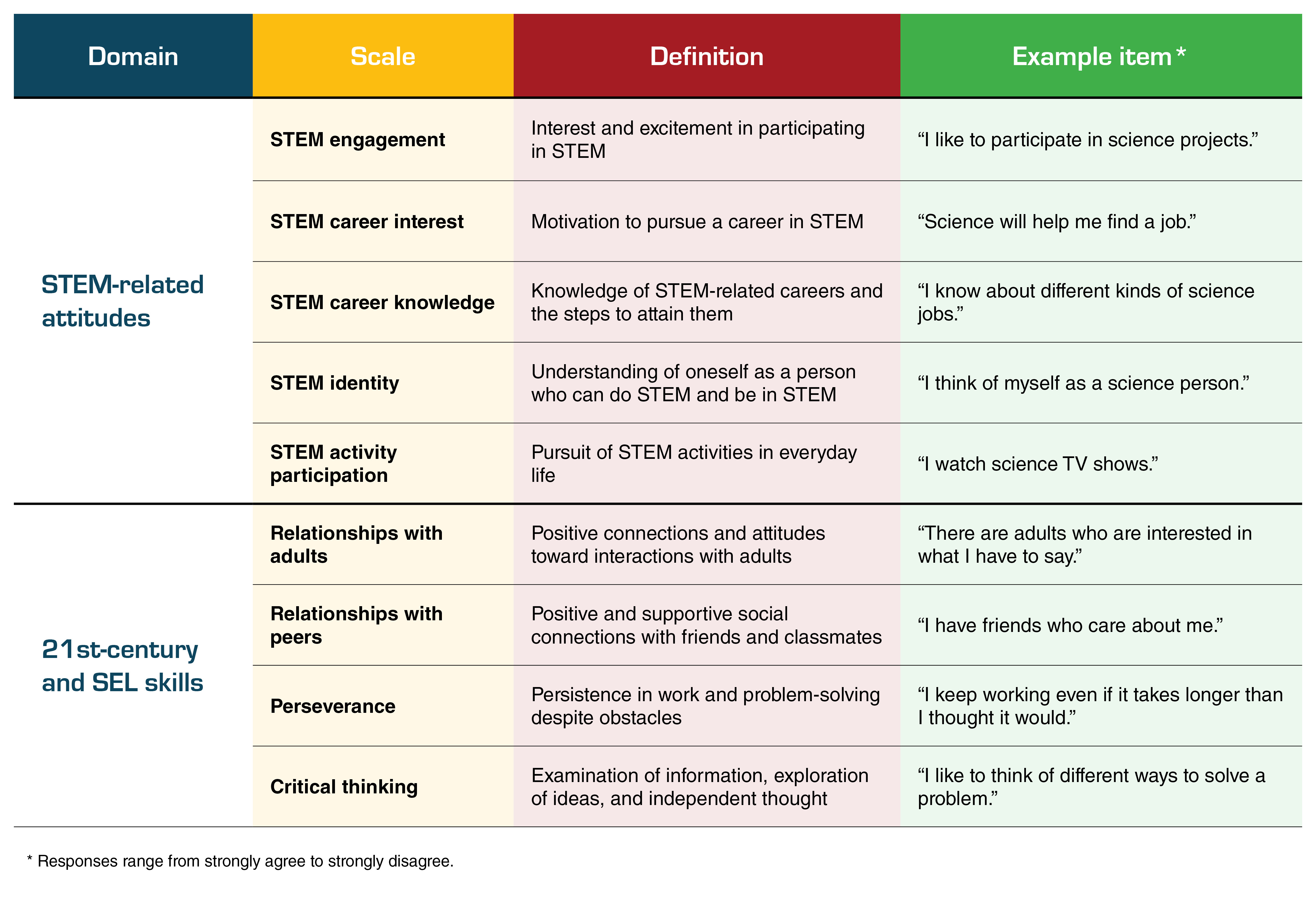

Over the next few years, practitioners, funders, and policy makers asked whether the CI could be extended to measure other dimensions of STEM attitudes, such as knowledge of and interest in STEM careers, identification as someone who can “do” STEM, and voluntary participation in STEM-related activities. Other leaders asked whether the CI might also be expanded to include outcomes related to 21st-century/social-emotional skills such as critical thinking, perseverance, and relationships with peers and adults. New items were developed and tested to measure these additional dimensions, and the result was the valid and reliable CIS-S. Evaluators can use just the CIS-S questions related to STEM engagement or add further sets of questions to measure any of the other dimensions. All nine dimensions, with accompanying sample questions, are shown in Table 1. A survey that measures all nine dimensions has 57 items, usually takes about 15 minutes to complete, and can be used from fifth grade up. The shorter, 14-item version is recommended for third grade and up. The complete instrument has also been tested for validity, reliability, and potential gender and multicultural bias (Noam et al., unpublished manuscript).

Importantly, all scales of the CIS-S have national norms by age band and gender, so every child and every program can be compared with a representative sample. This is what makes the instrument truly common: the norms serve as benchmarks against which local results can be judged without collecting control group data. Each user benefits from all the data collected previously and contributes to the common data pool, which now holds more than 125,000 responses to the CI and CIS-S.
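The article does not describe how CIS-S results are compared with the national norms. One simple way to express a score relative to a norm group is as a z-score and an approximate percentile, which the sketch below computes under two loudly stated assumptions: the norm table values are invented, and scale scores in the norm group are treated as roughly normally distributed. Actual reports could instead use empirical percentiles from the norm sample.

```python
from statistics import NormalDist, mean

# HYPOTHETICAL norm table: (age band, gender) -> (mean, standard deviation)
# of a scale score on an assumed 1-4 agreement scale. Not real CIS-S norms.
HYPOTHETICAL_NORMS = {
    ("10-12", "girl"): (2.9, 0.6),
    ("10-12", "boy"): (3.0, 0.6),
}


def compare_to_norm(scale_score, age_band, gender, norms=HYPOTHETICAL_NORMS):
    """Return (z-score, approximate percentile) relative to the matching norm group."""
    norm_mean, norm_sd = norms[(age_band, gender)]
    z = (scale_score - norm_mean) / norm_sd
    # Percentile under a normal approximation of the norm distribution.
    percentile = 100 * NormalDist().cdf(z)
    return z, percentile


# One child's hypothetical answers to a five-item scale, averaged into a scale score.
child_items = [4, 3, 4, 3, 4]
child_score = mean(child_items)  # 3.6
z, pct = compare_to_norm(child_score, "10-12", "girl")
print(round(z, 2), round(pct))  # 1.17 88
```

The same lookup could be applied to a program's mean score, although comparing a group mean with a norm would call for the standard error of the mean rather than the individual-level standard deviation.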

Table 1

The Common Instrument Suite for Students (CIS-S)

Recently, the PEAR team created a survey for STEM facilitators and teachers in the afterschool space. This self-report survey, called the Common Instrument Suite for Educators (CIS-E), includes questions on

- the training and professional development that educators have received and desire to receive,

- their STEM identity and levels of interest and confidence in leading STEM activities,

- their perceptions of growth in their students’ STEM skills and confidence,

- their self-assessment of the quality of STEM activities that they present, and

- their interactions with colleagues.

The survey consists of about 55 questions and takes less than 15 minutes to complete.

To help the organizations that are using these instruments, PEAR developed a dynamic data collection and reporting platform, known as Data Central. The automated platform produces an online data dashboard that displays actionable results shortly after collection is complete. Evaluators and practitioners can use these results to improve their programs and can share them with funders. The data reporting system also enables program leaders to compare their programs with thousands of afterschool and summer programs nationwide.

Program quality and impact: A study across 11 states

Dimensions of Success (DoS) is an observation instrument described in a companion article in this issue, “Planning for Quality: A Research-Based Approach to Developing Strong STEM Programming.” The instrument guides observers in examining the quality of STEM instruction along 12 dimensions of good teaching practice, such as strong STEM content, purposeful activities, and reflection. These qualities are as important in classrooms as they are in afterschool and summer programs. After a two-day training, program leaders and teachers can use the instrument themselves, so they do not have to hire a professional evaluator. Research studies have shown that the instrument produces reliable results: two people trained in DoS who independently rate the same lesson obtain very similar ratings (Shah et al. 2018).
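The reliability claim above rests on agreement between two trained observers. The article does not say which statistic Shah et al. (2018) reported, so the sketch below simply shows three common ways to quantify agreement between two raters: exact agreement, agreement within one rubric level, and Cohen's kappa, which discounts the agreement expected by chance. It assumes each of the 12 DoS dimensions is rated on a 1-4 rubric; both raters' scores are invented.

```python
from collections import Counter


def exact_agreement(a, b):
    """Share of dimensions on which two raters gave the identical rating."""
    if len(a) != len(b):
        raise ValueError("raters must score the same dimensions")
    return sum(x == y for x, y in zip(a, b)) / len(a)


def adjacent_agreement(a, b, tolerance=1):
    """Share of dimensions on which the two ratings differ by at most `tolerance`."""
    if len(a) != len(b):
        raise ValueError("raters must score the same dimensions")
    return sum(abs(x - y) <= tolerance for x, y in zip(a, b)) / len(a)


def cohens_kappa(a, b):
    """Unweighted Cohen's kappa: observed agreement corrected for chance agreement."""
    n = len(a)
    p_observed = exact_agreement(a, b)
    counts_a, counts_b = Counter(a), Counter(b)
    # Chance agreement if each rater assigned levels independently at their own rates.
    p_expected = sum(counts_a[level] * counts_b[level] for level in counts_a) / (n * n)
    if p_expected == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_observed - p_expected) / (1 - p_expected)


# Hypothetical ratings of one lesson on 12 dimensions (1-4 rubric levels).
rater_1 = [3, 4, 2, 3, 3, 4, 2, 1, 3, 4, 3, 2]
rater_2 = [3, 4, 2, 2, 3, 4, 3, 1, 3, 4, 3, 2]

print(round(exact_agreement(rater_1, rater_2), 2))  # 0.83
print(adjacent_agreement(rater_1, rater_2))         # 1.0
print(round(cohens_kappa(rater_1, rater_2), 2))     # 0.76
```

Kappa (0.76 here) is lower than raw agreement (0.83) because two raters who both favor the middle levels will agree fairly often by chance alone. For an ordered rubric, a weighted kappa or an intraclass correlation is often preferred, since it gives partial credit for near misses.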

DoS, the CIS-S, and the CIS-E were used in a study of 1,599 children and youth in grades 4–12 enrolled in 160 programs across 11 states (Allen, Noam, and Little 2017; Allen et al., unpublished manuscript). Observers conducted 252 observations of program quality, and the children and youth participating in the observed activities completed the CIS-S. Results show that high quality ratings on DoS are strongly correlated with positive outcomes on the CIS-S, particularly on items related to positive attitudes about engagement in STEM activities, knowledge of STEM careers, and STEM identity.

- 78% of students who participated in high-quality programs said they are more engaged in STEM.

- 73% of students said they had a more positive STEM identity.

- 80% of students said their STEM career knowledge increased.

Not only did participation in high-quality STEM afterschool programs influence how students think about STEM, but more than 70% of students across all states also reported positive gains in 21st-century skills, including perseverance and critical thinking. And youth regularly attending STEM programming for four weeks or more reported significantly more positive attitudes for all instrument items than youth participating for less time. These findings provide strong support for the claim that high-quality STEM afterschool programs yield positive outcomes for youth.
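The study reports that higher DoS quality ratings are strongly correlated with more positive CIS-S outcomes. As a minimal sketch of what such a correlation measures, the code below computes Pearson's r between program-level quality and outcome scores. The six programs, their mean DoS ratings, and their mean CIS-S engagement scores are all invented, and the published analyses (Allen, Noam, and Little 2017) may have used different units of analysis and statistical models.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences of at least two values")
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    # Sum of cross-products of deviations, and sums of squared deviations.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    ss_x = sum((x - mean_x) ** 2 for x in xs)
    ss_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(ss_x * ss_y)


# Hypothetical programs: mean DoS quality rating and mean CIS-S engagement score.
quality = [1.5, 2.0, 2.5, 3.0, 3.5, 3.8]
engagement = [2.6, 2.8, 2.9, 3.2, 3.3, 3.6]

print(round(pearson_r(quality, engagement), 2))  # 0.98
```

On Python 3.10 or later, `statistics.correlation` computes the same coefficient; the hand-written version is shown only to make the formula visible.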

Figure 2

Image courtesy of PEAR: www.pearweb.org/atis

Pre–post tests are not essential to measure changes in attitudes

Traditionally, self-report instruments such as the CIS-S are administered as pretests and posttests. That is, youth in the program being evaluated respond to statements such as “I get excited about science” before the program begins and then again, a month or two later, after the program is over. It is not unusual to find no change, or even an apparent drop in interest or engagement, even when interviews show that the children enjoyed the program a great deal. One way to explain this result is that participants’ reference points change between the start and end of the program: a child who rates her interest highly on the first day may, after weeks of real STEM work, judge that same level of interest more modestly. Research studies have shown that a better way to measure change in attitudes and beliefs is to administer a self-report survey only at the end of the program (using what is called a retrospective survey method), asking participants to reflect on how the program affected their levels of interest, engagement, and identity (Little et al., forthcoming). The retrospective method is not only more accurate for measuring change in attitudes and beliefs over time, but it also avoids asking children to fill out a questionnaire at the start of an afterschool or summer program, which may dampen their enthusiasm. It also removes the challenge of matching pretests with posttests and has the very practical effect of cutting the cost of data collection in half.
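The article does not specify the exact retrospective format the CIS-S uses. One common variant, often called a retrospective pretest or post-then-pre design, asks students on a single end-of-program form to rate themselves both "now" and "before the program." The sketch below assumes that format, a 1-4 agreement scale, and invented responses, and computes the share of students reporting a gain, a figure similar in form to the percentages reported for the 11-state study. Because both ratings come from the same form, there are no separate pretests and posttests to match.

```python
from dataclasses import dataclass


@dataclass
class RetrospectiveResponse:
    # One student's single end-of-program form (hypothetical format).
    # Both ratings are collected at the same sitting on an assumed 1-4 scale.
    before: int  # e.g., "Before this program, I got excited about science."
    now: int     # e.g., "Now, I get excited about science."


def share_reporting_gain(responses):
    """Percentage of students whose 'now' rating exceeds their recalled 'before' rating."""
    if not responses:
        raise ValueError("no responses")
    gained = sum(r.now > r.before for r in responses)
    return 100 * gained / len(responses)


forms = [
    RetrospectiveResponse(before=2, now=4),
    RetrospectiveResponse(before=3, now=3),
    RetrospectiveResponse(before=1, now=3),
    RetrospectiveResponse(before=3, now=4),
    RetrospectiveResponse(before=4, now=3),
]

print(share_reporting_gain(forms))  # 60.0
```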

Conclusion

An especially important feature of the CIS-S is that it is eminently practical. The assessment is very brief, it needs to be administered only once, at the end of a program, and its 14-item version can be used with children as young as third grade.

Providing this new set of tools has accomplished more than simply making program evaluation easier and less expensive. As illustrated by the 11-state study, when used in conjunction with the DoS observation tool, the CIS-S makes it possible to view the links between program quality and youth outcomes, and to determine which aspects of STEM programs are most influential in student growth. Given the importance of students’ interest, motivation, and self-confidence for acquiring knowledge and skills in all settings, the CIS-S can become as useful to classroom teachers as it has been to afterschool and summer STEM facilitators.

Acknowledgments

The authors gratefully acknowledge the Noyce Foundation (now STEM Next Opportunity Fund), as well as the Charles Stewart Mott Foundation and the National Science Foundation for their support in developing these assessment instruments. We also want to acknowledge Dr. Patricia Allen for her careful reading, critique, and intellectual support of this paper.

Dimensions of Success is based upon work supported by the National Science Foundation under Grant No. 1008591. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation.

Cary Sneider (carysneider@gmail.com) is a visiting scholar at Portland State University in Portland, Oregon. Gil G. Noam (Gil_Noam@hms.harvard.edu) is founder and director of The PEAR Institute at Harvard Medical School and McLean Hospital in Boston, Massachusetts.
