
Modifying Traditional Labs to Target Scientific Reasoning

Journal of College Science Teaching—May/June 2019 (Volume 48, Issue 5)

By Kathleen Koenig, Krista E. Wood, Larry J. Bortner and Lei Bao

This article showcases how a physics lab course was successfully redesigned to promote important reasoning abilities not explicitly addressed in the typical college setting. Student development of such abilities is essential for sound decision making, particularly when living in an information age. Essential features of our guiding curricular framework are presented. These include operationally defined scientific reasoning subskills around which all prelab and in-class activities and assessments are designed to provide repeated, deliberate practice. Details are provided for how the curriculum was developed to promote students’ abilities in one reasoning domain, namely, the identification and control of variables. Results indicate that students improve on subskills in the lower and intermediate ranges for reasoning involving controlling variables but do not improve on the higher end subskills. Suggestions for bridging students into these advanced reasoning skill sectors are discussed. Because the targeted skills are transferrable across science, technology, engineering, and mathematics (STEM), we expect that others can use features of our curricular framework to redesign their own courses and promote similar abilities in their students.

The economy and future workforce call for a shift of educational goals from content drilling toward fostering higher end skills, including reasoning, creativity, and open-ended problem solving (National Research Council, 2012). A report from the U.S. Chamber of Commerce (2017) calls for an educational focus on soft skills, such as communication, critical thinking, and collaboration, alongside subject mastery.

The literature base for critical thinking is extensive (Bangert-Drowns & Bankert, 1990). Broadly defined, critical thinking is the use of cognitive skills or strategies that increase the probability of a desirable outcome. It is the thinking involved in solving problems, formulating inferences, calculating likelihoods, and making decisions (Halpern, 2014).

Scientific reasoning (SR) is often used to label the set of skills that support critical thinking, problem solving, and creativity in STEM (science, technology, engineering, and mathematics). SR includes the thinking and reasoning skills involved in inquiry, experimentation, evidence evaluation, inference, and argument. These support the formation and modification of concepts and theories about the natural world, such as the ability to systematically explore a problem, formulate and test hypotheses, manipulate and isolate variables, and observe and evaluate consequences (Bao et al., 2009; Zimmerman, 2005). Critical thinking and SR share many features. In our work, we promote critical thinking through the development of SR.

Why target scientific reasoning abilities?

There is a growing body of research on the importance of student development of SR. Strong SR abilities, as measured by the Classroom Test of Scientific Reasoning (CTSR; Lawson, 2000), have been found to positively correlate with course achievement (Cavallo, Rozman, Blickenstaff, & Walker, 2003), improvement on concept tests (Coletta & Phillips, 2005), and engagement in higher levels of problem solving (Cracolice, Deming, & Ehlert, 2008). Unfortunately, it has been shown that college students do not necessarily advance their SR abilities across a single course (Moore & Rubbo, 2012) or over the 4 years of their undergraduate education (Ding, Wei, & Liu, 2016).

Previously, we reported on the success of two integrated lecture/lab courses that were specifically designed to target SR (Koenig, Schen, & Bao, 2012; Koenig, Schen, Edwards, & Bao, 2012). Both focused on proportional reasoning, identification and control of variables, hypothesis testing, and argumentation. For each targeted ability, the students received explicit instruction and were provided repeated and deliberate practice in a variety of contexts that increased in complexity. Although both courses demonstrated success in improving student SR abilities, they were developed as stand-alone courses for specific populations, including at-risk STEM majors and preservice teachers. Neither course included the typical STEM major, who would also benefit from a reasoning-focused curriculum. Because STEM majors do not typically have space in their schedules for additional courses, we decided to apply features of the curricular framework that had proven successful in our stand-alone SR courses to the first-semester introductory physics lab course required of most STEM majors at our institution.

Modifying lab course to target scientific reasoning abilities

Modifying our introductory algebra- and calculus-based physics lab courses was an easy choice. The context lends itself well to the development of SR, and the labs were traditional verification labs in need of revision. The revised learning outcomes are based in part on the American Association of Physics Teachers (AAPT) recommendations for undergraduate physics labs (Kozminski et al., 2014) and include six focus areas: basic laboratory skills, experimental design, data analysis, modeling, knowledge construction, and communication of results.

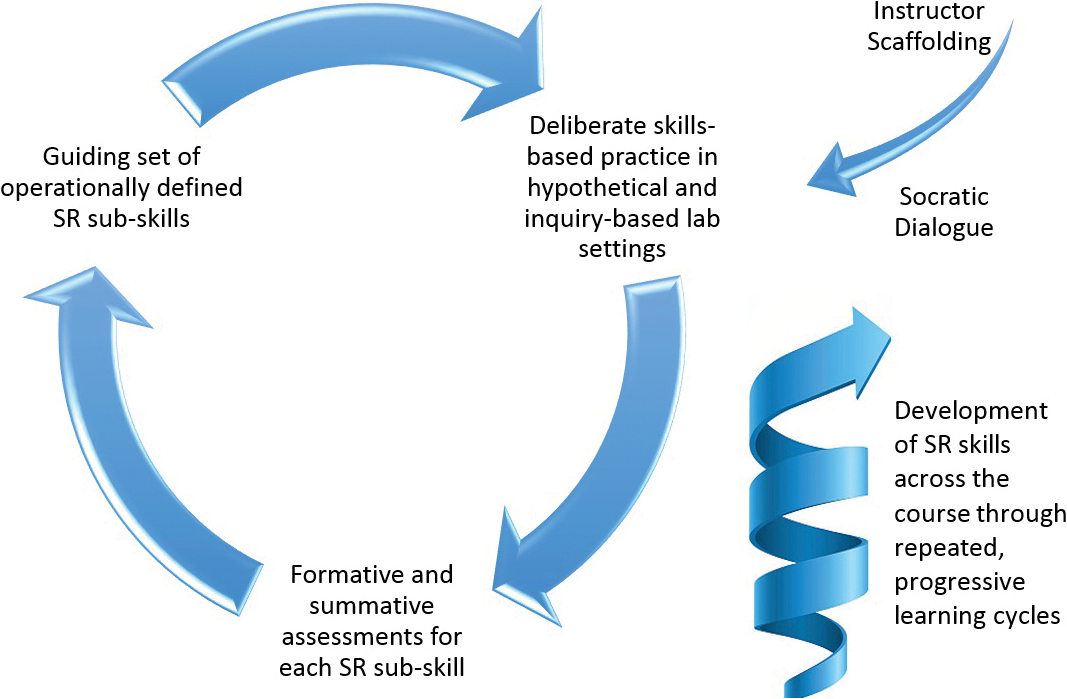

What sets our labs apart, however, is that we expanded on the AAPT recommendations by including operationally defined SR subskills as part of the learning outcomes. This inclusion of SR subskills is a key feature of our scientific reasoning curricular framework as shown in Figure 1. For each targeted SR domain, a set of specific subskills is first identified, with many coming from the research literature. From these, all prelab and in-class activities are developed such that they provide students with repeated, deliberate, and guided practice within multiple hypothetical science-based scenarios and real inquiry-based lab contexts. This explicit instructional strategy has been shown to be essential for SR development (Chen & Klahr, 1999; Ericsson, Krampe, & Tesch-Römer, 1993). In addition, all labs are designed around the Karplus Learning Cycle (Karplus, 1964), which involves cycles of exploration, concept introduction, and concept application and has also been shown to positively impact the development of reasoning (Gerber, Cavallo, & Marek, 2001). Designated checkpoints are built into in-class activities and involve Socratic dialogue between the instructor and students. This use of directed questioning guides students to think about the reasoning behind decisions and claims made, which supports the development of SR and conceptual understanding in parallel (Hake, 1992). Both formative and summative assessments are scattered throughout the course and are aligned with each SR subskill. The curricular framework shown in Figure 1 is based on a cyclic process that spirals across the course as students are introduced to and practice more complex skills.

Figure 1. Features of our scientific reasoning curricular framework. SR = scientific reasoning.

The revised labs use the same equipment, which reduces cost. The students continue to meet 2 hours per week. The number of topics that align with the lecture portion of the course, however, was reduced from 12 to 7 to allow students time to explore a topic, generate a hypothesis, create a valid experiment design, and conduct their own investigations—with much of the decision making falling on the students. Results often culminate in a mathematical model, and the extra time also allows for students to apply their model to predict an unknown quantity.

The topics in the revised lab course include the simple pendulum, projectile motion, Newton’s Laws, simple harmonic motion, momentum and energy, rotation, and a windmill blade system design challenge. Although these topics appear ordinary, the activities developed to explicitly target SR subskills within these contexts set them apart.

Modifying lab activities to promote abilities in COV

Because of space limitations, the remainder of this article discusses how our lab curriculum was designed to promote student abilities in one SR domain, namely, control of variables (COV). We chose to showcase COV as it is fundamental to all scientific investigations. Others can use our example to design COV-targeted curriculum in their own disciplines, while extending the process to other SR domains as well.

Although AAPT provides a comprehensive set of lab outcomes, they are too broad for our purposes in developing SR-targeted curriculum. For example, the recommendations indicate students should be able to identify trends based on experimentally controlled observations. Unfortunately, this does not operationally define abilities in identifying and controlling variables. Through previous work, however, we identified nine COV subskills as shown in Table 1 (Wood, 2015; Zhou et al., 2016). Each subskill is categorized as low, intermediate, or high, which refers to the complexity of the item within a larger developmental progression for COV. We further organized the COV framework around four general COV skill domains to align with the broader research literature (Schwichow, Christoph, Boone, & Härtig, 2016). Within each category, learning outcomes are listed in increasing order of difficulty. Using this framework, the instructional sequence of lab activities was developed such that it advances the broader lab outcomes and COV subskills in parallel.

Table 1. Framework for designing lab curriculum that targets abilities in control of variables (COV).

The overall course structure involves cycles of prelab and in-class activities, followed by a written lab report after a 2- to 3-week investigation has been completed. Much of the SR-specific instruction occurs in prelab activities, which introduce key concepts of targeted subskills and provide deliberate practice in applying these in hypothetical situations. Online prelab quizzes provide motivation for the prelab work and serve as formative assessment. In-class activities, completed by students working in groups of three to four, center on a research question that explicitly embodies causal hypothetical reasoning embedded in cycles of scientific inquiry, reflection, and communication of outcomes, while providing additional practice applying subskills in authentic scenarios. Lab report writing is heavily emphasized, and students submit four complete reports during the course. Detailed instructions are provided along with a grading rubric that awards points for behaviors sought, such as citing relevant data and claims of other groups to support or refute their own findings.

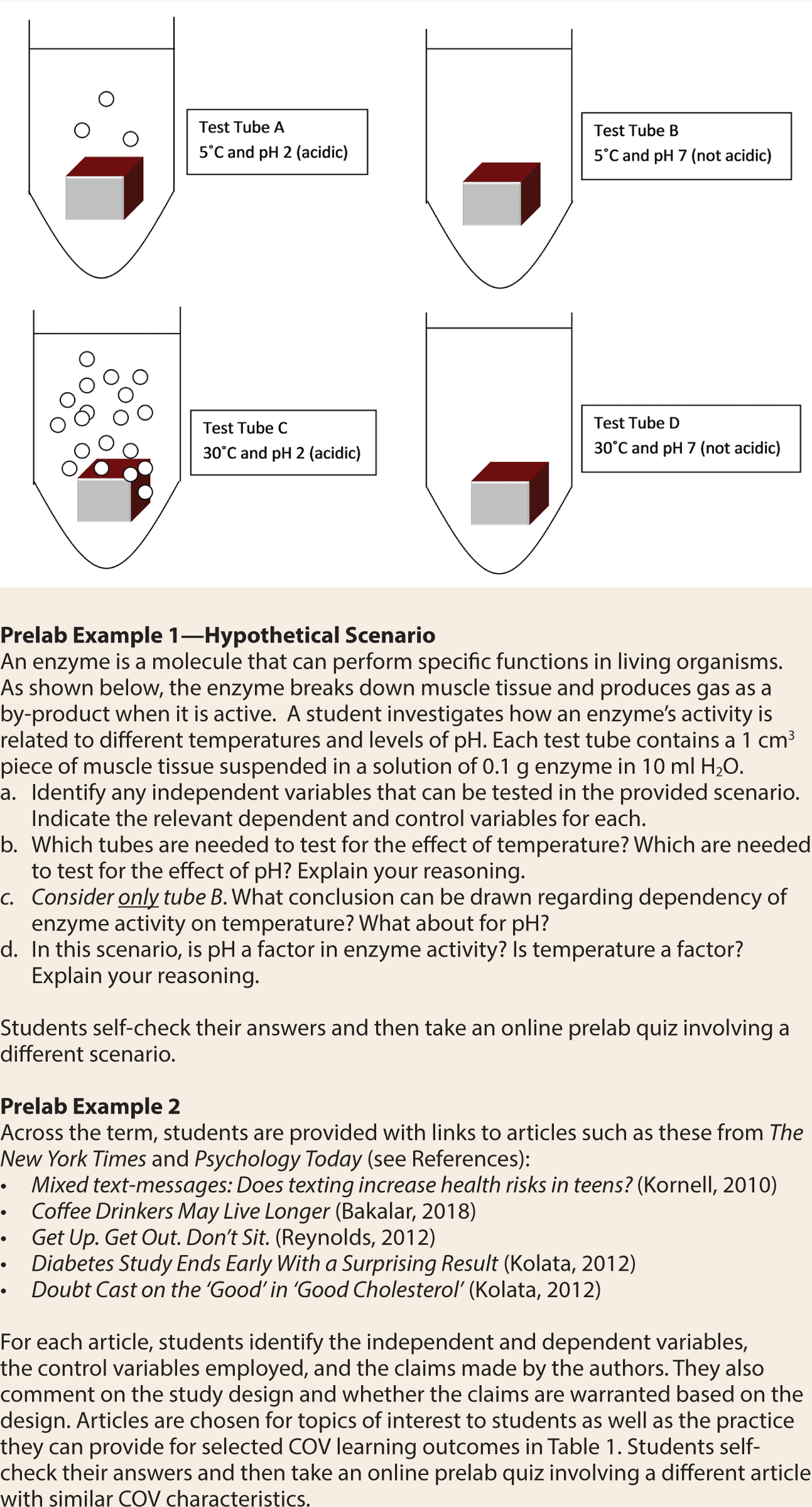

All prelab and in-class activities were developed to support the COV progression of subskills shown in Table 1. In this way, the activities scaffold one another across the term such that students become better able to address more complex research questions situated within more complex contexts. For example, prelab instruction the first week of the course sets the stage by defining the terms independent, dependent, and control variables. Students are provided with multiple thought exercises, all of which are situated within diverse science contexts, to practice applying these ideas (see Figure 2). The thought exercises involve designing and evaluating simple experiments based on considerations of COV, thereby targeting lower end COV abilities. Likewise, the in-class activities early in the course engage students in designing experiments for which the control variables are more intuitive, such as determining which factors impact the period of a pendulum.

Figure 2. Example of two prelab activities. The first one is early in the term and provides deliberate practice in experimental design and evaluation. The second is later in the term and provides practice in applying control of variables (COV) skills to real contexts.
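The distinction between independent and control variables that students practice in these early exercises can be made concrete with a small check. The following is a minimal sketch, not part of the course materials: the function name, the dictionary representation of a trial, and the pendulum variable names and values are all hypothetical and chosen only for illustration. It treats a pair of trials as a controlled comparison when the independent variable is the only recorded setting that differs.

```python
def is_controlled_comparison(trial_a, trial_b, independent):
    """Return True when `independent` is the only variable that differs.

    Each trial is a dict mapping a variable name to the value it was set to.
    (Hypothetical representation, used here for illustration only.)
    """
    if trial_a.keys() != trial_b.keys():
        raise ValueError("trials must record the same variables")
    differing = {name for name in trial_a if trial_a[name] != trial_b[name]}
    return differing == {independent}


# Hypothetical pendulum trials: does string length affect the period?
short = {"length_cm": 30, "mass_g": 50, "angle_deg": 10}
long_ = {"length_cm": 60, "mass_g": 50, "angle_deg": 10}
confounded = {"length_cm": 60, "mass_g": 100, "angle_deg": 10}

print(is_controlled_comparison(short, long_, "length_cm"))
print(is_controlled_comparison(short, confounded, "length_cm"))
```

The first pair changes only the length, so it supports a claim about length. The second pair also changes the mass, so any difference in the measured period could not be attributed to length alone.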

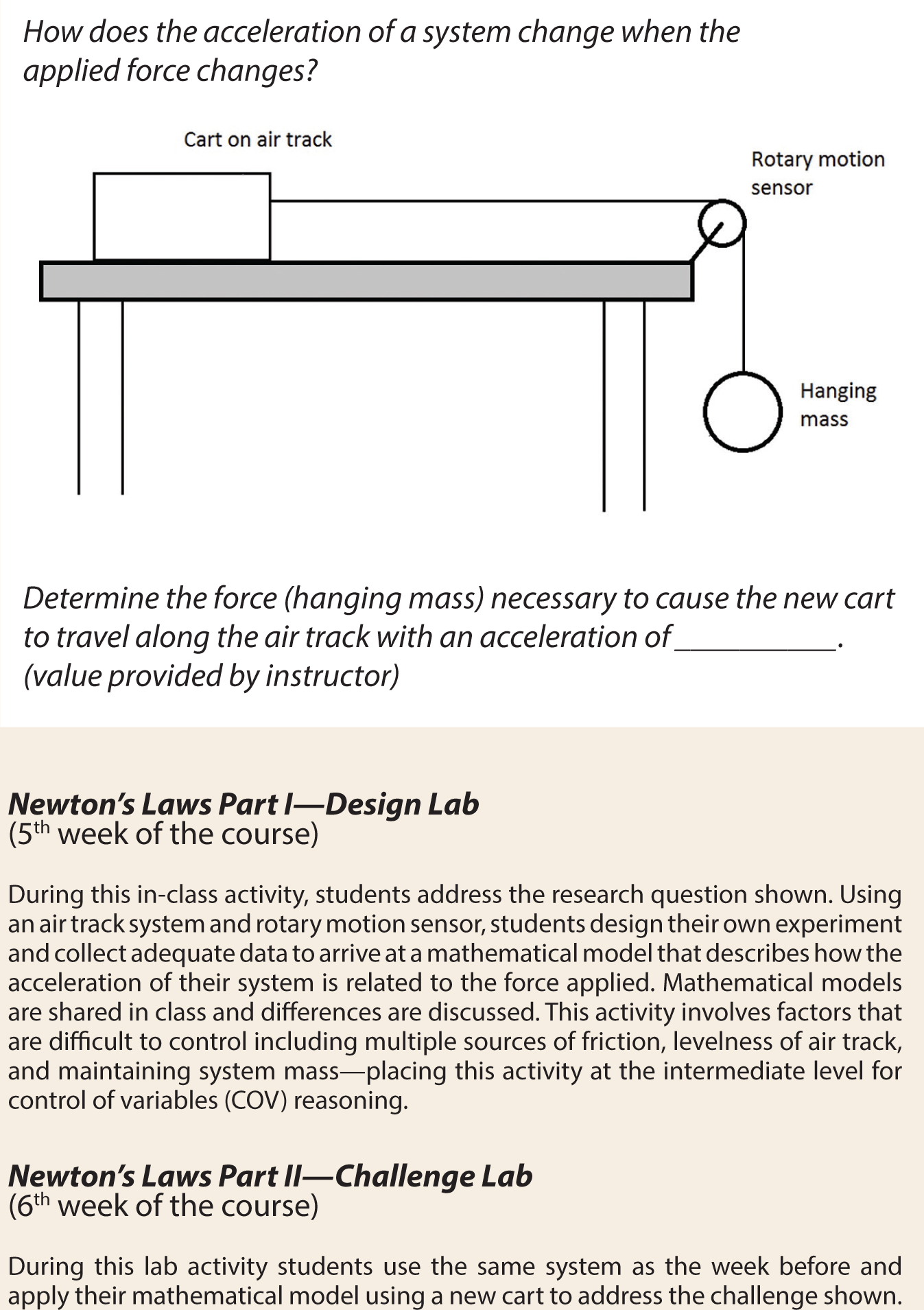

As students become more proficient, prelab and in-class activities move students into the intermediate range of the COV developmental progression. Here students are provided more complex situations that involve the need to control or account for factors that are not perceptually obvious or may have multiple interaction effects (see Figure 3).

Figure 3. Example of two in-class lab activities. Note that these are not open-ended activities and question prompts are embedded to guide students in the design and evaluation of the experiments.

Prelab exercises late in the term provide deliberate practice in interpreting controlled experiments in the form of hypothetical scenarios or articles in the media (see Figure 2). The final lab activity of the course involves a design project in which students create a windmill blade system for maximum output (rotation rate) under a constant wind input. The number of interacting variables that need isolation is expansive, including blade shape, number, surface area, pitch, mass, and so on. Although this activity provides further practice in the intermediate range of the developmental progression, related thought exercises in prelab activities push students to practice higher subskills.

Carefully placed question prompts are used throughout the course to engage students in the desired reasoning. For example, several prelab exercises involve critically reading articles from The New York Times. Students are asked to comment on whether proper controls were applied and whether claims made are warranted on the basis of considerations of COV. Likewise, in-class question prompts are used to focus attention on elements that might otherwise be missed, such as hidden variables in real contexts (e.g., levelness of air track). Lab report requirements also increase across the term, and question prompts are included to engage students in discussions about assumptions made, limitations to claims due to factors outside their control, and interaction effects between variables.

Assessment

Formative assessments include online quizzes, which assess student ability to apply skills addressed in the prelab exercises, graded lab records and reports, and in-class verbal checkpoints. Much improvement is observed across the term in student ability to articulate ideas and reasoning in written and oral assignments. A final exam, which focuses on general lab skills and the application of the targeted SR abilities to hypothetical situations, provides summative assessment.

The impact of the curriculum on specific SR subskills is measured using the Inquiry for Student Thinking and Reasoning (iSTAR) instrument as both a pre- and posttest. iSTAR was developed by two of the authors and assesses reasoning skills necessary in the systematic conduct of scientific inquiry, which includes the ability to explore a problem, formulate and test hypotheses, manipulate and isolate variables, and observe and evaluate the consequences (see ). iSTAR was developed to expand on the commonly used CTSR (Lawson, 2000) because of a ceiling effect with college students. In addition, iSTAR includes nine COV questions that assess each learning outcome in Table 1. These questions were recently validated (Wood, 2015; Wood, Koenig, Owens, & Bao, 2018).

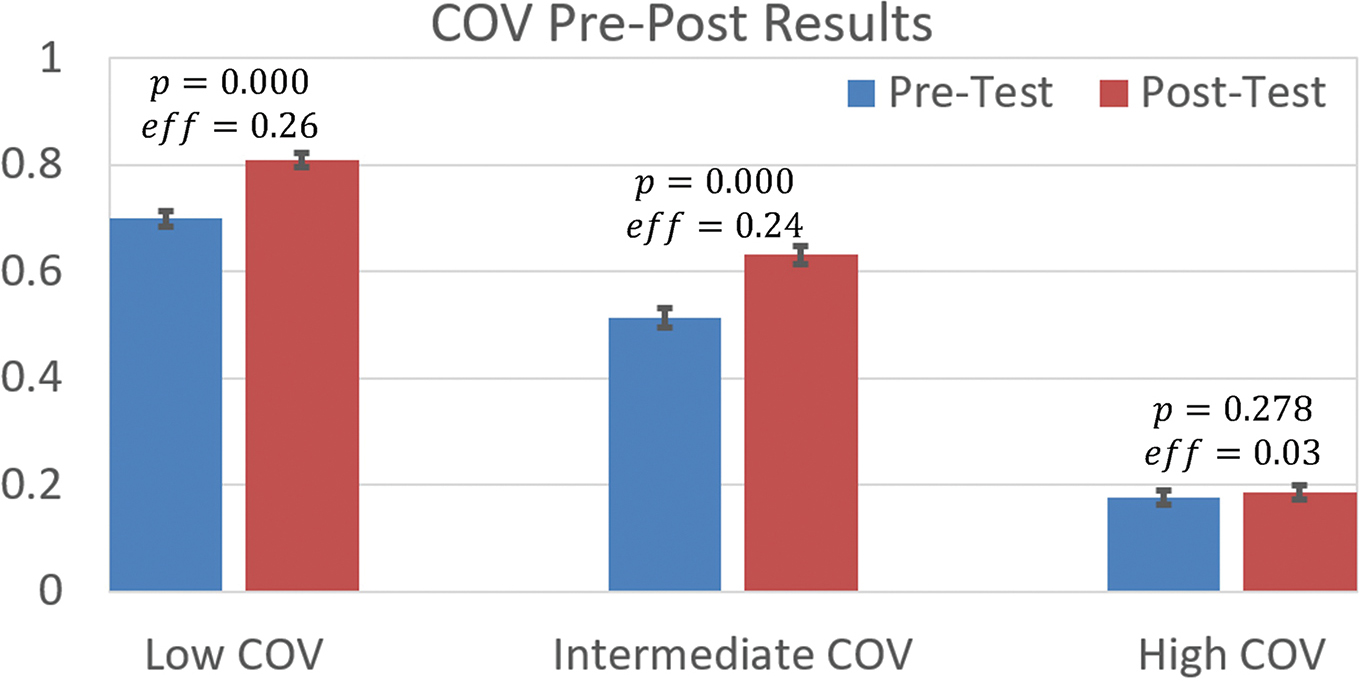

By pre- and posttesting our algebra-based (n = 296, fall 2014) and calculus-based (n = 503, spring 2015) physics students, who used the same curriculum in their respective lab courses, we were able to determine the impact of the lab course on moving students to higher orders of reasoning within COV. The results shown in Figure 4 suggest that at the low and intermediate COV levels students make significant improvements. At the highest COV level, however, little improvement occurs. Results follow similar patterns for the algebra-based physics students at our 2-year college branch campus (Wood et al., 2018).

Figure 4. Pre- and posttest results of students’ control of variables (COV) skills before and after taking a semester of physics lab.

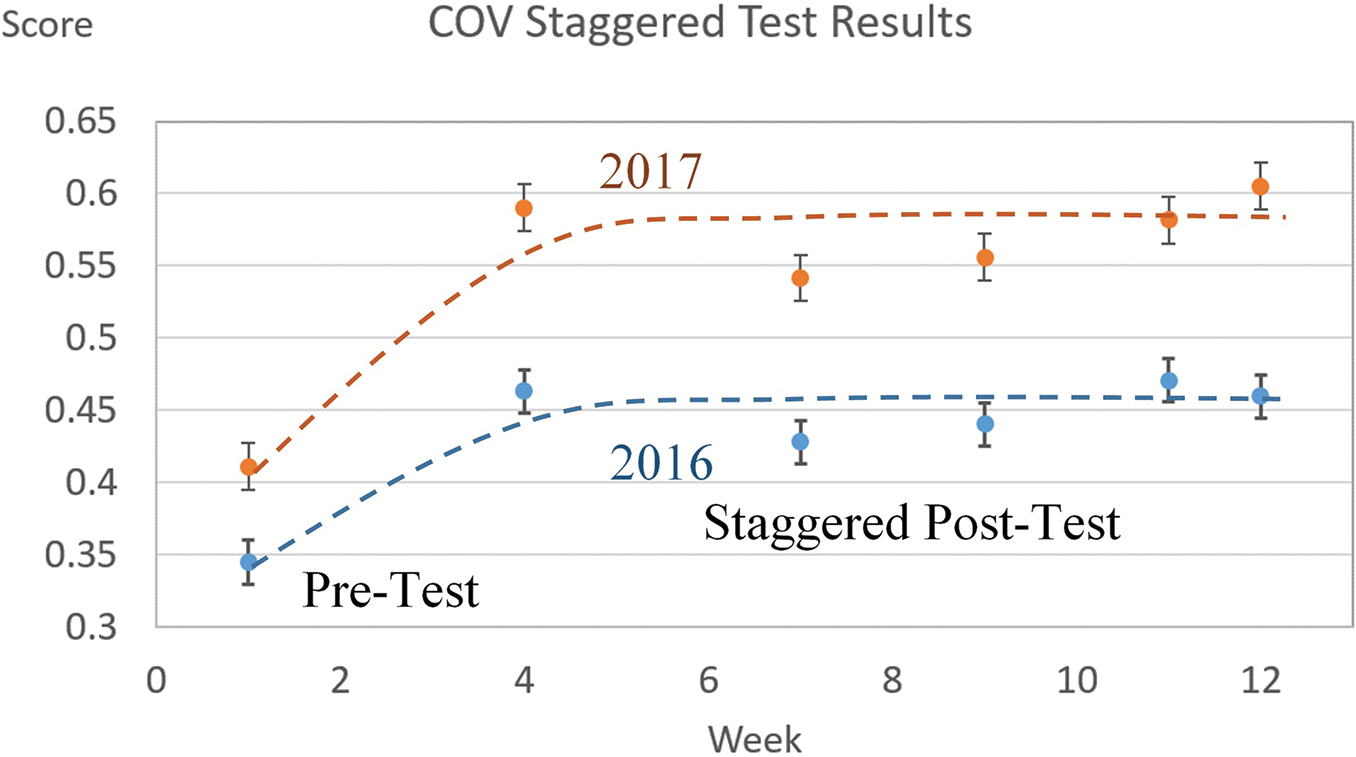

To better understand how the specific prelab and in-class activities impacted student development of COV skills, we implemented a random-group, staggered, multiple posttest design to increase the measurement frequency for more accurate assessment of both students’ changes and their timing. With over 500 students across all sections of the lab course each term, we were able to randomly form five subgroups of approximately 100 students each. This sample size allows for a standard error of a measure to be on the order of 2%, such that statistically significant comparisons could be made with signals larger than 5%. All groups took the reasoning pretest the first week of the term, and the posttest was randomly assigned to one of the groups at Weeks 4, 7, 9, 11, or 12 (end of course); the first four match up with when lab reports were due, indicating that the next investigation cycle had been completed. The subgroups were treated as equivalent samples of the same student population because of the random assignment. In this way, by combining the multiple measures of different weeks, we were able to study the covariations between students’ measured COV skills and the teaching and learning activities that occurred during the course period, with a time resolution of approximately 2 weeks (one inquiry learning cycle). On the basis of our results, we were able to determine that most of the impact on student COV skill development was due to the first 4 weeks of the lab course as shown in Figure 5 (see data from 2016), with most of the improvement in the low-to-intermediate skill range.

Figure 5. Staggered control of variables (COV) posttesting indicates impact of curriculum. The dashed lines are a fit to the data.
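The sampling arithmetic behind the staggered design can be sketched in a few lines of Python. This is an illustrative sketch only: the function names, the round-robin assignment, the fixed seed, and in particular the score standard deviation of 20 percentage points are assumptions made for illustration, not values reported in the study. Under those assumptions, a subgroup of 100 students gives a standard error of about 2%, and a difference between two subgroup means needs to exceed roughly 5% to be statistically significant.

```python
import math
import random


def assign_subgroups(student_ids, n_groups=5, seed=0):
    """Shuffle the IDs, then deal them round-robin into n_groups subgroups."""
    rng = random.Random(seed)
    shuffled = list(student_ids)
    rng.shuffle(shuffled)
    return [shuffled[i::n_groups] for i in range(n_groups)]


def standard_error(sd, n):
    """Standard error of a group mean for score spread `sd` and group size `n`."""
    return sd / math.sqrt(n)


def min_detectable_difference(sd, n, z=1.96):
    """Smallest gap between two independent group means (each of size n)
    that reaches the two-sided 5% significance level."""
    return z * math.sqrt(2) * standard_error(sd, n)


# About 500 students split into five subgroups, one per posttest week.
groups = assign_subgroups(range(500))
posttest_weeks = [4, 7, 9, 11, 12]
schedule = dict(zip(posttest_weeks, groups))

# ASSUMPTION: score standard deviation of 20 percentage points.
se = standard_error(sd=20.0, n=100)
mdd = min_detectable_difference(sd=20.0, n=100)
print(f"standard error: {se:.1f}%  minimum detectable difference: {mdd:.1f}%")
```

Because every student has the same chance of landing in each subgroup, the five subgroups can be treated as equivalent samples, which is what allows posttest scores from different weeks to be read as one time series.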

This prompted revisions to the lab activities later in the course. Changes included adding supplementary prelab exercises and question prompts for in-class activities and lab reports to provide students additional deliberate practice of the skills found lacking. Subsequent testing indicated that these changes further improved students’ abilities; the average total pre–post shift increased to 14.8% in 2017 from 10.8% in 2016 (p < .001). Although encouraging, most of the improvement was once again due to the first 4 weeks of the course as shown in Figure 5 (see data from 2017). Together with the results in Figure 4, this suggests that to further improve students’ reasoning on COV, especially on the higher level COV skills, additional coursework is needed to better target these more complex skills.

Student reaction to the labs

On an exit questionnaire, completed by 400 of 742 students during spring semester 2018, two thirds of the students indicated that they enjoyed the lab course and one quarter were neutral. Eighty-five percent liked the collaborative group work, and roughly two thirds indicated that they valued the opportunity to design their own experiments, use Excel to generate and interpret graphs, and engage in scientific reasoning to support a claim. On the other hand, only one third of the students appreciated the use of uncertainties in measurements and calculations, skills recommended by AAPT. When asked what could be improved, the main request was for more time in lab. The lab course meets for 2 hours each week, and given the exploratory nature of the activities, students felt rushed to finish. Students also commented that they liked the challenge labs, such as the windmill blade system design activity, and wanted more like this. These comments will guide future revisions.

Final discussion

As college faculty try to find ways to prepare students with necessary workplace skills, it is important to look for opportunities to do so within existing courses. This manuscript showcases how a lab course was successfully redesigned to promote important COV reasoning abilities not explicitly addressed in the typical college setting. Student development of such abilities is important for sound decision making, particularly when living in an information age. Ultimately, students need to learn how and where to get complete information, discern and filter input, synthesize large amounts of gathered information, weigh input based on relevancy, consider bias, and engage in analysis to refine information so final decisions are based on knowledge that has a high degree of accuracy (Myatt, 2012). The ability to identify and control variables is core to this decision-making framework and hence the starting point for our lab revisions.

Although we have been able to demonstrate that certain SR skills can be taught within existing course structures, students saturate early in the course for basic and intermediate levels of COV reasoning and do not improve on higher levels. These latter skills are important as they bridge into the advanced reasoning skill sectors required in hypothesis testing and validation, as suggested by Kuhn’s reasoning framework of theory-evidence coordination (Kuhn, Iordanou, Pease, & Wirkala, 2008). Two of the skills we see most needed here are statistical and causal reasoning. Statistical reasoning serves as the foundation for data analysis and quantitative evaluations of possible relations between variables, which become data and relational factors needed for causal reasoning. Causal reasoning, on the other hand, is an essential skill that supports broadly defined hypothesis testing, evidence-based decision making, and theory-evidence coordination. As a result, we are currently developing the learning outcomes, lab activities, and assessment questions for these two skill dimensions as we continue to improve our labs.

Many excellent inquiry-based college lab curricula exist, but most focus on developing basic lab skills and/or promoting deep conceptual understanding. Our curriculum, on the other hand, expands on the former and is intentionally designed to help students develop useful reasoning patterns around operationally defined subskills. The SR skills targeted in our lab course are transferrable across STEM. We expect that others can use the features of our scientific reasoning curricular framework shown in Figure 1, as well as the COV subskills defined in Table 1, to redesign their own courses and promote similar abilities in their students.

Acknowledgments

The article is based on work supported by the National Science Foundation (NSF) under Grant No. DUE-1431908. Any opinions, findings, conclusions, or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of the NSF.

Kathleen Koenig (kathy.koenig@uc.edu) is an associate professor in the Department of Physics at the University of Cincinnati in Cincinnati, Ohio. Krista E. Wood is an associate professor in the Mathematics, Physics, and Computer Science Department at the University of Cincinnati Blue Ash College. Larry J. Bortner is a lab manager in the Department of Physics at the University of Cincinnati. Lei Bao is a professor in the Department of Physics at The Ohio State University in Columbus, Ohio.
