
The Power of Practice

Adjusting Curriculum to Include Emphasis on Skills

Critical thinking skills are sought after in the workforce and are often included in course, departmental, and programmatic learning objectives. However, in the curriculum of many courses we tend to focus on content rather than on fostering the growth of the cognitive skills needed to improve critical thinking ability. Further, skills are difficult to teach simply by modeling and lecturing, limiting our ability to teach skills in larger courses. This study examines a large-lecture course that included skill-focused content-related practice and collected data on outcomes through exam performance. The data indicate that scaffolded in-class practice makes a significant difference in student exam performance on questions involving data analysis and application. The skills of applying knowledge in a new situation and analysis of graphs and data are key components of the critical thinking skills that employers value and our students need for success.

Walk into most STEM (science, technology, engineering, and mathematics) classrooms or lecture halls and you will usually find a single instructor, a variable number of students, and some audiovisual equipment. The instructor typically is at the front of the classroom talking, and all the students are pointed toward her or him. The students take notes, often furiously attempting to copy down every word the instructor utters. Sound familiar? This typical model supports the commonly held student belief that science is a body of facts to be passively bestowed on them by a person (their instructor) who already owns these facts. A student’s job here is to acquire the facts and retain them long enough to pass an exam. Most college students actively buy into this belief that the acquisition of knowledge is all they need to accomplish to make them a desirable candidate in the workforce.

Yet, the data do not support this idea. A recent evaluation of successful employees at Google revealed that of eight different skills prized in Google workers, content knowledge came in as the least important for long-term success and retention at the company (Strauss, 2017). The skills that most correlated to success were so-called soft skills of teamwork, critical thinking, problem solving, and the ability to draw connections among different ideas. Science students, when entering the workforce, will be tasked with skills of data analysis, critical evaluation, applying their knowledge in novel scenarios (such as to a new patient), and forming cogent arguments. A national conversation to reform science education has been underway for over 2 decades. In 1990 the American Association for the Advancement of Science (AAAS) published a report entitled Project 2061: Science for all Americans (Rutherford & Ahlgren, 1990), which sought to redefine scientific education. A series of reports by the National Research Council, a branch of the National Academies, recommended that instructors focus less on content and more on the skills of application, analysis, and knowledge transfer (National Research Council, 2003). In 2011, the AAAS issued a “call to action” to give concrete goals and structure to the drive to reform education; in Vision and Change in Undergraduate Biology Education: A Call to Action, the AAAS defines six core concepts and five core competencies of biology education. The emphasis on competencies underscores the idea that students should be gaining skill throughout their education in addition to content knowledge. These competencies require more than just a cookbook set of labs, but also inquiry-based education and curriculum that allow students to practice skills of analysis and application (AAAS, 2011). 
A recent study (Stains et al., 2018) found that a majority of college-level science courses are still taught as a traditional lecture paired with a cookbook-style lab. Lectures are important and effective pedagogical tools; however, they tend to encourage memorization rather than critical thinking and conceptual understanding (Bligh, 2000; Novack, 2002). Taken together, we can form a picture in which only a minority of college students in the United States are exposed to a scientific education that allows them to learn and practice the thinking and problem solving that a scientist actually does; instead, most of their coursework focuses on helping them to learn what scientists have already done. It becomes clear that to best prepare our students for the workforce, their education needs to include not just a body of facts. For the pace of scientific discovery is such that if we bestow on them only facts, they will be obsolete in 30 years, but if we also teach them the skills, they can thrive for the duration of their careers (Schleicher, 2017).

The traditional lecture style of teaching is exquisitely effective at knowledge transfer from instructor to student. Most science courses pair lecture with a laboratory component in which the students learn the hard skills of technique. The question emerges of where to include the teaching of analysis, teamwork, problem solving, graphic literacy, and application of information in science curriculum. Could a shift to include more active learning in the lecture promote critical thinking and science as a “way of knowing” (Moore, 1993) instead of as a body of disconnected facts?

For instructors to know their pedagogy is effective at skill-based education, they must include skill-based assessment in the course. One way to do this is to include skill-based assessment on exams. Further, for courses in which the dominant method of student assessment is exams, students perceive the test as the ultimate reflection of value in a course. Therefore, though we want to avoid the trap of “teaching to the test,” instructors should represent on the tests the material that they want the students to see value in. In short, assessment should match pedagogy (Crowe, Dirks, & Wenderoth, 2008).

One of the many ways that exams can be used to assess cognitive skills is by applying Bloom’s taxonomy categories to exam questions (Bloom et al., 1956/1964; Crowe et al., 2008; Lauer, 2005). The skills of memorization, recall, and understanding are generally considered lower order cognitive skills (LOCS), whereas the critical thinking skills of application of knowledge, data, or graphical analysis and deeper conceptual understanding are considered higher order cognitive skills (HOCS; Crowe et al., 2008). To the goal of matching assessment to pedagogy, if the students understand that skill-based assessment is on their exams, they see improvement of their critical thinking skills as an educational goal. Adding Bloom’s categories to exam questions can therefore be beneficial both for the students as well as for the instructor.

Methods

Setting

This study took place at a large R1 institution with approximately 14,000 enrolled undergraduates in the northeastern United States. Data presented here will compare two introductory biology courses. The central course examined (Introductory Biology for Allied Health Students) is open to allied health majors and nonmajors (students majoring in nonscience fields). Of the 155 total students enrolled, 23% were nonscience majors and 77% were allied health majors. This course involved 3 hours of lecture per week and one 2-hour laboratory session that met 10 weeks of the semester. A course used for comparison is open to students majoring in biology and other science fields. This course enrolled 312 students and involved 3 hours of lecture and 3 hours of lab per week. The majority of enrolled students in both courses were in the first year of their college education.

Data collection and study design

These courses employed the digital exam platform ExamSoft. This tool allows digital exams to be built and deployed via its website; the encrypted exam file then appears within an app on the student’s device. Exams are taken via the app, and scores and feedback are distributed to students. The novel feature of the ExamSoft platform is that the instructor can tag each question with designated category tags. In these courses, instructors used both content tags (e.g., cellular respiration) and Bloom’s taxonomy tags (Bloom’s categories assigned consistent with criteria outlined in Crowe et al., 2008). When the students receive their scores, they also receive a detailed report, called a Strengths and Opportunities report, which gives them their average performance by category. Average results for the class were discussed in the lecture meeting immediately after the exam, and general recommendations and feedback were issued to the class as a whole. In addition, the instructors offered individual appointments to the students throughout the semester, and many students used these appointments to discuss their Strengths and Opportunities report and receive recommendations for alternative study strategies to strengthen performance in individual Bloom’s categories (Crowe et al., 2008).

The instructors received both individual student data and course average data on performance by category.
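The kind of per-category summary described above can be sketched in a few lines of Python. This is a minimal illustration and not ExamSoft's implementation: the data layout, the function name `category_report`, and the sample answers are all hypothetical. Only the grouping of Bloom's levels into LOCS and HOCS follows the article.

```python
from collections import defaultdict

# Grouping of Bloom's levels into LOCS and HOCS, as described in the article.
BLOOM_GROUP = {
    "Remembering": "LOCS",
    "Understanding": "LOCS",
    "Applying": "HOCS",
    "Analyzing": "HOCS",
}


def category_report(responses):
    """Return percent correct per Bloom's level and per LOCS/HOCS group.

    `responses` is an iterable of (bloom_level, is_correct) pairs, one per
    answered question. This layout is an assumption made for illustration.
    """
    totals = defaultdict(lambda: [0, 0])  # tag -> [correct, attempted]
    for level, is_correct in responses:
        # Count each answer once under its level and once under its group.
        for tag in (level, BLOOM_GROUP[level]):
            totals[tag][0] += int(is_correct)
            totals[tag][1] += 1
    return {tag: 100.0 * c / n for tag, (c, n) in totals.items()}


# Made-up answers for one hypothetical student, not data from the study.
student = [
    ("Remembering", True),
    ("Remembering", True),
    ("Understanding", True),
    ("Understanding", False),
    ("Applying", True),
    ("Applying", False),
    ("Analyzing", False),
    ("Analyzing", False),
]

report = category_report(student)
for tag in sorted(report):
    print(f"{tag}: {report[tag]:.0f}%")
```

Running the same function over every student's answers pooled together would give a class-level summary of the sort discussed in lecture after each exam.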

Results and discussion

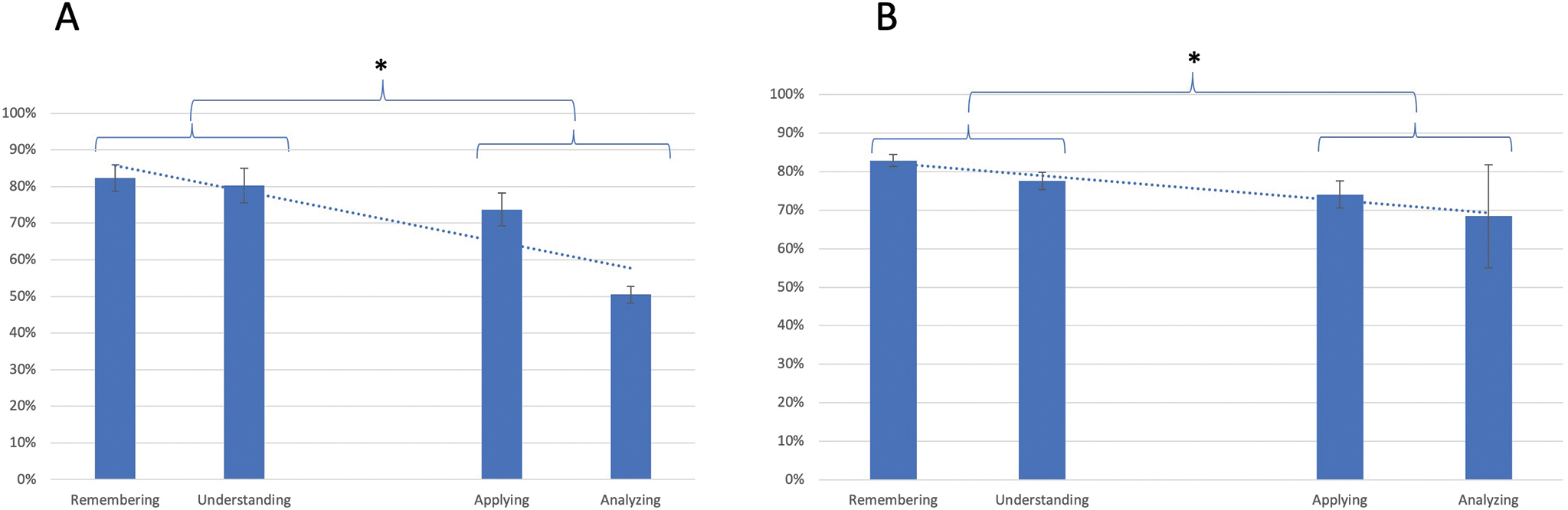

After each midterm exam the students were given a detailed report of their performance by Bloom’s category. In lecture, the instructors discussed the class average results and presented suggestions for study methods to improve performance in HOCS (Crowe et al., 2008). The instructors made a concerted effort to model HOCS in lecture by demonstrating graphic and data analysis and application of course material to new situations. Despite these efforts, the results of the midterm exams were consistent, with declining student performance on HOCS (Figure 1A). Average performance on HOCS questions was significantly lower than average performance on LOCS questions (p = .03).

A) Average performance on midterm exam questions across three midterms in Introductory Biology for Allied Health, an introductory biology course for nonmajors and allied health students. Error bars represent standard error. Questions are categorized by Bloom’s taxonomy and grouped into lower order cognitive skills (LOCS; Remembering and Understanding) and higher order cognitive skills (HOCS; Applying and Analyzing). Students scored significantly better (p = .03) on LOCS questions. B) Average performance on midterm exam questions across three midterms in Introductory Biology for Science Majors, an introductory biology course for biology majors. Error bars represent standard error. Questions are categorized by Bloom’s taxonomy and grouped into LOCS (Remembering and Understanding) and HOCS (Applying and Analyzing). Students scored significantly better (p = .03) on LOCS questions.

This decline in performance for HOCS is common among freshman students. If we run the same analysis in a similar Introductory Biology course for science majors, we see again significantly lower average performance on questions requiring HOCS (p = .03; Figure 1B). It is likely that this pattern reflects the type of learning and studying that incoming college students are used to from their prior education experiences (Zoller, 1993; Zoller, 2000).
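A comparison of this kind can be sketched as follows. The article reports standard errors and p values but does not name the statistical test used, so the permutation test here is a stand-in chosen only because it needs nothing beyond the Python standard library. The unit of analysis (percent correct per question) and all numbers are invented for illustration.

```python
import random
from statistics import mean, stdev


def standard_error(scores):
    """Standard error of the mean: sample standard deviation / sqrt(n)."""
    return stdev(scores) / len(scores) ** 0.5


def permutation_p_value(group_a, group_b, n_shuffles=5000, seed=0):
    """Two-sided permutation test on the difference in group means.

    NOTE: an assumed test for illustration; the study's actual test is
    not stated in the article.
    """
    rng = random.Random(seed)  # seeded so the sketch is reproducible
    observed = abs(mean(group_a) - mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_shuffles):
        rng.shuffle(pooled)
        diff = abs(mean(pooled[:n_a]) - mean(pooled[n_a:]))
        if diff >= observed:
            extreme += 1
    # Add-one correction keeps the estimate strictly above zero.
    return (extreme + 1) / (n_shuffles + 1)


# Invented percent-correct values per question, not data from the study.
locs = [82, 78, 85, 74, 80, 88, 76, 83]
hocs = [64, 70, 58, 72, 61, 66, 69, 60]

p = permutation_p_value(locs, hocs)
print(f"LOCS mean {mean(locs):.2f} (SE {standard_error(locs):.2f})")
print(f"HOCS mean {mean(hocs):.2f} (SE {standard_error(hocs):.2f})")
print(f"permutation p value: {p:.4f}")
```

With real exam data, the choice between a paired and an unpaired comparison would depend on whether scores are summarized per student or per question.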

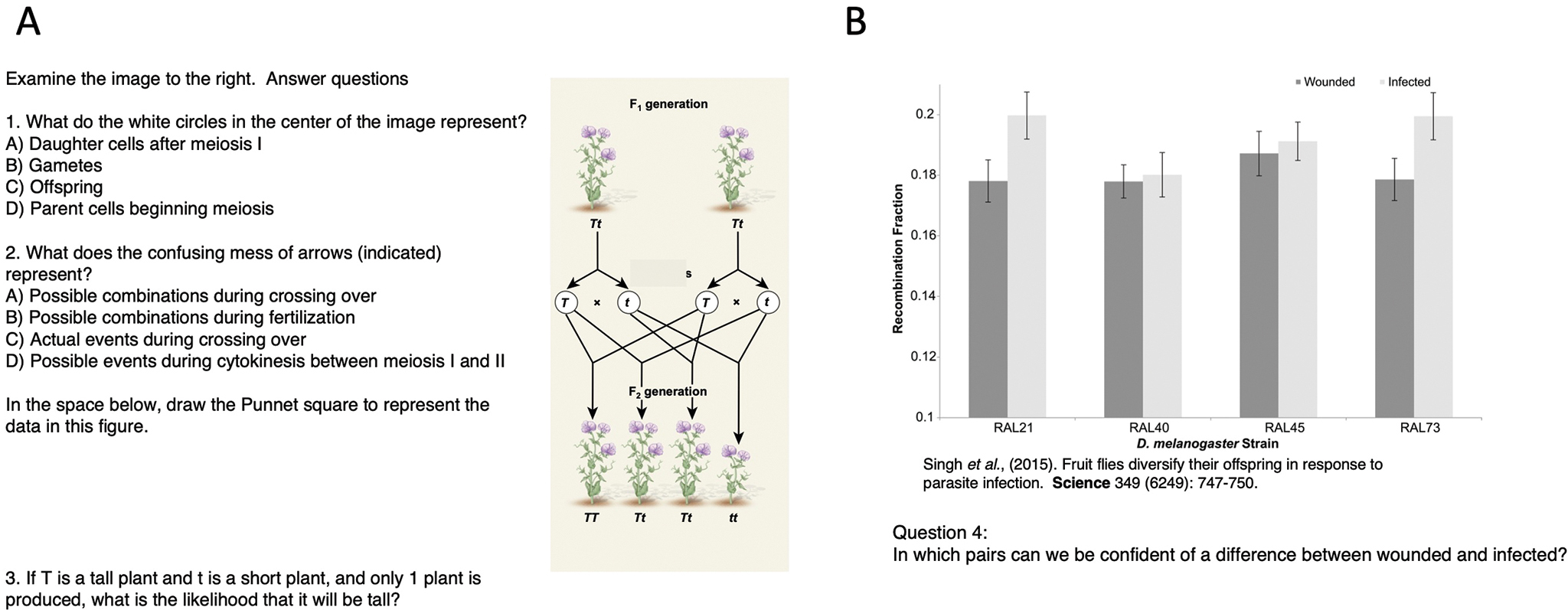

After the third midterm exam in Introductory Biology for Allied Health, the instructor began to use class time formerly dedicated to lecture for active learning in small groups. The students formed groups of three to four and worked through problem sets based on the course content. The problem sets were designed to give the students practice on skills of graphic literacy, data analysis, conceptual application, and scientific literacy. Examples of questions from the problem sets can be seen in Figures 2A and 2B. During the group work time, the instructor and one learning assistant (Otero, Finkelstein, McCray, & Pollock, 2006) circulated and spoke with each group as they were working. At the end of the group work time, the instructor explained each of the questions using a PowerPoint presentation. During the last 4 weeks of the course, the instructor included six different in-class group work activities. The average duration of the activities was 20 minutes in a 50-minute lecture.

A) This exercise challenges the students to interpret an image as well as apply their understanding of a concept. The image is property of McGraw-Hill Higher Education. B) This exercise helps students work through graphic analysis and critical thinking.

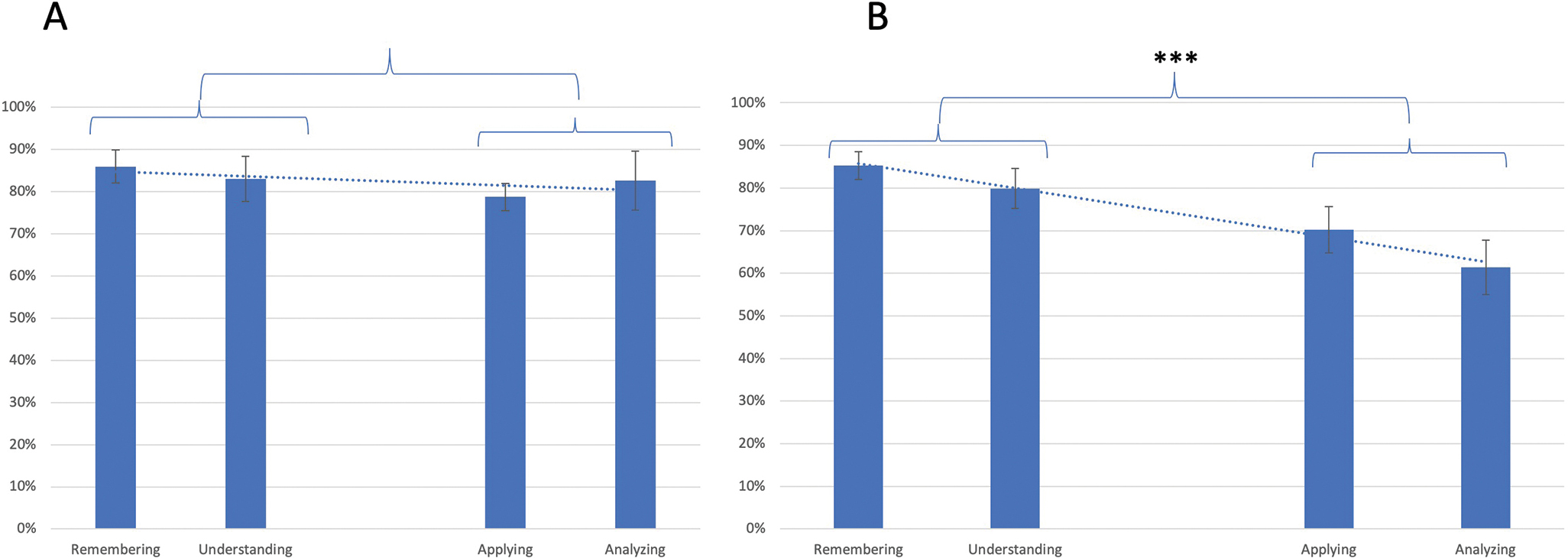

At the end of the semester, the students in the Allied Health course took a final exam that consisted of 65 questions that were a mix of new material and old material. The exam included several application, graphic literacy, and data analysis questions, but none of these were questions that the students had seen before. The results from the final exam suggest that the in-class scaffolded practice on these HOCS contributed to increases in performance on questions involving these skills (Figure 3A). Here we can see that there is no significant difference between students’ ability to succeed on questions requiring HOCS compared with questions requiring LOCS (p = .58). If we compare these results with the introductory biology course for science majors, which did not utilize class time to work in groups on skill-based problem sets, we can see that the students still perform lower on questions requiring HOCS compared with questions requiring LOCS (p = .01; Figure 3B). These data suggest that in-class practice provides students the opportunity to make gains in the skills of applying and analyzing.

A) Average performance on final exam questions in Introductory Biology for Allied Health, after 4 weeks of instruction that included active learning exercises. Error bars represent standard error. Questions are categorized by Bloom’s level and grouped into lower order cognitive skills (LOCS; Remembering and Understanding) and higher order cognitive skills (HOCS; Applying and Analyzing). There was no difference (p = .58) between students’ ability to answer LOCS or HOCS questions correctly. B) Average performance on final exam questions in Introductory Biology for Science Majors, after no deviation from the standard lecture format. Error bars represent standard error. Questions are categorized by Bloom’s level and grouped into LOCS (Remembering and Understanding) and HOCS (Applying and Analyzing). Students scored significantly better (p = .001) on LOCS questions.

Conclusions

These data indicate that scaffolded skills practice is effective at assisting students in strengthening their HOCS. Although the results reflect only a single course, the sample size is relatively large (n = 155 students). The increases in HOCS are considerable. In analytical skills, the average correct response rate rose by almost 20%, and in application skills the increase was 5%.

The call to increase critical thinking skills in our students (and future workforce) is very clear (Alberts, 2005); however, critical thinking specifically and skills in general can be difficult to teach. Add to this problem the fact that STEM classes often enroll large numbers of students and take place in inflexible teaching environments such as the lecture hall, as well as the common student attitude that learning involves the passive transfer of knowledge from instructor to student, and the deck often appears stacked against the instructor who wants to make curricular changes that promote critical thinking. These data suggest that skills-based practice, rather than modeling and describing skills in lecture and recommending practice at home, can be effective in increasing student performance in HOCS categories. It has also been shown previously that including active learning in STEM courses decreases the failure rate and increases overall student performance (Freeman et al., 2014). Adding peer learning in the form of learning assistants further improves HOCS development (Sellami, Shaked, Laski, Eagan, & Sanders, 2017). Taken together, the data are mounting that active learning, particularly skill-based active practice, can be instrumental in transforming our STEM classrooms.

Tagging the exam questions with category tags and communicating category performance results to students is likely an important key to this success. Although many educators eschew the idea of “teaching to the test,” we do know that students perceive the test as the ultimate reflection of value in a course. And yet, although educators see the importance of critical thinking skills, the vast majority of exam questions in college-level biology classes are Bloom’s levels Remembering or Understanding (Momsen, Long, Wyse, & Ebert-May, 2010). When students see not only the content on an exam, but also their performance broken down by skill category, it communicates to them the value in these skills in a way that they can concretely understand. They also can see if their efforts either in class or studying at home translate to gains in skills in a very concrete way.

Pedagogy that supports the development of critical thinking skills is paramount in education, both for future scientists and for students majoring in other fields. Poorly taught introductory science courses may deter students from continuing on in the sciences for a number of reasons (Seymour & Hewitt, 1997). It is possible that poorly taught introductory science courses may also discourage an affiliation with or even engender a distrust of science in students majoring in other fields. Therefore, introductory science courses can be thought of as gatekeepers, encouraging or preventing a more diverse talent pool from persisting in the STEM fields (Labov, 2004) and appreciating a role for science in everyday life. The data presented in this study suggest that practice, not just modeling and listening, is essential for the development of critical thinking skills. In-class critical thinking group work works well in large-lecture settings, but there are other models for the transformation of courses to promote critical thinking (Quitadamo & Kurtz, 2007; Rowe et al., 2015). The practice of tagging (also called “Blooming”) assessments and gathering data on student performance can be instrumental both in determining the need for pedagogy transformation and in assessing whether interventions are effective.

Acknowledgments

I thank Kathryn Spilios for insightful editing and perennial collaboration as well as the Office of the Provost, the Center for Teaching and Learning, and the CAS Undergraduate Academic Program Office of Boston University for the financial support required to implement ExamSoft software. I thank Cathy Ma for her hard work as a learning assistant in this and other courses.

Elizabeth Co (eco@bu.edu) is a senior lecturer in the Department of Biology at Boston University in Boston, Massachusetts.
