Research and Teaching

Course-Based Undergraduate Research Experiences Performance Following the Transition to Remote Learning During the COVID-19 Pandemic

Journal of College Science Teaching—September/October 2021 (Volume 51, Issue 1)

By Christine Broussard, Margaret Gough Courtney, Sarah Dunn, K. Godde, and Vanessa Preisler

Course-based undergraduate research experiences (CUREs) are an alternative pedagogical approach to the apprenticeship model for high-impact research immersion experiences. A W.M. Keck-funded Research Immersion Program at a Hispanic-serving institution in Southern California proposed to expand the benefits of the CURE model by developing lower- and upper-division CUREs across a variety of STEM (biology, computer science, mathematics, and physics) and associated disciplines (anthropology, kinesiology, sociology, rhetoric, and communications). A subset of these courses moved completely online in the middle of the spring 2020 semester because of the COVID-19 pandemic. The goal of the study presented here is to understand the overall experiences of students and faculty members in the CURE courses in light of the transition to online learning. We present data showing gains in skills development and in understanding of research design, as well as stability in science opinions and self-perception, in the spring 2020 semester despite the transition to remote learning. We also report faculty perceptions of the challenges, supports, and successes of transitioning and implementing their CUREs in a remote learning environment.

In higher education literature, the high-impact practice of organized undergraduate research experiences is viewed as valuable and important for students at the undergraduate level (Jones et al., 2010; Kuh, 2008; Lopatto, 2009; Russell et al., 2007). Course-based undergraduate research experiences (CUREs) are robust research immersion opportunities in the classroom that provide benefits and challenges similar to those of the traditional mentored research experience model. They are accessible to all students and improve retention, graduation rates, career preparedness, and overall success, as well as increase faculty–student interactions (Adedokun et al., 2013; Astin, 1997; Auchincloss et al., 2014; Esparza et al., 2020; Lansverk et al., 2020; Pascarella & Terenzini, 1991; Tinto, 1987; Wei & Woodin, 2011; Yaffe et al., 2014).

In 2014, CUREnet defined the key elements of a CURE as (1) use of scientific practices, (2) focus on discovery, (3) focus on broadly relevant or important work, (4) collaboration, and (5) iteration (Auchincloss et al., 2014). These five elements reinforce one another to give students a more authentic, professional, scientific research experience in a classroom setting. In a recent publication by Faulconer et al. (2020), students indicated that course credit for undergraduate research opportunities/projects was very important to them, regardless of whether they were face-to-face or online. Although CUREs have been shown to be an effective and inclusive learning opportunity for students in a traditional undergraduate face-to-face setting, it is unknown whether CUREs offer the same benefits in a remote learning environment.

In the spring of 2020, the COVID-19 pandemic, caused by a novel coronavirus (Hu et al., 2020), disrupted higher education and required a rapid transition to an online learning environment (Adedoyin & Soykan, 2020). This move to remote instruction had a drastic impact on all educational facets (Dhawan, 2020) and sent educational institutions into crisis mode (Adedoyin & Soykan, 2020). Success in online learning has been shown to correlate with faculty and students having prior experience with online teaching or learning (Wojciechowski & Palmer, 2005), which few faculty reported having at the start of the transition to remote learning (Rapanta et al., 2020; The Changing Landscape of Online Education, 2020). Furthermore, many faculty members had little to no support from online instructional designers, and resources (laptops, access to reliable internet, books, software, etc.) for faculty and students were scarce (Adedoyin & Soykan, 2020).

Beyond some traditional factors such as flexibility, convenience, and access (Dixson, 2015; Hachey et al., 2012; Meyer, 2014; Wojciechowski & Palmer, 2005), many authors have pointed to other critical areas for remote learning success. Online courses should meet the needs of students and motivate those enrolled (Serwatka, 2005). They should be designed to engage students (Dietz-Uhler et al., 2007) and create opportunities for peer-to-peer and mentor-to-peer interactions, while allowing for faculty feedback on student progress and success (Drouin, 2008; Kupczynski et al., 2011). Saiyad et al. (2020), in reviewing the pandemic-induced online transition, highlighted 12 principles for effective and sustainable online courses in medical training, many of which overlap with elements of the CURE model. Given the sudden transition, the current study takes a first look at the consequences of switching CUREs to a remote learning environment.

Research questions

This study examined the following research questions (RQ) for the CUREs that were transitioned to remote learning:

RQ1: Were CURE elements effectively integrated post-transition?

RQ2: Did participation in the CUREs lead to enhancement of skills development?

RQ3: Did participation in the CUREs lead to improvements in research design skills?

RQ4: Did participation in the CUREs lead to changes in science and self-perception?

RQ5: What were the faculty-perceived challenges, supports, and successes associated with the rapid transition and continued implementation of CUREs via remote learning?

Materials and methods

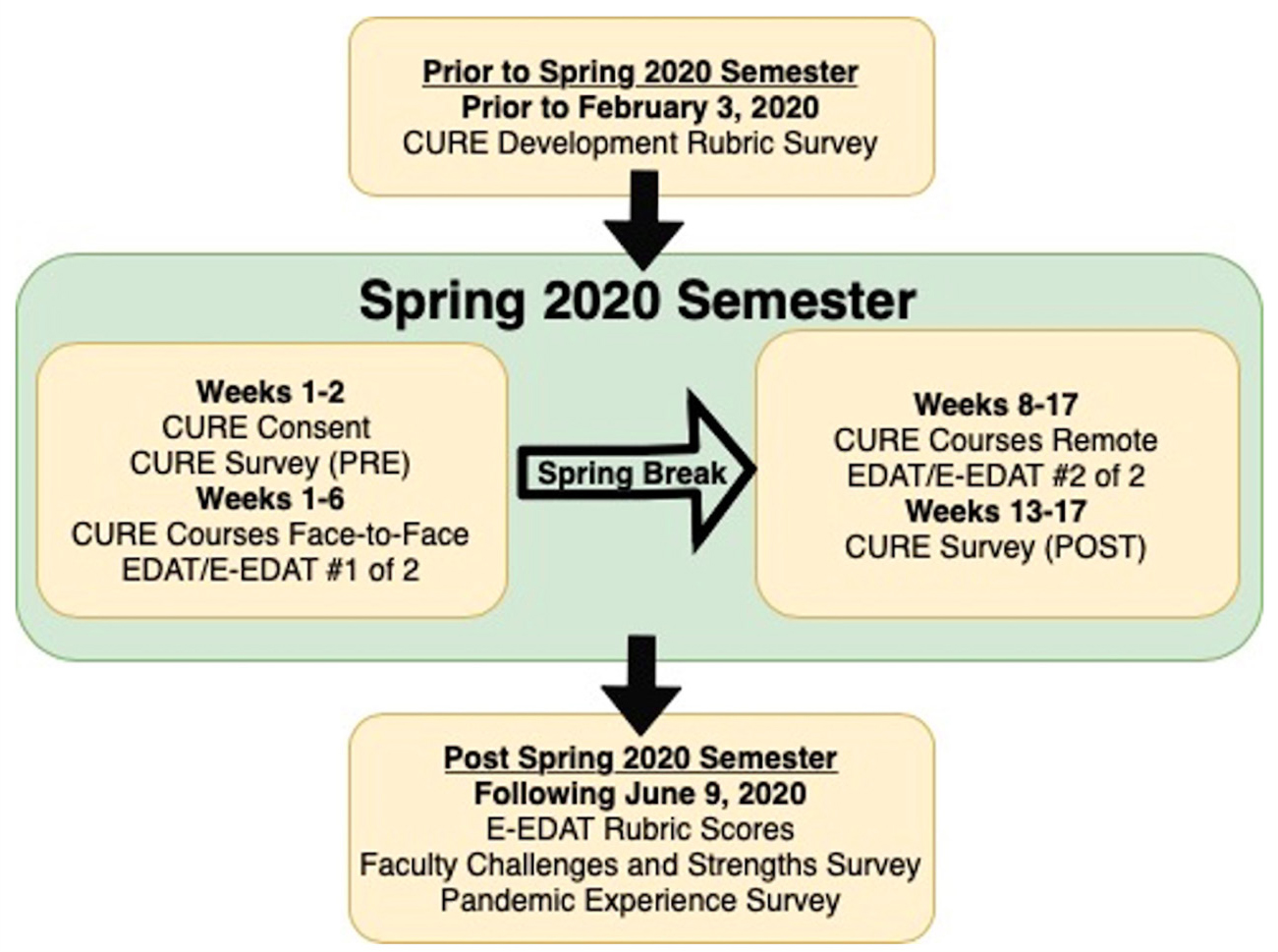

The research was determined to be exempt from review by the university Institutional Review Board. CUREs were evaluated during face-to-face and remote learning by examining student and instructor experience across the 16-week spring 2020 semester at a small private institution in southern California. Figure 1 depicts the data collection timeline in relation to the transition to remote learning.

Figure 1. Spring 2020 CURE implementation and data collection timeline.

The student sample and courses are described in Table 1. Consistent with institutional demographics, the sample included more female than male students. More than one-quarter of the sample were nontraditional-age undergraduates (ages 25+ years), and more than 40% were transfer students. Approximately three-quarters of the sample were non-White, with nearly half identifying as Hispanic or Latinx. About three-quarters of the sampled students were working, and nearly 30% worked more than 20 hours per week. Six instructors taught a total of eight courses across five departments/programs; after the courses went remote, most used a mix of synchronous and asynchronous content.

Student experience

We pooled matched pre- and postcourse data across all courses because the goal of our study was to understand the overall experiences of students and faculty members in CURE courses during the transition to online learning. The CURE Survey (N = 49; Denofrio et al., 2007; Lopatto et al., 2008) is a validated measure containing items that address a variety of aspects of CURE course design and of apprenticeship undergraduate research experiences (UREs). With permission (D. Lopatto, personal communication, October 17, 2016), we combined the CURE Survey (course elements and opinions about science) with the validated Career Adapt-Abilities Scale (CAAS; N = 46; Savickas & Porfeli, 2012). We used a pre-post design to gauge change over time: the pretreatment survey was administered during the first few weeks of each CURE and the post-treatment survey during the final few weeks of the semester (see Figure 1). Three students completed the pre- and post-CURE surveys but not the CAAS portion, which accounts for the difference between the CURE Survey (N = 49) and CAAS (N = 46) samples.
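The matching step can be illustrated with a minimal Python sketch. It assumes, hypothetically, that each instrument's responses are stored as a mapping from an anonymized student ID to a score; the IDs and scores are invented for illustration and are not study data. Because matching is done per instrument, a student who answered both CURE surveys but skipped the CAAS items drops out of the CAAS analysis only.

```python
from typing import Dict, List, Tuple


def match_pre_post(
    pre: Dict[str, float], post: Dict[str, float]
) -> List[Tuple[str, float, float]]:
    """Keep only students who answered both the pre- and post-course item."""
    # Intersecting the ID sets guarantees each pair comes from one respondent.
    shared_ids = sorted(pre.keys() & post.keys())
    return [(sid, pre[sid], post[sid]) for sid in shared_ids]


# Hypothetical responses keyed by anonymized student ID.
pre_cure = {"s1": 3, "s2": 4, "s3": 2, "s4": 5}
post_cure = {"s1": 4, "s2": 4, "s3": 3}  # s4 has no post-course response
pre_caas = {"s1": 3.5, "s2": 4.0, "s3": 2.5}
post_caas = {"s1": 4.0}  # s2 and s3 skipped the post-course CAAS items

cure_pairs = match_pre_post(pre_cure, post_cure)
caas_pairs = match_pre_post(pre_caas, post_caas)
print(len(cure_pairs), len(caas_pairs))
```

Matching within each instrument, rather than requiring complete data on every instrument, keeps as many pairs as possible for each analysis.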

To analyze the CURE survey data, we used the nonparametric Wilcoxon signed rank test because the responses are ordinal and not normally distributed. Nonparametric tests such as the Wilcoxon signed rank test are also well suited to small samples (Weaver et al., 2017), such as those in this study. A Bonferroni correction for multiple tests was applied to the significance cutoff (α = 0.05) within each section of the CURE instrument: the cutoff was 0.002 (0.05/25 items) for the course elements and 0.0021 (0.05/22 items) for opinions. Similarly, we conducted Wilcoxon signed rank tests for the five CAAS constructs (concern, control, curiosity, confidence, and adaptability), with a Bonferroni-corrected cutoff of 0.01 (0.05/5 tests).
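For readers unfamiliar with the test, the following minimal Python sketch implements the textbook normal-approximation form of the Wilcoxon signed rank test (zero differences dropped, variance corrected for tied ranks) together with a Bonferroni cutoff. It is an illustration only: the article does not state which software or test variant (exact or approximate, zero handling) was used, and the Likert-style scores below are hypothetical.

```python
import math
from typing import List, Tuple


def average_ranks(values: List[float]) -> List[float]:
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j across a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # ranks are 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def wilcoxon_signed_rank(pre: List[float], post: List[float]) -> Tuple[float, float, float]:
    """Return (W+, z, two-sided p) using the normal approximation."""
    diffs = [b - a for a, b in zip(pre, post) if b != a]  # drop zero differences
    n = len(diffs)
    if n == 0:
        raise ValueError("all differences are zero")
    ranks = average_ranks([abs(d) for d in diffs])
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mean = n * (n + 1) / 4
    var = n * (n + 1) * (2 * n + 1) / 24
    # Tie correction: subtract (t^3 - t) / 48 for each group of t tied ranks.
    counts = {}
    for r in ranks:
        counts[r] = counts.get(r, 0) + 1
    var -= sum(t**3 - t for t in counts.values()) / 48
    z = (w_plus - mean) / math.sqrt(var)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p-value from the normal tail
    return w_plus, z, p


def bonferroni_cutoff(alpha: float, n_tests: int) -> float:
    """Per-test significance cutoff that keeps the family-wise rate at alpha."""
    return alpha / n_tests


# Hypothetical 1-5 ratings for one survey item from eight students.
pre = [2, 3, 3, 1, 4, 2, 3, 2]
post = [4, 4, 3, 3, 5, 3, 4, 4]
w_plus, z, p = wilcoxon_signed_rank(pre, post)
cutoff = bonferroni_cutoff(0.05, 25)  # 25 course-element items
print(f"W+={w_plus}, z={z:.2f}, p={p:.4f}, significant={p < cutoff}")
```

In this invented example, p falls below the nominal 0.05 level but not below the Bonferroni-corrected 0.002 cutoff for the 25 course-element items, which is the kind of result the correction is meant to screen out.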

The Experimental Design Ability Test (EDAT; Sirum & Humburg, 2011) and the Expanded Experimental Design Ability Tool (E-EDAT; Brownell & Kloser, 2015) are validated instruments that examine students’ ability to design an experiment (including an appropriate research question or hypothesis), to determine the plausibility of a claim, to justify critical elements of their research design (dependent and independent variables, relevant populations, sample size, reproducibility), and to demonstrate understanding of the limits on generalizing conclusions (whether the results of experimentation will verify or discount the claim). Variations of the EDAT/E-EDAT specific to the different fields of the CUREs were administered in a pre-post design to evaluate students’ understanding of the process of scientific inquiry and analysis (N = 70). We analyzed all available EDAT/E-EDAT data rather than limiting the sample to students with both pre- and post-CURE survey responses, because all faculty members had students complete the EDAT/E-EDAT exercise, but a few had students who did not complete the post-CURE survey. The EDAT/E-EDAT scores were standardized to one another and analyzed with two tests: the Wilcoxon signed rank test (to evaluate pre- to postcourse changes) and the Kolmogorov-Smirnov (KS) test. The nonparametric KS test compared the distributions of the percent change from pre- to post-EDAT assessment in natural science (biology, kinesiology, computer science) and social science courses (anthropology, rhetoric, and communication studies) to test whether these two broad areas differed.
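The KS comparison of gain distributions can be sketched in a few lines of Python. This minimal version computes only the two-sample D statistic (the largest vertical gap between the two empirical cumulative distribution functions); a p-value would normally come from a statistics library, for example SciPy's `scipy.stats.ks_2samp`. The pre/post percentages are hypothetical, not study data, and treating each student's gain as a simple post-minus-pre difference is an assumption about how change was computed.

```python
from typing import List


def change_scores(pre: List[float], post: List[float]) -> List[float]:
    """Per-student change (post minus pre), in percentage points."""
    return [b - a for a, b in zip(pre, post)]


def ecdf(sample: List[float], x: float) -> float:
    """Fraction of the sample that is less than or equal to x."""
    return sum(v <= x for v in sample) / len(sample)


def ks_statistic(a: List[float], b: List[float]) -> float:
    """Two-sample KS D: the maximum gap between the two empirical CDFs."""
    # The ECDFs only jump at observed values, so checking those points suffices.
    points = sorted(set(a) | set(b))
    return max(abs(ecdf(a, x) - ecdf(b, x)) for x in points)


# Hypothetical EDAT percentages for two small groups of students.
natural_gain = change_scores([15, 20, 30, 25], [55, 55, 40, 45])  # [40, 35, 10, 20]
social_gain = change_scores([50, 60, 55, 45], [65, 65, 65, 70])  # [15, 5, 10, 25]
d = ks_statistic(natural_gain, social_gain)
print(f"D = {d:.2f}")
```

Because D compares whole distributions rather than means, it is sensitive to differences in spread and shape as well as location.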

Faculty experience

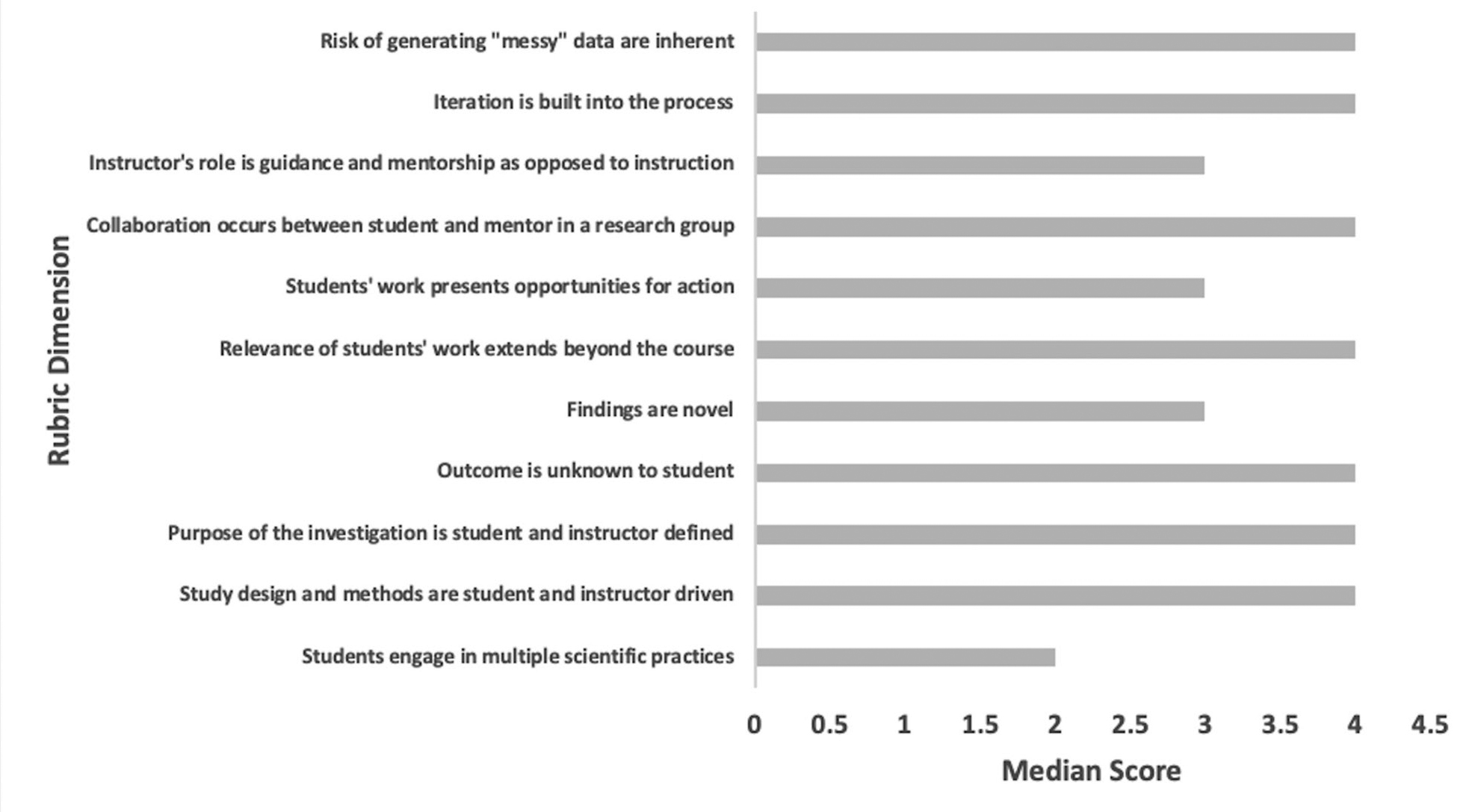

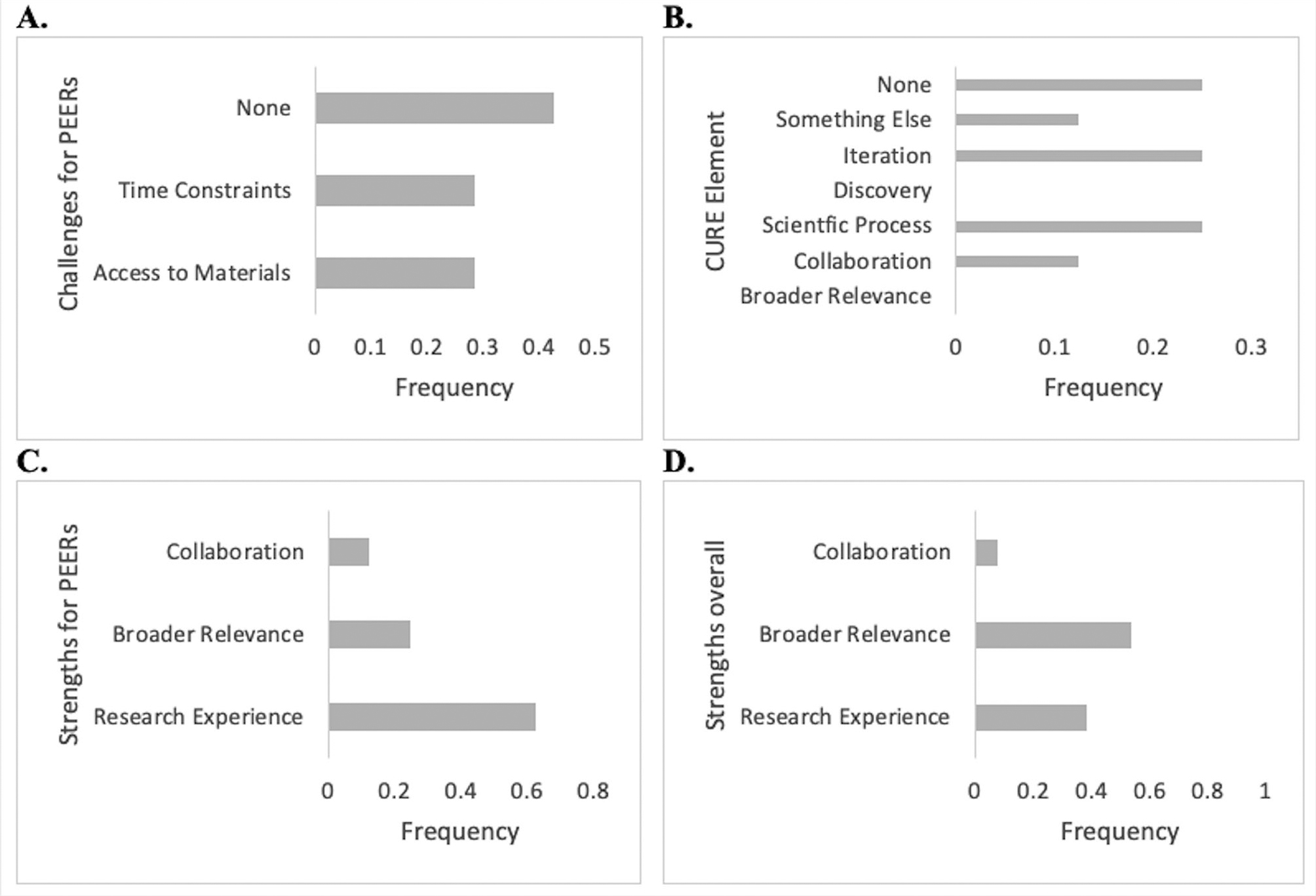

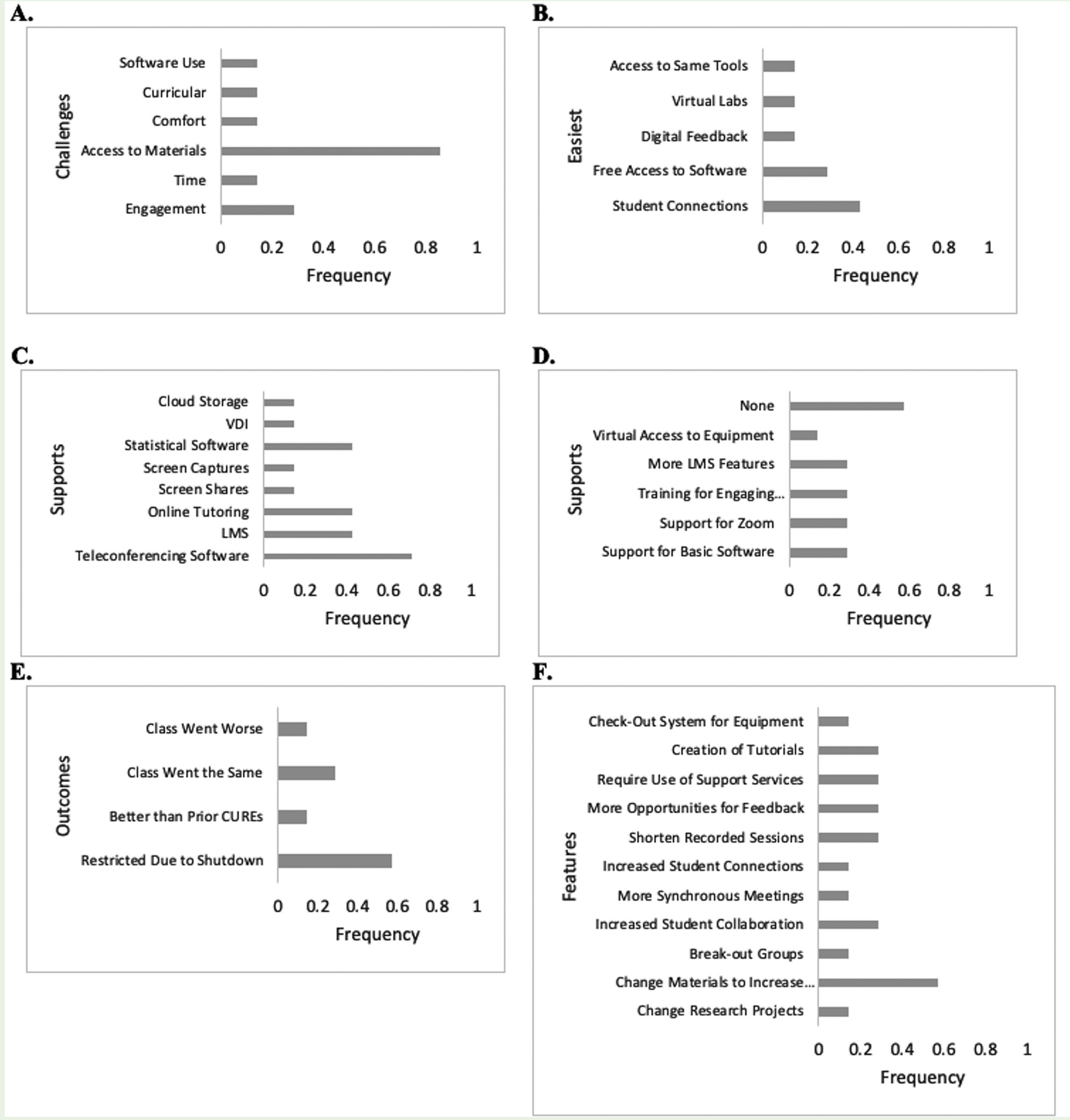

Faculty who taught a CURE in spring 2020 were evaluated with several measures designed by the research team for this project. First, instructors completed the CURE Development Rubric, a self-assessment rubric that evaluated how well they had incorporated the five elements of a CURE into their course. Second, an end-of-year Faculty Challenges and Strengths Survey (an anonymous, open-ended, six-question instrument) was administered to examine the challenges of incorporating CURE elements and implementing the CURE, as well as faculty-observed strengths in integrating CURE elements and in serving persons excluded because of their ethnicity or race (PEERs; Asai, 2020). Responses to the open-ended questions were coded into categories to identify the themes reported, and the frequencies of the categories were graphed to depict trends. The category “something else” included answers that were reported only once in the data and/or appeared to be specific to a course. The median scores (on a 1–4 scale) of the self-assessment rubric were plotted to evaluate the distribution of scores. Third, because the spring 2020 CUREs pivoted to remote learning six weeks into the semester, it was necessary to contextualize instructors’ spring 2020 responses to the CURE project assessments. Thus, a Pandemic Experience Survey was developed to investigate these aspects of instructor experience: (1) challenges of moving CUREs to a remote learning platform; (2) the easiest aspect of moving a CURE to a remote learning platform; (3) types of supports available that were needed to implement the CUREs remotely; (4) types of supports needed but not provided; (5) how the remote version of the CURE course compared to the face-to-face version; and (6) what changes the instructor would make if teaching the CURE remotely again.
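The tallying of coded themes can be sketched as follows, assuming each instructor's open-ended answer has already been hand-coded into one or more theme labels. Only the mechanical part of the rule is automated here (themes reported once are folded into “something else”); judging whether a theme is course-specific remains a human coding decision. The theme labels are hypothetical placeholders, not the study's codes.

```python
from collections import Counter
from typing import Dict, List

OTHER = "something else"


def theme_frequencies(coded_responses: List[List[str]]) -> Dict[str, int]:
    """Count coded themes, folding themes that appear only once into OTHER."""
    counts = Counter(code for codes in coded_responses for code in codes)
    result: Dict[str, int] = {}
    for theme, n in counts.items():
        if n == 1:
            # Singleton themes are pooled rather than plotted individually.
            result[OTHER] = result.get(OTHER, 0) + 1
        else:
            result[theme] = n
    return result


# Hypothetical codes for six instructors' answers to one question.
coded = [
    ["theme_a"],
    ["theme_a", "theme_b"],
    ["theme_a"],
    ["theme_c"],
    ["theme_b"],
    ["theme_d"],
]
freqs = theme_frequencies(coded)
print(sorted(freqs.items(), key=lambda kv: -kv[1]))
```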

Results and discussion

We were interested in determining whether the integration of CURE elements would yield enhancements in skills development, improvements in understanding of research design, and changes in science and self-perception in the remote format. We also asked what challenges and strengths of CURE development and implementation would arise in the remote format. To assess successful design and implementation of CUREs in a remote learning setting, we collected data from three components: student self-reporting (CURE Survey of Course Elements, CURE Survey of Opinions, Career Adapt-Abilities Scale), student performance (EDAT/E-EDAT), and faculty self-reflection (CURE Development Rubric, Faculty Challenges and Strengths Survey, Pandemic Experience Survey). The results for the three components reported here were similar in magnitude to the prepandemic findings of Broussard et al. (2020).

Student performance

Design and implementation of CUREs

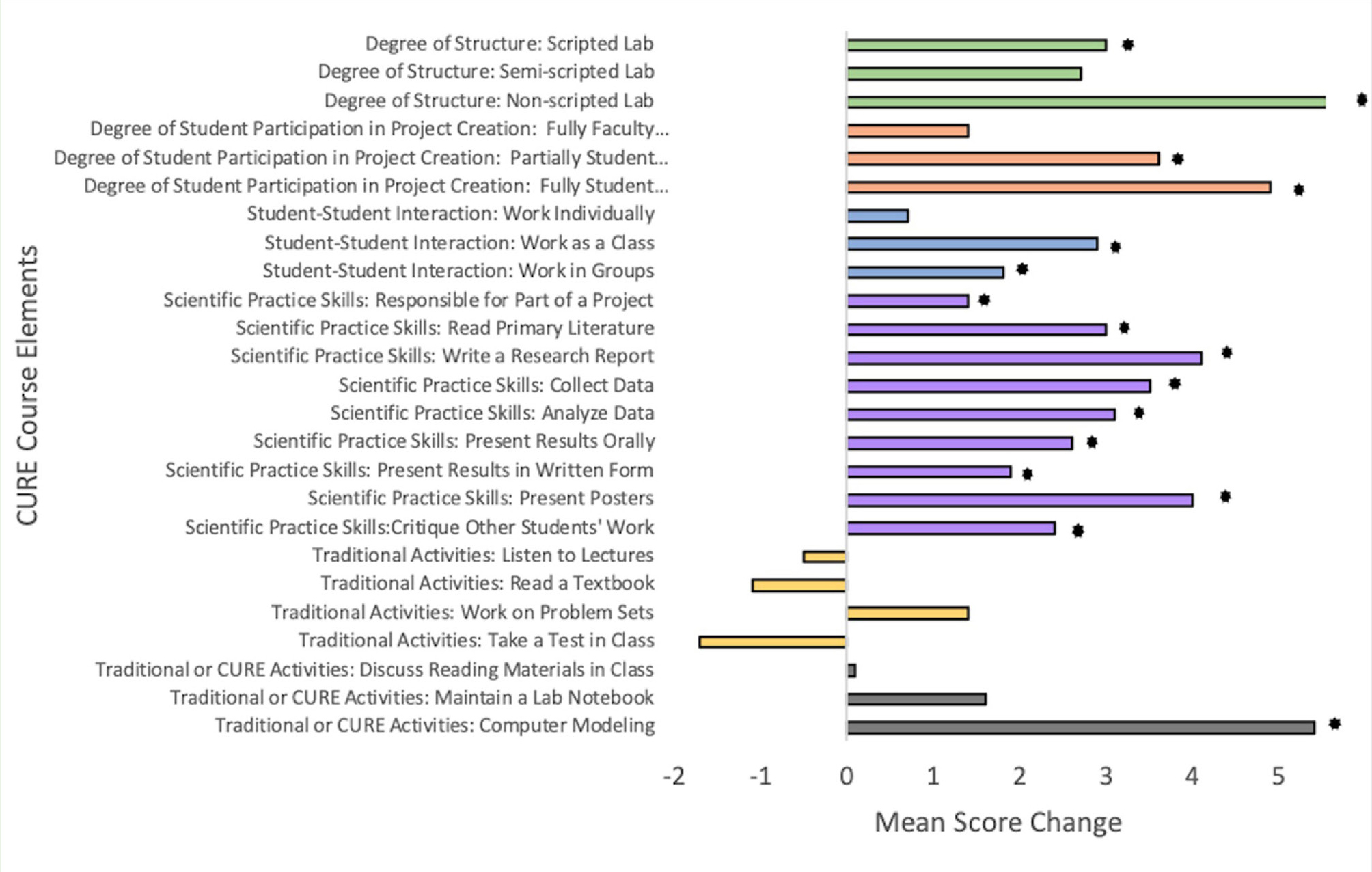

CURE developers made course design changes that either converted a traditional lab into a research-based endeavor or restructured existing research experiences to fit the CURE model. The transition to remote instruction further required CURE developers to revisit those designs and triage expected and unexpected aspects of the move online. We therefore asked whether CURE elements were effectively integrated into the remote learning environment (RQ1). Supplementary Material Table A describes the CURE structure of these courses. Using items from the student-completed CURE Survey of Course Elements (CSCE), we examined the degree of structure, student participation in project creation, and student interaction (all critical elements of CUREs) in the remotely transitioned spring 2020 CUREs. These results are shown in Table 2 and Figure 2. Regarding degree of structure, we predicted that students would report an increase in nonscripted labs, given the emphasis on novel findings and unknown outcomes, but the data showed significant gains for both scripted and nonscripted labs. This result may reflect CURE developers’ design choices that scaffolded student experiences (i.e., more scripted experiences early in the semester and less-scripted or nonscripted experiences later, once students had developed the necessary skills). Items on the degree of student participation in project creation (faculty-created project, student-created [partially], student-created [fully]) were expected to increase, and significant gains were observed for student-created projects (partially and fully). Regarding the level of student–student interaction (students work individually, as a whole class, or in small groups), we predicted and found significant gains in working as a class and working in groups.

Some CURE Survey of Opinions (CSO) items (Table 3, Figure 3) also examined the general design and implementation of CUREs (writing about science and explaining ideas helped understanding). Responses to these items were expected to increase but did not. This result may reflect CURE developers’ choices about whether to include experiences that used these approaches, or students’ confidence in their writing or teaching ability. Other CSO items explored structural components of CUREs (the instructor structures the work so that students learn for themselves; experiments confirm information in class). The former item was expected to increase and the latter to decrease. However, a statistically significant increase was observed for the item stating that “experiments confirm information in class.” This suggests that students may have missed the connection between lab work and discovery, a central element of CUREs.

Mean score changes from pre- to postcourse for the CURE Survey of Course Elements. N = 49 students completing the pre- and postcourse survey; * indicates p < 0.002.

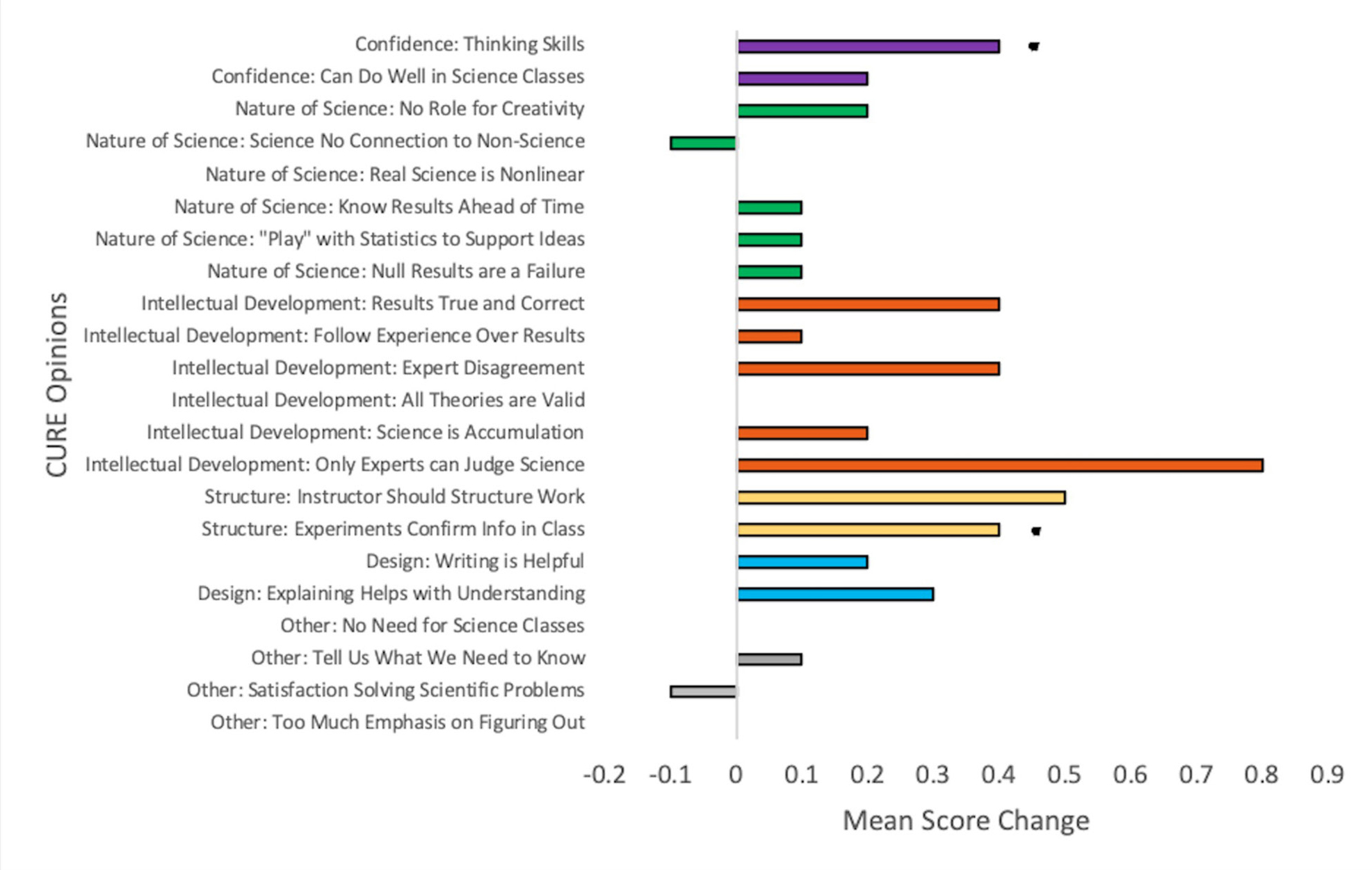

Mean score changes from pre- to postcourse for the CURE Survey of Opinions. N = 49 students completing the pre- and postcourse survey; * indicates p < 0.0021.

Skills development

To assess skills development in remotely taught CUREs, we analyzed the inclusion of scientific practice skills (RQ2), the presence of traditional classroom activities, and experimental design skills (RQ3), using items from the CSCE (Table 2, Figure 2) and the EDAT/E-EDAT (Figure 4). CSCE items addressing research-specific skills (become responsible for a part of a project, read primary scientific literature, write a research proposal, collect data, analyze data, present results orally, present results in written papers or reports, present posters, and critique the work of other students) were predicted to increase in effectively designed CURE courses. Statistically significant increases were observed for all of these items, providing evidence that opportunities for scientific practice skills development were integrated into CUREs across STEM and non-STEM disciplines in the remote setting. CSCE items associated with traditional, nonresearch classes (listen to lectures, read a textbook, work on problem sets, and take a test in class) were expected to remain unchanged or to decrease from pre- to postcourse, because they represent pedagogical elements not aligned with the CURE model. For spring 2020, no significant changes were observed in these items. Other CSCE items that could apply to both traditional and CURE courses (discuss reading materials in class, maintain a lab notebook, and computer modeling) were expected to remain unchanged or to vary by course; a significant increase was observed only for computer modeling.

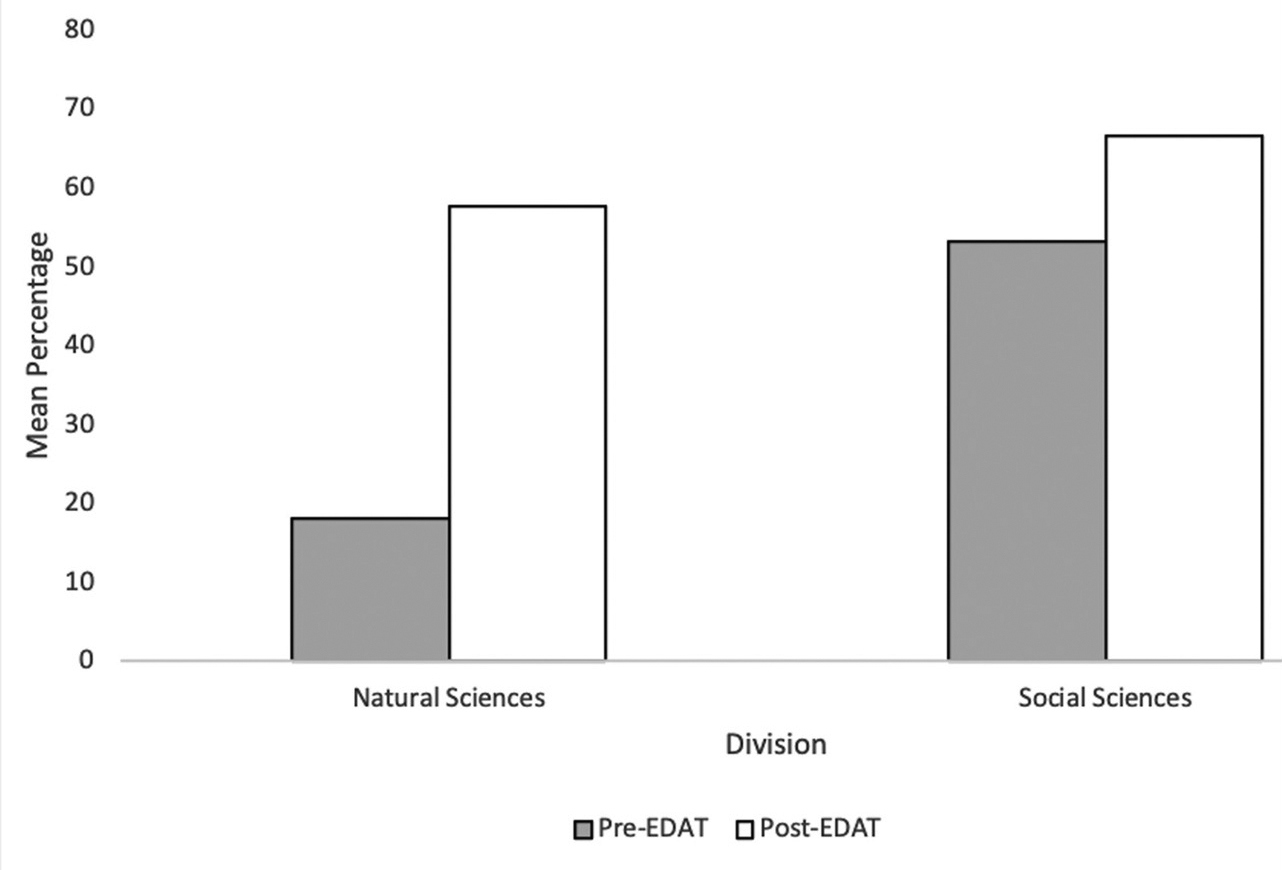

Figure 4. Mean pre- and post-EDAT scores (percentages) in natural and social science CUREs. N = 70 students completing the pre- and postcourse EDAT.

To determine whether opportunities for skills development in the remote environment translated into actual skills development, we analyzed student performance on the EDAT/E-EDAT (Figure 4). The Wilcoxon signed rank test indicated a statistically significant pre-post increase of 30.88 percentage points (z = 6.13, p < 0.001) for spring 2020. The KS test indicated that the distributions of gains in the natural and social sciences differed significantly (D = 0.5054, p < 0.001), with larger gains in the natural science classes (39.27 percentage points) than in the social science classes (14.8 percentage points). Precourse average EDAT scores were 18.11% in the natural sciences and 53.19% in the social sciences, whereas postcourse averages were 57.55% and 66.69%, respectively. The much lower starting point in the natural sciences suggests potential differences in initial student skill level between the natural and social sciences, which merits further investigation.

Overall, both the subjective measure of opportunities for skills development and the objective measure of performance showed significant pre-post increases in research-specific skills. This provides evidence that participating in CURE courses in a remote setting was associated with significant learning gains in scientific skills development across a variety of disciplines.

Perceptions of science and self

To assess student experiences (RQ4), we analyzed subjective assessments of changes in science opinions and in self-perception using the CSO (Figure 3, Table 3) and CAAS (Table 4). Hunter et al. (2007) provided a useful framework for analyzing science and student self-perception, outlining these potential gains from research experience: (1) Thinking and working like a scientist (application of knowledge, skills, and understanding); (2) Becoming a scientist (attitudes and behaviors); (3) Skills (in scientific practices); (4) Personal-professional (confidence and identity as a practitioner); (5) Career/education path (solidification of career plans); and (6) Enhanced career/graduate school preparation. The CSO addressed becoming a scientist (attitudes and behaviors), the personal-professional (confidence and identity as a practitioner), and epistemological development. Relevant CSO items (no role for creativity in science, science not connected to nonscience, scientists follow scientific method in a straight line, scientists know results ahead of time, scientists “play” with statistics to support ideas, and null results are a failure) addressed the nature of science, a component of becoming a scientist. We anticipated that responses to these items would decrease. However, none of these items showed a statistically significant pre-post change, suggesting that students did not measurably improve their understanding of the nature of science.

Several CSO items probe epistemological development. Put simply, epistemological development (Bendixen & Rule, 2004; Hofer, 2001) represents transitions in intellectual development and is accompanied by a shift from reliance on external authority to internal authority. Baxter Magolda (2004; based on Bendixen & Rule, 2004; Hofer, 2001) posited that the development of internal authority correlates with the development of identity. According to Baxter Magolda (2004), the stages of knowing include absolutist (knowledge is certain and resides in an outside authority), transitional (some knowledge is uncertain and one must search for truth), independent (most knowledge is uncertain and one must think for themselves), and contextual (knowledge is shaped by context and one must consider context to determine truth). We predicted that responses to CSO items representing absolutist viewpoints (follow experience over rules; expert disagreement; science is facts, rules, and formulas; and only experts can judge science) would decrease, whereas responses to those representing transitional viewpoints (science not certain) would increase, indicating that students shifted from absolutist to transitional epistemological development with exposure to CURE courses. The lack of significant results suggests that students in spring 2020 CUREs did not make measurable gains in their epistemological development.

Gains in confidence and identity as a practitioner (e.g., social scientist, natural scientist, designer, or other), what Hunter et al. (2007) called “personal-professional,” were examined by the CSO items “using thinking skills” and “can do well in science classes.” Only “using thinking skills” showed a statistically significant increase (Figure 3, Table 3). The CAAS also addresses confidence, but none of the relevant items showed statistically significant pre-post differences (Table 4). These results indicate only minor gains in confidence and identity.

Faculty experience

Design, implementation, and skills development

Olivares-Donoso and Gonzalez (2019) posited that one of the challenges of providing high-quality, research-based courses and undergraduate research experiences is ensuring that these opportunities meet minimum standards for qualifying as research. We addressed this concern by creating a CURE Development Rubric (CDR), which allowed CURE developers to examine their course(s) for inclusion of the critical CURE elements identified by Auchincloss et al. (2014). The faculty completed the 11-dimension CDR to assess their CURE design (Figure 5). Seven items received median scores of 4 out of 4: study design and methods are student and instructor driven, the purpose of the investigation is student and instructor driven, the outcome is unknown to students, the relevance of students’ work extends beyond the course, collaboration occurs between student and mentor, iteration is built into the process, and the risk of generating “messy” data is inherent. Only one item received a median score below 3: engaging in multiple scientific processes. The remaining three aspects (novelty of findings, opportunities for student projects to lead to action outside the course, and the instructor’s role as mentor and guide) received median scores of 3. These findings indicate that faculty felt their courses integrated most CURE elements effectively.

Figure 5. CURE Development Rubric self-assessment scores for incorporating each rubric dimension of CURE elements. N = 8 course sections.
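Summarizing the rubric by dimension amounts to taking a median across course sections. The minimal Python sketch below uses hypothetical scores for eight sections (dimension names and values are invented) and also shows a detail worth keeping in mind when reading Figure 5: with an even number of sections, a median can fall halfway between two rubric levels.

```python
from statistics import median
from typing import Dict, List


def rubric_medians(scores: Dict[str, List[int]]) -> Dict[str, float]:
    """Median self-assessment score (1-4 scale) for each rubric dimension."""
    for dim, values in scores.items():
        if not values or any(v < 1 or v > 4 for v in values):
            raise ValueError(f"{dim}: expected non-empty scores on the 1-4 scale")
    return {dim: median(values) for dim, values in scores.items()}


# Hypothetical scores from eight course sections.
scores = {
    "dimension_1": [4, 4, 4, 3, 4, 4, 3, 4],
    "dimension_2": [3, 3, 2, 4, 3, 3, 2, 3],
    "dimension_3": [2, 3, 2, 3, 3, 2, 3, 2],  # even split between 2 and 3
}
medians = rubric_medians(scores)
print(medians)
```

The median is a natural summary here because rubric levels are ordinal: it does not assume that the distance between levels 1 and 2 equals the distance between levels 3 and 4.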

Faculty perceptions of challenges, supports, and successes (RQ5)

To probe the challenges of integrating and implementing CURE elements in their spring 2020 CURE courses, faculty completed a Faculty Challenges and Strengths Survey (FCSS). The most common response to the question about challenges in incorporating CURE elements into the course for all students was “none” (i.e., instructors reported no challenges; Figure 6a and b). The strengths reported by CURE instructors for all students included research experience, broader relevance, and collaboration (Figure 6c and d), reflecting expanded opportunities for PEERs to engage in authentic research when it is offered in a course-based format. To contextualize this feedback on challenges and successes, faculty also completed a Pandemic Experience Survey. Access to materials was the greatest challenge reported during spring 2020 (Figure 7a). Instructors reported teleconferencing software, statistical software, a learning management system (LMS), and online tutoring as the most valuable online tools available to them while teaching a CURE (Figure 7c). In general, instructors felt they had the online tools they needed to teach a CURE remotely but would have benefited from additional support for the tools they used (e.g., training; Figure 7d). Despite these challenges, instructors rated their remote CUREs as the “same” as or “better” than prior face-to-face versions more often than “worse” (Figure 7e). According to these instructors, the greatest difference between the online and face-to-face versions of their CUREs stemmed from the restrictions imposed by the campus closure.

Figure 6. Faculty Challenges and Strengths Survey responses from CURE developers on implementing a CURE. Frequencies of coded themes for (a) challenges implementing CUREs for PEERs/underrepresented students, (b) challenges implementing CURE elements, (c) strengths when implementing CUREs for PEERs/underrepresented students, and (d) strengths implementing CURE elements. N = 6 instructors.

Figure 7. Pandemic Experience Survey responses on moving a CURE online unexpectedly during the semester. (a) Frequencies of challenges, (b) frequencies of the reported easiest elements, (c) frequencies of online supports that were important, (d) frequencies of online supports that were not available but would have been useful, (e) comparisons of on-the-ground CUREs to online CUREs, and (f) frequencies of changes for teaching an online CURE in the future. N = 6 instructors.

When asked about the easiest aspects of teaching a CURE online (Figure 7b), instructors most frequently reported student connection, which aligns with previous findings by Esparza et al. (2020) that student–instructor interactions are a valuable part of the CURE experience. Individual faculty reported additional indicators of success, such as discipline-specific conference presentations by student and faculty participants and CURE-related student research awards.

In sum, although the pivot to remote learning six weeks into the semester created new challenges, instructors reported that overall their CUREs went as well as or better than face-to-face versions, suggesting a flexibility of learning modality inherent in CURE design and an added benefit of their implementation. Instructors also suggested a variety of changes that could improve online CUREs in the future, the most popular being enhanced materials to increase student interest remotely (Figure 7f).

Conclusions

Research experiences have been shown to be effective tools for retention and success of STEM majors, but many institutions lack the infrastructural (Desai et al., 2008; Wood, 2003), human (Dimaculangan et al., 2001; Herreid, 1998; Sundberg & Moncada, 1994) or financial (Hue et al., 2010; Lewis et al., 2003) capital to provide authentic research experiences for all of their undergraduates. Moreover, students of low socioeconomic status often do not satisfy entrance requirements to research programs, lack acculturation to apply for extracurricular research (Bangera & Brownell, 2014), or have family and financial obligations (Malcom et al., 2010) that preclude them from taking advantage of and gaining maximal benefit from apprenticeship research experiences. Malcom et al. (2010) suggested that expanding the pipeline of Latinx students in STEM fields could be accomplished by increasing research opportunities at community colleges and Hispanic-Serving Institutions (HSIs) through integration into the core curriculum, rather than having separate extracurricular programs. As Dolan (2016) concluded, more adaptations to and systematic studies of CUREs are needed to effectively broaden undergraduate participation in research and realize Malcom’s vision.

Our study was novel in that it examined CURE design changes (RQ1) and the resulting student cognitive (RQ2, RQ3) and psychosocial (RQ4) gains at an HSI, in disciplines not traditionally associated with the CURE approach, and under partially remote instruction. Overall, both subjective and objective measures of student gains indicate significant pre-post skills development, even in a remote learning environment. Psychosocial gains were much more modest. Data from the small number of courses, instructors, and students in this study suggest that online/remote CUREs could be a viable strategy for broadening participation, beyond their use in pandemic and natural disaster planning.

Clearly, not every discipline or course is amenable to remote instruction; perhaps the most challenging would be research areas that require wet labs and specialized equipment. However, in many fields secondary data sets are available that would allow original, authentic research to be conducted in online CUREs. Our results suggest that institutions can offer CUREs in a remote learning format (either fully online or hybrid) and expect learning outcomes similar to those of on-the-ground courses (RQ5). Offering remote CUREs may allow students who face barriers to apprenticeship experiences to engage in nontraditional learning opportunities that satisfy graduation requirements and improve retention and persistence. Therefore, offering effectively designed online/remote CURE courses may help to equalize access and opportunity for PEERs. ■

Christine Broussard (cbroussard@laverne.edu) is a professor of biology, Margaret Gough Courtney is an associate professor of sociology, K. Godde is an associate professor of anthropology, and Vanessa Preisler is an associate professor of physics, all at the University of La Verne in La Verne, California. Sarah Dunn is an associate professor of kinesiology at California State University, San Bernardino.
