Methods and Strategies

Useful Beyond Assessments

Science and Children—May/June 2022 (Volume 59, Issue 5)

By Sarah K. Benson, William J. Therrien, Gail E. Lovette, Christian Doabler, and Maria Longhi

In today’s science classrooms, students are increasingly required to integrate mastery of core concepts with complex scientific and engineering practices. As a result, teachers must be intentional about selecting scientific practices that align with curricular objectives while also considering how to assess mastery of that practice, a challenge considering that few measurement tools of this nature exist. We believe that developing and utilizing rubrics based on learning progressions is an effective way to build student capacity and measure their progress.

Scientific argumentation, one of eight science and engineering practices outlined in the Next Generation Science Standards (NGSS), provides a salient example, as the cognitive complexity of the practice requires students to simultaneously engage in multiple component skills while also exploring important scientific concepts (NSTA 2014). Scientific argumentation is defined by the NGSS as engaging in “reasoning and argument based on evidence” to provide the best explanation of a phenomenon or solution to a problem (NRC 2012). For novice learners, scientific argumentation can be difficult to master if the larger practice is not broken down into manageable component skills. The individual skills necessary for practicing scientific argumentation include the ability to comprehend and analyze text, interpret and collect data, and engage with peers through discussion and writing. Therefore, scaffolded supports and accommodations are necessary so that all students in the science classroom can engage in these component skills.

To that end, rubrics composed of learning progressions are an effective way for teachers to envision the developmental continuum of the component skills necessary for proficient scientific argumentation practices. Learning progressions track students’ competency in a specific skill while also accounting for developmental level and instructional scaffolds. A well-developed learning progression for scientific argumentation, like that described by Berland and McNeill (2010), provides a roadmap to mastery of the practice. Further, Osborne and colleagues’ (2016) model of a science-focused learning progression ensures that teachers can build student capacity for complex engagement with scientific argumentation while also maintaining differentiated learning goals for the full range of student abilities in a classroom.

Along with providing formative feedback about levels of mastery, we argue that this type of rubric designed for NGSS science and engineering practices can also serve as a practical resource for providing appropriate instructional contexts, supports, and scaffolding to students. In this article, we provide a brief overview of the types and applications of rubrics. Next, we detail the process we used to develop a multifaceted learning progression rubric that can act both as a formative assessment of student mastery and as a guide for scaffolding the development and measurement of scientific argumentation mastery for all students.

Traditional Rubrics

Rubrics generally fall into two categories, holistic and analytic, both designed to measure and provide feedback to students. Holistic rubrics are based on a single scale to assess different dimensions of a performance as a whole unit, while analytic rubrics break performance into different dimensions and provide descriptive feedback on each facet of performance. For example, a holistic rubric would give students general feedback on the entirety of their argumentation paper; an analytic rubric would give feedback on each component: the claim, evidence, justification, and argument. Although both rubrics provide valuable instructional feedback to students, hereafter our focus will be on the use of analytic rubrics, as they are more descriptive and detailed and ultimately better designed to support use beyond summative assessments.

Analytic rubrics provide an outline of increasingly complex cognitive demands by capturing the component skills that comprise complex tasks. Creating and using rubrics that detail not only the knowledge and skills but also the learning progression of a competency increases teachers’ ability to include and engage all students in their classrooms.

Instructional rubrics, a type of analytic rubric, bridge the gap between rubrics developed for assessment and those that can be routinely used to inform instruction. Although many rubrics are used to provide a summative score on an assignment, the rubric we describe below provides teachers with a way to formatively assess and guide student progression through the science curriculum. This rubric can also deepen a teacher’s understanding of content and scientific practices, as it can be used as a resource to systematically scaffold learning and plan instruction for students of all abilities.

Student Benefits

Although all students benefit from the use of instructional rubrics, they are especially effective when used with students at risk for or with an identified learning disability (LD), students in English language learning programs, and other struggling learners. Instructional rubrics can help alleviate cognitive load by targeting an appropriate component skill within the practice (e.g., scientific argumentation) and ensure that the instructional context is not so demanding that the student is unable to engage in the practice. Students with LD often demonstrate difficulties with expressive and receptive language; core academic skills (e.g., mathematics and reading); and knowledge acquisition, retention, and retrieval (Therrien et al. 2017). When presented with a complex instructional task such as scientific argumentation, students with LD may be unable to fully engage in the practice as their cognitive load capacity is overwhelmed by the demands of the underlying component skills (e.g., reading, communicating with peers, and retrieving core concepts from long-term memory) embedded within the practice.

English learners (EL) struggle with argumentation as well, due to limitations in not only language but also dominant cultural practices of argumentation in a classroom (González-Howard and McNeill 2016). For EL students who are still developing their spoken English, the complexity of academic and scientific language adds an additional layer of challenge. Working in various instructional contexts such as peer-to-peer and small groups bolsters student learning and increases competency (González-Howard and McNeill 2016). The scaffolds and instructional contexts we are proposing in this rubric can be used to reduce the language load to help EL students build confidence at each progressive step.

Scaffolds to support language development are included, making our rubric multifaceted in supporting all learners. The rubric we have developed (see Figure 1 for a sample; see Supplemental Resources for the complete rubric) is designed as an instructional rubric to help teachers differentiate, support, and guide students through learning scientific argumentation.

Taking Rubrics Further

Rubrics can help teachers identify component skills embedded within NGSS’ eight scientific and engineering practices as well as the levels of independence students should demonstrate for mastery of the practice. By developing rubrics that include instructional context in addition to skill markers, teachers can use them as a formative guide to teaching and learning throughout a unit.

Although scientific argumentation is a complex practice, requiring many cross-curricular components that students are required to meaningfully engage in during elementary school, research has shown that many students are not given the time and opportunity to practice, develop, or master this important scientific practice (Osborne et al. 2016). The use of a rubric that details a comprehensive learning progression can enable teachers to assess students’ mastery at the end of the unit while simultaneously formatively measuring and facilitating students’ development of the component skills that comprise scientific argumentation.

To develop the rubric, we began with an analysis of the skills and knowledge necessary to meet the science and engineering practices performance expectations and learning progressions at the second-grade level (NGSS Lead States 2013). Next, utilizing the competencies of scientific argumentation and levels of knowledge synthesized by Osborne et al. (2016), we divided the four distinct skill sets (claim, evidence, justification, and argument) into two levels of competency (identifying/critiquing and constructing/providing). Using performance expectations for each grade level, the skills and knowledge areas are described in detail to show the increasingly complex path to mastery (novice to advanced). The mastery levels slowly build skills but also simultaneously increase the cognitive load at each competency level (Berland and McNeill 2010; Osborne et al. 2016).

All of the skills and knowledge components are anchored by the instructional context boxes, intended to guide teachers in structuring a student’s learning at each level. Students who are learning to engage in meaningful scientific argumentation, especially those identified with LD or as EL students, need scaffolding to master each complex set of skills. Berland and McNeill (2010) outline various levels of scaffolding and ways to limit cognitive load to support student development. These recommended levels of scaffolding have been captured in an easy-to-follow and concrete way within our rubric. Because the rubric includes the learning context (partnered, small-group, or whole-group) along with the accommodations, scaffolding, and classroom supports available, teachers can systematically apply or fade supports, creating a rich, responsive learning experience for students. Using the rubric as a formative assessment tool throughout curricular units provides teachers a systematic way to scaffold their instruction while also supporting and reinforcing the practice of scientific argumentation. The instructional context engages teachers in thinking about how to increase curriculum-specific competency, along with developing or supporting executive function, increasing or lessening cognitive loads, and supporting language development, thus expanding students’ capacity for engaging in increasingly complex tasks and practices.

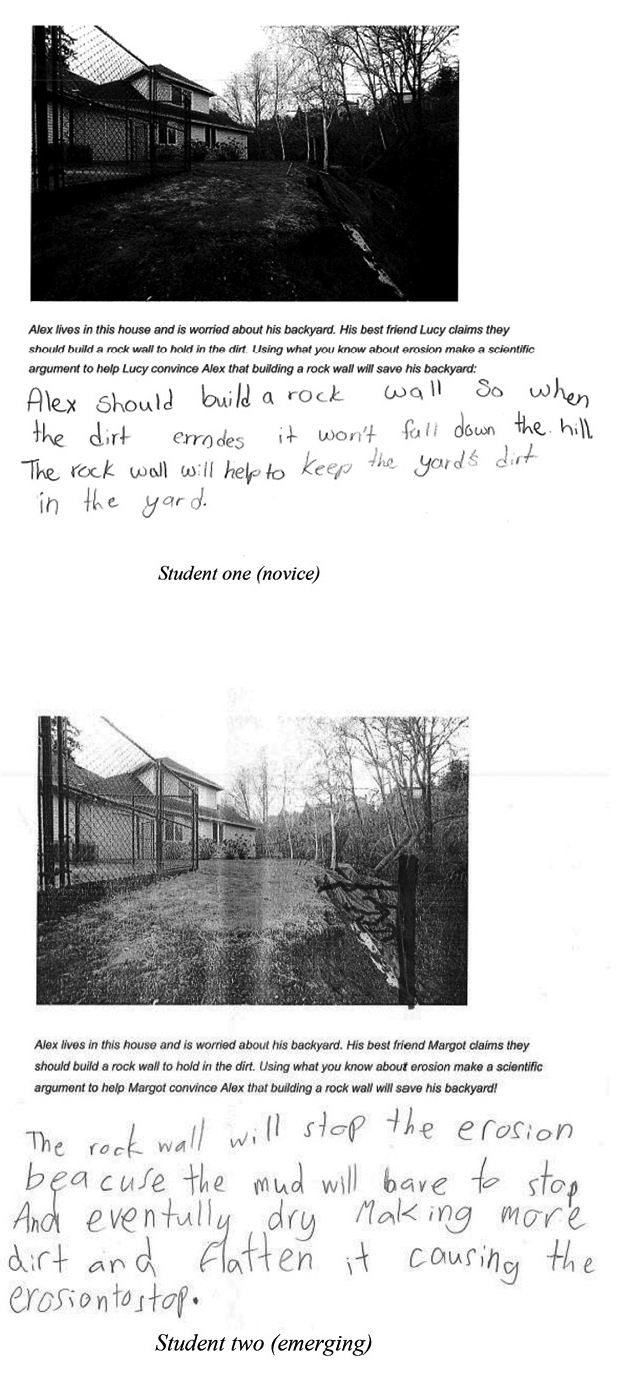

Our argumentation rubric can be used for all students, providing systematic scaffolds for students with LD or ELs while also demonstrating how to differentiate supports for accelerated students. Formative writing samples focused on constructing a claim and providing warrant and justification were collected by a second-grade teacher (Figure 2). Using the rubric, students were evaluated on their ability to make a claim and support it with evidence and a justification. The rubric separates the skill of identifying an argument from that of constructing one and breaks down the individual components of argumentation (justification, argument, claim, and evidence). Utilizing this information, the teacher can then decide how to focus and scaffold instruction. Both students have provided a claim about the picture, but student 2 has linked the claim to the justification that the mud will dry, flatten, and stop the erosion. Student 1 has repeated the claim but provided no justification. The students both need more scaffolds to be able to make a scientific argument.

Figure 2. Student work demonstrating the ability to make a claim, supported with justification.

Student 2 would be considered emerging and would benefit from vocabulary lists and explicit information about the problem, solution, and evidence in order to construct an argument. Using the rubric to guide next steps, the science teacher can provide opportunities for this student to identify and relate evidence to their claim. This can be done through sorting activities so they are simultaneously working on their capacity to rank and sort evidence. Student 1 is novice and needs not only the vocabulary choices but also sentence starters and cloze passages to support their argument construction. Using the rubric as a guide, student 1 should be using the above tools to support mastery of linking evidence to their claim. This could begin verbally but then progress to the student being able to construct a written sentence including both their claim and evidence.

This formative writing sample is used to drive the instructional context during their unit on erosion. Four stations are created based on the rubric evaluation of the formative picture prompt assessment. Each station is differentiated using the instructional context from the rubric, allowing all students to work on creating claims and justification within scientific argumentation at a level that is appropriately challenging. Each station should provide a variety of informational texts that present the specific problems with facts and evidence and are differentiated by reading complexity. Varied instructional supports, such as highlighted or bolded text and vocabulary lists that help students recognize key words and important themes, would be features of the novice and emerging stations. The novice station might include sentence starters to develop claims, while the advanced station asks students to generate their own claims and work with partners to critique others’ claims. Scaffolds to help students use evidence to justify their claims are also employed at each station. Student 1, the novice, would be given sentence starters and a limited list of facts in order to develop an initial justification, while student 2, the emerging student, must identify the appropriate data and evidence to further support and refine their justification. Throughout the activity, students should be encouraged to move through the stations as they demonstrate mastery of the skills.

By breaking the NGSS science and engineering practice of argumentation down into its component skills, our rubric provides teachers with an access point for all learners. Using the instructional context and scaffolds from the rubric, teachers are able to choose how to present the material and how to best support all learners: The student can be given the data in isolation, or given word banks with vocabulary or sentence starters to make and support the claim. When the class moves on to critiquing claims or collecting and using their own data to make a claim, the same supports can remain in place to reduce cognitive load and allow struggling learners to focus on the practice of argumentation.

Conclusion

Rubrics that include instructional contexts and scaffolds enable teachers to both formatively assess and systematically differentiate instruction for all students. This allows teachers to meaningfully participate in science instruction and support students in mastering critical scientific practices and core content. Use of a rubric that outlines instructional practices to reduce students’ cognitive load is especially important for students at risk for or identified with LD who often are penalized due to their struggle with mathematics and language skills, but—when provided instructional scaffolds—are able to master scientific practices. EL students also benefit from the variety of scaffolds as they develop their language skills. Additionally, the amount of peer-to-peer engagement in a classroom can enhance language acquisition, making the instructional context important in creating a supportive learning environment (González-Howard and McNeill 2016). To create a strong instructional foundation, teachers need to analyze the scientific and engineering practices in the NGSS and not only think about what students must do to demonstrate mastery but also how varying instructional contexts can ensure that all students make progress on learning complex scientific practices and core science concepts.

Acknowledgment

This rubric was developed as a part of a larger grant project and the authors would like to acknowledge the ongoing work of researchers at the University of Virginia and the University of Texas at Austin for their curriculum development efforts.

This project is funded by the National Science Foundation, grant Promoting Scientific Explorers Among Students with Learning Disabilities: The Design and Testing of a Grade 2 Science Program Focused on Earth’s Systems to The University of Virginia and The University of Texas at Austin. Any opinions, findings, and conclusions or recommendations expressed in these materials are those of the author(s) and do not necessarily reflect the views of the National Science Foundation.

Supplemental Resources

Download the rubric at https://bit.ly/3vczLMy

Sarah K. Benson (s.k.benson@bham.ac.uk) is an assistant professor at the University of Birmingham-Dubai. William J. Therrien is a professor, and Gail E. Lovette is an assistant professor, both at the University of Virginia, in Charlottesville, Virginia. Christian Doabler is an associate professor, and Maria Longhi is project director, both at the University of Texas in Austin, Texas.
