
Formative Assessment for Equitable Learning

Leveraging Student Voice Through Practical Measures

The Science Teacher—November/December 2021 (Volume 89, Issue 2)

By Krista Fincke, Deb Morrison, Kristen Bergsman, and Philip Bell

As educators, we all engage in formative assessment; however, to ensure we are fostering equity in how diverse learners experience learning, we need to expand our assessment toolkit.

Practical measures surveys provide an effective way to center learners’ interests, cultural backgrounds, and experiences in classrooms, and the resulting data can be used to inform instruction and disrupt inequity.

Science education, like all other aspects of our society today, has persistent inequities rooted in anti-Blackness, colonialism, and racism; however, as a community of science educators we are exploring ways to engage deeply in abolition and de-colonial efforts within science learning contexts. Examples of such justice-centered teaching are found in the Next Gen Navigator (Bell and Morrison 2020). The Framework for K–12 Science Education (NRC 2012) provides a roadmap that helps define and address issues of equity within science education; however, the pragmatics of how to assess the needs of culturally diverse learners are still often unclear. Additionally, in this time of remote learning, we face the compounding challenge of sensing students’ learning experiences from a distance to inform our culturally responsive instruction.

Practical measures as a form of assessment

One way of sensing how diverse learners are engaging in science learning is through practical measures surveys, a type of formative assessment that amplifies students’ voice around how they are learning (Bryk et al. 2015). Historically, formative assessment within science education has centered on what students have learned. Recent work in assessment provides more insight into how we might center culture and justice within our science pedagogies around the diverse experiences of marginalized youth (Bell and Morrison 2020; NRC 2014). This framing requires educators to center issues of learner identity and interest and puts the responsibility on educators to design and support sensemaking for diverse learners. More than ever, it is imperative for science educators to use different assessment practices, such as practical measures, to lift learners’ voices and inform instructional shifts that affirm learners’ cultural experiences and knowledge (see www.stemteachingtools.org and https://tinyurl.com/isme-pm).

Types of practical measures

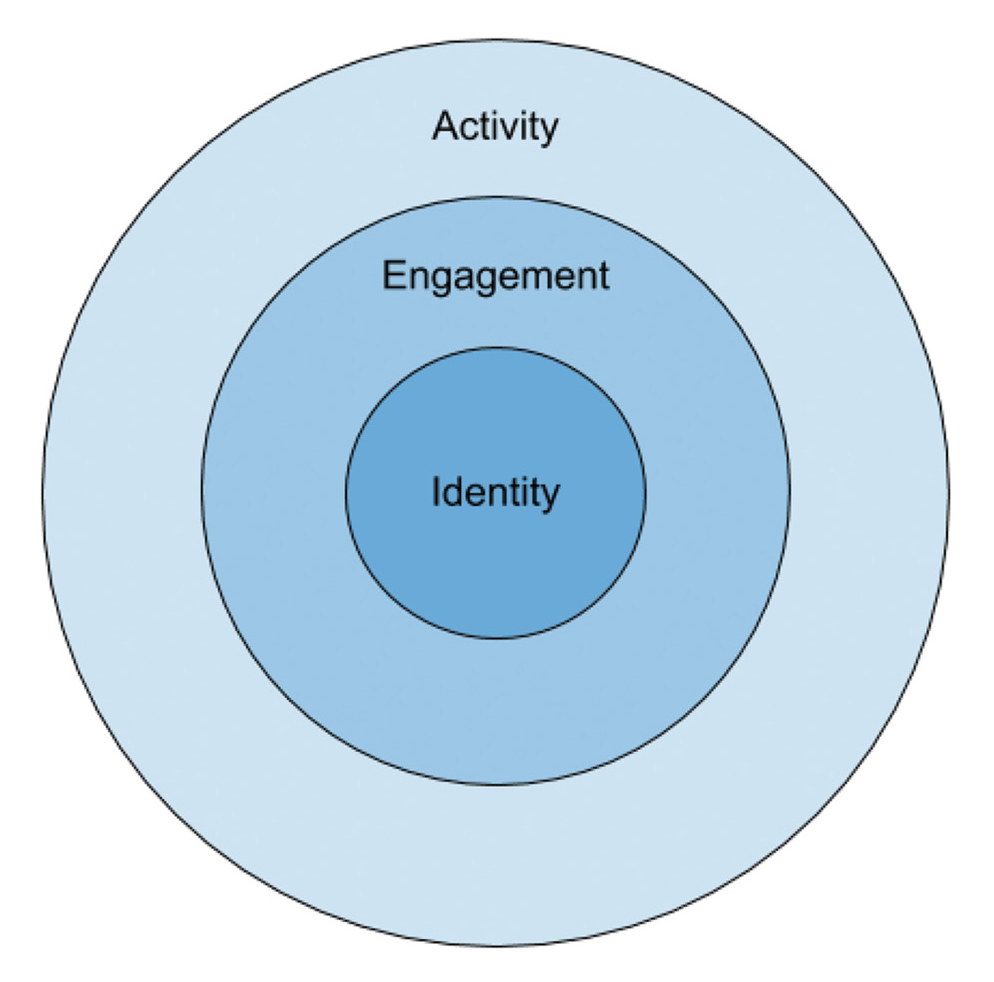

Practical measures can be designed to elicit information about learners’ activity, engagement, and identity. These measures collectively form a nested framework to understand how students learn (Figure 1); learning activities inform how learners are engaged and engagement informs how identity formation or expansion is fostered. Below we explore some examples of different kinds of practical measures to make distinctions between these three measures.

Figure 1. Nested nature of the goals of practical measures items.

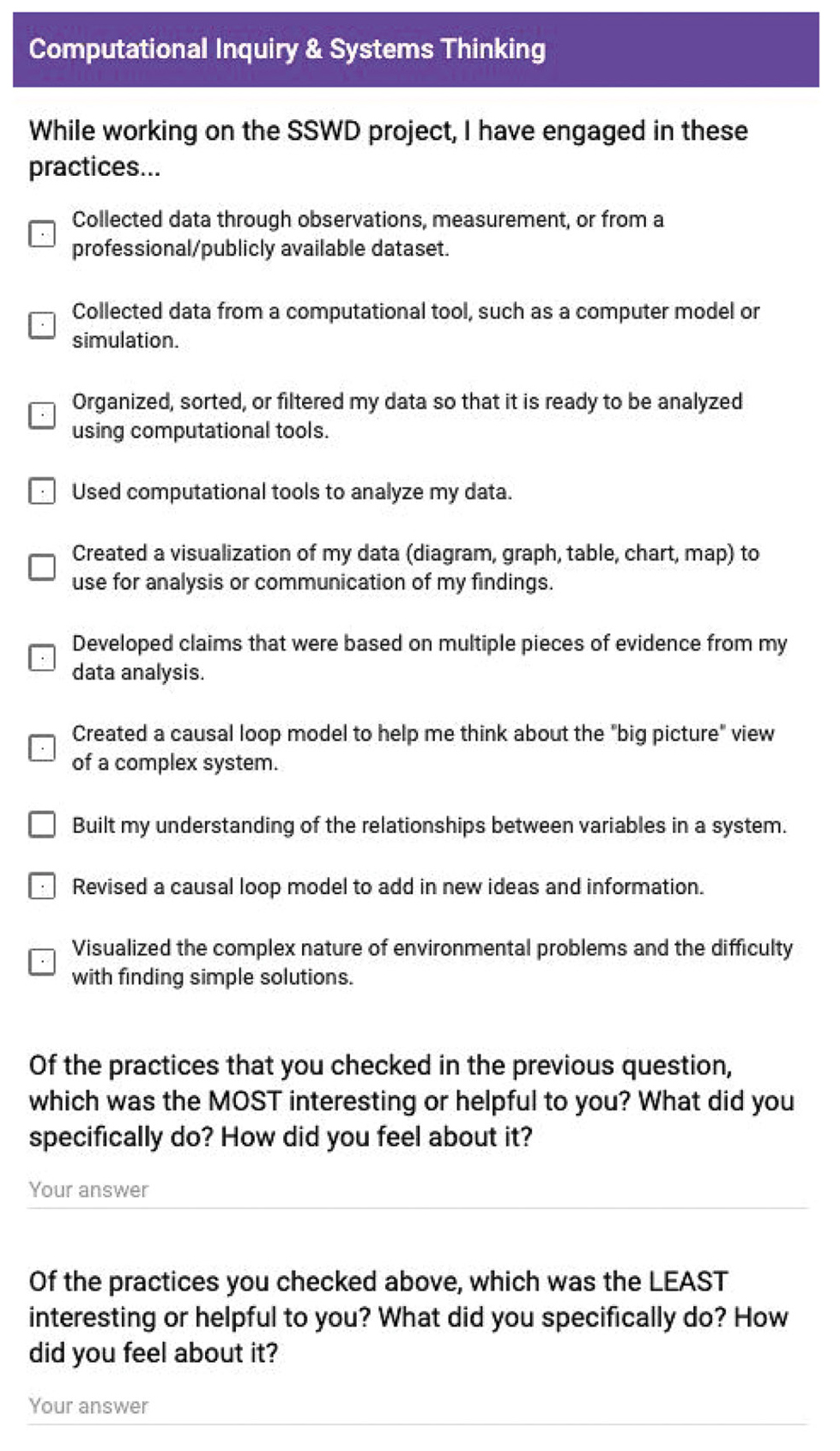

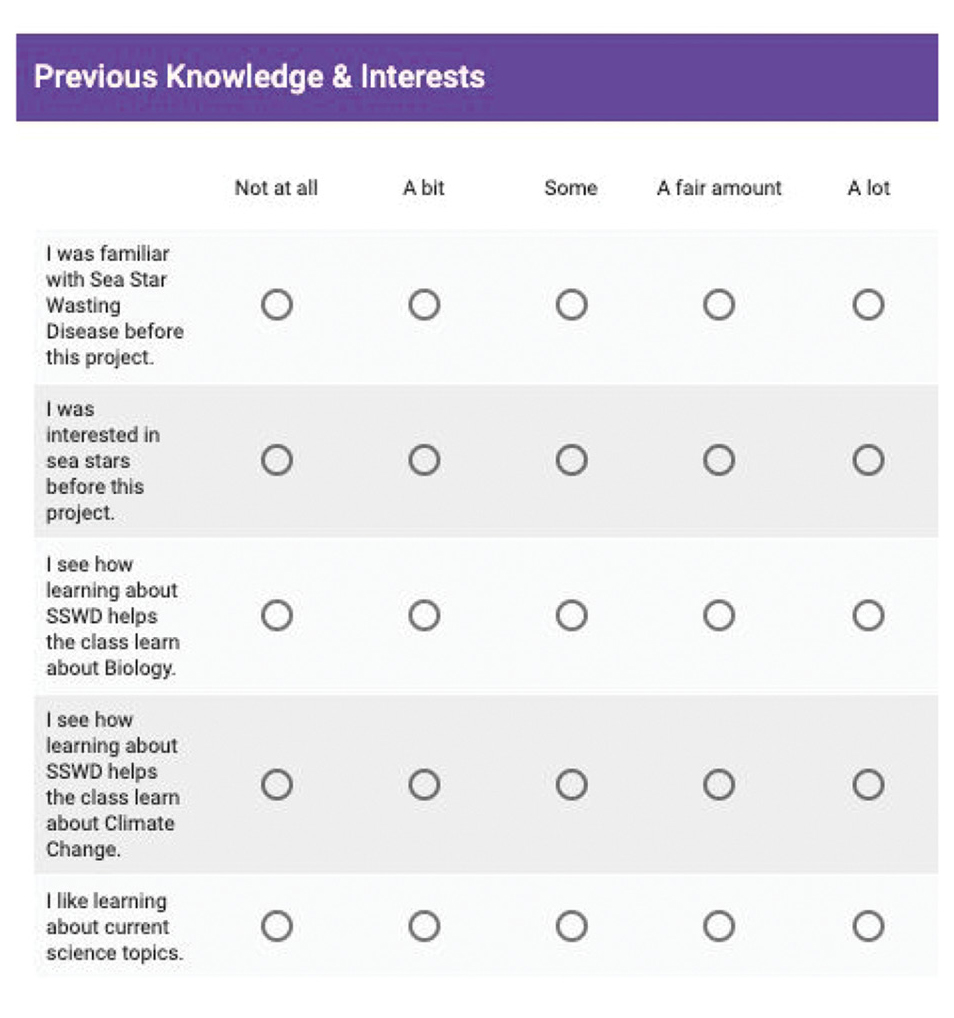

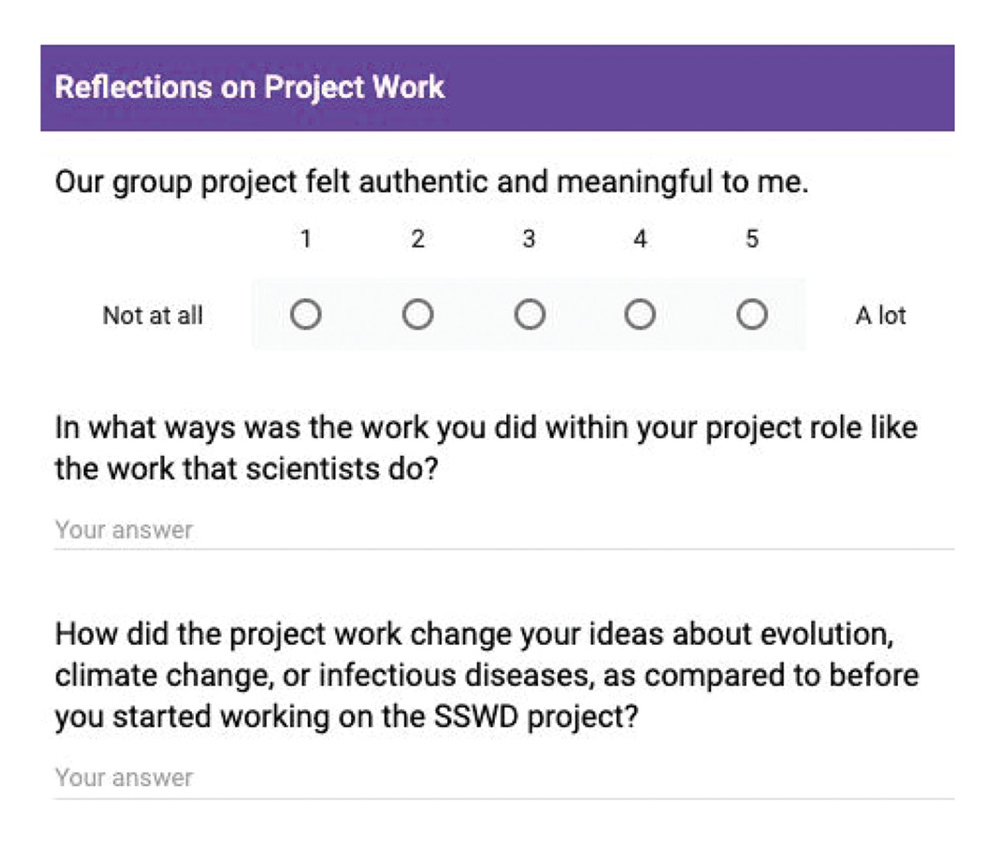

The examples we share are drawn from the Computational Science Project (CSP), a research-practice partnership focused on integrating NGSS computational thinking practices into science classrooms. As part of the CSP, a ninth-grade biology teacher enacted a computationally rich curriculum unit focused on how sea star health was being impacted by climate change and Sea Star Wasting Disease (SSWD). Practical measures surveys were used collaboratively by the biology teacher, instructional coach, and educational researchers as a tool during the initial enactment of the curriculum, serving several purposes. First, the surveys helped elevate student voice so that the classroom teacher could adjust instruction in a responsive and informed way, centering students’ identity and cultural backgrounds in learning. Second, survey data provided feedback from students on their lived experiences with the curriculum materials, which helped inform revisions to the unit. Examples of different kinds of practical measures are described on the following pages (see Figures 2–4).

Figure 2. Example of activity-centered practical measures.

Figure 3. Example of engagement-centered practical measures.

Figure 4. Example of identity-centered practical measures.

Activity-centered practical measures describe what learners see themselves, their peers, and their educators doing during learning activities. This may include perceptions of disciplinary practices in which learners engaged (e.g., scientific argumentation) or the way that particular supports in the classroom are understood (e.g., ELL scaffolds). These measures tell educators how learners are interpreting the learning activities and where actionable opportunities for improvement exist. An example of activity-centered practical measures for the biology unit is presented in Figure 2.

Second, engagement-centered practical measures (see example in Figure 3) can elicit information about the climate of the learning setting, equitable practices, and learners’ interests in and connections to the curriculum. Engagement-centered practical measures help educators collect data about what learners value within lessons, learners’ perceptions of how a space is conducive to their learning, or ways educators can make learning more engaging. This information can be used to bridge the everyday lives of learners with disciplinary-based learning objectives (e.g., http://stemteachingtools.org/brief/46) and impact equitable opportunities for learning (NASEM 2018a; NASEM 2018b).

Lastly, educators can use identity-centered practical measures to make sense of how learners identify with what they are learning, connect to the discipline, and see themselves as able to take action. Practical measures focused on identity provide science educators with insight on how learners are seeing themselves during learning and how they are identifying with what they are learning. Improving these aspects of learning helps learners apply new knowledge and practices in their personal lives and to their possible future lives. This is particularly important when considering how equitable learning opportunities lead to further activity within a disciplinary space (NASEM 2018b; Figure 4).

Designing practical measure surveys

Throughout the CSP’s 10-week biology unit, practical measure surveys were administered to students at four points. The surveys were created using Google Forms (a free, device-neutral online tool) and included both Likert-scale and open-ended prompts, which allowed for easy compilation of survey data. These prompts were developed within a set of categories, known as constructs, to better understand nuances within each type of practical measure. CSP constructs are shown in Table 1. The prompts were organized into four surveys, ensuring that questions within each construct were included across multiple surveys to provide insight into students’ learning experiences over the course of the unit.
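One way to check that every construct recurs across surveys is to keep the prompt bank as a small data structure and count coverage. The sketch below is illustrative only: the construct names (`construct_a` and so on), the prompt assignments, and the function names are placeholders we made up, not the CSP constructs or the project's actual tooling.

```python
from collections import defaultdict

# Hypothetical prompt bank: (survey number, measure type, construct, prompt type).
# Construct names are placeholders, NOT the CSP constructs from Table 1.
PROMPTS = [
    (1, "activity", "construct_a", "likert"),
    (1, "engagement", "construct_b", "open"),
    (2, "activity", "construct_a", "likert"),
    (2, "identity", "construct_c", "likert"),
    (3, "engagement", "construct_b", "likert"),
    (3, "identity", "construct_c", "open"),
    (4, "activity", "construct_a", "open"),
    (4, "engagement", "construct_b", "likert"),
]


def surveys_per_construct(prompts):
    """Map each construct to the sorted list of surveys in which it appears."""
    coverage = defaultdict(set)
    for survey, _measure, construct, _kind in prompts:
        coverage[construct].add(survey)
    return {c: sorted(s) for c, s in coverage.items()}


def under_sampled(prompts, minimum=2):
    """Return constructs that appear in fewer than `minimum` surveys."""
    coverage = surveys_per_construct(prompts)
    return sorted(c for c, s in coverage.items() if len(s) < minimum)


print(surveys_per_construct(PROMPTS))
print(under_sampled(PROMPTS))
```

An empty list from `under_sampled` suggests each construct is revisited often enough to show change over the unit; the `minimum` of two surveys is an assumption an educator would adjust.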

The classroom teacher, instructional coach, and researchers used the information gained through these surveys to center student voice and shift classroom practices in response to students’ surveys across the multiple constructs that were relevant to the course, curriculum, and classroom culture. For example, within the CSP work when survey results showed that some students were struggling with team dynamics during project work, the teacher checked in with each group the following class period.

Using feedback in instructional decision making

Learners who provide consistent feedback feel empowered and valued in the learning process (Bryk et al. 2015). These learners become active participants in their learning, making a deeper connection to their own cultural experiences and how their actions impact the practices and knowledge they build. When used regularly, practical measures allow learners to see themselves as contributors to the design of the learning environment, challenging the problematic framing of learning as the acquisition of knowledge and of instruction as informed only by teacher decisions.

A characteristic of practical measures is that they provide timely data that is actionable to shift instruction. For example, an educator may ask if students engaged in a particular scientific practice during a given week of instruction. If instruction was designed for that practice to be present but learners were unaware that they were engaging in such work, more explicit instruction may be needed to ensure they are understanding the purpose of any given learning activity. Analyzing and applying learner feedback quickly indicates to learners that educators care, thereby increasing learners’ engagement.
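The check described above can be sketched in a few lines: compare the practices a week was designed around with the share of students who reported engaging in them. All data, names, and the 50 percent cutoff below are hypothetical choices for illustration, not values from the CSP surveys.

```python
# Hypothetical data: practices the teacher planned for the week, and the
# share of students (0 to 1) who reported engaging in each practice.
PLANNED = {"argumentation", "modeling"}
REPORTED_SHARE = {"argumentation": 0.35, "modeling": 0.80, "data analysis": 0.10}


def practices_to_make_explicit(planned, reported_share, threshold=0.5):
    """Planned practices that fewer than `threshold` of students recognized.

    A practice missing from the survey results counts as 0.0 recognition.
    """
    return sorted(p for p in planned if reported_share.get(p, 0.0) < threshold)


print(practices_to_make_explicit(PLANNED, REPORTED_SHARE))
```

Practices returned by the function are candidates for more explicit framing in the next lesson; unplanned practices such as "data analysis" here are ignored because the question is only whether intended work was visible to learners.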

Practical measures also promote equity in learning activities. For example, educators can use survey information to gauge how heard or respected students feel during classroom discussions and make adjustments accordingly. Educators can use data they collect to examine trends by class or individual learners across time, analyzing which learners frequently feel heard or if there are specific activities that make learners feel more or less connected to the work. For example, which students are speaking up more in whole-class versus small-group discussions? Depending on what you learn from your practical measures, you may engage a range of structured talk protocols (e.g., http://stemteachingtools.org/pd/playlist-talk).
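Examining trends by class across time can be as simple as averaging one Likert item per class period for each survey round. The following is a minimal sketch with invented ratings for a hypothetical item ("I felt heard during discussion," rated 1 to 5); the record layout and function names are our assumptions.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical responses to one Likert item (1 = strongly disagree, 5 = strongly agree).
# Each record: (survey round, class period, rating). All values are made up.
RESPONSES = [
    (1, "P1", 2), (1, "P1", 3), (1, "P2", 4), (1, "P2", 4),
    (2, "P1", 3), (2, "P1", 4), (2, "P2", 4), (2, "P2", 5),
]


def mean_by_round(responses):
    """Return {class period: {survey round: mean rating}}."""
    buckets = defaultdict(lambda: defaultdict(list))
    for rnd, period, rating in responses:
        buckets[period][rnd].append(rating)
    return {
        period: {rnd: mean(ratings) for rnd, ratings in sorted(rounds.items())}
        for period, rounds in buckets.items()
    }


def change(trend):
    """Difference between the last and first round's mean for one class."""
    rounds = sorted(trend)
    return trend[rounds[-1]] - trend[rounds[0]]


trends = mean_by_round(RESPONSES)
for period, trend in trends.items():
    print(period, trend, "change:", change(trend))
```

A mean is only one possible summary; with small classes, looking at the full distribution of ratings may say more than an average.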

Guidance for designing practical measures surveys

In designing practical measures, educators should consider a few key components to ensure the data that are produced are actionable (see Furtak, Heredia, and Morrison 2019 for additional guidance):

- Collect data that can be aggregated and disaggregated by subgroup. Grouping data should provide actionable information and allow educators to look at both trends of all of their learners as well as how a particular class period or subgroup is experiencing learning. For example, an educator may want to know how their English language learners are engaging with learning activities compared to other learners.

- Surveys should be iterative in nature. Asking learners to respond to similar prompts over time allows educators to see how their instruction may be shifting the experiences of learners.

- Vary question types. Using both Likert-scale and open-ended questions offers learners different ways to share their experiences, while enabling educators to collect timely data and examine overall trends.

- Anonymity. Keeping learners’ answers anonymous builds and maintains learners’ trust, especially with the pervasive culture of judgment and evaluation that often surrounds surveys in the classroom. Educators should use their judgment about whether surveys are completely anonymous or not, based on the culture in their classroom or program, the goal of the survey, and how they want to be able to disaggregate the data.

- Efficient to deliver and analyze. Survey data from online platforms is easier to analyze and more likely to be considered when making instructional or curricular changes. Online surveys afford quick completion, efficient data analysis, and more anonymity than paper surveys, and they are accessible to learners and educators engaged in remote learning contexts.

- Not graded or evaluative in any way. Practical measures surveys should not be graded. Grading learners based on their responses to practical measures surveys can quickly erode trust in the classroom and undermines the collaborative improvement model that these surveys are meant to support for educators and learners.

- Sharing data with learners. Data should be simplified enough to share trends with learners. Attending to data quickly shows learners that their educators are incorporating their feedback and voice into the curriculum regularly.
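The first guideline above, aggregating and disaggregating by subgroup, can be illustrated with a short sketch that reports the percent of learners who agree with a Likert item overall and for each subgroup. The subgroup labels, ratings, and the choice of 4 or higher as "agree" are assumptions for illustration only.

```python
from collections import defaultdict

# Hypothetical anonymous responses: (subgroup tag, Likert rating 1-5).
# Subgroup labels and ratings are invented for illustration.
ROWS = [
    ("ELL", 2), ("ELL", 4), ("ELL", 3), ("ELL", 5),
    ("non-ELL", 4), ("non-ELL", 5), ("non-ELL", 4), ("non-ELL", 2),
    ("non-ELL", 5), ("non-ELL", 4),
]


def percent_agree(ratings, threshold=4):
    """Percent of ratings at or above `threshold`; expects a non-empty list."""
    return round(100 * sum(r >= threshold for r in ratings) / len(ratings), 1)


def summarize(rows):
    """Return the aggregate percent-agree plus one figure per subgroup."""
    groups = defaultdict(list)
    for subgroup, rating in rows:
        groups[subgroup].append(rating)
    summary = {"all": percent_agree([rating for _, rating in rows])}
    summary.update({g: percent_agree(ratings) for g, ratings in groups.items()})
    return summary


print(summarize(ROWS))
```

A single percentage per group is also simple enough to share back with learners. When a subgroup is very small, reporting its figure separately may compromise anonymity, so educators would need to weigh that against the value of disaggregation.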

Challenges and opportunities

There are a few things that may prevent educators from using practical measures surveys regularly. First, data that educators collect around learning is often used as a judgment, for both educators and learners, rather than an opportunity for growth. This has fostered a particular narrative around surveys and data that we need to overcome to create a safe and trusting environment where data is not used against learners or educators. Related to this, data is often looked at from a deficit lens, which further exacerbates perceived “gaps” and potential hesitancy to collect data regularly. Educators should use this data to reflect on how they can better support learners.

Next, sensemaking from survey data takes time. If data is being used in a trusting environment, then practical measures surveys can aid in fostering this classroom culture where learners feel safe to express their opinions, rely on the routine of providing candid feedback, and see it implemented regularly. If learners do not see educators close the feedback loop, however, this trust can erode quickly.

Conclusion

As a type of formative assessment, practical measures surveys attend to learners’ activity, engagement, and identity in science classrooms and programs. These surveys allow educators to empower learners by elevating student voice to provide insight and perspective on their learning for the benefit of more equitable instruction. Using practical measures in routine ways shifts power dynamics in classroom assessment practices to foster student-centered learning instead of teacher-controlled instruction. Engaging in practical measures surveys as part of formative assessment toolkits can benefit learning communities through building trust, illuminating issues of inequity that need to be addressed, and lifting student voice and experiences into the center of instructional design. Moreover, in a time of remote learning due to the COVID-19 pandemic, online practical measures surveys are a way for educators to check in with and be responsive to learners’ experiences from a distance. ■

Table 1. Example assessment constructs from the Computational Science Project.

| Type of Practical Measure | Assessment Category (Construct) |
| --- | --- |
| Activity centered | |
| Engagement centered | |
| Identity centered | |

Acknowledgments

This material is based upon work supported by the National Science Foundation under Grant No. DRL-1543255. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation. We want to recognize the contributions of our broader research collaborative in this specific work as well, especially Elaine Klein, Angelica Clark, and Veronica McGowan.

Further Reading Resources

Jackson, K., E. Henrick, P. Cobb, N. Kochmanski, and H. Nieman. 2016. Practical measures to improve the quality of small-group and whole-class discussion [White Paper]. See https://www.education.uw.edu/slmi/practical-measures/ for information around this paper.

Kochmanski, N., E. Henrick, and A. Cobb. 2015. On the Advancement of Content-Specific Practical Measures Assessing Aspects of Instruction Associated with Student Learning. National Center on Scaling Up Effective Schools Conference, Nashville, Tennessee, October 8, 2015.

Penuel, W.R., M. Novak, T. McGill, K. Van Horne, and B. Reiser. March, 2017. How to define meaningful daily learning objectives for science investigations (Practice Brief No. 46). STEM Teaching Tools. http://stemteachingtools.org/assets/landscapes/STEM-Teaching-Tool-46-Defining-Daily-Learning-Goals.pdf

Rigby, J.G., S.L. Woulfin, and V. März. 2016. Understanding how structure and agency influence education policy implementation and organizational change. American Journal of Education 122 (3): 295–302.

Ruiz-Primo, M.A., and E.M. Furtak. 2007. Exploring teachers’ informal formative assessment practices and students’ understanding in the context of scientific inquiry. Journal of Research in Science Teaching 44 (1): 57–84.

Krista Fincke (kfincke@gmail.com) is Dean of Curriculum and Instruction at Excel Academy Charter School in East Boston, Massachusetts. Deb Morrison (eddeb@uw.edu) is a learning scientist at the University of Washington’s Institute of Science and Math Education. Kristen Bergsman, PhD, is a learning scientist, educational researcher, and STEM curriculum designer at the University of Washington. Philip Bell is Professor and Chair of Learning Sciences & Human Development in the College of Education at the University of Washington.
