feature

Marginalizing Misinformation

The Fast-and-Frugal Way

Society is plagued with purveyors of scientific misinformation. Climate change naysayers. Anti-vaxxers. Flat-Earthers. COVID-denying conspiracists. And others. What can an ordinary science teacher do? We can provide students with some simple tools to help distinguish genuine science from the junk imitations posted on the internet and cascading through social media. Here, I describe a core lesson based on a recent expert report, Science Education in an Age of Misinformation (Osborne et al. 2022; see also Osborne and Pimentel 2022). It focuses on the NGSS science and engineering practice 8, and it helps articulate what is meant by “Evaluate the validity and reliability of multiple claims that appear in ... media reports.”

Focus on the source, not the claim

Students need methods to deal with the torrent of misleading information. Our first intuition may be to redouble our efforts in teaching scientific reasoning and critical thinking. Unfortunately, the agents of disinformation try to dodge scrutiny by deploying tactical deception: They present cherry-picked evidence and then invite the reader to draw (erroneous) conclusions on their own. They try to use a single shard of evidence to falsify a robust and well-founded theory. They present plausible arguments and persuasive graphs that only an expert can untangle. They leverage commonplace scientific skepticism into monumental doubt and disbelief. In multiple ways, they deliberately exploit the very image of science to bolster nonscientific claims. Their “playbook” is by now familiar to the guardians of science (e.g., Kenner 2015; Union of Concerned Scientists 2019).

To diagnose a scientific claim in the media, students must first appreciate the fundamentals of trust. Trust in the experts. The easy access to information on the internet may beguile us with an illusion of unbounded understanding. However, we must resist the temptation to rely on our own wits and pretend that we have just as much knowledge as the experts. Genuine knowledge comes only with experience and awareness of the many, many methodological pitfalls and sources of error. We cannot second-guess the experts. Indeed, that is why we turn to them, whether it be a doctor, an IT tech, a solar engineer, or an earthquake geologist. We rely on their specialized knowledge. Ultimately, expertise matters. And, perhaps, a bit of intellectual humility?

This need not leave us gullible to every science con artist. We must learn who to trust and how to trust in science. What is the evidence for someone’s expertise? What is the evidence for their honesty? What is the evidence for a consensus among the relevant scientific community? These probes differ greatly from evaluating the evidence for the scientific arguments themselves.

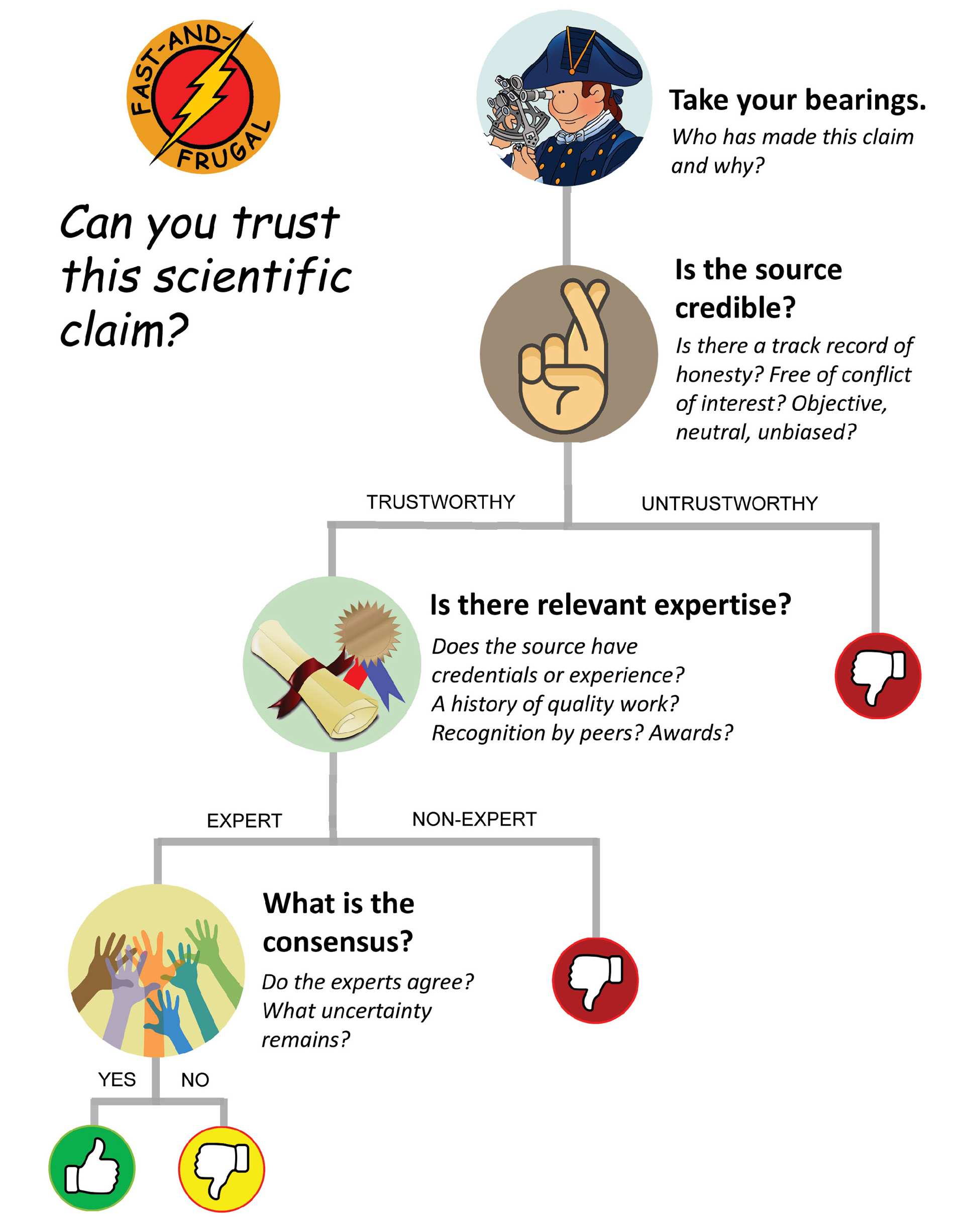

Decision-makers often look for quick and economical ways to manage complex issues. They develop fast-and-frugal (F+F) decision trees, based on a few key questions that are easily answered (Hafenbrädl et al. 2016). Students can apply that time-saving strategy to the challenge of detecting and disarming misinformation. Figure 1 offers a roadmap. It is based on what professional fact-checkers do (Neuvonen et al. 2018).

Take your bearings

First, when encountering unfamiliar claims, do what experienced navigators do in strange territory: orient yourself. Don’t delve into the text or argument. Don’t read the “About” page. Explore the media context. Open a new tab in your web browser. Research who is tweeting or sponsoring the website. Wikipedia may be helpful here. Be patient. Exercise click restraint. Before following the links, scan the search results quickly for helpful information that might shorten your overall effort. Learn about who is making the claim and why (Wineburg et al. 2021).

Is the source credible?

Next, establish whether the source itself is trustworthy. Do they have a track record of honesty? Is there a conflict of interest (a way the claimant might profit from persuading you)? Is there evidence of objectivity or neutrality? Or does the source exhibit a commercial, a political, or an ideological bias? (Be sure not to be taken in, even if you agree with their stance. Someone who shares your views or identity may be your “trusted” ally but not necessarily a reliable and trustworthy expert!) At this point, some teachers may wish to elaborate on credible sources, such as the NRC, AAAS, CDC, IPCC, WHO, EPA, and so on (see Allchin 2020). Do not confuse ordinary trust (loyalty or moral virtue) with epistemic trust, which is reserved for questions of knowledge. If you cannot establish the speaker’s credibility, exit. In other words, jettison the claim as unreliable, at best. There are plenty of other places to find good information.

Is there relevant expertise?

Third, if the source proves credible, ask yourself, “Do they exhibit relevant expertise?” That is, does the person have the depth of knowledge to vouch for this claim? Do they have a track record of reliable research? Do their expert peers respect them and esteem the quality of their work? (There might be awards or other recognitions, or leadership positions in professional organizations.) Do they have the appropriate educational background? Or perhaps they have substantial relevant experience that is not documented in academic credentials? Are they employed at a prestigious university or research institution? Make a special allowance for media gatekeepers: professional science journalists who do all this vetting work for you. There are many clues to ascertaining someone’s expertise.

Still, be on the lookout for distractors and confounders. A white lab coat and a deep, serious voice are not markers of scientific expertise. These superficial features can easily mislead us. Beware of bogus credentials. For example, a fancy title and organizational affiliation may be part of the disguise. A political leader may have a fine public reputation, but that does not make them a credible expert. A celebrity may inspire you as a role model, but is their view on a socioscientific issue based on anything more than mere personal opinion? They are not a scientist, either! Likewise, someone who shares your political affiliation or identity may be worth “trusting” and socializing with, but remember that such trust does not automatically extend to scientific trustworthiness. Just being a friend, or friendly, is not an indicator of expertise.

Also, check the relevance of the expertise. Is the field of expertise aligned with the claim in question? For example, a nuclear scientist is not a scholarly authority on secondhand smoke, although such an appeal has occurred historically. The very same scientist even claimed expertise on the ozone layer, acid rain, and global warming! Even a Nobel Prize–winning scientist is not an expert on everything, only on their respective area of specialization. You can afford to be choosy. Wait until you find the testimony of a fully validated expert.

What is the consensus?

Finally, if you have a credible and expert source, is there evidence that the majority of scientists concur? Experts may sometimes legitimately disagree, especially during unfinished science-in-the-making. But we should be duly impressed when the experts generally agree. (A lone dissenter may be very visible and vocal, but a single opinion is rarely worth heeding.) Sometimes, the view of the leading or most respected experts is sufficient, depending on the circumstances.

Consensus in science is important. Just publishing a paper in a journal is no guarantee that the findings have been weighed and accepted by others. Scientists review one another’s work, before publication and after. They may find unwarranted assumptions or flaws in the methods. They may apply different theoretical perspectives, leading to different interpretations. When scientists disagree, they typically engage in further research to resolve any ambiguities or uncertainties. Reciprocal criticism yields a potent system of checks and balances. The upshot is knowledge that is more secure than what any one individual might reach on their own (Oreskes 2019). This feature of the scientific method is often overlooked in school settings. But it is important to the trustworthiness of scientific claims in personal and public decision-making.

Teaching trust in the science classroom

That’s it: the fast-and-frugal way to marginalize misinformation. It does not require in-depth scientific knowledge. That’s fortunate, because no one can be an expert in all things! We all depend on experts to guide us. Nor does it require us even to master the subtleties of all the logical and statistical fallacies. That is why we have experts to begin with: to make those assessments on our behalf. Although individual scientists may not be perfect, a consensus effectively filters out errors and biases. Ultimately, as consumers of science, we need only ensure that whoever reports the consensus of experts is credible (Figure 1). It is all about knowing how to trust.
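For teachers who like to show students the logic in explicit form, the three questions described above can be sketched as a short program. This is only an illustrative sketch, not a reproduction of Figure 1: the names `SourceCheck` and `evaluate_claim`, the field names, and the wording of the outcomes are all assumptions made for this example. In particular, treating a missing consensus as "withhold judgment" rather than an outright exit is one possible reading of the text.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SourceCheck:
    """Answers gathered while taking your bearings.

    All field names are hypothetical. None means "could not be established".
    """
    source_is_credible: Optional[bool]
    has_relevant_expertise: Optional[bool]
    reports_expert_consensus: Optional[bool]


def evaluate_claim(check: SourceCheck) -> str:
    """Walk the questions in order and stop at the first one that fails."""
    # Question 1: Is the source credible? If that cannot be established, exit.
    if not check.source_is_credible:
        return "exit: credibility not established"
    # Question 2: Does the source have expertise relevant to this claim?
    if not check.has_relevant_expertise:
        return "exit: no relevant expertise"
    # Question 3: Is there evidence that the experts generally agree?
    # (Assumption: without it, we withhold judgment rather than reject.)
    if not check.reports_expert_consensus:
        return "withhold judgment: no evidence of consensus"
    return "trust: credible source reporting expert consensus"


if __name__ == "__main__":
    # A credible, expert source that reports the consensus view.
    print(evaluate_claim(SourceCheck(True, True, True)))
    # A source whose credibility could not be established.
    print(evaluate_claim(SourceCheck(None, True, True)))
```

Notice that the function never examines the scientific argument itself. Each question is about the source, and the first "no" (or "cannot tell") ends the evaluation, which is what makes the approach fast and frugal.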

The F+F decision tree can be presented in the science classroom as a roadmap for action. Cases are easily found in the local news media or online. (The time required will vary depending on the depth and complexity of the issue.) Possible scientific claims for students to evaluate include:

- Do cell phones or 5G communication towers cause cancer?

- Can ivermectin prevent COVID-19?

- Can earthquakes be precisely predicted?

- Are GMO foods safe to eat?

- Are recent extreme weather events (hurricanes, droughts, floods) related to climate change?

- Do waste incinerators generate more pollution than automobiles and trucks?

- Does managed turf store or generate greenhouse gases?

- Are annual flu vaccines safe and effective?

Alternatively, teachers who use inquiry-based (constructivist) instructional methods can problematize the issue of credibility for classroom discussion (see Allchin 2020 for a suite of “credibility games”). When teachers pose the challenges directly, students can work through and develop the various concepts about exercising scientific trust on their own. Classes might also develop their own list of “go-to” credible sources and trustworthy fact-checking websites (such as Snopes.com or FactCheck.org). The great benefit is that addressing contemporary socioscientific issues inevitably engages students, and they readily appreciate that the lessons from the science classroom enrich their everyday lives. The F+F misinformation decision tree is one such valuable tool.

Douglas Allchin (allchindouglas@gmail.com) is a former biology teacher and now resident fellow at the Minnesota Center for the Philosophy of Science, University of Minnesota, Minneapolis, MN. He is author of Teaching the Nature of Science: Perspectives and Resources.
